1955

Pilot-Tone Motion Estimation for Brain Imaging at Ultra-High Field Using FatNav Calibration

1Centre for the Developing Brain, School of Biomedical Engineering and Imaging Sciences, King's College London, London, United Kingdom, 2London Collaborative Ultra high field System (LoCUS), London, United Kingdom, 3Department of Radiology, University of California Davis, Sacramento, CA, United States, 4MR Research Collaborations, Siemens Healthcare Limited, Frimley, United Kingdom

Synopsis

3D FatNavs were used as a rapid, robust pre-scan to calibrate motion estimation from pilot-tone. The amount of training data required to make reliable forward predictions was investigated. The method robustly predicts motion within a dataset and can be applied to other datasets, although with lower accuracy. Improving the accuracy and speed of the method is ongoing work. This independent method of motion estimation shows promise for rapid calibration as part of routine examinations and paves the way for motion correction at ultra-high field, where it is particularly relevant.

Introduction

Motion artefacts in the head are well documented1 and have a significant impact on high-resolution image quality, which is particularly relevant at ultra-high field.2 Pilot-tone is a motion-estimation method that uses an externally generated RF signal at a single frequency, chosen so that it falls outside the field of view of the image but is still sampled in the k-space data, within the over-sampled region of the frequency-encoded direction.3 We have previously shown that pilot-tone signals can be used to estimate head motion via a linear model, using a head transmit-receive coil at 7T.4

Previous work took ~20 minutes to acquire enough data to construct the motion model, making it impractical for realistic application. 3D FatNavs (highly undersampled, low-resolution, rapid, fat-selective 3D images) have previously been shown to provide a robust means for registration-based motion correction.5 In this work we explore their use to calibrate the linear motion model for pilot-tone motion estimation within a pre-scan of potentially practical duration.

Methods

All measurements were performed on a MAGNETOM Terra (Siemens Healthcare, Erlangen, Germany) 7T scanner in research configuration, with an 8-Tx-channel head coil (Nova Medical, Wilmington MA, USA). Human subject scanning was approved by the Institutional Research Ethics Committee (HR-18/19-8700). All data presented were acquired from a single, healthy volunteer. Registrations were carried out with FLIRT (FMRIB, Oxford, UK). All data analysis was carried out using MATLAB R2021b (MathWorks, Natick, MA, USA).

A pilot-tone was generated with an RF signal generator (APSIN3000, AnaPico, Glattbrugg, Switzerland), with the offset frequency adjusted per scan so that the tone fell in the over-sampled region of the frequency-encoded data. The tone was broadcast into the magnet room by a monopole antenna fixed to the scanner room door; the power level was set to –20.0 dBm.

Two sets of 360 FatNav calibration scans were acquired at 1.4 s/volume, with the volunteer instructed to hold a different static position every 20 seconds, providing positional references for the pilot-tone data. FatNav protocol: TE/TR = 1.35/3 ms; FA: 7°; 86×58×128 matrix (RO×PE1×PE2); 256×256×384 mm³ field of view (FOV); GRAPPA 4×4 with separate ACS line prescan.
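As a rough consistency check on these timings, assume one readout per TR of 3 ms, with the 4×4 GRAPPA acceleration applied across the two phase-encode dimensions and the separate ACS prescan ignored. The acquisition time per volume and per calibration set then work out as:

$$T_{vol} \approx \frac{58 \times 128}{4 \times 4} \times 3\,\mathrm{ms} = 464 \times 3\,\mathrm{ms} \approx 1.4\,\mathrm{s}, \qquad T_{set} = 360 \times 1.4\,\mathrm{s} = 504\,\mathrm{s} \approx 8.4\,\mathrm{min}$$

At 20 seconds per position, this corresponds to roughly 14 volumes per held position.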

A hybrid k-space5 was generated by applying a Fourier transform along the readout direction of each line in the acquired, oversampled k-space. The peak detected in the oversampled region was selected as the pilot-tone signal. This signal was amplitude normalised across coils, and its phase was referenced to the complex mean across coils.
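The extraction step described above can be sketched in Python with NumPy (the analysis in this work was carried out in MATLAB). This is a minimal illustration on synthetic data, not the implementation used here. The function name `extract_pilot_tone`, the array layout, the `search_slice` argument, and the exact form of the normalisation (unit norm across coils, phase rotated so the coil mean is real and positive) are all assumptions.

```python
import numpy as np

def extract_pilot_tone(kspace, search_slice):
    """Extract a per-line, per-coil pilot-tone sample (illustrative sketch).

    kspace       : complex array, shape (n_lines, n_coils, n_readout),
                   with readout oversampling retained (assumed layout).
    search_slice : slice (with explicit start) of readout bins, after
                   fftshift, covering the oversampled region to search.
    """
    # Fourier transform along the readout axis only: hybrid k-space
    hybrid = np.fft.fftshift(np.fft.fft(kspace, axis=-1), axes=-1)

    # Pick the strongest bin in the search region, summed over lines and coils
    power = np.abs(hybrid[..., search_slice]).sum(axis=(0, 1))
    peak = search_slice.start + int(np.argmax(power))
    pt = hybrid[..., peak]  # shape (n_lines, n_coils)

    # Assumed normalisation: unit amplitude across coils for each line
    pt = pt / np.linalg.norm(pt, axis=1, keepdims=True)

    # Assumed phase reference: rotate so the complex coil mean has zero phase
    ref = pt.mean(axis=1, keepdims=True)
    pt = pt * np.exp(-1j * np.angle(ref))
    return pt, peak

# Synthetic demonstration: a single-frequency tone plus weak noise
rng = np.random.default_rng(0)
n_lines, n_coils, n_ro = 10, 4, 64
weights = rng.normal(size=n_coils) + 1j * rng.normal(size=n_coils)
tone = np.exp(2j * np.pi * 28 * np.arange(n_ro) / n_ro)  # FFT bin 28
noise = 0.01 * (rng.normal(size=(n_lines, n_coils, n_ro))
                + 1j * rng.normal(size=(n_lines, n_coils, n_ro)))
kspace = weights[None, :, None] * tone[None, None, :] + noise

pt, peak = extract_pilot_tone(kspace, slice(48, 64))
```

In this synthetic example the tone sits at FFT bin 28, which maps to index 60 after `fftshift` for a 64-point readout, so the search region is chosen to cover the outer bins.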

Because the subject moved between positions during acquisition, volumes in which motion corrupted the individual image acquisition were rejected. The two FatNav runs were separated by ~10 minutes.

Motion parameters $$$b$$$ were obtained using FLIRT rigid-body registration (least squares cost function)6,7 to register each image, after data rejection, to the first in the acquisition. These registration parameters were used to fit a linear model:

$$b = Ax$$

where $$$x$$$ is the complex pilot-tone data and $$$A$$$ is the model matrix. The model is trained by solving the inverse problem on a range of training data4:

$$A = x^{-1}_{train}b_{train}$$
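The training step can be illustrated with a short NumPy sketch on synthetic data. Several choices here are assumptions rather than details given in the text: the inverse is taken as a Moore-Penrose pseudo-inverse (the pilot-tone data matrix is generally not square), the data are arranged with one time point per row, and the complex pilot-tone samples are split into real and imaginary parts so that the real-valued motion parameters can be fitted. The helper names `to_features`, `train_model` and `predict` are hypothetical.

```python
import numpy as np

def to_features(pt):
    """Stack real and imaginary parts of complex pilot-tone data.

    pt : complex array, shape (n_timepoints, n_coils).
    Returns a real array, shape (n_timepoints, 2 * n_coils).
    (Assumed feature layout, chosen for illustration.)
    """
    return np.hstack([pt.real, pt.imag])

def train_model(pt_train, b_train):
    """Fit the model matrix A from training data via a pseudo-inverse."""
    return np.linalg.pinv(to_features(pt_train)) @ b_train

def predict(A, pt):
    """Forward prediction of motion parameters from pilot-tone data."""
    return to_features(pt) @ A

# Synthetic demonstration: noiseless data generated from a known model
rng = np.random.default_rng(1)
n_t, n_coils, n_params = 80, 8, 6  # 6 rigid-body parameters
pt = rng.normal(size=(n_t, n_coils)) + 1j * rng.normal(size=(n_t, n_coils))
A_true = rng.normal(size=(2 * n_coils, n_params))
b = to_features(pt) @ A_true

A = train_model(pt[:60], b[:60])  # train on the first 60 time points
b_pred = predict(A, pt[60:])      # predict the remaining 20
b_test = b[60:]
```

With more training time points than features and no noise, the fitted matrix reproduces the held-out parameters exactly; with real data the agreement depends on noise and on how well the linear model holds.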

We investigated the amount of training data required to make reliable forward predictions and the ability of a model trained on one dataset to predict another.

Results

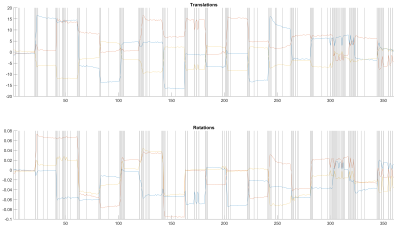

Figure 1 shows an example FatNav of the head at 7T. The skull can be clearly seen and used for registration by FLIRT. Figure 2 shows the rejected time points overlaid on the registration parameters.

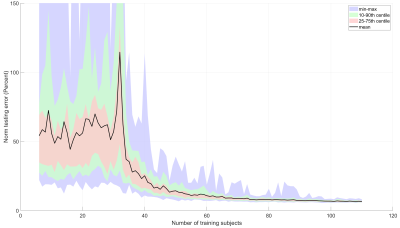

Figure 3 shows errors in model testing using random training sets tested on the same 50 test datapoints; for each number of training sets 100 different random instances were selected from the data. The model is poorly conditioned below 32 training datasets and mean normalised error drops to 10% after 60 datasets.
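The training-size experiment can be sketched as follows, using synthetic data in place of the measured pilot-tone and registration parameters. The function name `error_vs_training_size`, the pseudo-inverse fit, and the definition of normalised error (norm of the prediction residual divided by the norm of the ground truth) are assumptions made for illustration; the fixed set of 50 test points and the 100 random draws per training size follow the description above.

```python
import numpy as np

def error_vs_training_size(X, B, sizes, n_test=50, n_repeats=100, seed=0):
    """Mean normalised test error for each training-set size (sketch).

    X : real feature array, shape (n_timepoints, n_features).
    B : motion parameters, shape (n_timepoints, n_params).
    """
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(X))
    test_idx, pool = order[:n_test], order[n_test:]  # fixed test set
    X_test, B_test = X[test_idx], B[test_idx]

    mean_err = []
    for n_train in sizes:
        errs = []
        for _ in range(n_repeats):
            # Random training subset drawn from the non-test time points
            idx = rng.choice(pool, size=n_train, replace=False)
            A = np.linalg.pinv(X[idx]) @ B[idx]
            resid = X_test @ A - B_test
            errs.append(np.linalg.norm(resid) / np.linalg.norm(B_test))
        mean_err.append(float(np.mean(errs)))
    return mean_err

# Synthetic demonstration: 8 features, 6 motion parameters, weak noise
rng = np.random.default_rng(2)
X = rng.normal(size=(200, 8))
A_true = rng.normal(size=(8, 6))
B = X @ A_true + 0.01 * rng.normal(size=(200, 6))

sizes = [4, 8, 16, 64]
mean_err = error_vs_training_size(X, B, sizes)
```

In this toy setting the error is large while the number of training points is below the number of features and falls once the system becomes overdetermined, which is qualitatively the behaviour reported for the measured data.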

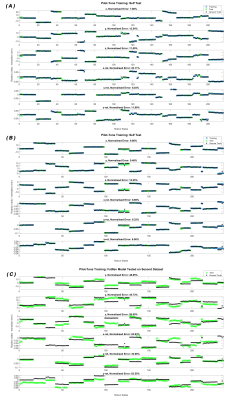

Figures 4a and 4b show the model self-tested when trained on data from the same FatNav acquisition (blue points are training data, green are testing data, all randomly selected); there is good agreement between the predicted test parameters and ground truth. Figure 4c shows the result of using the model trained on dataset 1 to predict motion parameters from dataset 2: here the agreement is good in places, but there are also positions where prediction fails.

Discussion & Conclusion

A pilot-tone model trained with FatNavs works robustly as a method for motion prediction when trained and tested on random subsets of the same data acquisition. 60 or more training points are required to build a good model. The same forward model can then be applied to other datasets, though prediction accuracy drops; the reasons for this are not yet clear.

Continuous movement of the subject while the training data are acquired can degrade the calibration of the model; additional data points must therefore be acquired, and volumes with poor registration due to subject movement rejected.

Pilot-tone provides a potential real-time readout of head position during brain imaging at 7T and can be obtained through the standard receiver chain without disturbing normal data acquisition. However, a calibration step is required, and in our original work this was slow. This update shows that the images needed for calibration can be obtained quickly, paving the way for deploying the method as a routine part of 7T examinations. Optimal ways to deploy the method have yet to be explored and are a promising direction for future work.

Acknowledgements

The authors would like to thank Francesco Padormo for the loan of the RF signal generator.

This work was supported by core funding from the Wellcome/EPSRC Centre for Medical Engineering [WT203148/Z/16/Z] and by the National Institute for Health Research (NIHR) Biomedical Research Centre based at Guy’s and St Thomas’ NHS Foundation Trust and King’s College London and/or the NIHR Clinical Research Facility. The views expressed are those of the author(s) and not necessarily those of the NHS, the NIHR or the Department of Health and Social Care.

References

1. Andre JB, Bresnahan BW, Mossa-Basha M, et al. Toward Quantifying the Prevalence, Severity, and Cost Associated With Patient Motion During Clinical MR Examinations. J Am Coll Radiol. 2015;12(7):689-695. doi:10.1016/j.jacr.2015.03.007

2. Zaitsev M, Maclaren J, Herbst M. Motion artifacts in MRI: A complex problem with many partial solutions. Journal of Magnetic Resonance Imaging. 2015;42(4):887-901. doi:10.1002/jmri.24850

3. Bacher M. Cardiac Triggering Based on Locally Generated Pilot-Tones in a Commercial MRI Scanner: A Feasibility Study. October 2017.

4. Wilkinson T, Godinez F, Brackenier Y, et al. Motion Estimation for Brain Imaging at Ultra-High Field Using Pilot-Tone: Comparison with DISORDER Motion Compensation. In: Proc Int Soc Mag Reson Med 29.

5. Gallichan D, Marques JP, Gruetter R. Retrospective correction of involuntary microscopic head movement using highly accelerated fat image navigators (3D FatNavs) at 7T. Magnetic Resonance in Medicine. 2016;75(3):1030-1039. doi:10.1002/mrm.25670

6. Jenkinson M, Smith S. A global optimisation method for robust affine registration of brain images. Medical Image Analysis. 2001;5(2):143-156. doi:10.1016/S1361-8415(01)00036-6

7. Jenkinson M, Bannister P, Brady M, Smith S. Improved Optimization for the Robust and Accurate Linear Registration and Motion Correction of Brain Images. NeuroImage. 2002;17(2):825-841. doi:10.1006/nimg.2002.1132

Figures