1882

Inter-scanner harmonization of T1-weighted brain images using 3D CycleGAN

Vincent Roca¹, Grégory Kuchcinski¹,², Morgan Gautherot¹, Xavier Leclerc¹,², Jean-Pierre Pruvo¹,², and Renaud Lopes¹,²

¹ 59000, Univ Lille, UMS 2014 – US 41 – PLBS – Plateformes Lilloises en Biologie & Santé, Lille, France; ² 59000, Univ Lille, Inserm, Lille Neuroscience & Cognition, Lille, France

Synopsis

In multicentric MRI studies, inter-scanner harmonization is necessary so that variations due to technical differences between scanners do not bias the analysis. In this study, we focused on CycleGAN models for the harmonization of 3D T1-weighted brain images. Rather than following the classical 2D CycleGAN architecture, we developed a 3D CycleGAN model. We compared the harmonization quality of the two kinds of models using 20 imaging features quantifying T1 signal, contrast between brain structures, and segmentation quality. The results illustrate the potential of 3D CycleGAN to synthesize better images in inter-scanner MRI harmonization tasks.

Introduction

Inter-scanner harmonization has become an important challenge in multicentric MRI studies. It aims to limit inter-scanner effects, that is, variability that is not biological but arises from differences in image acquisition between scanners. In the last few years, this challenge has been tackled with CycleGAN models, which use deep learning and adversarial training for image-to-image translation between domains. However, almost all of these models have been applied in 2D [1-4], potentially leading to discontinuities between slices and to the loss of volumetric contextual information. In this study, our goal was to develop a 3D CycleGAN approach for the harmonization of T1-weighted (T1) images and to compare it with a common 2D CycleGAN approach.

Methods

We selected 1146 3D T1 brain images from 124 healthy subjects of the ADNI 2 database. Two datasets of 305 and 841 3D T1 images were acquired on 3T GE Signa HDxt and 3T Siemens TrioTim scanners, respectively. Preprocessing included skull-stripping, affine registration to the MNI template, and min-max intensity normalisation.

Using these two datasets, inter-scanner harmonization was performed with 2D CycleGAN and 3D CycleGAN. Both were implemented using a U-Net generator and a Markovian discriminator [5], with patch sizes of 38² (38×38) for the 2D model and 38³ (38×38×38) for the 3D model. These choices are quite common in MRI image-to-image translation [6,7].
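As an illustration of the min-max intensity normalisation listed among the preprocessing steps, the following is a minimal sketch rather than the pipeline actually used. It assumes the volume has been flattened to a sequence of voxel intensities and that the target range is [0, 1]; the abstract does not state whether the minimum and maximum were taken over the whole volume or over brain voxels only. The function name `min_max_normalise` and the example values are hypothetical.

```python
from typing import List, Sequence


def min_max_normalise(voxels: Sequence[float]) -> List[float]:
    """Linearly rescale intensities so the minimum maps to 0 and the maximum to 1."""
    lo, hi = min(voxels), max(voxels)
    if hi == lo:
        # Constant image: there is no contrast to rescale, so map everything to 0.
        return [0.0 for _ in voxels]
    scale = hi - lo
    return [(v - lo) / scale for v in voxels]


# Hypothetical intensities standing in for a flattened, skull-stripped volume.
example = [0.0, 250.0, 500.0, 1000.0]
normalised = min_max_normalise(example)
print(normalised)
```

In practice the same rescaling would be applied to a 3D array with an array library; the flat list is used here only to keep the sketch dependency-free.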

To assess the harmonization quality, we used 20 imaging features (image quality metrics, IQMs) inspired by the MRIQC tool [8] to quantify T1 signal, contrast between brain structures, and segmentation quality. Statistical analyses were performed to assess the inter-scanner effects between the two datasets before and after harmonization. The Siemens scanner was used as the reference, and GE images were harmonized toward it.
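The inter-scanner comparison of each IQM relies on Welch's t-tests, as reported in the Results. The sketch below shows how the Welch statistic and its Welch-Satterthwaite degrees of freedom could be computed for one feature; it is an illustration under assumptions, not the authors' analysis code. The function name `welch_t` and the sample values are hypothetical.

```python
import math
from statistics import mean, variance
from typing import Sequence, Tuple


def welch_t(a: Sequence[float], b: Sequence[float]) -> Tuple[float, float]:
    """Return Welch's t statistic and the Welch-Satterthwaite degrees of freedom."""
    # Squared standard error of each group mean (sample variance / group size).
    se2_a = variance(a) / len(a)
    se2_b = variance(b) / len(b)
    t = (mean(a) - mean(b)) / math.sqrt(se2_a + se2_b)
    df = (se2_a + se2_b) ** 2 / (
        se2_a ** 2 / (len(a) - 1) + se2_b ** 2 / (len(b) - 1)
    )
    return t, df


# Hypothetical values of a single IQM on each scanner (not data from the study).
ge_values = [1.0, 2.0, 3.0, 4.0, 5.0]
siemens_values = [2.0, 4.0, 6.0, 8.0, 10.0]
t_stat, dof = welch_t(ge_values, siemens_values)
print(t_stat, dof)
```

The p-value is then obtained from a Student t distribution with `dof` degrees of freedom; if SciPy is available, `scipy.stats.ttest_ind(a, b, equal_var=False)` performs the whole test in one call. Repeating the test for each of the 20 IQMs before and after harmonization gives counts of significantly different features of the kind reported in the Results.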

Results

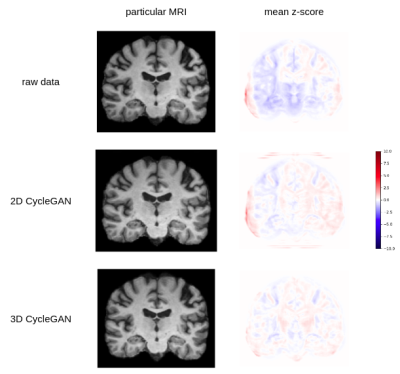

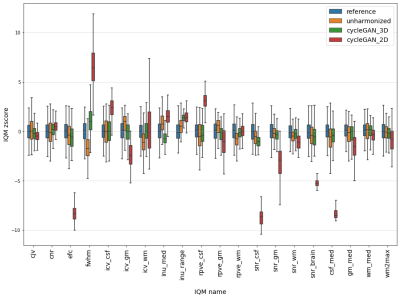

Figure 1 illustrates the harmonizations obtained with 2D CycleGAN and 3D CycleGAN. Voxel-level z-scores show that the main heterogeneities were reduced by harmonization. In addition, 2D CycleGAN produced small intensity artifacts outside the brain, whereas 3D CycleGAN did not. In Figure 2, most imaging features have different distributions after 2D CycleGAN harmonization, whereas with 3D CycleGAN some distributions are more similar to those of the reference scanner. Welch's t-tests confirm these observations: 18 IQMs were significantly different (p-value < 0.05) after 2D CycleGAN harmonization, compared with 10 before harmonization. In contrast, only 5 IQMs remained significantly different after 3D CycleGAN harmonization.

Discussion

Visual inspection of the harmonized images suggests that intensity harmonization between two 3D T1 datasets is improved with 3D CycleGAN. Quantitative analyses with imaging features are consistent with this observation, showing that inter-scanner effects were effectively captured and removed with the 3D approach, while the 2D version failed in this task. 2D CycleGAN is mainly constrained by the fact that the same harmonization is applied to all slices, whereas 3D CycleGAN operates at the level of the entire volume and enables more complex processing.

While the results presented suggest that 3D CycleGAN is more relevant for the harmonization of 3D T1 brain images, further work is needed to evaluate the method in different contexts, such as cross-site generalization of predictive models. It would also be interesting to test 3D approaches in multicentric studies with StarGAN models [9].

Conclusion

This work demonstrates the potential of 3D CycleGAN for the harmonization of T1-weighted brain MRI in multicentric studies. We show that relevant imaging features are more homogeneous with our approach than with common 2D approaches.

Acknowledgements

No acknowledgement found.

References

1. Nguyen H, Morris RW, Harris AW, Korgoankar MS, Ramos F. Correcting differences in multi-site neuroimaging data using Generative Adversarial Networks. arXiv:1803.09375 [cs] [Internet]. 12 Apr 2018 [cited 6 Jul 2021]; Available from: http://arxiv.org/abs/1803.09375

2. Modanwal G, Vellal A, Buda M, Mazurowski M. MRI image harmonization using cycle-consistent generative adversarial network. In 2020. p. 36.

3. Gao Y, Liu Y, Wang Y, Shi Z, Yu J. A Universal Intensity Standardization Method Based on a Many-to-One Weak-Paired Cycle Generative Adversarial Network for Magnetic Resonance Images. IEEE Trans Med Imaging. Sep 2019;38(9):2059-69.

4. Bashyam VM, Doshi J, Erus G, Srinivasan D, Abdulkadir A, Singh A, et al. Deep Generative Medical Image Harmonization for Improving Cross-Site Generalization in Deep Learning Predictors. J Magn Reson Imaging [Internet]. [cited 28 Sep 2021];n/a(n/a). Available from: https://onlinelibrary.wiley.com/doi/abs/10.1002/jmri.27908

5. Isola P, Zhu J-Y, Zhou T, Efros AA. Image-to-Image Translation with Conditional Adversarial Networks. arXiv:1611.07004 [cs] [Internet]. 26 Nov 2018 [cited 27 Jan 2021]; Available from: http://arxiv.org/abs/1611.07004

6. Cackowski S, Barbier EL, Dojat M, Christen T. comBat versus cycleGAN for multi-center MR images harmonization. 17 Feb 2021 [cited 17 Apr 2021]; Available from: https://openreview.net/forum?id=cbJD-wMlJK0

7. Zhong J, Wang Y, Li J, Xue X, Liu S, Wang M, et al. Inter-site harmonization based on dual generative adversarial networks for diffusion tensor imaging: application to neonatal white matter development. Biomed Eng OnLine. 15 Jan 2020;19(1):4.

8. Esteban O, Birman D, Schaer M, Koyejo OO, Poldrack RA, Gorgolewski KJ. MRIQC: Advancing the automatic prediction of image quality in MRI from unseen sites. PLOS ONE. 25 Sep 2017;12(9):e0184661.

9. Choi Y, Uh Y, Yoo J, Ha J-W. StarGAN v2: Diverse Image Synthesis for Multiple Domains. arXiv:1912.01865 [cs] [Internet]. 26 Apr 2020 [cited 11 Nov 2021]; Available from: http://arxiv.org/abs/1912.01865

DOI: https://doi.org/10.58530/2022/1882