1881

Paired CycleGAN-based Cross Vendor Harmonization

Joonhyeok Yoon1, Sooyeon Ji1, Eun-Jung Choi1, Hwihun Jeong1, and Jongho Lee1

1Seoul National University, Seoul, Korea, Republic of

Synopsis

CycleGAN performs well on harmonization tasks. However, generative models carry a risk of structural modification. We propose a cross-vendor harmonization model with a paired CycleGAN-based architecture that aims for both high performance and structural consistency. We acquired in-vivo datasets from 4 subjects on Siemens and Philips scanners. For the algorithm, we adapted CycleGAN as the generation model and utilized the L1 loss from pix2pix and a patchGAN discriminator for structural consistency. Evaluations were performed both quantitatively and qualitatively. For quantitative evaluation, we assessed the mean structural similarity index measure (SSIM). The proposed model shows better results than the CycleGAN architecture.

INTRODUCTION

While deep learning-based methods have demonstrated great potential in MRI1-2, the variation across MR images acquired using different scanners or protocols hampers the generalization performance of those methods.3-4 Therefore, harmonization across MR images is of great importance. Recently, deep learning harmonization models using cycle-consistent adversarial network (CycleGAN)5 based algorithms6-7 and U-Net8 based end-to-end algorithms9-10 have been proposed and have shown strong performance. CycleGAN-based models, trained using unpaired datasets, show good performance in terms of intensity and contrast similarity, but they suffer from structural modification.11 On the other hand, end-to-end network models trained with paired datasets provide high structural similarity, but only when paired datasets are available. In this study, we design a cross-vendor harmonization model that takes advantage of both methods by combining the two concepts. CycleGAN was adapted as the generation model to benefit from its high performance, and an L1 loss inspired by pix2pix12 and a patchGAN13 discriminator were implemented for structural consistency. Both quantitative and qualitative results demonstrate the higher performance of the proposed method compared to CycleGAN.

METHOD

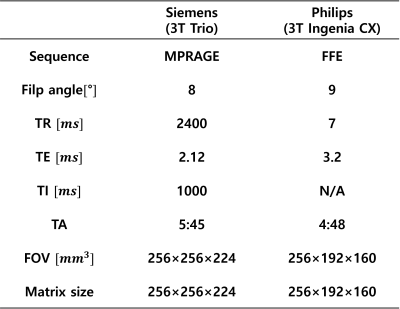

[Dataset and preprocess] A total of 4 healthy subjects were scanned twice within 5 days on two 3T scanners (Trio, Siemens; IngeniaCX, Philips). 3D T1-weighted images were acquired with the parameters in Figure 1. The region outside the brain was masked out and the images were rigidly registered. 2D axial slices were extracted for the 2D image network and all pixels were scaled linearly to the range 0-255. Data from 3 subjects were assigned to the training set, and data from the remaining subject were used as the test set. Training images were cropped into 64 × 64 patches with a stride of 32. Patches with low or zero information were filtered out, leaving a total of 9,763 patches out of 32,256 patches. For evaluation, 131 out of 256 slices were used.
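The scaling, patch extraction, and filtering steps can be sketched as follows. This is a minimal illustration, not the authors' code: the 224 × 256 slice size, the per-slice scaling, the non-zero-fraction filter, and its 0.5 threshold are all assumptions, since the text does not state them. The assumed slice size yields 42 patches per slice, which would be consistent with 32,256 patches from 3 subjects with 256 slices each.

```python
import numpy as np

def scale_to_0_255(img):
    """Linearly rescale an image to the range 0-255 (per-slice scaling is an assumption)."""
    img = img.astype(np.float64)
    lo, hi = img.min(), img.max()
    if hi == lo:  # constant image: nothing to scale
        return np.zeros_like(img)
    return (img - lo) / (hi - lo) * 255.0

def extract_patches(img, size=64, stride=32):
    """Return all size x size patches taken on a regular grid with the given stride."""
    h, w = img.shape
    patches = [img[r:r + size, c:c + size]
               for r in range(0, h - size + 1, stride)
               for c in range(0, w - size + 1, stride)]
    return np.stack(patches)

def keep_informative(patches, min_foreground=0.5):
    """Drop patches whose fraction of non-zero pixels is below a threshold.
    Both the criterion and the 0.5 value are hypothetical."""
    frac = (patches > 0).reshape(len(patches), -1).mean(axis=1)
    return patches[frac >= min_foreground]

# Synthetic masked slice: zero background with a non-zero "brain" block.
rng = np.random.default_rng(0)
slice_2d = np.zeros((224, 256))
slice_2d[40:200, 50:210] = rng.uniform(1.0, 1000.0, size=(160, 160))

scaled = scale_to_0_255(slice_2d)
patches = extract_patches(scaled)   # 6 rows x 7 columns of patches
kept = keep_informative(patches)
print(patches.shape, kept.shape)
```

With a stride of half the patch size, neighbouring patches overlap by 50%, so most brain voxels appear in several training patches.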

[Architecture]

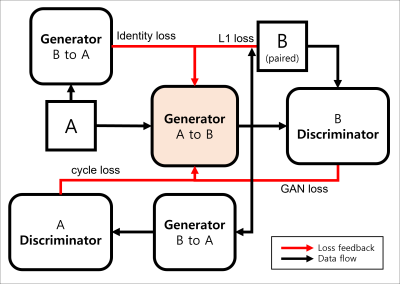

Firstly, for the generator, we adapted the CycleGAN architecture5, which consists of two stride-2 convolutions and nine residual blocks, followed by two fractionally strided convolutions (stride 1/2). Four losses are used for the generator: the three CycleGAN losses (adversarial loss ($$$ L_{GAN} $$$), cycle consistency loss ($$$ L_{cyc} $$$), and identity loss ($$$ L_{idt} $$$)) and the $$$L_{1}$$$ loss:

$$L_{GAN}(G,D_{B},A,B)=E_{B\sim P_{data}(B)}[logD_{B}(B)]+E_{A\sim P_{data}(A)}[log(1-D_{B}(G(A)))]$$

$$L_{cyc}(G,F)=E_{A\sim P_{data}(A)}[||F(G(A))-A||_{1}]+E_{B\sim P_{data}(B)}[||G(F(B))-B||_{1}]$$

$$L_{idt}(G,F)=E_{B\sim P_{data}(B)}[||G(B)-B||_{1}]+E_{A\sim P_{data}(A)}[||F(A)-A||_{1}]$$

$$L_1(G,F)=||G(A)-B||_{1}+||F(B)-A||_{1}$$

Here, $$$G$$$ is the generator that maps from $$$A$$$ to $$$B$$$, while the generator $$$F$$$ maps from $$$B$$$ to $$$A$$$. $$$D_{B}$$$ is a discriminator that aims to distinguish generated images $$$G(A)$$$ from real images $$$B$$$. Motivated by pix2pix12, we take advantage of the $$$L_{1}$$$ loss for structural consistency. By imposing a pixel-by-pixel loss, we expect the model to suppress structural modifications that can be critical to medical analysis. With the weighting factors $$$\lambda_{cyc}$$$ and $$$\lambda_{1}$$$, the final loss function is defined as:

$$L(G,F,D_{A},D_{B})=L_{GAN}+\lambda_{cyc}L_{cyc}+L_{idt}+\lambda_{1}L_{1}$$
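As a rough illustration of how the four terms combine, the sketch below evaluates the generator-side objective with NumPy on toy data. It is a sketch under assumptions, not the training code: the function names, the toy generators and discriminator, the use of mean absolute error in place of the unnormalized L1 norm, and the summing of the adversarial term over both directions are all illustrative choices. The identity term follows the original CycleGAN formulation, with $$$G$$$ applied to $$$B$$$ and $$$F$$$ applied to $$$A$$$.

```python
import numpy as np

def l1(x, y):
    """Mean absolute difference (an L1 norm divided by the pixel count)."""
    return float(np.mean(np.abs(x - y)))

def generator_objective(G, F, D_A, D_B, a, b, lam_cyc=10.0, lam_1=10.0, eps=1e-8):
    """Weighted sum of adversarial, cycle, identity, and paired L1 terms."""
    fake_b, fake_a = G(a), F(b)
    # Generator side of the adversarial loss, log(1 - D(fake)), for both directions.
    l_gan = float(np.mean(np.log(1.0 - D_B(fake_b) + eps))
                  + np.mean(np.log(1.0 - D_A(fake_a) + eps)))
    l_cyc = l1(F(fake_b), a) + l1(G(fake_a), b)    # A -> B -> A and B -> A -> B
    l_idt = l1(G(b), b) + l1(F(a), a)              # generators fed their own target domain
    l_pair = l1(fake_b, b) + l1(fake_a, a)         # paired pixel-by-pixel term
    total = l_gan + lam_cyc * l_cyc + l_idt + lam_1 * l_pair
    return total, {"gan": l_gan, "cyc": l_cyc, "idt": l_idt, "l1": l_pair}

# Toy paired data: domain B is domain A shifted by a constant offset.
rng = np.random.default_rng(0)
a = rng.uniform(0.0, 1.0, (64, 64))
b = a + 0.1
G = lambda x: x + 0.1                    # hypothetical "perfect" A -> B mapping
F = lambda x: x - 0.1                    # hypothetical "perfect" B -> A mapping
D = lambda x: np.full((6, 6), 0.5)       # undecided patch discriminator (map size arbitrary)

total, terms = generator_objective(G, F, D, D, a, b)
print(total, terms)
```

In this toy case the cycle and paired terms vanish because the mappings are exact inverses and match the paired targets, while the identity term stays non-zero because each generator still shifts an image that is already in its target domain.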

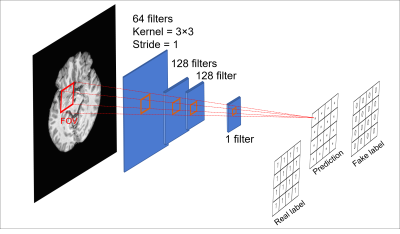

Secondly, for the discriminator, we utilize patchGAN13 to preserve structural characteristics. We designed a discriminator with 3 convolutional layers and a field of view (FOV) of 12×12 (Figure 3).
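The FOV of a stack of convolutions follows from the kernel sizes and strides alone, which the short calculation below illustrates. The kernel and stride values of the three-layer configuration are hypothetical: the text gives only the layer count and the 12×12 FOV, and other combinations reach the same value. The five-layer configuration is the commonly used 70×70 patchGAN, included as a sanity check.

```python
def receptive_field(layers):
    """Receptive field (FOV) of stacked convolutions.

    layers: list of (kernel_size, stride) tuples, ordered from input to output.
    """
    rf, jump = 1, 1
    for kernel, stride in layers:
        rf += (kernel - 1) * jump   # each layer widens the field by (k - 1) input-pixel steps
        jump *= stride              # spacing between adjacent outputs, in input pixels
    return rf

standard_patchgan = [(4, 2), (4, 2), (4, 2), (4, 1), (4, 1)]
hypothetical_small = [(4, 2), (3, 1), (3, 1)]   # one possible 3-layer, 12x12 design

print(receptive_field(standard_patchgan))   # 70
print(receptive_field(hypothetical_small))  # 12
```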

We trained for 200 epochs with both weighting factors, $$$\lambda_{cyc}$$$ and $$$\lambda_{1}$$$, set to 10. We evaluated the SSIM of each model, and qualitative assessment was performed using the generated images and their intensity profiles.
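For reference, the SSIM formula can be written compactly when a single window covers the whole slice. The sketch below is this simplified global variant, not the evaluation code used here: standard SSIM implementations average the same expression over local sliding windows, so their values will differ. The constants k1 = 0.01 and k2 = 0.03 are the conventional defaults, and the data range of 255 matches the 0-255 scaling of the images.

```python
import numpy as np

def global_ssim(x, y, data_range=255.0, k1=0.01, k2=0.03):
    """SSIM computed from whole-image statistics (single-window simplification)."""
    x = x.astype(np.float64)
    y = y.astype(np.float64)
    c1 = (k1 * data_range) ** 2
    c2 = (k2 * data_range) ** 2
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov = ((x - mu_x) * (y - mu_y)).mean()
    return float(((2 * mu_x * mu_y + c1) * (2 * cov + c2))
                 / ((mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)))

def mean_ssim(outputs, labels):
    """Average the per-slice SSIM over a set of test slices."""
    return float(np.mean([global_ssim(o, l) for o, l in zip(outputs, labels)]))

# Synthetic example: a label slice and a noisy "model output".
rng = np.random.default_rng(0)
label = rng.uniform(0.0, 255.0, (64, 64))
output = np.clip(label + rng.normal(0.0, 20.0, label.shape), 0.0, 255.0)

same = global_ssim(label, label)
noisy = global_ssim(output, label)
print(same, noisy, mean_ssim([label, output], [label, label]))
```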

RESULTS

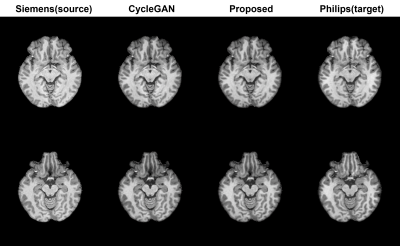

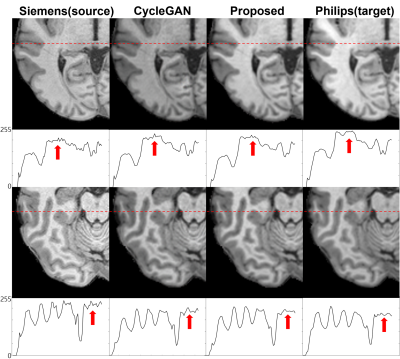

The proposed paired CycleGAN-based model was evaluated quantitatively and qualitatively. After training for 200 epochs, the SSIM values between the model output and the label were 0.965 and 0.960 for the proposed model and the CycleGAN model, respectively. Figure 4 shows images transferred from the source domain (Siemens) to the target domain (Philips) by both the proposed and CycleGAN models. The intensity plot along a single line in the image (Figure 5) illustrates that the harmonization results of the proposed method are similar to the target domain (see the red arrows). With the proposed model, coarse line profiles in the source image are smoothed, which resembles the target image features, while CycleGAN does not generate these target features.

DISCUSSION & CONCLUSION

In this work, a paired-CycleGAN model for cross-vendor harmonization is proposed. The algorithm takes advantage of both CycleGAN’s generating performance regarding intensity and the structural reproducibility of pix2pix and patchGAN. The high SSIM of 0.965 and the qualitative results of the intensity patterns indicate the high performance of the proposed method. When visually compared, the method showed better performance than the CycleGAN model, with less structural modification. These results indicate that using a pixel-by-pixel loss (e.g., L1 loss) and a structure-specific assessment approach for the discriminator (e.g., patchGAN) is valid for improving harmonization models.

Acknowledgements

This work was supported by the Creative-Pioneering Researchers Program through Seoul National University (SNU).

References

- Jin Liu, Yi Pan, Min Li, Ziyue Chen et al. Application of deep learning to MRI images: A survey, Big Data Mining and Analytics, vol. 1, (2018): 17807123

- Lee, J.-G., Jun, S., Cho, Y.-W., Lee, H., Kim, G.B., Seo, J.B., Kim, N.: Deep learning in medical imaging: general overview. Korean J. Radiol. 18(4), (2017): 570–584

- Qi Dou, Cheng Ouyang, Cheng Chen, et al. PnP-AdaNet: Plug-and-Play adversarial domain adaptation network at unpaired cross-modality cardiac segmentation. IEEE Access, vol. 7, (2019): pp. 99065–99076

- Badža, M.M.; Barjaktarović, M.Č. Classification of Brain Tumors from MRI Images Using a Convolutional Neural Network. Appl. Sci. (2020): 10, 1999

- Jun-Yan Zhu, Taesung Park, Phillip Isola, Alexei A. Efros, Unpaired Image-To-Image Translation Using Cycle-Consistent Adversarial Networks. Proceedings of the IEEE International Conference on Computer Vision, (2017): pp. 2223-2232.

- Gourav Modanwal, Adithya Vellal, Mateusz Buda, Maciej A. Mazurowski, MRI image harmonization using cycle-consistent generative adversarial network, Proceedings Volume 11314, Medical Imaging 2020: Computer-Aided Diagnosis, (2020): 1131413

- Jian Chen, Yue Sun, Zhenghan Fang et al. Harmonized neonatal brain MR image segmentation model for cross-site datasets, Biomedical Signal Processing and Control, vol. 69, (2021)

- Olaf Ronneberger, Philipp Fischer, Thomas Brox, U-Net: Convolutional networks for biomedical image segmentation, Medical Image Computing and Computer-Assisted Intervention 2015, vol. 9351, (2015)

- Blake E. Dewey, Can Zhao, Jacob C. Reinhold et al. DeepHarmony: A deep learning approach to contrast harmonization across scanner changes, Magnetic Resonance Imaging, vol. 64, (2019): pp.160-170

- Lipeng Ning, Elisenda Bonet-Carne, Francesco Grussu et al. Cross-scanner and cross-protocol multi-shell diffusion MRI data harmonization: Algorithms and results, NeuroImage, vol. 221, (2020): 117128

- Jawook Gu, Tae Seong Yang, Jong Chul Ye, Dong Hyun Yang, CycleGAN denoising of extreme low-dose cardiac CT using wavelet-assisted noise disentanglement, Medical Image Analysis 74 (2021): 102209

- P. Isola, J.-Y. Zhu, T. Zhou, and A. A. Efros. Image-to-image translation with conditional adversarial networks. Conference on Computer Vision and Pattern Recognition, (2017): pp. 136-144

- Ugur Demir, Gozde Unal, Patch-Based Image Inpainting with Generative Adversarial Networks, arXiv preprint arXiv:1803.07422, 2018

- Gourav Modanwal, Adithya Vellal, Mateusz Buda et al. MRI image harmonization using cycle-consistent generative adversarial network, Medical Imaging 2020: Computer-Aided Diagnosis, (2020): 1131413

Figures

Figure 1. Table of scan parameters.

Figure 2. Diagram of the mapping function generating B domain images from A domain input. GAN loss, cycle loss, identity loss, and $$$L_{1}$$$ loss represent $$$L_{GAN}$$$, $$$L_{cyc}$$$, $$$L_{idt}$$$, and $$$L_{1}$$$, respectively.

Figure 3. Architecture of the discriminator. Each pixel of the prediction map represents the probability computed from a 12×12 region of the real image input. We designed a small FOV for better harmonization performance based on a related study.14

Figure 4. Images generated by the CycleGAN model and the proposed model. The target domain shows a smoother texture across the images. Both models performed well in generating Philips-style images without any artifacts.

Figure 5. Zoomed-in images from both models. Philips images show a smooth texture, whereas Siemens images have a crisp, salt-and-pepper-like texture. This difference is better represented by the proposed model. The plot below the images visualizes the intensity along the marked red line. In the plots, the coarse line profile from the source image is smoothed in the generated results, similar to the feature of the target image, while the results of CycleGAN still display coarse lines.

DOI: https://doi.org/10.58530/2022/1881