1880

QC of image registration using a DL network trained using only synthetic images

¹Subtle Medical, Santa Clara, CA, United States

Synopsis

To perform robust quality control (QC) of medical image registration, we propose a method that can check co-registration for multiple organs without being restricted to particular modalities. A rule-based image synthesis pipeline is used to generate training images with random contrasts and shapes. A ResNet34 is trained to predict image alignment. Two MRI datasets, one of the spine and one of the brain, are used as external validation sets. When validated on either real MRI dataset, the proposed model trained on synthetic images outperforms the same model trained on the other real MRI dataset, which indicates better generalizability.

INTRODUCTION

Medical image registration is the process of aligning several medical images based on anatomical structures¹. It is a widely used preprocessing step in many clinical imaging applications, such as image reconstruction², and it strongly affects the performance of algorithms that rely on image alignment, such as multimodal segmentation. Quality control (QC) of medical image registration is therefore essential for controlling the failure rates of many downstream clinical applications. In real-world use cases, for example with MR images, registration QC may be needed across different contrasts (e.g. T1, T2, or FLAIR) or across different subjects in a multi-site study (e.g. brain or spine), which calls for a generalizable model that is contrast- and subject-agnostic. A further difficulty is that images requiring registration come from two acquisitions of the same patient at different time points, so there is neither a ground-truth label for image movement nor a set of perfectly aligned image pairs, which makes it challenging to train supervised models on real datasets. We propose an image synthesis pipeline that generates perfectly aligned or misaligned image pairs of arbitrary contrast and shape, for building a deep learning registration QC model with better generalizability.

METHODS

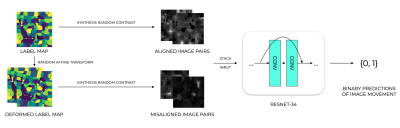

Synthesis pipeline

A medical imaging synthesis pipeline was designed based on SynthMorph³. A label map of random geometric shapes is generated from noise images and random stationary velocity field (SVF) transforms, and a label is assigned at each image location by selecting the noise image with the maximum intensity. To generate non-aligned image pairs, a random affine transformation is applied to obtain a deformed label map. A gray-scale image is then synthesized from a label map by drawing the intensity of each label from a normal distribution. The image is further convolved with a Gaussian kernel and corrupted by an intensity-bias field. The final image is obtained by min-max scaling and contrast augmentation. We call this synthetic dataset the “synthmed” dataset.
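The steps of the synthesis pipeline can be illustrated with a minimal NumPy/SciPy sketch. It is not the implementation used in this work: the SVF warp of the noise images is omitted for brevity, and all function names and parameter values (image size, number of labels, smoothing widths, affine ranges, gamma range) are assumptions chosen for illustration.

```python
import numpy as np
from scipy import ndimage


def random_label_map(rng, shape, n_labels, smooth_sigma=6.0):
    """Smooth one noise image per label and take the argmax at each pixel.
    (The SVF warp of the noise images described in the text is omitted here.)"""
    noise = rng.standard_normal((n_labels, *shape))
    smooth = np.stack([ndimage.gaussian_filter(n, smooth_sigma) for n in noise])
    return smooth.argmax(axis=0)


def random_affine(rng, shape, max_rot_deg=10.0, max_shift=5.0, max_scale=0.1):
    """Random rotation, scaling and shift about the image centre.
    Returned as (matrix, offset) in the output-to-input convention of SciPy."""
    theta = np.deg2rad(rng.uniform(-max_rot_deg, max_rot_deg))
    rot = np.array([[np.cos(theta), -np.sin(theta)],
                    [np.sin(theta), np.cos(theta)]])
    matrix = rot @ np.diag(1.0 + rng.uniform(-max_scale, max_scale, size=2))
    centre = (np.array(shape) - 1) / 2.0
    offset = centre - matrix @ centre + rng.uniform(-max_shift, max_shift, size=2)
    return matrix, offset


def labels_to_image(rng, labels, n_labels, blur_sigma=1.0, bias_sigma=16.0):
    """Gray-scale image from a label map: normal intensities, blur, bias field,
    min-max scaling, and a random gamma as contrast augmentation."""
    means = rng.normal(0.5, 0.25, size=n_labels)      # one intensity per label
    image = ndimage.gaussian_filter(means[labels], blur_sigma)
    bias = ndimage.gaussian_filter(rng.standard_normal(labels.shape), bias_sigma)
    image = image * np.exp(0.3 * bias / (np.abs(bias).max() + 1e-8))
    image = (image - image.min()) / (image.max() - image.min() + 1e-8)
    return image ** rng.uniform(0.7, 1.5)


def make_pair(rng, aligned, shape=(64, 64), n_labels=6):
    """Return a (2, H, W) image pair and a label (1 = misaligned, 0 = aligned)."""
    labels = random_label_map(rng, shape, n_labels)
    moved = labels
    if not aligned:
        matrix, offset = random_affine(rng, shape)
        # order=0 keeps the deformed map a valid integer label map
        moved = ndimage.affine_transform(labels, matrix, offset=offset,
                                         order=0, mode="nearest")
    # Each channel draws its own intensities, so the two contrasts differ
    pair = np.stack([labels_to_image(rng, labels, n_labels),
                     labels_to_image(rng, moved, n_labels)])
    return pair.astype(np.float32), int(not aligned)


rng = np.random.default_rng(0)
pair, label = make_pair(rng, aligned=False)
```

Because both channels are rendered from label maps rather than from acquired images, an aligned pair is aligned by construction, and the label for each pair is known exactly.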

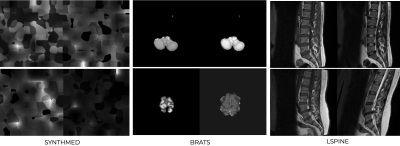

Datasets

To validate the generalizability and performance of the model on real MRI data, we also included two other datasets in our study: an open lumbar spine dataset⁴ (464 image pairs) for spine MRI and the BRATS⁵ dataset (1251 image pairs) for brain MRI. The spine dataset, “lspine”, contains 464 T1 and T2 image pairs, with 452 pairs labeled as “aligned” and 12 pairs labeled as “not aligned”. The misaligned pairs were excluded, and the aligned pairs were used to generate synthetic misalignments. The BRATS dataset contains 1251 T1 and T2 image pairs that were pre-registered with SimpleElastix and then deformed with affine transformations.

Model training and evaluation

A deep learning network based on ResNet34, without pre-training, was used to train binary classification models that predict the alignment of input image pairs. The models were trained with a binary cross-entropy loss, the Adam optimizer, and a learning rate scheduler that decreased the learning rate over epochs. The model input was an image pair with two channels, each channel containing an image of a different contrast, and the models were trained to predict a binary label indicating whether movement exists in the input image pair.

The proposed model was trained on “synthmed” for the equivalent of 6000 image pairs and evaluated on its own test set, generated with the same settings as the training set, as well as on the “lspine” and BRATS datasets, to test its generalizability to real MRI images. For comparison, two further models were trained on the “lspine” and BRATS datasets respectively, each with its own optimal learning rate and scheduler.

In this work, 2D networks were used, but real use cases often concern the alignment of 3D images. The models are therefore evaluated both slice-wise, with F1 score, precision, and recall, and image-wise, with confusion matrices.
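The two evaluation levels can be sketched in plain Python. The rule used here to turn slice predictions into an image-wise prediction (flag an image as misaligned when at least a given fraction of its slices is flagged) is an assumption for illustration; the aggregation rule is not specified in this abstract, and the function names are hypothetical.

```python
def confusion_matrix(y_true, y_pred):
    """Return [[TN, FP], [FN, TP]] for binary labels (1 = misaligned)."""
    tn = sum(t == 0 and p == 0 for t, p in zip(y_true, y_pred))
    fp = sum(t == 0 and p == 1 for t, p in zip(y_true, y_pred))
    fn = sum(t == 1 and p == 0 for t, p in zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
    return [[tn, fp], [fn, tp]]


def slice_metrics(y_true, y_pred):
    """Slice-wise precision, recall and F1 score."""
    (_, fp), (fn, tp) = confusion_matrix(y_true, y_pred)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


def image_prediction(slice_preds, min_fraction=0.5):
    """Assumed rule: an image is misaligned if enough of its slices are flagged."""
    return int(sum(slice_preds) / len(slice_preds) >= min_fraction)


# Slice-wise evaluation over all slices of all images
slice_true = [1, 1, 0, 0, 1, 0]
slice_pred = [1, 0, 0, 1, 1, 0]
metrics = slice_metrics(slice_true, slice_pred)

# Image-wise evaluation: one prediction per image, then a confusion matrix
per_image_slices = {"img_a": [1, 1, 0], "img_b": [0, 0, 1]}
image_true = [1, 0]
image_pred = [image_prediction(s) for s in per_image_slices.values()]
image_cm = confusion_matrix(image_true, image_pred)
```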

RESULTS

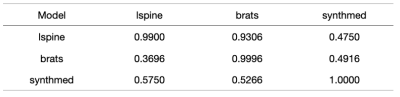

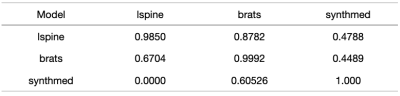

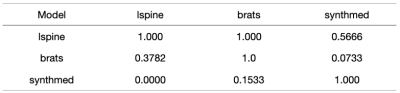

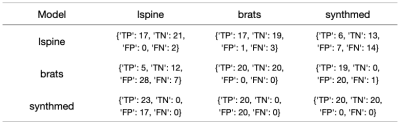

The trained models are validated on the “synthmed” dataset as well as on the two real image datasets. The evaluation results are shown in Figures 1-4, with each column corresponding to a validation set and each row to the dataset on which the model was trained. F1 score, precision, and recall are used to present the slice-wise results, while confusion matrices are used to show the image-wise results. Figure 4 shows examples of the model correctly labeling aligned or misaligned image pairs in the 3 datasets.

DISCUSSION

The two models trained on specific types of real MRI images performed well on the validation set of their own training dataset, but they did not generalize well to each other's dataset, especially the model trained on the BRATS dataset. They also failed to generalize to the “synthmed” dataset, which contains no anatomical structure but purely random shapes. This indicates that models trained on real MRI datasets partially rely on anatomical structure when judging misalignment, a dependence that inhibits further generalization to other organs or subjects in medical imaging.

CONCLUSION

In summary, we designed a synthetic image pipeline that enables better generalizability for the image registration QC task.

Acknowledgements

No acknowledgement found.

References

1. Fu Y, Lei Y, Wang T, Curran WJ, Liu T, Yang X. Deep learning in medical image registration: a review. Physics in Medicine & Biology. 2020 Oct 7;65(20):20TR01.

2. McClelland JR, Modat M, Arridge S, Grimes H, D’Souza D, Thomas D, O’Connell D, Low DA, Kaza E, Collins DJ, Leach MO. A generalized framework unifying image registration and respiratory motion models and incorporating image reconstruction, for partial image data or full images. Physics in Medicine & Biology. 2017 May 5;62(11):4273.

3. Hoffmann M, Billot B, Greve DN, Iglesias JE, Fischl B, Dalca AV. SynthMorph: learning contrast-invariant registration without acquired images. arXiv preprint arXiv:2004.10282. 2020 Apr 21.

4. Sudirman S, Al Kafri A, Natalia F, Meidia H, Afriliana N, Al-Rashdan W, Bashtawi M, Al-Jumaily M. Lumbar Spine MRI Dataset. Mendeley Data, V2. 2019. doi: 10.17632/k57fr854j2.2

5. Menze BH, Jakab A, Bauer S, Kalpathy-Cramer J, Farahani K, Kirby J, Burren Y, Porz N, Slotboom J, Wiest R, Lanczi L. The multimodal brain tumor image segmentation benchmark (BRATS). IEEE transactions on medical imaging. 2014 Dec 4;34(10):1993-2024.

Figures