1878

MR image enhancement using a multi-task neural network trained using only synthetic data

¹Subtle Medical, Menlo Park, CA, United States

Synopsis

Faster MRI scans can be achieved by acquiring low-resolution or low-SNR images and enhancing them to standard-of-care quality with deep learning. However, reaching clinical diagnostic quality typically requires training the model on a large number of paired clinical datasets. Here we show that a multi-task deep convolutional neural network (DCNN) trained using only simulated motion-corrupted, low-SNR, and low-resolution images can improve the quality of clinically acquired images from motion-corrupted and accelerated sequences.

Introduction

MRI is a slow modality, often requiring several minutes to acquire a single contrast, and these long acquisitions are further compromised by cardiac, respiratory, and patient motion. One prominent way to accelerate MRI is to accept degraded image quality (by reducing either the number of excitations or the image resolution) and then recover it with denoising or super-resolution approaches [1, 2]. However, these deep convolutional neural network (DCNN) based algorithms are typically trained on paired datasets, which can be logistically complicated and expensive to acquire. Moreover, each fast acquisition typically degrades a single imaging parameter (e.g., acquisition matrix or number of excitations), so a separate model is needed for each type of degradation. In this study, we hypothesized that by simulating multiple types of image degradation simultaneously, we could train a single multi-task DCNN to enhance images from accelerated protocols.

Methods

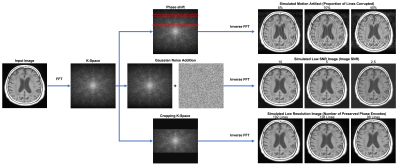

Simulations: To create a library of paired degraded and high-quality images, we built a K-space based simulation pipeline (Figure 1). First, MR images were converted to K-space using the Fast Fourier Transform (FFT). We then applied a series of K-space transformations to simulate different image quality degradations. To simulate motion artifacts, we introduced phase shift errors into K-space, varying the fraction of corrupted K-space lines (0% to 50%) and the type of motion (rotational and translational). To simulate low-SNR acquisitions, we added Gaussian noise to the K-space data, varying the noise level to give SNRs from 2.5 to 15. Finally, to simulate low-resolution images, we cropped K-space to between 96 and 192 phase and frequency encodes and zero-padded it. After the K-space simulations, images were converted back to the image domain with an inverse FFT.
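The K-space pipeline described above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' code: all helper names are hypothetical, motion is limited to in-plane translation applied as a linear phase ramp on randomly chosen phase-encode lines (rotation is not modeled), SNR is assumed to mean mean image intensity divided by image-domain noise standard deviation, the 4-pixel maximum shift is arbitrary, and the image matrix is assumed to be at least 192 x 192.

```python
import numpy as np

def to_kspace(img):
    """Centered 2D FFT: image domain -> K-space."""
    return np.fft.fftshift(np.fft.fft2(np.fft.ifftshift(img)))

def to_image(k):
    """Centered 2D inverse FFT: K-space -> magnitude image."""
    return np.abs(np.fft.fftshift(np.fft.ifft2(np.fft.ifftshift(k))))

def add_translation_motion(k, frac_lines, max_shift_px, rng):
    """Corrupt a random fraction of phase-encode lines (rows) with the linear
    phase ramp an in-plane translation produces (Fourier shift theorem).
    Hypothetical helper; rotational motion is not modeled here."""
    ny, nx = k.shape
    ky = np.fft.fftshift(np.fft.fftfreq(ny))  # cycles/pixel, centered order
    kx = np.fft.fftshift(np.fft.fftfreq(nx))
    rows = rng.choice(ny, size=int(round(frac_lines * ny)), replace=False)
    out = k.copy()
    for r in rows:
        dy, dx = rng.uniform(-max_shift_px, max_shift_px, size=2)
        out[r, :] *= np.exp(-2j * np.pi * (ky[r] * dy + kx * dx))
    return out

def add_kspace_noise(k, target_snr, rng):
    """Add complex white Gaussian noise so that mean image intensity divided
    by image-domain noise std is about target_snr (assumed SNR definition)."""
    sigma_img = to_image(k).mean() / target_snr
    # numpy's ifft2 divides by N, so K-space noise must be sqrt(N) larger.
    sigma_k = sigma_img * np.sqrt(k.size)
    noise = rng.normal(0.0, sigma_k, k.shape) + 1j * rng.normal(0.0, sigma_k, k.shape)
    return k + noise

def crop_and_zero_pad(k, n_y, n_x):
    """Keep the central n_y x n_x K-space samples and zero the rest, which
    lowers resolution while keeping the original matrix size."""
    ny, nx = k.shape
    y0, x0 = (ny - n_y) // 2, (nx - n_x) // 2
    out = np.zeros_like(k)
    out[y0:y0 + n_y, x0:x0 + n_x] = k[y0:y0 + n_y, x0:x0 + n_x]
    return out

def degrade(img, rng):
    """Apply motion, noise, then low resolution (the order of the text).
    Assumes a matrix of at least 192 x 192."""
    k = to_kspace(img)
    k = add_translation_motion(k, rng.uniform(0.0, 0.5), 4.0, rng)
    k = add_kspace_noise(k, rng.uniform(2.5, 15.0), rng)
    n_y, n_x = rng.integers(96, 193, size=2)  # upper bound is exclusive
    k = crop_and_zero_pad(k, int(n_y), int(n_x))
    return to_image(k)

# Usage: build one synthetic training pair from a simple disk phantom.
rng = np.random.default_rng(0)
yy, xx = np.mgrid[:256, :256]
phantom = ((yy - 128) ** 2 + (xx - 128) ** 2 < 90 ** 2).astype(float)
degraded = degrade(phantom, rng)  # input; `phantom` is the target
```

Each call to `degrade` draws its parameters from the ranges given in the text (0% to 50% corrupted lines, SNR 2.5 to 15, 96 to 192 encodes), so repeated calls over a set of high-quality images yield a library of paired training examples. Because the translation error is a pure phase ramp, it leaves K-space magnitude unchanged and only misplaces signal, which is what produces ghosting.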

Multi-Task DCNN Training:

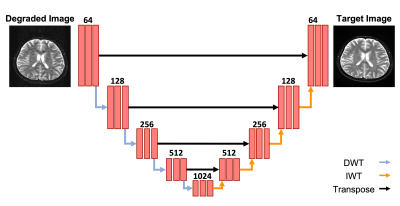

To recover standard-of-care quality from the degraded images, we developed a multi-task DCNN that simultaneously reduces motion artifacts, denoises, and performs super-resolution. We implemented a wavelet-based DCNN architecture with a U-Net backbone, which we refer to as Wt-Unet (Figure 2) [3]. Wt-Unet replaces downsampling operations with Discrete Wavelet Transform (DWT) operations and upsampling operations with Inverse Wavelet Transform (IWT) operations; because the wavelet transform is invertible, the network preserves both spatial and frequency information through these steps. We trained the Wt-Unet generator on pairs of simulated degraded images and their ground-truth counterparts using a loss weighted 50% SSIM and 50% L1. To encourage realistic enhanced images, we trained it as an image-conditional GAN with a DenseNet-based adversarial discriminator. Training was performed end-to-end on 906 T1, 442 T2, and 62 FLAIR images.
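To make the DWT/IWT substitution and the 50/50 loss concrete, here is a NumPy sketch under stated assumptions. The wavelet family is not stated here, so a single-level orthonormal Haar wavelet is assumed; the SSIM term is written as 1 - SSIM and computed over one global window, a simplification of the standard locally windowed SSIM; and the adversarial GAN term is omitted. All function names are hypothetical, and in training these operations would live in a deep learning framework so gradients can flow.

```python
import numpy as np

def haar_dwt2(x):
    """One-level orthonormal 2D Haar DWT of an (H, W) array with even H, W.
    Returns four (H/2, W/2) sub-bands; stacking them as channels halves the
    spatial size like pooling, but without discarding information."""
    a, b = x[0::2, 0::2], x[0::2, 1::2]
    c, d = x[1::2, 0::2], x[1::2, 1::2]
    ll = (a + b + c + d) / 2   # low-pass: local average
    lh = (-a - b + c + d) / 2  # difference between row pairs
    hl = (-a + b - c + d) / 2  # difference between column pairs
    hh = (a - b - c + d) / 2   # diagonal difference
    return ll, lh, hl, hh

def haar_iwt2(ll, lh, hl, hh):
    """Inverse of haar_dwt2: rebuilds the (2h, 2w) array exactly."""
    h, w = ll.shape
    x = np.empty((2 * h, 2 * w))
    x[0::2, 0::2] = (ll - lh - hl + hh) / 2
    x[0::2, 1::2] = (ll - lh + hl - hh) / 2
    x[1::2, 0::2] = (ll + lh - hl - hh) / 2
    x[1::2, 1::2] = (ll + lh + hl + hh) / 2
    return x

def global_ssim(x, y, data_range=1.0):
    """Single-window SSIM over the whole image (simplified for illustration)."""
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mx, my = x.mean(), y.mean()
    cov = ((x - mx) * (y - my)).mean()
    num = (2 * mx * my + c1) * (2 * cov + c2)
    den = (mx ** 2 + my ** 2 + c1) * (x.var() + y.var() + c2)
    return num / den

def mixed_loss(pred, target, w_ssim=0.5, data_range=1.0):
    """w_ssim * (1 - SSIM) + (1 - w_ssim) * L1; w_ssim=0.5 gives a 50/50 mix."""
    l1 = np.abs(pred - target).mean()
    return w_ssim * (1.0 - global_ssim(pred, target, data_range)) + (1.0 - w_ssim) * l1
```

Because `haar_iwt2(*haar_dwt2(x))` returns `x` exactly and the sub-band energies sum to the image energy, replacing pooling with the DWT discards nothing; the high-frequency sub-bands carry the detail that strided pooling would otherwise throw away.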

Accelerated Image Evaluation:

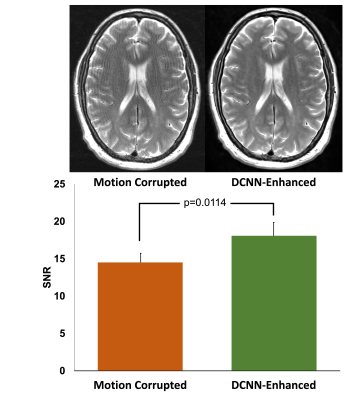

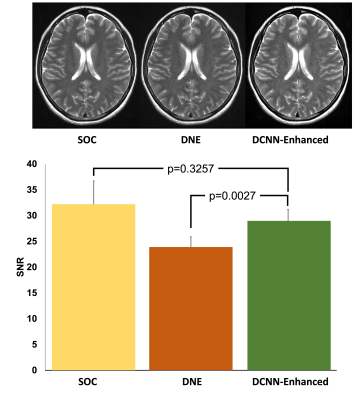

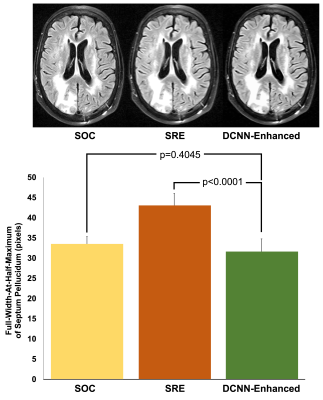

To evaluate motion artifact reduction, we identified motion-corrupted images (8 T1, 3 T2, and 1 FLAIR) and compared the white matter SNR of the motion-corrupted images with that of their DCNN-enhanced counterparts. To evaluate denoising, we retrospectively identified standard-of-care (SOC) images and matching fast images acquired with a reduced number of excitations (termed DNE) (6 T1 and 4 T2). We evaluated denoising performance by comparing the white matter SNR of the DCNN-enhanced DNE images with that of both the SOC images and the original DNE images. To evaluate super-resolution, we retrospectively identified SOC images and matching fast images acquired with a reduced acquisition matrix (termed SRE) (1 T1, 9 T2, and 12 FLAIR). We evaluated super-resolution performance by comparing the full width at half maximum (FWHM) of the septum pellucidum (SP) on DCNN-enhanced SRE axial images with the corresponding measurements on both the SOC images and the original SRE images. All statistical comparisons used paired t-tests. All work in this study was HIPAA compliant and performed under IRB approval.
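The three measurements above can be sketched as follows. The abstract does not define its SNR or FWHM procedures, so these are assumptions: white matter SNR is taken as mean over standard deviation within a white matter ROI mask; FWHM is computed on a 1D intensity profile drawn across the SP, using the profile minimum as baseline and linear interpolation at the half-maximum crossings (a structure darker than its surroundings would need to be inverted first); and the paired t statistic is computed directly. The function names are hypothetical. For p-values, `scipy.stats.ttest_rel` performs the same paired test.

```python
import numpy as np

def wm_snr(image, wm_mask):
    """Assumed SNR definition: mean / std of pixels inside a WM ROI mask."""
    vals = image[wm_mask]
    return vals.mean() / vals.std()

def fwhm(profile, pixel_mm=1.0):
    """FWHM of the dominant peak in a 1D profile, in units of pixel_mm.
    Baseline = profile minimum; crossings are linearly interpolated."""
    p = np.asarray(profile, dtype=float)
    p = p - p.min()
    peak = int(np.argmax(p))
    half = p[peak] / 2.0
    lo = peak
    while lo > 0 and p[lo - 1] >= half:  # walk left while above half max
        lo -= 1
    hi = peak
    while hi < len(p) - 1 and p[hi + 1] >= half:  # walk right likewise
        hi += 1
    left = lo - (p[lo] - half) / (p[lo] - p[lo - 1]) if lo > 0 else float(lo)
    right = hi + (p[hi] - half) / (p[hi] - p[hi + 1]) if hi < len(p) - 1 else float(hi)
    return (right - left) * pixel_mm

def paired_t(before, after):
    """Paired t statistic for after - before (n - 1 degrees of freedom)."""
    d = np.asarray(after, dtype=float) - np.asarray(before, dtype=float)
    return d.mean() / (d.std(ddof=1) / np.sqrt(d.size))
```

For a sampled Gaussian profile with standard deviation 5 pixels, `fwhm` returns about 11.78, close to the analytic value of 2√(2 ln 2)·5 ≈ 11.77; a thinner measured SP on enhanced images therefore corresponds to a narrower point spread, which is why FWHM serves as a resolution surrogate.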

Results

To evaluate motion artifact reduction, we compared the SNR of motion-corrupted images with that of their DCNN-enhanced counterparts. DCNN-enhanced images had higher SNR than the non-enhanced images (p=0.0114) (Figure 3). To evaluate denoising, we compared the SNR of DCNN-enhanced images with that of both the SOC and DNE images. SNR did not differ significantly between DCNN-enhanced and SOC images (p=0.3257) (Figure 4), whereas DCNN-enhanced images had higher SNR than their DNE counterparts (p=0.0027). To evaluate super-resolution, we compared the thickness (FWHM) of the SP on axial images. SP thickness did not differ significantly between DCNN-enhanced and SOC images (p=0.4045) (Figure 5), whereas DCNN-enhanced images had a thinner SP than their SRE counterparts (p<0.0001), indicating improved resolution.

Conclusions

Using only simulated data for training, we were able to enhance degraded images that were clinically acquired with accelerated protocols. Moreover, this strategy allowed a single model to simultaneously perform super-resolution, denoising, and motion artifact reduction. This simulation-based approach may reduce the number of paired clinical datasets required for training.

Acknowledgements

None

References

1. Kidoh M, Shinoda K, et al. Deep Learning Based Noise Reduction for Brain MR Imaging: Tests on Phantoms and Healthy Volunteers. Magn Reson Med Sci. 2020 Aug 3;19(3):195-206. Epub 2019 Sep 4.

2. Zhao C, Carass A, Dewey BE, et al. A Deep Learning Based Anti-aliasing Self Super-Resolution Algorithm for MRI. IEEE Trans Med Imaging. 2021 Mar;40(3):805-817. Epub 2021 Mar 2.

3. Liu P, Zhang H, Lian W, Zuo W. Multi-level wavelet convolutional neural networks. IEEE Access. 2019 Jun 6;7:74973-85.

Figures