1848

Deep learning-based Automatic Analysis for Free-breathing High-resolution Spiral Real-time Cardiac Cine Imaging at 3T

1Biomedical Engineering, University of Virginia, Charlottesville, VA, United States, 2Medicine, University of Virginia, Charlottesville, VA, United States, 3Radiology, University of Virginia, Charlottesville, VA, United States, 4Medicine, Stanford University, Stanford, CA, United States

Synopsis

Cardiac real-time cine imaging is useful for patients who cannot hold their breath or who have irregular heart rhythms. Free-breathing high-resolution real-time cardiac cine images can be acquired efficiently using spiral acquisitions and rapidly reconstructed using our deep learning (DL)-based DESIRE framework. However, quantifying the ejection fraction (EF) from free-breathing real-time imaging is limited by two problems: there is no ECG signal to define the cardiac cycle, and free breathing causes through-plane cardiac motion. We developed a DL-based segmentation technique that identifies the end-expiratory phase and determines end-systole and end-diastole on a slice-by-slice basis to accurately quantify EF from spiral real-time cardiac cine imaging.

Introduction

Cardiac magnetic resonance (CMR) free-breathing real-time cine imaging is useful for patients with limited breath-hold capacity and/or arrhythmias¹. We have developed a rapid free-breathing spiral cine acquisition with a rapid deep learning (DL)-based image reconstruction (DESIRE)². One challenge is accurately quantifying the left ventricular ejection fraction (LVEF) from free-breathing data acquired without ECG gating. We sought to develop a convolutional neural network that segments the left ventricle in real-time cine cardiac images to compute the LVEF. To quantify LVEF accurately, the technique identifies end-expiration from the center of mass of the segmentation mask, and identifies end-systole and end-diastole from the temporal changes in the area of the segmentation mask. In this way, spiral cine images can be rapidly acquired, reconstructed, and automatically quantified.

Methods

Data Acquisition and Deep Learning Image Reconstruction

A variable-density (VD) gradient-echo spiral pulse sequence, with the trajectory rotated by the golden angle between successive TRs, an in-plane spatial resolution of 1.25 mm, a slice thickness of 8 mm, and a temporal resolution of 39 ms, was used for free-breathing data acquisition on 3T Siemens scanners (Prisma or Skyra; Siemens Healthineers, Erlangen, Germany)³. Data were acquired for each slice over a free-breathing acquisition of 360 frames (16 seconds). Images were reconstructed using the DESIRE framework, based on a 3D U-Net⁴, which we have described previously.
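The golden-angle view ordering described above can be sketched as follows. This is a minimal illustration, not the acquisition code. It assumes the common full-circle 2D golden angle, 360° × (3 - √5)/2 ≈ 137.5°, because the abstract does not state which golden-angle value was used. The helper name `spiral_rotation_angles` is hypothetical.

```python
import math

# Assumption: full-circle 2-D golden angle, 360 * (3 - sqrt(5)) / 2 ~= 137.5 deg.
# The abstract does not state which golden-angle value was used.
GOLDEN_ANGLE_DEG = 360.0 * (3.0 - math.sqrt(5.0)) / 2.0


def spiral_rotation_angles(n_tr):
    """Rotation (degrees, wrapped to [0, 360)) of the spiral arm played in each TR."""
    return [(i * GOLDEN_ANGLE_DEG) % 360.0 for i in range(n_tr)]


# Usage: rotation angles for the first eight TRs.
for tr, angle in enumerate(spiral_rotation_angles(8)):
    print(f"TR {tr}: {angle:7.2f} deg")
```

Because successive arms never repeat exactly, any contiguous run of TRs covers k-space nearly uniformly. This property is the usual motivation for golden-angle ordering in real-time imaging.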

Deep learning-based Image Segmentation

The left ventricular endocardial border in each slice was segmented using a 2D U-Net architecture. The network was trained using manual contours as the reference to define ground-truth binary masks. Eight cases, each containing 9-15 slices of 40 frames, were used for training. Tenfold data augmentation was performed, giving a total of 36,800 training images. The following random transformations were applied to each image in the training data: rotation in [-360, 360]°, zoom-in of [0, 30]%, and shear in [-3, 3]°.
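A minimal NumPy sketch of the augmentation step follows. It assumes that each (image, mask) pair receives the same randomly sampled rotation, zoom, and shear, applied about the image center. The function names are hypothetical. For brevity, nearest-neighbour sampling is used for both image and mask. The abstract does not specify the interpolation or library used, and a real pipeline would typically interpolate the image bilinearly while keeping nearest-neighbour sampling for the mask.

```python
import numpy as np


def random_affine_params(rng):
    """Sample (rotation, zoom, shear) in the ranges stated above."""
    angle = np.deg2rad(rng.uniform(-360.0, 360.0))   # rotation in [-360, 360] degrees
    zoom = 1.0 + rng.uniform(0.0, 0.30)               # zoom in by 0-30 %
    shear = np.deg2rad(rng.uniform(-3.0, 3.0))        # shear in [-3, 3] degrees
    return angle, zoom, shear


def affine_matrix(angle, zoom, shear):
    """Forward 2x2 map: rotation @ shear @ isotropic zoom."""
    rot = np.array([[np.cos(angle), -np.sin(angle)],
                    [np.sin(angle), np.cos(angle)]])
    shr = np.array([[1.0, np.tan(shear)],
                    [0.0, 1.0]])
    return rot @ shr @ (zoom * np.eye(2))


def warp_nearest(img, matrix):
    """Apply `matrix` about the image center with nearest-neighbour sampling,
    which keeps a binary mask binary. Pixels mapped from outside become 0."""
    h, w = img.shape
    center = np.array([(h - 1) / 2.0, (w - 1) / 2.0])
    rows, cols = np.indices((h, w))
    out_coords = np.stack([rows.ravel(), cols.ravel()]) - center[:, None]
    # Inverse mapping: for each output pixel, find the source pixel it came from.
    src = np.linalg.inv(matrix) @ out_coords + center[:, None]
    src = np.rint(src).astype(int)
    valid = (src[0] >= 0) & (src[0] < h) & (src[1] >= 0) & (src[1] < w)
    out = np.zeros(h * w, dtype=img.dtype)
    out[valid] = img[src[0, valid], src[1, valid]]
    return out.reshape(h, w)


def augment_pair(image, mask, rng, n_copies=10):
    """Return `n_copies` augmented (image, mask) pairs, one shared transform each."""
    pairs = []
    for _ in range(n_copies):
        m = affine_matrix(*random_affine_params(rng))
        pairs.append((warp_nearest(image, m), warp_nearest(mask, m)))
    return pairs


# Usage on a synthetic 192 x 192 frame and square mask.
rng = np.random.default_rng(0)
image = rng.random((192, 192))
mask = np.zeros((192, 192), dtype=np.uint8)
mask[80:110, 80:110] = 1
pairs = augment_pair(image, mask, rng)
```

Sharing one transform per pair keeps the ground-truth contour aligned with the augmented image. Ten transformed copies per original frame correspond to the tenfold augmentation described above.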

Ten cases reconstructed with the DESIRE network, each with an image size of 192 × 192 × 360 (frames) and between nine and fifteen slices, were used for evaluation. Left ventricle segmentations from the model were post-processed to remove erroneous extra segmentations. In each frame, if more than one contour was present, only the contour whose center of mass was closest to that of the previous frame's contour was kept.
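The post-processing rule can be sketched as below. This illustration rests on several assumptions. Masks are binary NumPy arrays of shape (frames, H, W) for one slice, and components are found with 4-connectivity. The abstract does not say how the first frame is handled, so the sketch keeps the largest component there, which is a hypothetical choice. All helper names are hypothetical.

```python
from collections import deque

import numpy as np


def label_components(mask):
    """4-connected component labelling of a 2-D binary mask (0 = background)."""
    h, w = mask.shape
    labels = np.zeros((h, w), dtype=int)
    n = 0
    for r in range(h):
        for c in range(w):
            if mask[r, c] and labels[r, c] == 0:
                n += 1                      # start a new component
                labels[r, c] = n
                queue = deque([(r, c)])
                while queue:                # breadth-first flood fill
                    y, x = queue.popleft()
                    for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                        yy, xx = y + dy, x + dx
                        if (0 <= yy < h and 0 <= xx < w
                                and mask[yy, xx] and labels[yy, xx] == 0):
                            labels[yy, xx] = n
                            queue.append((yy, xx))
    return labels, n


def centroid(mask):
    """Center of mass (row, col) of a non-empty binary mask."""
    rows, cols = np.nonzero(mask)
    return np.array([rows.mean(), cols.mean()])


def clean_slice(masks):
    """Keep one component per frame of a (frames, H, W) mask stack for one slice."""
    cleaned = np.zeros(masks.shape, dtype=bool)
    prev = None                             # centroid of the last kept contour
    for t, m in enumerate(masks):
        labels, n = label_components(m)
        if n == 0:
            continue                        # empty frame: keep the previous centroid
        comps = [labels == k for k in range(1, n + 1)]
        if prev is None:
            # Hypothetical choice: no previous contour, so keep the largest one.
            best = max(comps, key=lambda comp: comp.sum())
        else:
            best = min(comps, key=lambda comp: np.linalg.norm(centroid(comp) - prev))
        cleaned[t] = best
        prev = centroid(best)
    return cleaned
```

For 192 × 192 × 360 stacks the pure-Python labelling loop is slow. `scipy.ndimage.label` performs the same connected-component labelling much faster (its default structuring element is also 4-connected in 2D) and could replace `label_components`.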

Ejection Fraction Computation

The ejection fraction was quantified for the ten cases using the proposed DL technique, with manual contouring of breath-held segmented SSFP images and of SPARCS images serving as the reference standards. End-expiration was determined by tracking the vertical location of the centroid of each contour across frames. This signal was filtered using a bandpass filter with a passband of 0.09 to 2 Hz. For each slice, the frames in which the contour's centroid was at end-expiration were determined automatically from the peaks of the filtered vertical centroid location versus frame (Figure 1). This ensures that each slice is quantified when the diaphragm is at an end-expiratory position. The area of the automatic segmentation was then computed for each frame and plotted against frame for each slice. The largest area (peak) and the smallest area (trough) closest to end-expiration represented the end-diastolic (ED) and end-systolic (ES) areas, respectively (Figure 3). The area of each ED and ES mask in pixels was multiplied by the voxel volume (1.25 × 1.25 × 8 mm³), and the results were summed across slices to obtain the end-diastolic and end-systolic volumes (EDV and ESV). LVEF was calculated as LVEF = (EDV - ESV) / EDV.
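The end-expiration, ED/ES, and LVEF steps can be sketched in NumPy as follows. Several details are assumptions, not the authors' implementation:

- The filter is an ideal FFT band-pass. The abstract gives only the 0.09 to 2 Hz passband, not the filter design.
- The frame rate defaults to one frame per 39 ms, but `fs` is a parameter and can be set to the actual frame rate.
- End-expiration is taken as the local maxima of the filtered centroid row. Which extreme corresponds to expiration depends on image orientation.
- At each end-expiration frame, the ED and ES areas come from the nearest local maximum and minimum of the area curve. These are then averaged, following the Figure 3 caption.

All function names are hypothetical.

```python
import numpy as np

VOXEL_MM3 = 1.25 * 1.25 * 8.0      # in-plane pixel area (mm^2) x slice thickness (mm)
FS_HZ = 1.0 / 0.039                # assumption: one frame per 39 ms


def bandpass_fft(x, fs, lo=0.09, hi=2.0):
    """Ideal (brick-wall) FFT band-pass; a stand-in for the unspecified filter."""
    x = np.asarray(x, dtype=float)
    spectrum = np.fft.rfft(x - x.mean())
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    spectrum[(freqs < lo) | (freqs > hi)] = 0.0
    return np.fft.irfft(spectrum, n=len(x))


def local_extrema(x, kind="max"):
    """Indices of interior local maxima (kind="max") or minima (otherwise).
    On a plateau, the first sample is reported."""
    y = np.asarray(x, dtype=float)
    if kind != "max":
        y = -y
    return np.where((y[1:-1] > y[:-2]) & (y[1:-1] >= y[2:]))[0] + 1


def end_expiration_frames(centroid_rows, fs=FS_HZ):
    """Frames at the peaks of the band-passed vertical centroid position."""
    return local_extrema(bandpass_fft(centroid_rows, fs), "max")


def ed_es_areas(areas, ee_frames):
    """Average the area peak (ED) and trough (ES) nearest each end-expiration frame."""
    areas = np.asarray(areas, dtype=float)
    peaks, troughs = local_extrema(areas, "max"), local_extrema(areas, "min")
    ed = [areas[peaks[np.argmin(np.abs(peaks - f))]] for f in ee_frames]
    es = [areas[troughs[np.argmin(np.abs(troughs - f))]] for f in ee_frames]
    return float(np.mean(ed)), float(np.mean(es))


def lvef(ed_areas_px, es_areas_px, voxel_mm3=VOXEL_MM3):
    """Sum per-slice areas (pixels) into volumes (ml); return (EDV, ESV, LVEF %)."""
    edv = np.sum(ed_areas_px) * voxel_mm3 / 1000.0
    esv = np.sum(es_areas_px) * voxel_mm3 / 1000.0
    return edv, esv, 100.0 * (edv - esv) / edv
```

The stated passband extends to 2 Hz, so cardiac-frequency motion of the centroid can also survive the filter. In practice, a peak detector with a minimum spacing, such as `scipy.signal.find_peaks` with its `distance` argument, is a more robust alternative to `local_extrema`.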

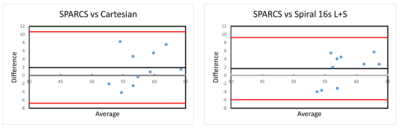

Image Analysis

Using Bland-Altman plots (Figure 4), the LVEF of each case was compared with that from Cartesian SSFP and from the 16-second low-rank and sparse (L+S) CAT-SPARCS technique.
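The Bland-Altman quantities can be computed as in the sketch below. It returns the per-case means (x-axis), the differences (y-axis), the mean bias, and the 95% limits of agreement (bias ± 1.96 SD of the differences). The abstract does not state how the p-values were obtained. The paired t statistic is included here as one common choice; a p-value could then be obtained from, for example, `scipy.stats.ttest_rel`. The function name is hypothetical, and the input values in the usage example are hypothetical, not study data.

```python
import numpy as np


def bland_altman(a, b):
    """Return per-case means (x-axis), differences (y-axis), mean bias,
    95% limits of agreement, and the paired t statistic for a vs b."""
    a, b = np.asarray(a, dtype=float), np.asarray(b, dtype=float)
    diff = a - b                                  # y-axis: difference per case
    mean = (a + b) / 2.0                          # x-axis: average of the two methods
    bias = diff.mean()                            # mean bias
    sd = diff.std(ddof=1)                         # sample SD of the differences
    limits = (bias - 1.96 * sd, bias + 1.96 * sd)
    t_stat = bias / (sd / np.sqrt(diff.size))     # paired t statistic
    return mean, diff, bias, limits, t_stat


# Usage with hypothetical LVEF values (%), not data from this study.
dl = [55.0, 60.0, 62.0]
ref = [54.0, 58.0, 63.0]
mean, diff, bias, (lo, hi), t_stat = bland_altman(dl, ref)
print(f"bias = {bias:.2f} points, 95% LoA = [{lo:.2f}, {hi:.2f}]")
```

Because LVEF is itself a percentage, the bias and limits of agreement are expressed in percentage points.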

Results

The proposed pipeline quantified LVEF from real-time cine cardiac images in agreement with both reference techniques. Figure 1 shows the proposed workflow incorporating the deep learning-based segmentation technique. Video 1 shows an example case with left ventricle segmentations. Figure 2 shows one frame from the first slice of Video 1, with its corresponding input image and binary mask. Figure 3 shows a slice from a different case with the ED and ES areas displayed, together with a plot of its left ventricular endocardial contour area versus frame. Figure 4 shows Bland-Altman plots comparing the LVEF from the free-breathing DL technique with that from the breath-held SSFP and SPARCS cine images. There was no significant difference in mean LVEF between the DL technique and either the breath-held SSFP (mean bias 1.9 percentage points, p = 0.19) or SPARCS (mean bias 1.6 percentage points, p = 0.20).

Discussion and Conclusion

Ejection fraction measurement from free-breathing, non-ECG-gated cine imaging has been limited by the difficulty of correcting for respiratory motion and of detecting the ED and ES frames. Combining the DL reconstruction framework (DESIRE) with the DL segmentation and quantification pipeline will enable rapid, automatic quantification of LV function from high-resolution free-breathing spiral cine imaging.

Acknowledgements

This work was supported by NIH R01 HL155962-01 and a Harrison Research Award.

References

1. Feng L, Srichai MB, Lim RP, et al. Highly accelerated real-time cardiac cine MRI using k–t SPARSE-SENSE. Magnetic Resonance in Medicine 2013;70:64–74.

2. Wang J, Zhou R, Salerno M. Free-breathing High-resolution Spiral Real-time Cardiac Cine Imaging using DEep learning-based rapid Spiral Image REconstruction (DESIRE). In Proceedings of the ISMRM 29th Annual Meeting and Exhibition, 2021, p. 877.

3. Zhou R, Yang Y, Mathew RC, et al. Free-breathing cine imaging with motion-corrected reconstruction at 3T using SPiral Acquisition with Respiratory correction and Cardiac Self-gating (SPARCS). Magnetic Resonance in Medicine 2019;82:706–720.

4. Ronneberger O, Fischer P, Brox T. U-Net: Convolutional Networks for Biomedical Image Segmentation. arXiv:1505.04597 [cs] 2015.

Figures

Figure 1. The deep learning-based segmentation workflow and analysis. A sample frame is shown reconstructed with NUFFT, DESIRE, and L1-SENSE. The DESIRE image is input to the network to create an automatic binary mask and contour of the left ventricle. Two graphs are shown. The first quantifies the respiratory motion of the left ventricular cavity, and the second plots the area of the segmentation mask over time to determine the end-diastolic and end-systolic areas.

Video 1. Real-time cine video reconstructed with the DESIRE technique, with left ventricle segmentations. The video contains 30 frames per slice. The computed left ventricular ejection fraction for this case was 57.22%.

Figure 2. One of the frames in the first slice from Video 1. From left to right, the input image, output binary mask, and segmented image.

Figure 3. DESIRE real-time cine LV segmentation of a single frame. Excellent segmentation is seen with the deep learning-based technique. The graph shows the contour area versus frame for the slice containing this frame. The average end-diastolic area is the mean of the areas indicated by green markers, and the average end-systolic area is the mean of the areas indicated by cyan markers.

Figure 4. Bland-Altman plots comparing the LVEF of 10 cases from SPARCS and Cartesian, and from SPARCS and spiral 16s L+S. For each case, the difference in LVEF between the two techniques is plotted on the y-axis, and the average of the two LVEFs is plotted on the x-axis. The LVEF computed from real-time cine cardiac imaging agrees with that from Cartesian and spiral L+S imaging.