1790

Convolutional Neuronal Network Inception-v3 detects Partial Volume Artifacts on 2D MR-Images of the Lung for Automated Quality Control

1Institute of Diagnostic and Interventional Radiology, Hannover Medical School, Hannover, Germany, 2German Center for Lung Research (DZL), Biomedical Research in Endstage and Obstructive Lung Disease Hannover (BREATH), Hannover, Germany

Synopsis

The partial volume effect (PVE) is a frequently observed artifact in MR imaging. Images with low spatial resolution in particular show voxel signals averaged over multiple tissue components. These artifacts can be so substantial that further analysis of the image is not meaningful and should be omitted. This is the case, for example, in phase-resolved functional lung imaging (PREFUL), which is based on the 2D acquisition of coronal image time-series to assess ventilation and perfusion dynamics. In this study, the pretrained convolutional neural network Inception-v3 was adapted via transfer learning to detect images with substantial PVE and achieved a classification accuracy of 91%.

Introduction

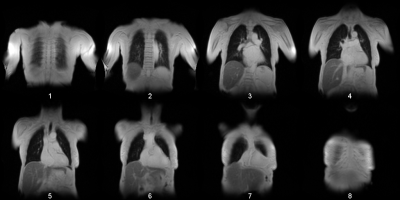

The partial volume effect (PVE) is a well-known artifact in MR imaging. PVE leads to voxel signals that are a linear combination of signals originating from multiple tissues. PVE can be so substantial that further image analysis should be omitted. One application that requires special consideration of the PVE is phase-resolved functional lung imaging (PREFUL) [1]. This method is based on the 2D acquisition of coronal image time-series to assess ventilation and perfusion dynamics. Typically, multiple coronal slices are acquired. Especially if full lung coverage is required, it is difficult to avoid including some anterior or posterior slices with PVE (see Figure 1). Since the whole PREFUL analysis relies on accurate voxel time-series originating from lung parenchyma, such slices can compromise the results. Therefore, an automated method that can identify such slices is desirable. The hypothesis of this study is that a convolutional neural network (CNN) can detect images containing strong PVE artifacts with at least 90% accuracy.

Methods

The study was approved by the institutional ethical review board and all subjects gave written informed consent. Seven healthy volunteers (age range 25-39 years, 3 female, 4 male) and 34 patients with suspected chronic thromboembolic pulmonary hypertension (age range 51-85 years, 17 female, 17 male) underwent 1.5T MRI. For PREFUL MRI, multiple coronal slices with a slice gap of 20% were acquired (see Figure 1) in free breathing using a 2D spoiled gradient echo sequence with a temporal resolution of 220 ms for a total of 250 frames (see Lasch et al. [2] for details). After non-rigid registration, the image time-series was averaged in the temporal dimension and preprocessed as follows:

1. Crop to the center (exclude 20 voxels from each edge)

2. Resize image to input size of 299 x 299

3. Replicate the image to 299 x 299 x 3 (pseudo RGB)

4. Normalize the value range by the maximum value
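The four preprocessing steps above can be sketched in NumPy as follows. This is only an illustration under stated assumptions, not the authors' implementation: the function name `preprocess`, the array layout (frames, rows, columns) and the nearest-neighbour resizing are hypothetical choices, since the abstract does not state which interpolation was used.

```python
import numpy as np

def preprocess(series, margin=20, size=299):
    """Turn a registered image time-series (frames, rows, cols) into a CNN input.

    Hypothetical helper; interpolation method and array layout are assumptions.
    """
    # Average the registered time-series over the temporal dimension.
    mean_img = series.mean(axis=0)
    # Step 1: crop to the center by excluding `margin` voxels from each edge.
    cropped = mean_img[margin:-margin, margin:-margin]
    # Step 2: resize to size x size (nearest-neighbour lookup, an assumption).
    rows = np.arange(size) * cropped.shape[0] // size
    cols = np.arange(size) * cropped.shape[1] // size
    resized = cropped[np.ix_(rows, cols)]
    # Step 3: replicate the gray-scale image into three identical channels.
    rgb = np.repeat(resized[:, :, None], 3, axis=2)
    # Step 4: normalize the value range by the maximum value.
    peak = rgb.max()
    return rgb / peak if peak > 0 else rgb
```

Called on an array of shape (250, rows, cols), the function returns an array of shape (299, 299, 3) whose largest value is 1.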

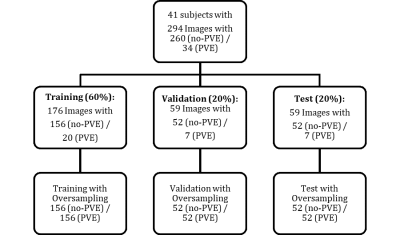

The pretrained Inception-v3 (~1,000,000 training images from the ImageNet database) was adapted for transfer learning by replacing the fully connected layer and the final classification layer so that the network distinguishes two classes. The data (n=294) were labeled by an MR physicist (with 8 years of experience in lung MR imaging) and randomly split into 60% training (n=176), 20% validation (n=59) and 20% test (n=59) sets. Due to a high class imbalance, the sets were oversampled by duplicating randomly selected images to establish an even class distribution [3]. Image augmentation with random shearing in x- and y-direction [-5°, 5°], translation in x- and y-direction [-10, 10 voxels], scaling [0.75, 1.25] and rotation [-20°, 20°] was performed in each epoch to prevent the net from overfitting. Image augmentation was also applied to the oversampled data to introduce some variation between the duplicated images.
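The split and the oversampling described above can be illustrated with a minimal standard-library sketch. The helper names `split_indices` and `oversample` are hypothetical, and the rounding used to arrive at 176/59/59 is an assumption; the abstract only reports the resulting set sizes.

```python
import random

def split_indices(n, rng, train_fraction=0.6):
    """Randomly split n sample indices into training, validation and test sets."""
    idx = list(range(n))
    rng.shuffle(idx)
    n_train = int(train_fraction * n)   # assumed rounding: 176 for n = 294
    n_val = (n - n_train) // 2          # remainder shared equally: 59 and 59
    return idx[:n_train], idx[n_train:n_train + n_val], idx[n_train + n_val:]

def oversample(items, labels, rng):
    """Duplicate randomly selected items until every class is equally frequent."""
    by_class = {}
    for item, label in zip(items, labels):
        by_class.setdefault(label, []).append(item)
    target = max(len(members) for members in by_class.values())
    out_items, out_labels = [], []
    for label, members in by_class.items():
        extra = [rng.choice(members) for _ in range(target - len(members))]
        out_items += members + extra
        out_labels += [label] * target
    return out_items, out_labels

rng = random.Random(0)
train, val, test = split_indices(294, rng)
# Hypothetical labels for the training set: 21 slices with PVE, 155 without.
train_labels = [1] * 21 + [0] * 155
balanced_items, balanced_labels = oversample(train, train_labels, rng)
```

Because the duplicates are exact copies, the per-epoch augmentation described above is what introduces variation between them.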

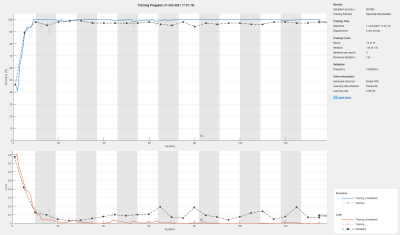

Training was performed on an NVIDIA Titan V GPU. The settings were: stochastic gradient descent with momentum for optimization, an initial learning rate of 0.005, a batch size of 32, shuffling of training and validation data every epoch, and a maximum of 15 epochs. The classification accuracy was calculated for the original test data and for the oversampled test data. To reduce the random bias introduced by the selection of duplicated images, the oversampling was repeated 100 times to obtain an averaged accuracy.
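The repeated evaluation on oversampled test data can be sketched as follows. This is a hedged illustration using only the standard library: the function names are hypothetical, and the inputs are assumed to be one boolean per test image (whether the net classified it correctly) together with its true label.

```python
import random
import statistics

def oversampled_accuracy(correct, labels, rng):
    """Accuracy on one randomly oversampled, class-balanced copy of the test set."""
    by_class = {}
    for ok, label in zip(correct, labels):
        by_class.setdefault(label, []).append(ok)
    target = max(len(outcomes) for outcomes in by_class.values())
    hits = 0
    for outcomes in by_class.values():
        # Duplicating an image duplicates its classification outcome as well.
        extra = [rng.choice(outcomes) for _ in range(target - len(outcomes))]
        hits += sum(outcomes) + sum(extra)
    return hits / (target * len(by_class))

def repeated_accuracy(correct, labels, repeats=100, seed=0):
    """Mean and standard deviation of the accuracy over repeated oversampling."""
    rng = random.Random(seed)
    runs = [oversampled_accuracy(correct, labels, rng) for _ in range(repeats)]
    return statistics.mean(runs), statistics.stdev(runs)
```

The spread across repetitions comes only from which minority-class images happen to be duplicated, which is why an average over many repetitions gives a more stable estimate than a single oversampled set.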

Results

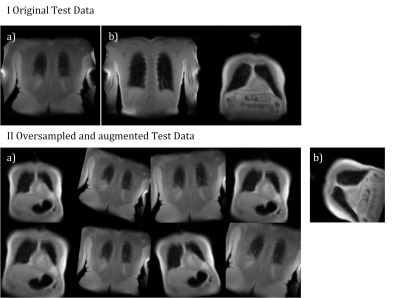

Training was completed after ~7 minutes and converged to a validation accuracy of 98% (see Figure 3). An accuracy of 95% was achieved for the test data without oversampling. For the oversampled data, an average accuracy of 91% (σ=0.03) was obtained. Figure 4 shows the cases that were misclassified by the Inception-v3 net.

Discussion

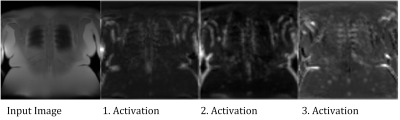

As hypothesized, an accuracy above 90% was achieved. The misclassified cases are all located in the more anterior and posterior sections of the lung and thus contain at least some proportion of voxels with PVE. The classification of such slices is therefore ambiguous and probably varies from observer to observer. Analysis of the activations in the convolutional layers (see Figure 5) suggests that the net actually learned to identify rib structures.

Since a typical MR acquisition includes only a small fraction of slices with substantial PVE, the class distribution of the data was highly imbalanced. Such a class imbalance (88% vs. 12%) can lead to problems during both training and evaluation. For example, an accuracy of 88% can be achieved by simply choosing the majority class in all cases. To avoid such problems, a simple oversampling with random image duplication [3] was employed successfully. Because the duplicated images are selected at random, the oversampling had to be repeated to reduce random effects on the accuracy estimate.
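The majority-class example can be made concrete with a short sketch. The labels below are synthetic and only reproduce the 88% vs. 12% ratio. The mean per-class recall is used here as a stand-in for the accuracy expected on a perfectly class-balanced set, which is an illustrative choice and not a metric reported in this study.

```python
def accuracy(predictions, labels):
    """Fraction of predictions that match the labels."""
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)

def balanced_accuracy(predictions, labels):
    """Mean per-class recall, i.e. the expected accuracy on a balanced set."""
    recalls = []
    for cls in sorted(set(labels)):
        idx = [i for i, y in enumerate(labels) if y == cls]
        recalls.append(sum(predictions[i] == cls for i in idx) / len(idx))
    return sum(recalls) / len(recalls)

# Synthetic labels with the reported imbalance: 88 slices without, 12 with PVE.
labels = [0] * 88 + [1] * 12
always_major = [0] * len(labels)      # trivial classifier: always "no PVE"

plain = accuracy(always_major, labels)              # 0.88
balanced = balanced_accuracy(always_major, labels)  # 0.5
```

The trivial classifier looks acceptable on the imbalanced data but drops to chance level once both classes carry equal weight, which is the reason for also reporting accuracy on oversampled test data.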

One major drawback of this study is that manual classification was only performed by one observer for single-center data. Therefore, an analysis of the interobserver variability was not possible and generalization of the net might be limited.

Conclusion

Recently, CNNs were demonstrated to rate motion artifacts in neuroimaging [4]. Similarly, this study demonstrates for the specific MR processing example of PREFUL [1] that CNNs can also be employed as a tool for automated quality management by excluding slices that show partial volume artifacts. This automation was shown to work with high accuracy and without producing critical misclassifications.

Acknowledgements

This work was supported by the German Center for Lung Research (DZL). The authors thank Melanie Pfeifer and Frank Schröder for their help during data acquisition.

References

1. Voskrebenzev A, Gutberlet M, Klimeš F, Kaireit TF, Schönfeld C, Rotärmel A, Wacker F, Vogel-Claussen J. Feasibility of quantitative regional ventilation and perfusion mapping with phase-resolved functional lung (PREFUL) MRI in healthy volunteers and COPD, CTEPH, and CF patients. Magn Reson Med. 2018;79(4):2306-2314. doi:10.1002/mrm.26893

2. Lasch F, Karch A, Koch A, Derlin T, Voskrebenzev A, Alsady TM, Hoeper MM, Gall H, Roller F, Harth S, Steiner D, Krombach G, Ghofrani HA, Rengier F, Heußel CP, Grünig E, Beitzke D, Hacker M, Lang IM, Behr J, Bartenstein P, Dinkel J, Schmidt KH, Kreitner KF, Frauenfelder T, Ulrich S, Hamer OW, Pfeifer M, Johns CS, Kiely DG, Swift AJ, Wild J, Vogel-Claussen J. Comparison of MRI and VQ-SPECT as a Screening Test for Patients With Suspected CTEPH: CHANGE-MRI Study Design and Rationale. Front Cardiovasc Med. 2020;7:51. doi:10.3389/fcvm.2020.00051

3. Kenta. Oversampling for deep learning: classification example (https://github.com/KentaItakura/Image-classification-using-oversampling-imagedatastore/releases/tag/2.0), GitHub. Retrieved November 1, 2021.

4. Fantini I, Yasuda C, Bento M, Rittner L, Cendes F, Lotufo R. Automatic MR image quality evaluation using a Deep CNN: A reference-free method to rate motion artifacts in neuroimaging. Comput Med Imaging Graph. 2021;90:101897. doi:10.1016/j.compmedimag.2021.101897

Figures