1785

Benchmarking learned non-Cartesian k-space trajectories and reconstruction networks1NeuroSpin, Joliot, CEA, Université Paris-Saclay, F-91191, Gif-sur-Yvette, France, 2Inria, Parietal, Université Paris-Saclay, F-91120, Palaiseau, France

Synopsis

We benchmark the current existing methods to jointly learn non-Cartesian k-space trajectory and reconstruction: PILOT1, BJORK2 and compare them with those obtained from recently developed generalized hybrid learning (HybLearn) framework3. We present the advantages of using projected gradient descent to enforce MR scanner hardware constraints as compared to using added penalties in the cost function. Further, we use the novel HybLearn scheme to jointly learn and compare our results through retrospective study on fastMRI validation dataset.

Introduction

Compressed Sensing in MRI involves the optimization of k-space sampling trajectories and image reconstruction from the undersampled k-space data. In this regard, PILOT1,4 was developed to learn k-space trajectory jointly with a U-Net as reconstruction network. However, it relies on auto-differentiation of the NUFFT operator, which may not be very accurate as shown in5, thus resulting in suboptimal local minima.More recently, BJORK2 learned trajectories using a more accurate Jacobian approximation of the NUFFT operator5 . Yet, both BJORK2 and PILOT1 enforce the hardware constraints as penalty terms in the overall loss function, thus requiring the tuning of at least one hyperparameter associated with these terms. Additionally, such penalty could affect the overall gradients, thereby resulting in suboptimality. Further, BJORK2 was parameterized with B-spline curves, which could severely limit the shape of trajectories and prevent them from better exploring the k-space. Finally, both above mentioned methods do not make use of any density compensation (DCp) mechanism for image reconstruction, although the latter plays a critical role in obtaining cleaner MR images in the non-Cartesian deep learning setting6.

In this work, we compare BJORK2 and PILOT1 with the proposed generic hybrid framework3 for learning k-space trajectories with projected gradient descent.

Model and Notation

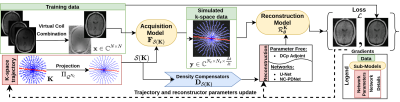

We used the generic model (Fig.1) developed in3 to learn hardware compliant k-space trajectories \(\mathbf{K}\). The model was trained on complex-valued brain images \(\mathbf{x}\) obtained by virtual coil combination7 of the per-channel images in fastMRI dataset8, to account for the phase accrual and make the forward model more realistic. Here, projection \(\Pi_{\mathcal{Q}_{N_c}}\) was carried out after every gradient descent step to enforce constraints. Later on, the trajectories are interpolated with a linear operator \(\mathcal{S}\) to model the analog-to-digital converter in the scanner. The acquisition model is simulated by a forward NUFFT operator \(\mathbf{F}_{\mathcal{S}(\mathbf{K})}\). The density compensator \(\mathbf{D}_{\mathcal{S}(\mathbf{K})}\) is estimated with9 and is used by reconstruction network \(\mathcal{R}_{\boldsymbol{\theta}}^\mathbf{K}\) giving reconstructed image \(\widehat{\mathbf{x}}\).Methods

The model described above was trained with combined L1-L2-MSSIM loss \(\mathcal{L}\) as described in3. The trajectories were learned with ADAM optimizer and reconstruction network \(\mathcal{R}_{\boldsymbol{\theta}}^\mathbf{K}\) was trained with Rectified-ADAM. The training was done with a learning rate of \(10^{-3}\) and batch size of 64 on the fastMRI training data, which was split into training and validation in a 90%-10% ratio. This enabled early stopping to prevent overfitting. The original fastMRI validation dataset was used only for later evaluation. The entire training was carried out at different resolution levels and using HybLearn as presented in Sec.3.3 and Tab.1 in3.We learned k-space trajectories with \(N_c=16\) shots and \(N_s=512\) samples per shot (observation time \(T_{\textrm{obs}}=5.12\textrm{ms}\), raster time \(\Delta\textrm{}t=10\text{µs}\), dwell time \(\delta\textrm{}t=2\text{µs}\)). For comparison with an earlier baseline, we use SPARKLING trajectories generated with the learned sampling density using LOUPE10 as obtained in11 and trained NC-PDNet6 as a reconstructor for it.

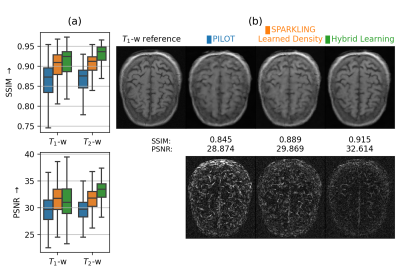

We compared our results with PILOT and BJORK trajectories, which were obtained directly from the respective authors. As we didn’t receive their trained reconstruction networks, we trained NC-PDNet by ourselves for a fair comparison: NC-PDNet makes use of DCp and its Cartesian version stood 2nd in the 2020 fastMRI challenge12. This way, we used the same reconstructor for all the trajectories, with the same network parameters and which was trained individually. Our comparison with PILOT (Fig.3) was carried out for \(T_1\) and \(T_2\) contrasts in the fastMRI dataset.

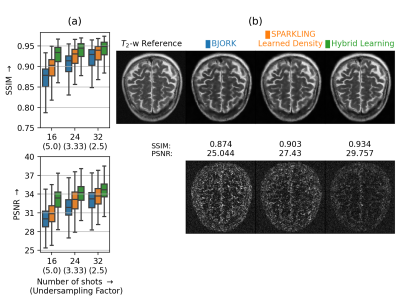

As the BJORK trajectory was learned for \(\Delta\textrm{}t=4\textrm{µs}\), to ensure fair comparison, we obtained trajectories with the same specifications. This comparison (Fig.4) was done at different undersampling factors (UF).

Results

When comparing the zoomed portions of optimized trajectories (Fig.2), we observe that PILOT has a k-space hole at the center while BJORK samples the k-space densely slightly off the center, which is suboptimal. In contrast, HybLearn and SPARKLING methods sample the central region of k-space more densely, which could help obtain improved image quality.We see that PILOT and BJORK do not efficiently use the gradient hardware and have similar gradient and slew rate profiles, while SPARKLING and HybLearn trajectories, are hitting the gradient constraints more often for the maximal gradient and almost everywhere for the slew rate. This difference could be attributed to using a projector for hardware constraints as compared to handling a penalty.

Next, we compared the retrospective results with PILOT (Fig.3) and BJORK (Fig.4) obtained on 512 slices from fastMRI validation dataset. We observe that both SPARKLING with a learned density and HybLearn outperform PILOT and BJORK, with HybLearn performing the best with a gain of nearly 0.06 in SSIM and 3-4dB in PSNR scores as compared to PILOT and BJORK.

Conclusion

In this work, we benchmarked the trajectories obtained by HybLearn with PILOT1 and BJORK2. Although the learned neural networks in PILOT and BJORK were not available for a full end-to-end comparison, we performed a fair assessment by training a NC-PDNet6 as common reference for image reconstruction. Through restrospective studies on the fastMRI validation dataset, we showed that this hybrid learning scheme works across multiple resolutions and leads to superior performance of the trajectories and improved image quality overall.Future prospects of this work include prospective implementations through modifications of \(T_1\) and \(T_2\)-w imaging sequences.

Acknowledgements

This work was granted access to the HPC resources of IDRIS under the allocation 2021-AD011011153 made by GENCI. Chaithya G R was supported by the CEA NUMERICS program, which has received funding from the European Union’s Horizon 2020 research and innovation program under the Marie Sklodowska-Curie grant agreement No 800945. We would like to thank the authors of BJORK2 and PILOT1 for sharing the trajectories for comparisons done in this abstract.

References

1. Weiss, T., and others (2020). PILOT: Physics-informed learned optimal trajectories for accelerated MRI. arXiv:1909.05773v4.

2. Wang, G., and others (2021). B-spline parameterized joint optimization of reconstruction and k-space trajectories (BJORK) for accelerated 2d MRI. arXiv preprint arXiv:2101.11369.

3. R, C.G., Ramzi, Z., and Ciuciu, P. (2021). Hybrid learning of Non-Cartesian k-space trajectory and MR image reconstruction networks.

4. Vedula, S., and others (2020). 3D FLAT: Feasible Learned Acquisition Trajectories for Accelerated MRI. In Machine learning for medical image reconstruction: 3rd intern. WS mlmir 2020, held in conjunction with miccai 2020 (Springer Nature), p. 3.

5. Guanhua, W., Douglas, C. Noll, and Fessler, J.A. (2021). Efficient NUFFT Backpropagation for Stochastic Sampling Optimization in MRI. In 29th proceedings of the ismrm society.

6. Ramzi, Z., and others (2021). NC-PDNet: a Density-Compensated Unrolled Network for 2D and 3D non-Cartesian MRI Reconstruction.

7. Parker, D.L., and others (2014). Phase reconstruction from multiple coil data using a virtual reference coil. Magnetic Resonance in Medicine 72, 563–569.

8. Zbontar, J., and others (2018). fastMRI: An open dataset and benchmarks for accelerated MRI. arXiv:1811.08839.

9. Pipe, J.G., and Menon, P. (1999). Sampling density compensation in MRI: Rationale and an iterative numerical solution. Magn. Reson. Med. 41, 179–186.

10. Bahadir, C.D., and others (2020). Deep-learning-based optimization of the under-sampling pattern in MRI. IEEE Trans. Comput. Imaging 6, 1139–1152.

11. Chaithya, G.R., and others (2021). Learning the sampling density in 2D SPARKLING MRI acquisition for optimized image reconstruction.

12. Muckley, M.J., Riemenschneider, B., Radmanesh, A., Kim, S., Jeong, G., Ko, J., Jun, Y., Shin, H., Hwang, D., Mostapha, M., et al. (2021). Results of the 2020 fastMRI challenge for machine learning MR image reconstruction. IEEE Transactions on Medical Imaging 40, 2306–2317.

Figures

The optimized hardware compliant non-Cartesian k-space trajectories using (a) PILOT, (b) SPARKLING with learned density using LOUPE, (c) HybLearn scheme, (d) BJORK. The number of shots Nc=16. The number of dwell time samples are set to match the same number of sampling points overall. Zoomed in visualizations of the center of k-space (bottom) and slightly off-center (top) is presented at the right of corresponding trajectories. The corresponding gradient \(||G||_2\) (in mT/m) and slew rate \(||S||_2\) (in T/m/s) profiles are depicted below each trajectory.

(a) Box plots comparing the image reconstruction results on a retrospective study using 512 slices on T2 contrast (fastMRI validation dataset) using BJORK (blue), SPARKLING with learned density (orange) and HybLearn (green). We present the results at varying undersampling factors characterized with Nc=16, 24 and 32. SSIMs/PSNRs appear on top/at the bottom. (b) Top: T2-w reference image and reconstruction results for a single slice from file_brain_AXT2_205_2050175.h5 with corresponding strategies. (b) Bottom: The residuals, scaled to match and compare across methods.