1762

sim2real: Cardiac MR image simulation-to-real translation via unsupervised GANs

1 Biomedical Engineering Department, Eindhoven University of Technology, Eindhoven, Netherlands, 2 Philips Research Laboratories, Hamburg, Germany, 3 MR R&D - Clinical Science, Philips Healthcare, Best, Netherlands

Synopsis

There has been considerable interest in the MR physics-based simulation of a database of virtual cardiac MR images for the development of deep-learning analysis networks. However, the use of such a database is limited, or yields suboptimal performance, because of the realism gap, missing textures, and the simplified appearance of simulated images. In this work we 1) provide image simulation on virtual XCAT subjects with varying anatomies, and 2) propose a sim2real translation network to improve image realism. Our usability experiments suggest that sim2real data has good potential to augment training data and boost the performance of a segmentation algorithm.

Introduction

A cohort of virtual cardiac magnetic resonance (CMR) images can be simulated to aid the development and adaptation of data-hungry deep-learning (DL) based medical image analysis methods. Recent studies have shown the effectiveness of image simulation in the context of training a DL model for CMR image segmentation [1,2]. Although simulated images provide accurate anatomical information, the performance of such models is still suboptimal as a result of the realism gap, missing texture and simplistic appearance of the simulated images. This holds especially for models trained completely on simulated images and evaluated on real ones. Generative adversarial networks (GANs) [3], on the other hand, promise to synthesize realistic examples, as demonstrated by applications for multi-modal medical image translation [4,5,6]. However, GAN-generated images may not necessarily represent plausible anatomy.

The purpose of the current research is to reconcile the two worlds of simulation and synthesis, as defined in [7], and take advantage of recent developments to reduce the realism gap between simulated and real data using GANs for unpaired/unsupervised translation. The contributions are two-fold: 1) physics-based simulation of cardiac MR images on a population of XCAT subjects, and 2) GAN-based image-to-image translation for style (texture) transfer from real images. The framework is named sim2real translation.

Materials and Methods

The 4D XCAT phantom [8] is utilized as the basis of the anatomical model for creating virtual subjects by adjusting the available parameters that alter the geometry of the human anatomy. We employ our in-house CMR image simulation framework, based on the analytical Bloch equations, to generate varying contrast on the labels of XCAT virtual subjects [2].

A GAN model based on contrastive learning, known as CUT [9], is used for the task of unpaired translation between the real and the simulated images to transfer the realistic style (texture) while preserving the anatomical information (content) of the simulation. Contrastive learning encourages the encoded features of two patches from the same location in the input (simulated) and translated images to be similar, yet different from those of patches at other locations. Compared to other unpaired translation frameworks such as CycleGAN [10], CUT is a one-sided network with a much lighter generator architecture and therefore requires less data for training. The content is preserved through a multilayer patch-wise contrastive loss added to the adversarial loss, as shown in Figure 1.
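
As an illustration of the patch-wise contrastive term, the following minimal NumPy sketch computes an InfoNCE-style loss over patch features. It is not the authors' implementation: the function names, the feature shapes, and the temperature of 0.07 are assumptions made for this example, and the feature extraction by the generator's encoder is omitted.

```python
import numpy as np

def patch_nce_loss(feat_in, feat_out, temperature=0.07):
    """InfoNCE loss over patches of one feature layer (illustrative sketch).

    feat_in, feat_out: arrays of shape (num_patches, dim). Row i of each
    array is assumed to come from the same spatial location of the input
    and the translated image. The temperature value is an assumption.
    """
    # L2-normalise so that dot products are cosine similarities.
    q = feat_out / np.linalg.norm(feat_out, axis=1, keepdims=True)
    k = feat_in / np.linalg.norm(feat_in, axis=1, keepdims=True)
    # Entry (i, j): similarity of translated patch i to input patch j.
    logits = q @ k.T / temperature
    # Numerically stable log-softmax over each row.
    logits = logits - logits.max(axis=1, keepdims=True)
    log_prob = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    # Positives sit on the diagonal (same location); the rest are negatives.
    return float(-np.mean(np.diag(log_prob)))

def multilayer_patch_nce(layer_pairs, temperature=0.07):
    """Sum the patch loss over (feat_in, feat_out) pairs from several layers."""
    return sum(patch_nce_loss(a, b, temperature) for a, b in layer_pairs)

# Toy check with random features standing in for encoder outputs.
rng = np.random.default_rng(0)
feats = rng.normal(size=(16, 32))
print(patch_nce_loss(feats, feats))                       # aligned patches
print(patch_nce_loss(feats, np.roll(feats, 1, axis=0)))   # misaligned patches
```

In this toy check the aligned case gives a much smaller loss than the misaligned one, which is the property the loss relies on to keep content at the same location during translation.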

The M&Ms challenge data [11] are used as the source of real cardiac MR images. To explore the effects of multi-vendor data, we utilize a subset of the data consisting of 150 subjects, with a mix of healthy controls and patients with a variety of heart conditions, scanned using Siemens (Vendor A) and Philips (Vendor B) scanners.

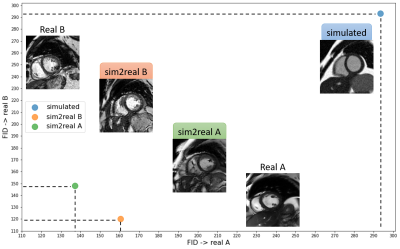

Two identical sim2real models are trained using the data from vendor A and vendor B (sim2real A and sim2real B) to investigate the network’s ability to transfer vendor-specific image appearance onto simulated images. We calculate the widely used Fréchet Inception Distance (FID) score [12] between feature vectors computed for real and translated images to evaluate the similarity between the simulated database and its respective real data, before and after translation. Additionally, we evaluate the usefulness of our sim2real data in aiding a DL segmentation model for the task of cardiac segmentation. We utilize an nnU-Net [13], trained to segment the left ventricle (LV), right ventricle (RV), and the left ventricular myocardium (MYO).
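
The FID compares the mean and covariance of two sets of feature vectors: FID = ||mu_a - mu_b||^2 + Tr(C_a + C_b - 2 (C_a C_b)^(1/2)). The sketch below evaluates this expression with NumPy. It assumes the feature vectors have already been extracted (in the standard FID, from an Inception network, a step not shown here), and the function name is chosen for this example only.

```python
import numpy as np

def frechet_distance(feats_a, feats_b):
    """Frechet distance between two feature sets of shape (n_samples, dim)."""
    mu_a, mu_b = feats_a.mean(axis=0), feats_b.mean(axis=0)
    cov_a = np.cov(feats_a, rowvar=False)
    cov_b = np.cov(feats_b, rowvar=False)
    # Tr((C_a C_b)^(1/2)) equals the sum of the square roots of the
    # eigenvalues of C_a C_b, which are real and non-negative for
    # covariance matrices; clip tiny negative values from round-off.
    eigvals = np.linalg.eigvals(cov_a @ cov_b).real
    trace_sqrt = np.sqrt(np.clip(eigvals, 0.0, None)).sum()
    diff = mu_a - mu_b
    return float(diff @ diff + np.trace(cov_a) + np.trace(cov_b)
                 - 2.0 * trace_sqrt)

# Toy check: random vectors stand in for image features.
rng = np.random.default_rng(0)
real = rng.normal(size=(500, 4))
shifted = real + np.array([3.0, 0.0, 0.0, 0.0])
print(frechet_distance(real, real))     # close to 0 for identical sets
print(frechet_distance(real, shifted))  # close to 9, the squared mean shift
```

A pure shift of the mean leaves the covariance term at zero, so the distance reduces to the squared length of the shift.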

Results

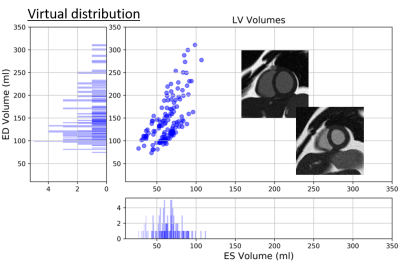

Two examples of simulated images and statistics of the XCAT virtual subjects’ distribution in terms of LV volumes are depicted in Figure 2.

The FID score is computed between each of the simulated, sim2real A and sim2real B data sets and the real data from vendor A and vendor B. A lower FID score suggests more realistic generated images, whose feature statistics are closer to those of the real database. The results are shown in Figure 3. As expected, the original simulated data has a high FID score with respect to both real A and real B data. Generally, the sim2real model substantially reduces the FID between the simulated data and real images, indicating an improvement in image realism. Moreover, the vendor-specific imaging features are captured by the network and transferred to the simulated images.

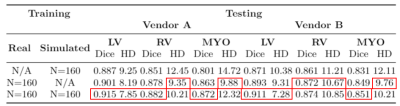

The segmentation performance of three different models can be observed in Table 1, presenting the evaluation of all models on a separate test set. The results suggest that the model trained with sim2real images already adapts well to real data, exhibiting a slight drop in performance compared to the model trained with real data. Additionally, we observe that augmenting the training with sim2real data has a positive impact on segmentation accuracy (Dice score), particularly for the LV.
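
For reference, the per-structure Dice score can be computed from a predicted and a reference label map as in the sketch below. The integer label encoding (1 = LV, 2 = MYO, 3 = RV) and the function name are assumptions made for this example, not details taken from the evaluation pipeline used here.

```python
import numpy as np

# Hypothetical label encoding, assumed for illustration only.
LABELS = {"LV": 1, "MYO": 2, "RV": 3}

def dice_per_class(pred, target, labels=LABELS):
    """Dice = 2 |P ∩ T| / (|P| + |T|) for each labelled structure."""
    scores = {}
    for name, value in labels.items():
        p = pred == value
        t = target == value
        denom = p.sum() + t.sum()
        # Dice is undefined when the structure is absent from both maps.
        scores[name] = (float(2.0 * np.logical_and(p, t).sum() / denom)
                        if denom else float("nan"))
    return scores

# Toy 3x3 label maps: the prediction misses one LV pixel.
target = np.array([[1, 1, 0], [2, 2, 3], [0, 3, 3]])
pred = np.array([[1, 0, 0], [2, 2, 3], [0, 3, 3]])
print(dice_per_class(pred, target))
```

In the toy example the LV score is 2/3 (one of two reference pixels is matched, with no false positives), while MYO and RV score 1.0.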

Discussion and Conclusion

In this work, we created a database of virtual cardiac MR images simulated on the XCAT anatomical phantom and investigated the effectiveness of an unsupervised GAN for the task of simulation-to-real translation, named sim2real. We attempted to reduce the realism gap between the simplified image simulation and complex realistic image textures. Our sim2real model could learn the vendor-specific imaging features and map them onto the simulated images, resulting in a reduction of the FID scores, which can be attributed to greater similarity between the simulated and real databases. Our usability experiments suggested that sim2real data has good potential to augment real training data, particularly in scenarios where data is scarce.

Acknowledgements

This research is a part of the OpenGTN project, supported by the European Union in the Marie Curie Innovative Training Networks (ITN) fellowship program under project No. 76446

References

[1] C. G. Xanthis, D. Filos, K. Haris, and A. H. Aletras, “Simulator-generated training datasets as an alternative to using patient data for machine learning: An example in myocardial segmentation with MRI,” Comput. Methods Programs Biomed., vol. 198, p. 105817, Jan. 2021.

[2] Y. Al Khalil, S. Amirrajab, C. Lorenz, J. Weese, and M. Breeuwer, “Heterogeneous virtual population of simulated CMR images for improving the generalization of cardiac segmentation algorithms,” in Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), 2020, vol. 12417 LNCS, pp. 68–79.

[3] I. J. Goodfellow et al., “Generative Adversarial Networks,” Commun. ACM, vol. 63, no. 11, pp. 139–144, Jun. 2014.

[4] D. F. Bauer et al., “Generation of annotated multimodal ground truth datasets for abdominal medical image registration,” Int. J. Comput. Assist. Radiol. Surg., vol. 16, no. 8, pp. 1277–1285, May 2021.

[5] C.-B. Jin et al., “Deep CT to MR Synthesis Using Paired and Unpaired Data,” Sensors (Basel)., vol. 19, no. 10, May 2019.

[6] A. Chartsias, T. Joyce, R. Dharmakumar, and S. A. Tsaftaris, “Adversarial Image Synthesis for Unpaired Multi-modal Cardiac Data,” Lect. Notes Comput. Sci. (including Subser. Lect. Notes Artif. Intell. Lect. Notes Bioinformatics), vol. 10557 LNCS, pp. 3–13, 2017.

[7] A. F. Frangi, S. A. Tsaftaris, and J. L. Prince, “Simulation and Synthesis in Medical Imaging,” IEEE Trans. Med. Imaging, vol. 37, no. 3, pp. 673–679, Mar. 2018.

[8] W. P. Segars, G. Sturgeon, S. Mendonca, J. Grimes, and B. M. W. Tsui, “4D XCAT phantom for multimodality imaging research,” Med. Phys., vol. 37, no. 9, pp. 4902–4915, 2010.

[9] T. Park, A. A. Efros, R. Zhang, and J.-Y. Zhu, “Contrastive Learning for Unpaired Image-to-Image Translation,” Lect. Notes Comput. Sci. (including Subser. Lect. Notes Artif. Intell. Lect. Notes Bioinformatics), vol. 12354 LNCS, pp. 319–345, Jul. 2020.

[10] J.-Y. Zhu, T. Park, P. Isola, and A. A. Efros, “Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks,” Proc. IEEE Int. Conf. Comput. Vis., vol. 2017-October, pp. 2242–2251, Mar. 2017.

[11] V. M. Campello et al., “Multi-Centre, Multi-Vendor and Multi-Disease Cardiac Segmentation: The M&Ms Challenge,” IEEE Trans. Med. Imaging, 2021.

[12] M. Heusel, H. Ramsauer, T. Unterthiner, B. Nessler, and S. Hochreiter, “GANs Trained by a Two Time-Scale Update Rule Converge to a Local Nash Equilibrium,” Adv. Neural Inf. Process. Syst., vol. 2017-December, pp. 6627–6638, Jun. 2017.

[13] F. Isensee, P. F. Jaeger, S. A. A. Kohl, J. Petersen, and K. H. Maier-Hein, “nnU-Net: a self-configuring method for deep learning-based biomedical image segmentation,” Nat. Methods, vol. 18, no. 2, pp. 203–211, Dec. 2020.

Figures