1759

Transfer Learning in Ultrahigh Field (7T) Cardiac MRI – Automatic Left Ventricular Segmentation in a Porcine Animal Model

1Chair of Cellular and Molecular Imaging, Comprehensive Heart Failure Center (CHFC), University Hospital Wuerzburg, Wuerzburg, Germany, 2Center for Computational and Theoretical Biology (CCTB), University of Wuerzburg, Wuerzburg, Germany, 3Department of Internal Medicine I, University Hospital Wuerzburg, Wuerzburg, Germany

Synopsis

Cardiac magnetic resonance (CMR) is considered the gold standard for evaluating cardiac function. Tools for automatic segmentation already exist in the clinical context. To bring the benefits of automatic segmentation to preclinical research, we use a deep learning-based model. We demonstrate that small training data sets whose parameters deviate from the clinical situation (here, high-resolution images of porcine hearts acquired at 7T) are sufficient to achieve good correlation with manual segmentation. We obtain DICE-scores of 0.87 (LV) and 0.85 (myocardium) and find high agreement of the calculated functional parameters (Pearson's r between 0.95 and 0.99).

Introduction

CMR is the gold standard medical imaging technique for analyzing functional parameters and ventricular volumes.1 Calculating clinically relevant functional parameters such as ejection fraction, stroke volume, and left ventricular mass requires segmentation of endo- and epicardial contours, which is both time-consuming and examiner-dependent. Reliable automatic segmentation tools are therefore not only convenient but also improve the reproducibility of results, which in turn improves treatment decisions. While commercially available software that provides automatic algorithms already exists for human data, it often fails to properly segment non-human images used in preclinical research (Figure 1). In this study we apply transfer learning to create a deep learning model for rapid automatic segmentation of cine images acquired in a large animal model of acute and chronic infarction at ultrahigh field strength (7T).

Methods
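
As a rough illustration of how the functional parameters named in the Introduction follow from segmented contours, the sketch below sums segmented pixels over short-axis slices and derives stroke volume, ejection fraction, and myocardial mass from end-diastolic and end-systolic volumes. The function names, the pixel counts, and the slice spacing of 6 mm are hypothetical (the abstract does not state the slice spacing), and the myocardial density of 1.05 g/ml is a commonly used value, not one taken from this study. Only the in-plane resolution of 0.4 x 0.4 mm comes from the text.

```python
def volume_ml(pixel_counts, pixel_area_mm2, slice_spacing_mm):
    """Summation-of-slices volume: segmented pixels per slice times voxel volume.

    pixel_counts: number of segmented pixels in each short-axis slice.
    Returns the volume in ml (1 ml = 1000 mm^3).
    """
    voxel_volume_mm3 = pixel_area_mm2 * slice_spacing_mm
    return sum(pixel_counts) * voxel_volume_mm3 / 1000.0


def lv_function(edv_ml, esv_ml):
    """Stroke volume (ml) and ejection fraction (%) from ED and ES volumes."""
    if edv_ml <= 0:
        raise ValueError("end-diastolic volume must be positive")
    sv_ml = edv_ml - esv_ml
    return {"sv_ml": sv_ml, "ef_percent": 100.0 * sv_ml / edv_ml}


def myocardial_mass_g(myo_volume_ml, density_g_per_ml=1.05):
    """Myocardial mass from myocardial volume; the density is an assumed value."""
    return myo_volume_ml * density_g_per_ml


# Hypothetical pixel counts of the LV blood pool in five slices.
PIXEL_AREA_MM2 = 0.4 * 0.4   # in-plane resolution from the abstract
SLICE_SPACING_MM = 6.0       # assumption, not stated in the abstract

edv = volume_ml([5200, 6100, 6400, 5600, 3900], PIXEL_AREA_MM2, SLICE_SPACING_MM)
esv = volume_ml([2300, 2900, 3000, 2500, 1400], PIXEL_AREA_MM2, SLICE_SPACING_MM)
print(lv_function(edv, esv))
```

Because both volumes scale with the same voxel size, the ejection fraction depends only on the ratio of segmented pixels, whereas stroke volume and mass depend directly on the assumed slice spacing.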

The study was approved by the District Government of Lower Franconia, Germany (55.2.2-2532.2-1134-16). We included 11 female German Landrace pigs in the study. Myocardial infarction (MI) was induced in 7 of them by 90 min balloon catheter occlusion of the left anterior descending artery (LAD); the remaining 4 pigs served as sham controls and received the same procedure except for the occlusion. All pigs received a baseline MRI and 3 follow-up scans after infarct induction (3-4, 10-14 and ~60 days after MI). Short-axis cine images (gradient echo, TE: 3.18 ms, TR: 49.52 ms, FA: optimal, in-plane resolution: 0.4 x 0.4 mm) were acquired on a 7T MAGNETOM™ Terra system (Siemens Healthineers, Erlangen, Germany) using 3 in-house built 8Tx/16Rx coils.2 All images were manually segmented twice using Medis Suite 3.1 (Medis Medical Imaging Systems BV, Leiden, the Netherlands). The AI model is based on a pre-trained model described in a previous study.3 This model combines a U-Net architecture with a ResNet34 backbone and was re-trained for 200 epochs (100 epochs with the backbone weights frozen, followed by 100 epochs unfrozen). Only end-systolic and end-diastolic phases were used for transfer learning. Extensive data augmentation, including rotation, flipping, and brightness and contrast adjustments, was applied. Training and validation of the model were performed on 8 pigs (6:2 split), resulting in 556 and 212 images, respectively. Segmentation quality was tested blindly on image sets of the remaining 3 pigs (308 images).

Results
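
The DICE-scores reported in this section measure the overlap between two segmentations of the same image. A minimal sketch of the per-class computation on label masks is given below. The label encoding (0 = background, 1 = LV blood pool, 2 = myocardium), the function name, and the toy masks are assumptions made for illustration and are not taken from the study's pipeline; returning 1.0 when a class is absent from both masks is likewise just one possible convention.

```python
def dice(mask_a, mask_b, label):
    """Dice overlap 2|A∩B| / (|A| + |B|) for one class in two label masks.

    mask_a, mask_b: equally sized 2D lists of integer class labels.
    """
    size_a = size_b = overlap = 0
    for row_a, row_b in zip(mask_a, mask_b):
        for pixel_a, pixel_b in zip(row_a, row_b):
            in_a = pixel_a == label
            in_b = pixel_b == label
            size_a += in_a
            size_b += in_b
            overlap += in_a and in_b
    if size_a + size_b == 0:
        return 1.0  # class absent from both masks (assumed convention)
    return 2.0 * overlap / (size_a + size_b)


# Toy 4x4 masks: the prediction assigns one blood-pool pixel to the myocardium.
manual = [
    [0, 2, 2, 0],
    [2, 1, 1, 2],
    [2, 1, 1, 2],
    [0, 2, 2, 0],
]
predicted = [
    [0, 2, 2, 0],
    [2, 1, 1, 2],
    [2, 2, 1, 2],
    [0, 2, 2, 0],
]

print("LV:", dice(manual, predicted, label=1))
print("Myocardium:", dice(manual, predicted, label=2))
```

In this toy case a single mislabeled pixel lowers the score for both classes, which mirrors how papillary muscle assigned to the myocardium affects the blood pool and the myocardial mass at the same time.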

During the frozen phase, the loss fell on both the training and validation sets. While the training loss continued to fall in the unfrozen phase, the validation loss slowly increased (Figure 2A). The DICE-scores of the validation set for the left ventricle and myocardium, both separately and combined, increased for about the first 60 epochs and then reached a plateau (Figure 2B). Coefficients of variability (CoV) for inter- and intra-observer variability were calculated as the standard deviation of the difference divided by the mean of the two measurements. For ejection fraction (EF), we obtain CoVs of 4.6% (inter-observer) and 3.5% (intra-observer). DICE-scores for cine segmentation were 0.87 (LV) and 0.85 (myocardium) for the comparison of manual segmentation and AI prediction, and 0.92 (LV) and 0.89 (myocardium) for repeated measurements by the same observer (Figure 3). Inter- and intra-observer agreement were analyzed using Bland-Altman plots and Pearson correlation plots of value pairs from all 44 scans (Figure 4). The Bland-Altman plots show a systematic deviation of the mean value, indicating that the AI overestimates the myocardial mass and underestimates the volumes. AI prediction and manual segmentation show a strong correlation (r=0.95 for EF), comparable to intra-observer variability (r=0.97). The AI model detects epi- and endocardial contours reliably in all phases of the cardiac cycle, and papillary muscles are excluded (Figure 5A and B). In rare cases (<1% of images), recognition is severely disturbed (Figure 5C). After AI segmentation, 30 seconds are enough to manually adjust the contours of one scan (end-diastolic and end-systolic images).

Discussion
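
The agreement statistics reported in the Results and discussed here (CoV, Bland-Altman bias and limits of agreement, Pearson's r) can be sketched as follows. This is one reading of the CoV definition in the text, namely the standard deviation of the paired differences divided by the mean of all measurements; the function names and the EF values are hypothetical and serve only to show the calculation.

```python
from math import sqrt
from statistics import mean, stdev


def paired_differences(first, second):
    """Differences second - first for paired measurements."""
    return [b - a for a, b in zip(first, second)]


def cov_percent(first, second):
    """CoV in %: SD of the paired differences over the mean of all values."""
    grand_mean = mean(list(first) + list(second))
    return 100.0 * stdev(paired_differences(first, second)) / grand_mean


def bland_altman(first, second):
    """Bias (mean difference) and 95% limits of agreement (bias ± 1.96 SD)."""
    diffs = paired_differences(first, second)
    bias = mean(diffs)
    spread = 1.96 * stdev(diffs)
    return bias, bias - spread, bias + spread


def pearson_r(first, second):
    """Pearson correlation coefficient of two paired samples."""
    mean_x, mean_y = mean(first), mean(second)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(first, second))
    sxx = sum((a - mean_x) ** 2 for a in first)
    syy = sum((b - mean_y) ** 2 for b in second)
    return sxy / sqrt(sxx * syy)


# Hypothetical EF values in % (manual vs. AI); not data from this study.
manual_ef = [55.0, 48.0, 60.0, 42.0]
ai_ef = [53.0, 47.0, 59.0, 40.0]

bias, lower, upper = bland_altman(manual_ef, ai_ef)
print("CoV (%):", cov_percent(manual_ef, ai_ef))
print("Bias and limits of agreement:", bias, lower, upper)
print("Pearson r:", pearson_r(manual_ef, ai_ef))
```

A non-zero bias with narrow limits of agreement, as in this toy data, corresponds to the kind of systematic deviation described for the AI predictions: the two methods correlate strongly even though one reads consistently lower.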

Bland-Altman and correlation plots indicate high inter- and intra-observer agreement. The systematic deviation in the Bland-Altman plots is most likely due to the tendency of the AI to allocate parts of the papillary muscles to the myocardial mass, which consequently underestimates the volume of the blood pool. We observe this especially in apical layers, where the blood-tissue contrast is lower. In some apical slices without visible lumen, the AI model could not detect contours. Other obstacles to proper segmentation were low SNR and image artefacts such as susceptibility artefacts, which are characteristic of high field strengths, although segmentation failed only for severe artefacts. Compared to the segmentation of the software-integrated tool (Figure 1B), visual inspection of the automatic AI segmentation showed a clear improvement (Figure 5A). With regard to the CoV, our values are within the range of those already reported (intra-observer CoV: 2.3-5.2%, inter-observer CoV: 2.9-6.0% for EF)4. The CoV for the intra-observer variability of the AI model is 0% due to consistent predictions.

Conclusion

Although the pre-trained network was trained with human data at clinical field strengths, after transfer learning, the segmentation performed well on ultrahigh field (7T) images of healthy and pathologic porcine hearts. We have already achieved good results with comparatively small data sets and without hyperparameter tuning, which leads us to assume good generalization. We expect that the quality can be optimized using all cardiac phases and an improved training process.

Acknowledgements

Financial support: German Ministry of Education and Research (BMBF, grant: 01E1O1504).

Thanks to Julia Aures for exchanging views on data analysis.

Parts of this work will be used in the doctoral thesis of Alena Kollmann.

References

1. Schulz-Menger J, Bluemke DA, Bremerich J, et al. Standardized image interpretation and post-processing in cardiovascular magnetic resonance - 2020 update. J Cardiovasc Magn Reson. 2020;22(1):19

2. Elabyad IA, Terekhov M, Lohr D, et al. A Novel Mono-surface Antisymmetric 8Tx/16Rx Coil Array for Parallel Transmit Cardiac MRI in Pigs at 7T. Sci Rep. 2020;10:3117

3. Ankenbrand MJ, Lohr D, Schlötelburg W, Reiter T, Wech T, Schreiber LM. Deep learning-based cardiac cine segmentation: Transfer learning application to 7T ultrahigh-field MRI. Magn Reson Med. 2021;86:2179-2191

4. Luijnenburg SE, Robbers-Visser D, Moelker A, Vliegen HW, Mulder BJ, Helbing WA. Intra-observer and interobserver variability of biventricular function, volumes and mass in patients with congenital heart disease measured by CMR imaging. Int J Cardiovasc Imaging. 2010;26(1):57-64

Figures

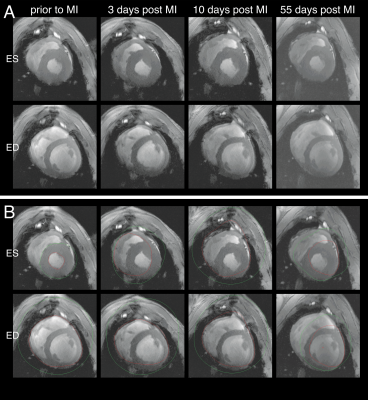

Figure 1 A: Short-axis cine in-vivo images of a porcine heart at 4 different time points. Depicted are the end-systolic (ES) and the end-diastolic (ED) images. B: Endo- (red) and epicardial (green) contours from automatic segmentation in a commercial program (trained on human data). Image contrast was subsequently increased to improve the visibility of the contours.

Figure 2 A: Training and validation loss over time. The model was trained with frozen backbone weights for 100 epochs, then unfrozen for another 100. Smoothed lines (loess) with standard error in the foreground and exact values in the background. B: DICE-scores in the validation set throughout training, with the same training schedule and the same smoothing as in A.

Figure 4 A: Bland-Altman plots (left) and Pearson correlation (right) for intra-observer variability (repeated measurement of one observer). B: Bland-Altman plots (left) and Pearson correlation (right) for inter-observer variability (values using manual segmentation compared to values using segmentation of the AI).

Figure 5 A: Endo- (red) and epicardial (green) contours drawn by the AI. The same images as in Figure 1A are displayed. B: Segmentation of different phases of the cardiac cycle. C: Examples of automatic segmentation failure. Low signal-to-noise ratio (SNR) in the posterior wall (left) or artefacts in the infarct area (middle) prevent correct segmentation in very few cases. Rarely, there are problems in apical layers where no contour is drawn due to the lack of a visible blood pool (right). Image contrast was subsequently increased to improve the visibility of the contours.