1683

Automated breast segmentation using deep-learning in water-fat breast MRI: application to breast density assessment

Arun Somasundaram1, Mingming Wu1, Tabea Borde1, Marcus R. Makowski1, Eva M. Fallenberg1, Yu Zhao2, and Dimitrios C. Karampinos1,3

1Department of Diagnostic and Interventional Radiology, School of Medicine, Technical University of Munich, Munich, Germany, 2AI Lab, Tencent, Shenzhen, China, 3Munich Institute of Biomedical Engineering, Technical University of Munich, Munich, Germany

Synopsis

Conventional semi-automatic and rule-based methods for breast segmentation on MR images trade accuracy against segmentation speed. To overcome this, several 2D deep-learning approaches have been proposed, most of which train their models on T1-weighted images. Our aim is to train a 3D network on water-fat MR images for breast segmentation, so that breast density can be measured from the proton density fat fraction (PDFF). We show that our model segments both quickly and accurately, and that its masks can be visually more consistent than our ground truth segmentations while requiring only a few seconds to generate.

Introduction

Breast segmentation is an important prerequisite for tasks such as algorithmic lesion detection and MRI-based breast density estimation1; breast density is a known risk factor for breast cancer2. Breast density can be estimated either by using the segmentation masks as a starting point to further segment fibroglandular tissue3,4,5, or by using the mean proton density fat fraction (PDFF) within the breast masks, which has been shown to correlate negatively with mammographic estimates of breast density6.

Conventional breast segmentation methods can be classified as semi-automatic or automatic: semi-automatic methods require manual labeling of landmarks or contours before mask generation7,3, and automatic methods use rule-based or atlas-based techniques4,1,5,8. Both usually require additional manual corrections, which add to the time and resources needed. An alternative to knowledge-based approaches is to devise algorithms that learn these rules automatically from the available data, as the aforementioned atlas-based methods do, or to use deep learning (DL).

Existing DL approaches use 2D networks, such as the 2D-UNet9, trained on axial slices10,11,12. However, such networks cannot learn contextual information from the full 3D volume. 3D networks such as the 3D-UNet13 can instead learn from the full 3D data14.

The present work implements a fast and fully automatic method for breast segmentation from water-fat MRI data using a DL-based 3D network that minimizes, or eliminates, the need for manual intervention, and applies this segmentation method to breast PDFF mapping.

Materials and Methods

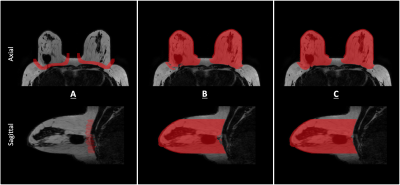

Scans were performed on a 3T MRI scanner (Philips Ingenia; Best, The Netherlands) using a six-echo gradient-echo sequence for water-fat imaging, with the following imaging parameters: acquisition voxel size: 1.7 mm isotropic; FOV: AP 220 mm, RL 440 mm, FH 190 mm; flip angle: $$$3^{\circ}$$$.

Water-fat separation was performed accounting for known confounding effects (multi-peak fat spectrum, single T2* decay) to obtain volumetric water-only and fat-only images, as well as PDFF and T2* maps, with two matrix sizes: (640, 640, 192) and (784, 784, 192).

For each subject, the breast was semi-automatically segmented6 using the water-only and fat-only images and manually marked contours, as shown in Fig.1. Manual delineation took 15 to 20 minutes per subject, and manual corrections 30 to 50 minutes. Because finer corrections would have required even more time, some of the labels still contain inconsistencies (Fig.4A).

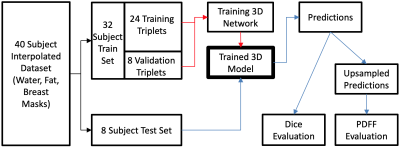

Water-only and fat-only images of 40 subjects and their corresponding cleaned labels were used. The images and labels were downsampled to a matrix size of (128, 128, 128), and the water and fat images were normalized to their standard score. The 40 triplets were randomly split into Train and Test sets of sizes 32 and 8, respectively. The 3D-UNet architecture13, detailed in Fig.2, was trained on this data set (PyTorch, 24GB NVIDIA Quadro6000 GPU). The training time was approximately 3 hours, and the best performing network on the validation split was chosen as the final model. The steps for model creation and evaluation are shown in Fig.3.
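
The preprocessing described above can be sketched in a few lines of NumPy. This is an illustrative sketch only, not the authors' code: the function names (`downsample_nearest`, `zscore`, `make_input`, `split_subjects`) are hypothetical, and nearest-neighbour resampling is an assumption, since the interpolation method is not stated in this abstract.

```python
import numpy as np


def downsample_nearest(volume, target_shape=(128, 128, 128)):
    """Resample a 3D array to target_shape by nearest-neighbour lookup.

    Assumption: the abstract does not state which interpolation was used.
    """
    index_per_axis = [
        # Centre of each target voxel mapped back to a source index.
        np.minimum((np.arange(t) + 0.5) * s / t, s - 1).astype(int)
        for s, t in zip(volume.shape, target_shape)
    ]
    return volume[np.ix_(*index_per_axis)]


def zscore(volume, eps=1e-8):
    """Normalize a volume to its standard score (zero mean, unit variance)."""
    return (volume - volume.mean()) / (volume.std() + eps)


def make_input(water, fat, target_shape=(128, 128, 128)):
    """Build the 2-channel network input from water-only and fat-only volumes."""
    channels = [
        zscore(downsample_nearest(v, target_shape).astype(np.float32))
        for v in (water, fat)
    ]
    return np.stack(channels, axis=0)  # shape: (2, *target_shape)


def split_subjects(n_subjects=40, n_test=8, seed=0):
    """Randomly split subject indices into Train and Test sets (32 and 8 here)."""
    order = np.random.default_rng(seed).permutation(n_subjects)
    return order[n_test:], order[:n_test]
```

With the matrix sizes reported above, `make_input` would map a (640, 640, 192) water/fat pair to a (2, 128, 128, 128) array; the label volume would be passed through `downsample_nearest` alone, without normalization.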

Results

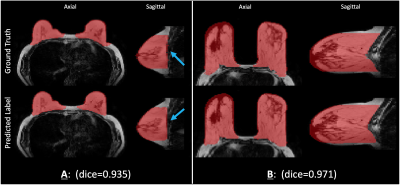

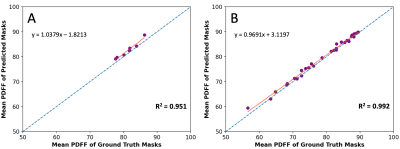

The predicted masks on the test set have high accuracy, with an average Dice score of $$$0.955\pm 0.020$$$. Fig.4A and 4B show predicted masks for two test set subjects with differing breast morphologies. Fig.4A also shows the generalization capability of the network: even where the ground truth labels contain varying levels of inconsistency, the network prediction is consistent. The Pearson coefficient between the mean PDFF values of the upsampled Test set predictions and those of the ground truth masks is 0.973, as shown in Fig.5. The execution time for predicting masks for 8 subjects, including the time to load the data and the trained network, was about 38 seconds.

Discussion

This study applies a 3D-DL framework to automate breast segmentation and PDFF-based breast density extraction. Water-fat Dixon MR images were collected, semi-automatically segmented, and used to train a 3D model in a lower-resolution space. The final network generalizes well and performs accurately on unseen input, without requiring a postprocessing step before evaluation. In addition, the downsampling does not hinder the model's usefulness for density estimation, as shown by the high Pearson coefficient between the PDFF values of the upsampled predictions and the ground truth. Generating segmentation masks with the network is substantially faster than semi-automatic segmentation, and the resulting segmentations are visually consistent.

This study has three limitations. First, the training dataset is small, and neural network performance typically scales with data size15. Second, our data set does not represent different breast densities equally. Third, our choice to downsample the data was largely guided by hardware constraints. Ways to address these limitations include obtaining more labeled data and exploring advances in 3D-DL in terms of network architecture16 and resource efficiency17.

Conclusion

In summary, water and fat MR images were shown to be highly informative for our implemented 3D-UNet, which, even while constrained by hardware, generated accurate segmentations on unseen data and, unlike the semi-automatic method used to create the ground truth, required no manual fixes. In addition, the PDFF values of these masks were close to those of the manual masks, meaning that overcoming the aforementioned limitations can allow future studies to obtain accurately segmented breast masks for an automated PDFF-based extraction of breast density.

Acknowledgements

This work was supported in part by the European Research Council (grant agreement No 677661, ProFatMRI). The authors also acknowledge research support from Philips Healthcare.

References

- Wang L, Platel B, Ivanovskaya T, Harz M, Hahn HK. 2012. Fully automatic breast segmentation in 3d breast mri. In: 2012 9th IEEE International Symposium on Biomedical Imaging (ISBI). IEEE. p. 1024–1027.

- Lee SH, Ryu HS, Jang Mj, Yi A, Ha SM, Kim SY, Chang JM, Cho N, Moon WK. 2021. Glandular tissue component and breast cancer risk in mammographically dense breasts at screening breast us. Radiology 301:57–65.

- Nie K, Chen JH, Chan S, Chau MKI, Yu HJ, Bahri S, Tseng T, Nalcioglu O, Su MY. 2008. Development of a quantitative method for analysis of breast density based on three-dimensional breast mri. Medical physics 35:5253–5262.

- van der Velden BH, Janse MH, Ragusi MA, Loo CE, Gilhuijs KG. 2020. Volumetric breast density estimation on mri using explainable deep learning regression. Scientific Reports 10:1–9.

- Gubern-Merida A, Kallenberg M, Mann RM, Marti R, Karssemeijer N. 2014. Breast segmentation and density estimation in breast mri: a fully automatic framework. IEEE journal of biomedical and health informatics 19:349–357.

- Borde T, Wu M, Ruschke S, Böhm C, Weiss K, Metz S, Makowski MR, Karampinos D. 2021. Assessing breast density using the standardized proton density fat fraction based on chemical shift encoding-based water-fat separation. In: ISMRM 2021. p. 743.

- Gilhuijs KG, Giger ML, Bick U. 1998. Computerized analysis of breast lesions in three dimensions using dynamic magnetic-resonance imaging. Medical physics 25:1647–1654.

- Wu S, Weinstein SP, Conant EF, Kontos D. 2013. Automated fibroglandular tissue segmentation and volumetric density estimation in breast mri using an atlas-aided fuzzy c-means method. Medical physics 40:122302.

- Ronneberger O, Fischer P, Brox T. 2015. U-net: Convolutional networks for biomedical image segmentation. In: International Conference on Medical image computing and computer-assisted intervention. Springer. p. 234–241.

- Ivanovska T, Jentschke TG, Daboul A, Hegenscheid K, Völzke H, Wörgötter F. 2019. A deep learning framework for efficient analysis of breast volume and fibroglandular tissue using mr data with strong artifacts. International journal of computer assisted radiology and surgery 14:1627–1633.

- Dalmış MU, Litjens G, Holland K, Setio A, Mann R, Karssemeijer N, Gubern-Mérida A. 2017. Using deep learning to segment breast and fibroglandular tissue in mri volumes. Medical physics 44:533–546.

- Zhang Y, Chen JH, Chang KT, Park VY, Kim MJ, Chan S, Chang P, Chow D, Luk A, Kwong T, et al. 2019. Automatic breast and fibroglandular tissue segmentation in breast mri using deep learning by a fully-convolutional residual neural network u-net. Academic radiology 26:1526–1535.

- Çiçek Ö, Abdulkadir A, Lienkamp SS, Brox T, Ronneberger O. 2016. 3d u-net: learning dense volumetric segmentation from sparse annotation. In: International conference on medical image computing and computer-assisted intervention. Springer. p. 424–432.

- Ha R, Chang P, Mema E, Mutasa S, Karcich J, Wynn RT, Liu MZ, Jambawalikar S. 2019. Fully automated convolutional neural network method for quantification of breast mri fibroglandular tissue and background parenchymal enhancement. Journal of digital imaging 32:141–147.

- LeCun Y, Bengio Y, Hinton G. 2015. Deep learning. Nature 521:436–444.

- Hatamizadeh A, Tang Y, Nath V, Yang D, Myronenko A, Landman B, Roth H, Xu D. 2021. Unetr: Transformers for 3d medical image segmentation. arXiv preprint arXiv:2103.10504.

- Alalwan N, Abozeid A, ElHabshy AA, Alzahrani A. 2021. Efficient 3d deep learning model for medical image semantic segmentation. Alexandria Engineering Journal 60:1231–1239.

- Wu Y, He K. 2018. Group normalization. In: Proceedings of the European conference on computer vision (ECCV). p. 3–19.

- Kingma DP, Ba J. 2014. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980.

Figures

Semi-automatic segmentation6, axial and sagittal view, masks overlaid on fat-only image. (A) Manual delineation of the breast parenchyma from the pectoralis muscle with ITK-Snap. (B) Dilating the contours along the z-axis, calculating the left and right breast centroids from the dilated contours, and using these centroids as seed points, along with the dilated contour, water-fat foreground and fat-only image, to separate breast from body. Performed using MATLAB. (C) Manually correcting the resultant mask.

Implemented 3D UNet architecture. The network takes the downsampled water and fat images as 2-channel input and predicts the downsampled ground truth. Group normalization18 is used between convolutional layers instead of the default batch normalization13. Training parameters: loss function: $$$0.5\cdot \mathrm{Dice}(\mathrm{Pred}, \mathrm{GT}) + 0.5\cdot \mathrm{BinaryCrossEntropy}(\mathrm{Pred}, \mathrm{GT})$$$, learning rate: 0.1, batch size: 2, epochs: 200, patience: 50, train-val split: 24:8, optimizer: Adam19.
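
The combined loss in the caption above can be illustrated with a small NumPy sketch. It is written under stated assumptions rather than taken from the authors' PyTorch code: the Dice term is interpreted here as a soft Dice loss (one minus the soft Dice coefficient), `pred` is assumed to hold per-voxel foreground probabilities, and the function names and smoothing constants are hypothetical.

```python
import numpy as np


def soft_dice_loss(pred, gt, eps=1e-6):
    """1 - soft Dice coefficient between probabilities and a binary mask.

    Assumption: the caption's Dice term is a loss of this form.
    """
    intersection = np.sum(pred * gt)
    denominator = np.sum(pred) + np.sum(gt)
    return float(1.0 - (2.0 * intersection + eps) / (denominator + eps))


def binary_cross_entropy(pred, gt, eps=1e-7):
    """Mean voxel-wise binary cross-entropy; probabilities are clipped for stability."""
    p = np.clip(pred, eps, 1.0 - eps)
    return float(np.mean(-(gt * np.log(p) + (1.0 - gt) * np.log(1.0 - p))))


def combined_loss(pred, gt, w_dice=0.5, w_bce=0.5):
    """Equal-weight sum of the two terms, matching the 0.5/0.5 weights in the caption."""
    return w_dice * soft_dice_loss(pred, gt) + w_bce * binary_cross_entropy(pred, gt)
```

Combining an overlap-based term with a voxel-wise term is a common design choice for segmentation: the Dice term is insensitive to the large background volume, while the cross-entropy term gives a well-behaved gradient at every voxel.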

DL Pipeline. The dataset is first split into 2 sets: Train and Test. The red path shows the steps of training a 3D-DL segmentation model. The blue path shows the evaluation of this model. The model is evaluated on 2 aspects, each with its own metric: (1) Segmentation: Dice score between predictions and downsampled ground truth. (2) Breast density estimation: Similarity (Pearson coefficient) between the PDFF values of upsampled predictions and full-size ground truth masks.
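
The two evaluation metrics named in this caption can be sketched as follows. This is a minimal NumPy illustration, not the authors' evaluation code: the function names are hypothetical, masks are assumed to be binary arrays already brought to the same grid as the volume they are compared with, and the PDFF comparison is assumed to use one mean PDFF value per subject, as plotted in the correlation figure.

```python
import numpy as np


def dice_score(pred_mask, gt_mask):
    """Dice coefficient between two binary masks of identical shape."""
    pred = pred_mask.astype(bool)
    gt = gt_mask.astype(bool)
    total = pred.sum() + gt.sum()
    if total == 0:
        return 1.0  # both masks empty: treated as perfect agreement
    return float(2.0 * np.logical_and(pred, gt).sum() / total)


def mean_pdff(pdff_map, mask):
    """Mean PDFF over the voxels selected by a binary breast mask."""
    return float(pdff_map[mask.astype(bool)].mean())


def pearson(x, y):
    """Pearson correlation coefficient between two 1D sequences."""
    return float(np.corrcoef(x, y)[0, 1])
```

In use, `dice_score` would be computed per test subject on the downsampled grid and then averaged, while `pearson` would be applied to the per-subject `mean_pdff` values obtained from the upsampled predictions and from the full-size ground truth masks.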

Two examples of predicted breast segmentation masks compared with the ground truth, for water and fat image inputs taken from the test set with differing breast morphologies, together with their corresponding Dice scores. Axial and sagittal views are shown. In (A), the blue arrows indicate the posterior breast border, where the predicted mask appears smoother than the ground truth. All segmentations are overlaid on their respective fat images.

Correlation plot of mean PDFF between the ground truth breast mask and the upsampled predicted masks for the (A) Test set (Pearson: 0.973), and (B) Train set (Pearson: 0.996). The red line signifies the trendline of the point spread, and the blue line is the reference identity line.

DOI: https://doi.org/10.58530/2022/1683