1511

Brain age prediction using fusion deep learning combining pre-engineered and convolution-derived features

1Division of Mechanical and Biomedical Engineering, Ewha Womans University, Seoul, Korea, Republic of, 2Graduate Program in Smart Factory, Ewha Womans University, Seoul, Korea, Republic of, 3Ewha Brain Institute, Ewha Womans University, Seoul, Korea, Republic of, 4Department of Brain and Cognitive Sciences, Ewha Womans University, Seoul, Korea, Republic of, 5Graduate School of Pharmaceutical Sciences, Ewha Womans University, Seoul, Korea, Republic of

Synopsis

Prediction of biological brain age is important because its deviation from chronological age can serve as a biomarker for degenerative neurological disorders. In this study, we propose novel fusion deep learning algorithms that combine pre-engineered features with features extracted by a convolutional neural network (CNN) from T1-weighted MR images. Across all backbone CNN architectures, the fusion models improved prediction accuracy (mean absolute error (MAE) = 3.40–3.52) compared with feature-engineered regression alone (MAE = 4.58–5.15) and image-based CNN alone (MAE = 3.60–3.95). These results indicate that convolution-derived and pre-engineered features complement each other in predicting brain age.

Introduction

Degenerative neurological disorders can cause a disparity between the chronological age of a subject and the biological brain age [1,2,3,4,5], which raises the possibility of using the age difference as a biomarker for neurodegenerative disorders. [6] Earlier approaches to predicting biological brain age extracted atlas-based image features from brain MRI, which were then fed into traditional regression algorithms. [7] More recently, convolutional neural network (CNN) models, which receive the entire 3D image and output a prediction through automated extraction of relevant features, have shown great promise. [8] This study aimed to develop fusion neural network models that ensemble atlas-based and CNN-derived image features, under the hypothesis that the two feature types complement each other for improved prediction.

Materials and Methods

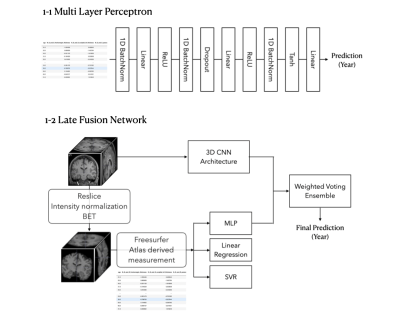

Figure 1 shows an overall schematic of the data preprocessing and the prediction model framework used in this study.

3D MR Image Dataset

Three-dimensional T1-weighted MR images of 2175 healthy subjects were obtained from 5 public datasets: Open fMRI dataset (https://openfmri.org/dataset/), INDI dataset (https://registry.opendata.aws/fcp-indi/), FCP1000 dataset (http://fcon_1000.projects.nitrc.org/), IXI dataset (https://brain-development.org/ixi-dataset/), and XNAT dataset (https://www.xnat.org). The subjects were aged between 20 and 70 years, with a mean of 40.3 years and a standard deviation of 16.6 years. For the prediction models, 1750 subjects were used for training and 435 for validation. All image preprocessing was performed using SPM and FreeSurfer (https://surfer.nmr.harvard.edu). [9] MR images were first resampled to a voxel size of 1.5 x 1.5 x 1.5 mm³, resliced to align with the axes of the head coordinate system, and then normalized so that every voxel value was transformed into an integer between 0 and 214. Non-brain tissues were removed using the Brain Extraction Tool (BET). [10]

Regression with atlas-based feature extraction

We selected 530 features that provide comprehensive anatomical information about the brain on a regional basis. The extracted measurements included: 1) thickness/area/volume values of 148 cortical regions based on the Destrieux atlas, 2) thickness/area/volume values of subcortical regions based on FreeSurfer subcortex segmentation, and 3) global measurements. Three machine learning algorithms were used for age prediction: (1) Elastic regression, (2) Support vector regression with a radial basis function kernel, and (3) Multi-layer perceptron (MLP) neural network. All model parameters were selected using 10-fold cross-validation and grid search. The MLP architecture is shown in Figure 1-1.
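The selection procedure (grid search scored by 10-fold cross-validation) can be sketched in a few lines. This is a minimal illustration, not the authors' pipeline: a one-feature ridge regression stands in for the three regressors, the data are synthetic, and the names `k_fold_indices`, `fit_ridge_1d`, `cv_mae`, and `grid_search` as well as the candidate `alphas` are hypothetical.

```python
import random
from statistics import mean

def k_fold_indices(n_samples, k=10, seed=0):
    """Shuffle sample indices and split them into k nearly equal folds."""
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]

def fit_ridge_1d(x, y, alpha):
    """Closed-form one-feature ridge regression (toy stand-in model)."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    slope = sxy / (sxx + alpha)
    return slope, my - slope * mx

def cv_mae(x, y, alpha, folds):
    """MAE on each held-out fold, averaged over all folds."""
    fold_errors = []
    for held_out in folds:
        held = set(held_out)
        train = [i for i in range(len(x)) if i not in held]
        slope, intercept = fit_ridge_1d(
            [x[i] for i in train], [y[i] for i in train], alpha
        )
        fold_errors.append(
            mean(abs(slope * x[i] + intercept - y[i]) for i in held_out)
        )
    return mean(fold_errors)

def grid_search(x, y, alphas, k=10):
    """Return the alpha with the lowest cross-validated MAE, plus all scores."""
    folds = k_fold_indices(len(x), k)
    scores = {alpha: cv_mae(x, y, alpha, folds) for alpha in alphas}
    return min(scores, key=scores.get), scores

# Synthetic data (assumption): one feature that shrinks with age, plus noise.
rng = random.Random(1)
ages = [rng.uniform(20, 70) for _ in range(200)]
feature = [3.0 - 0.01 * a + rng.gauss(0, 0.05) for a in ages]

alphas = [0.0, 0.1, 1.0, 10.0]
best_alpha, scores = grid_search(feature, ages, alphas)
print(best_alpha, round(scores[best_alpha], 2))
```

Every candidate is scored on the same folds, so differences in cross-validated MAE reflect the hyperparameter rather than the split.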

CNN

Four representative pretrained 3D CNN models were used for image-based prediction of brain age: (1) ResNet [11], (2) ResNeXt [12], (3) ShuffleNet [13], and (4) MobileNet [14]. L1 loss was used as the loss function, and the networks were optimized using Adam with a learning rate of 0.005 and a batch size of 8. Step-wise learning rate decay (Step LR scheduler) was used so that the learning rate was multiplied by 0.1 every 50 steps.
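The training schedule above can be written out as a small framework-free sketch. The function names `step_lr` and `l1_loss` are hypothetical, and whether a "step" is an epoch or an iteration is not stated in the text, so the sketch simply counts scheduler steps.

```python
def step_lr(step, base_lr=0.005, gamma=0.1, step_size=50):
    """Learning rate after `step` scheduler steps under step-wise decay.

    The rate is multiplied by `gamma` once every `step_size` steps.
    """
    return base_lr * gamma ** (step // step_size)

def l1_loss(predicted, target):
    """Mean absolute difference between predicted and true ages."""
    return sum(abs(p - t) for p, t in zip(predicted, target)) / len(target)

# The rate stays at 0.005 for steps 0-49, then drops tenfold at step 50
# and tenfold again at step 100.
for s in (0, 49, 50, 100):
    print(s, step_lr(s))

# Toy batch (assumed values): absolute errors of 2 and 3 years.
print(l1_loss([40.0, 52.0], [38.0, 55.0]))
```

L1 loss is the same quantity as the MAE used for evaluation, so the networks are optimized directly for the reported metric.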

Fusion ensemble learning

Fusion ensemble learning was implemented by combining feature-based regression models and image-based CNN models. Atlas-based image features and the entire raw image were used to train each model separately, and the final decision was made using an aggregation function that combines the predictions of the individual models. [15] After the feature-based MLP and the 3D CNN were fine-tuned independently, the final prediction was obtained by a weighted voting ensemble.
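A weighted voting ensemble of two regressors reduces to a convex combination of their predictions. The sketch below is an illustration under stated assumptions: the text does not say how the weights were chosen, so a grid search minimizing validation MAE is assumed here, and the function names and all age values are made up for the example.

```python
def mae(pred, true):
    """Mean absolute error in years."""
    return sum(abs(p - t) for p, t in zip(pred, true)) / len(true)

def weighted_vote(pred_mlp, pred_cnn, w_cnn):
    """Convex combination: w_cnn on the CNN, (1 - w_cnn) on the MLP."""
    return [w_cnn * c + (1.0 - w_cnn) * m for m, c in zip(pred_mlp, pred_cnn)]

def best_weight(pred_mlp, pred_cnn, true, n_grid=21):
    """Pick the CNN weight in [0, 1] with the lowest validation MAE (assumed rule)."""
    candidates = [i / (n_grid - 1) for i in range(n_grid)]
    return min(
        candidates,
        key=lambda w: mae(weighted_vote(pred_mlp, pred_cnn, w), true),
    )

# Hypothetical validation predictions (not data from the study).
true_age = [25.0, 34.0, 47.0, 58.0, 66.0]
pred_mlp = [30.0, 31.0, 52.0, 54.0, 70.0]
pred_cnn = [22.0, 37.0, 44.0, 61.0, 63.0]

w = best_weight(pred_mlp, pred_cnn, true_age)
fused = weighted_vote(pred_mlp, pred_cnn, w)
print("MLP MAE:  ", mae(pred_mlp, true_age))
print("CNN MAE:  ", mae(pred_cnn, true_age))
print("fused MAE:", mae(fused, true_age), "with CNN weight", w)
```

In this toy data the two models tend to err in opposite directions, which is the situation in which averaging helps most; when the errors are strongly correlated, the fused MAE approaches that of the better single model.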

Results

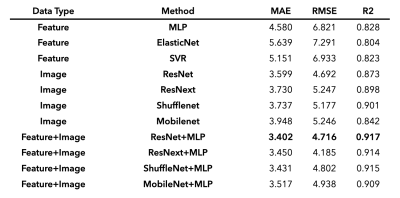

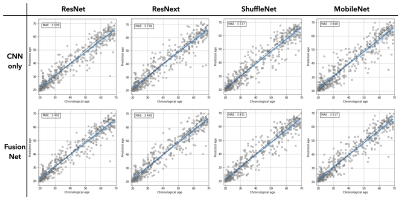
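For reference, the three accuracy metrics reported in this section (MAE, RMSE, and R²) follow their standard definitions, sketched below. The ages in the example are invented for illustration and are not results from the study.

```python
from math import sqrt

def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

def rmse(y_true, y_pred):
    """Root mean square error."""
    return sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))

def r2(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot

# Invented chronological and predicted ages (years).
y_true = [20.0, 30.0, 40.0, 50.0]
y_pred = [22.0, 29.0, 43.0, 46.0]

print(mae(y_true, y_pred), rmse(y_true, y_pred), r2(y_true, y_pred))
```

MAE and RMSE are in years, with RMSE penalizing large individual errors more heavily, while R² is unitless and measures the fraction of age variance explained by the predictions.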

The accuracy of the prediction models was evaluated by mean absolute error (MAE), root mean square error (RMSE), and coefficient of determination (R²), as summarized in Table 1. Using atlas-based features only, the MLP had the lowest MAE (4.580 years) and was therefore chosen as the model to be fused with the CNN models. Using the entire 3D volume only, all CNN models outperformed the models using atlas-based features only (MAE = 3.599–3.948 vs. 4.580–5.151 years). When the MLP and CNN predictions were aggregated through the fusion model, prediction accuracy improved further for every CNN tested. The minimum MAE was achieved by the ResNet+MLP fusion model (MAE = 3.40 years), while the improvement was largest for MobileNet, which had the largest MAE when used alone. We also observed a larger improvement for subjects in their 30s and 40s, age ranges that were sparsely represented relative to the others (Figure 2).

Discussion

We have shown that combining feature-engineered regression and raw-image-based CNN improves the accuracy of age prediction compared to using each model independently. While we tested relatively simple CNN models to focus on the effect of the combination, further investigations are underway to improve the prediction accuracy, including optimization of CNN architectures and mitigation of the effect of imbalanced age distribution in training samples.

Acknowledgements

This research was supported by the Basic Science Research Program through the National Research Foundation of Korea (NRF) funded by the Ministry of Education (NRF-2020R1A6A1A03043528).

References

[1] Franke, Katja, and Christian Gaser. "Longitudinal changes in individual BrainAGE in healthy aging, mild cognitive impairment, and Alzheimer’s disease." GeroPsych (2012).

[2] Löwe, Luise Christine, et al. "The effect of the APOE genotype on individual BrainAGE in normal aging, mild cognitive impairment, and Alzheimer’s disease." PloS one 11.7 (2016): e0157514.

[3] Schnack, Hugo G., et al. "Accelerated brain aging in schizophrenia: a longitudinal pattern recognition study." American Journal of Psychiatry 173.6 (2016): 607-616.

[4] Pardoe, Heath R., et al. "Structural brain changes in medically refractory focal epilepsy resemble premature brain aging." Epilepsy research 133 (2017): 28-32.

[5] Corps, Joshua, and Islem Rekik. "Morphological brain age prediction using multi-view brain networks derived from cortical morphology in healthy and disordered participants." Scientific reports 9.1 (2019): 1-10.

[6] Cole, James H., et al. "Brain age predicts mortality." Molecular psychiatry 23.5 (2018): 1385-1392.

[7] Jónsson, Benedikt Atli, et al. "Brain age prediction using deep learning uncovers associated sequence variants." Nature communications 10.1 (2019): 1-10.

[8] Cole, James H., et al. "Predicting brain age with deep learning from raw imaging data results in a reliable and heritable biomarker." NeuroImage 163 (2017): 115-124.

[9] Fischl, Bruce. "FreeSurfer." Neuroimage 62.2 (2012): 774-781.

[10] Smith, Stephen M. "Fast robust automated brain extraction." Human brain mapping 17.3 (2002): 143-155.

[11] He, Kaiming, et al. "Deep residual learning for image recognition." Proceedings of the IEEE conference on computer vision and pattern recognition. 2016.

[12] Xie, Saining, et al. "Aggregated residual transformations for deep neural networks." Proceedings of the IEEE conference on computer vision and pattern recognition. 2017.

[13] Zhang, Xiangyu, et al. "Shufflenet: An extremely efficient convolutional neural network for mobile devices." Proceedings of the IEEE conference on computer vision and pattern recognition. 2018.

[14] Howard, Andrew G., et al. "Mobilenets: Efficient convolutional neural networks for mobile vision applications." arXiv preprint arXiv:1704.04861 (2017).

[15] Huang, Shih-Cheng, et al. "Fusion of medical imaging and electronic health records using deep learning: a systematic review and implementation guidelines." NPJ digital medicine 3.1 (2020): 1-9.

Figures