1428

Understanding Alzheimer's disease through fMRI and deep learning

1Brain and Mind Centre, The University of Sydney, Sydney, Australia, 2School of Biomedical Engineering, The University of Sydney, Sydney, Australia

Synopsis

Deep learning approaches for Alzheimer's disease (AD) classification have primarily focused on modelling the structural changes associated with the condition, neglecting changes in functional brain dynamics. These functional changes may be detectable earlier than structural atrophy, providing an avenue for earlier diagnosis and treatment. We therefore propose a convolutional neural network combined with a long short-term memory unit to decode resting-state fMRI signals. The model classified AD versus healthy controls with a balanced accuracy of 0.69. Whilst there is room to improve network performance, the study already provides promising insights into the possibilities of resting-state fMRI classification.

Introduction

Alzheimer's disease (AD) is a neurodegenerative disorder resulting in the degeneration of brain tissue. Magnetic resonance imaging (MRI) can identify the pathological changes associated with late stages of the disease, including structural atrophy of the hippocampus and cerebral cortices1. However, interpretation of these images is limited by physician subjectivity, which can result in missed findings and long turn-around times2. Attention has therefore shifted to the use of convolutional neural networks (CNNs) to model the structural changes associated with AD on MRI1,2. Yet AD is not just a structural disorder: patients experience a progressive decline in memory and executive function2. Because structural changes often remain undetected until later stages of the disease, differences in functional brain dynamics between AD patients and healthy individuals may provide an avenue for earlier diagnosis. Despite this, most previous studies applying deep learning to AD classification have focused only on structural atrophy, ignoring the changing functional dynamics of the brain.

Methods

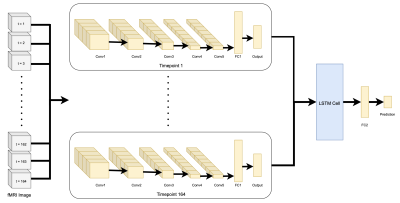

This study used the publicly available OASIS-3 neuroimaging dataset3 to train and test the network. Resting-state fMRI scans of 80 AD patients and 197 healthy controls (HC) were used. T1-weighted and resting-state sequences were preprocessed using the fMRIPrep toolbox. The model consisted of 3D convolutional layers followed by a long short-term memory (LSTM) cell. Each timepoint (3D volume) of the fMRI series was passed through the CNN separately to extract spatial features. The resulting feature vectors were then fed chronologically into the LSTM cell to extract temporal information from the fMRI signal, and its output was passed to a final fully connected layer to produce a prediction. An illustration of the model can be seen in Figure 1.
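The per-timepoint CNN followed by an LSTM can be sketched in PyTorch as below. This is a minimal illustration under stated assumptions, not the authors' implementation: the class name `CNNLSTMClassifier`, the number of convolutional layers, the channel widths, the pooling and the LSTM hidden size are all hypothetical, since the abstract does not report them. The key idea it shows is folding the time axis into the batch so that one shared 3D CNN processes every volume, then restoring the time axis so the LSTM reads the volume features in chronological order.

```python
import torch
import torch.nn as nn


class CNNLSTMClassifier(nn.Module):
    """Hypothetical CNN + LSTM classifier for 4D fMRI input (time, depth, height, width).

    Layer counts, channel widths and hidden size are illustrative assumptions,
    not the configuration used in the study.
    """

    def __init__(self, hidden_size: int = 64, num_classes: int = 2):
        super().__init__()
        # Shared 3D CNN applied to each timepoint (volume) independently.
        self.cnn = nn.Sequential(
            nn.Conv3d(1, 8, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.MaxPool3d(2),
            nn.Conv3d(8, 16, kernel_size=3, padding=1),
            nn.ReLU(),
            nn.AdaptiveAvgPool3d(1),  # -> (N, 16, 1, 1, 1) for any volume size
            nn.Flatten(),             # -> (N, 16)
        )
        # LSTM reads the per-volume feature vectors in chronological order.
        self.lstm = nn.LSTM(input_size=16, hidden_size=hidden_size, batch_first=True)
        # Fully connected layer maps the final hidden state to class logits.
        self.fc = nn.Linear(hidden_size, num_classes)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # x: (batch, time, depth, height, width)
        b, t, d, h, w = x.shape
        # Fold time into the batch so every volume passes through the same CNN.
        feats = self.cnn(x.reshape(b * t, 1, d, h, w))  # (b*t, 16)
        feats = feats.reshape(b, t, -1)                  # (b, t, 16)
        _, (h_n, _) = self.lstm(feats)                   # h_n: (1, b, hidden_size)
        return self.fc(h_n[-1])                          # (b, num_classes)
```

For example, `CNNLSTMClassifier()(torch.randn(4, 5, 16, 16, 16))` returns logits of shape `(4, 2)` for a batch of 4 subjects with 5 volumes each. Using the LSTM's final hidden state as a summary of the whole run is one common design choice; pooling over all LSTM outputs would be an alternative.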

Results

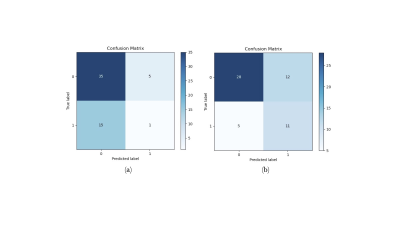

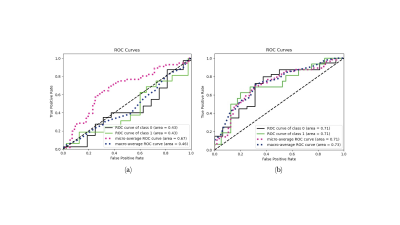

The original training approach, which used randomised batches, produced a balanced accuracy of only 0.47. The confusion matrix in Figure 2a shows that the model predicted healthy control far more often than AD, reflecting the class imbalance in the data. To address this, the network was retrained with each batch of 4 subjects containing an equal number of images from each class (2 per class). This balanced training improved the balanced accuracy to a maximum of 0.69. The corresponding confusion matrix is shown in Figure 2b, alongside that of the randomised-batch training. Receiver operating characteristic (ROC) curves for both training approaches are shown in Figure 3.

Discussion

Training the model on the entire dataset in random batches failed to produce a balanced accuracy above 0.5. This may be due to the large imbalance between classes. Imbalanced data is a common problem in medical imaging, since healthy samples often greatly outnumber pathological ones, especially for rarer disorders, and it can lead to inaccurate and biased predictions4. Here the network was trained on more than twice as many healthy controls as AD patients (197 vs 80), biasing it towards predicting healthy control. This led to a large proportion of false-negative predictions, as seen in Figure 2a, and hence a very poor balanced accuracy.

To combat this issue, each training batch was made to include 2 images from each class. This change alone increased the balanced accuracy to 0.69, suggesting that the class imbalance was a key reason for the false-negative predictions of the original training approach. However, because there are far fewer AD scans than HC scans, balancing the batches meant that fewer images were used per training epoch. Deep learning typically requires large amounts of labelled data, which is particularly difficult to obtain in medical imaging, where sufficiently labelled data is exceptionally limited3. Privacy and data protection concerns further restrict the sharing of medical images, so publicly available imaging data is scarce. Techniques that may help alleviate these problems include data augmentation and transfer learning4.
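Two points from this discussion, why balanced accuracy exposes a bias towards the majority class and how balanced batches shrink each epoch, can be illustrated with a short Python sketch. The function names `balanced_accuracy` and `balanced_batches` and the label encoding (1 = AD, 0 = HC) are hypothetical. The abstract does not state how healthy controls were selected for each epoch; the sketch assumes every AD subject is used once per epoch and controls are randomly undersampled to match, which is consistent with fewer images being used per epoch.

```python
import random


def balanced_accuracy(y_true, y_pred, positive=1):
    """Mean of sensitivity (recall on AD) and specificity (recall on HC).

    Assumes label 1 = AD (positive class) and 0 = HC.
    """
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return (sensitivity + specificity) / 2


def balanced_batches(ad_ids, hc_ids, per_class=2, seed=None):
    """Yield one epoch of batches, each with `per_class` AD and `per_class` HC subjects.

    Assumption: AD subjects are shuffled and used once; HC subjects are randomly
    undersampled (without replacement) to the same count. Subjects that do not
    fill a whole batch are dropped. Requires len(hc_ids) >= len(ad_ids).
    """
    rng = random.Random(seed)
    ad = list(ad_ids)
    rng.shuffle(ad)
    hc = rng.sample(list(hc_ids), len(ad))  # random undersampling of controls
    for i in range(len(ad) // per_class):
        s = slice(i * per_class, (i + 1) * per_class)
        yield ad[s] + hc[s]
```

With the study's class sizes, a model that always predicts healthy control reaches a plain accuracy of 197/277 ≈ 0.71 but a balanced accuracy of exactly 0.5, and a balanced epoch contains 40 batches (160 subjects) instead of all 277 subjects.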

Another limitation is the imaging modality itself. Whilst powerful in principle for early diagnosis, fMRI may be more limited in practice than structural MRI. fMRI is much more susceptible to noise, and noise or artifacts in the images can cause distortions that make interpretation more difficult5. Learning group differences from fMRI may therefore be harder than from structural MRI, which may explain why some previous structure-based approaches have achieved better performance1,6.

Conclusions

Whilst there is room for improvement, the study provides some promising avenues for further development of functional deep learning techniques for early AD diagnosis. The combination of a 3D CNN and an LSTM cell allowed the extraction of both spatial and temporal features for AD prediction. Training the network with balanced batches substantially improved model performance compared with training on the full unbalanced dataset, highlighting the importance of addressing class imbalance. Finding ways to train the model on more data should allow continued improvement of the network and may open up avenues for explainable AI, allowing us to identify the brain areas that exhibit the most functional change in the disease and potentially revealing new imaging biomarkers for clinical diagnosis.

Acknowledgements

This work has been supported by a University of Sydney-Brain and Mind Centre Research Development Grant. Furthermore, data were provided by OASIS-3: Principal Investigators: T. Benzinger, D. Marcus, J. Morris; NIH P50 AG00561, P30 NS09857781, P01 AG026276, P01 AG003991, R01 AG043434, UL1 TR000448, R01 EB009352.

References

- Islam, J. & Zhang, Y. (2018), “Brain MRI analysis for Alzheimer's disease diagnosis using an ensemble system of deep convolutional neural networks”, Brain Informatics 5(2), 1-14.

- Wen, J., Thibeau-Sutre, E., Diaz-Melo, M., Samper-González, J., Routier, A., Bottani, S., Dormont, D., Durrleman, S., Burgos, N. & Colliot, O. (2020), “Convolutional neural networks for classification of Alzheimer's disease: Overview and reproducible evaluation”, Medical Image Analysis 63, 101694-101694.

- LaMontagne, P.J., Benzinger, T.L.S., Morris, J.C., Keefe, S., Hornbeck, R., Xiong, C., Grant, E., Hassenstab, J., Moulder, K., Vlassenko, A., Raichle, M.E., Cruchaga, C., Marcus, D. (2019), “OASIS-3: Longitudinal Neuroimaging, Clinical, and Cognitive Dataset for Normal Aging and Alzheimer Disease”, medRxiv.

- Zhou, S. K., Greenspan, H., Davatzikos, C., Duncan, J. S., Van Ginneken, B., Madabhushi, A., Prince, J. L., Rueckert, D. & Summers, R. M. (2021), “A review of deep learning in medical imaging: Imaging traits, technology trends, case studies with progress highlights, and future promises”, Proceedings of the IEEE 109(5), 820-838.

- Parmar, H., Nutter, B., Long, R., Antani, S. & Mitra, S. (2020), “Spatiotemporal feature extraction and classification of Alzheimer's disease using deep learning 3D-CNN for fMRI data”, Journal of Medical Imaging 7(5), 056001-056001.

- Sarraf, S. & Tofighi, G. (2016), “Deep learning-based pipeline to recognize Alzheimer’s disease using fMRI data”, 2016 Future Technologies Conference (FTC), pp. 816-820.

Figures