1183

A general framework of synthesizing 129Xe MRI data for improved segmentation model training

1Medical Physics, Duke University, Durham, NC, United States, 2Mathematics, Duke University, Durham, NC, United States, 3Biomedical Engineering, Duke University, Durham, NC, United States, 4Biomedical Engineering, McGill University, Montreal, QC, Canada, 5Radiology, Duke University, Durham, NC, United States

Synopsis

Quantitative analysis of hyperpolarized 129Xe MRI requires segmentation of the thoracic cavity, a crucial step that is often the bottleneck in an otherwise fully automated pipeline. Deep learning methods are an attractive way to solve this problem, but they are limited by their large appetite for manually segmented training data. To this end, we propose a method to automatically synthesize both 129Xe ventilation MR images and their corresponding thoracic cavity masks using generative adversarial networks. This data augmentation technique can accelerate the training of deep learning segmentation models.

Introduction

Hyperpolarized 129Xe MRI has emerged as a promising means for quantitative characterization of lung function and disease progression 1,2. Such quantification involves an imaging pipeline whose most substantial bottleneck is accurate segmentation of the lung cavity; the de facto method remains painstaking manual segmentation by an expert reader. However, deep learning methods are now emerging that segment either a registered anatomical image or the xenon image directly3,4,5. One limitation in effectively training such models is data scarcity, driven by the cost of image acquisition and the time required to manually segment each image. It is therefore common to employ augmentation techniques to expand the training data, but these are prone to producing highly correlated images6. Here, we introduce a general framework for automatically synthesizing paired 129Xe MRI and thoracic cavity segmentations using generative adversarial networks (GANs)7. We then evaluate the degree to which such data improve deep learning segmentation performance on acquired 129Xe images.

Methods

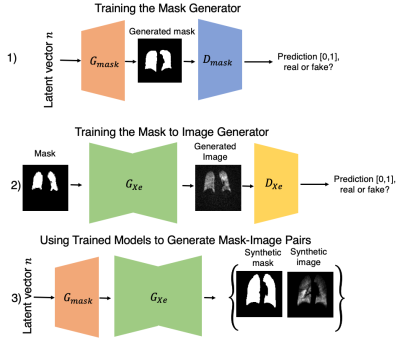

Model development

Figure 1 illustrates the workflow for generating synthetic 129Xe MRI and segmentation image pairs in a three-step process. First, a generative model $$$G_{mask}$$$ is trained to synthesize a thoracic cavity mask from a random noise vector. This is followed by training an image-to-image translation network $$$G_{Xe}$$$ to generate an image from an input mask. Thus, the operation $$$G_{Xe}(G_{mask}(n))$$$, where $$$n$$$ represents the noise vector, produces a random, synthetic image-mask pair that is perfectly registered. Such image-mask pairs can then be used as additional augmented data to train a segmentation network.
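The composition $$$G_{Xe}(G_{mask}(n))$$$ can be sketched as below. This is a minimal illustration, not the authors' code: `g_mask` and `g_xe` are hypothetical stand-ins (an ellipse generator and a mask-driven intensity map) for the trained Progressive GAN and pix2pix networks. They only show why a pair produced this way is registered by construction, since the image is computed from the mask itself.

```python
import numpy as np

H = W = 128        # in-plane matrix size of the 2D ventilation slices
LATENT_DIM = 128   # length of the noise vector n

def g_mask(n: np.ndarray) -> np.ndarray:
    """Hypothetical stand-in for the trained mask generator G_mask.

    Maps a noise vector to a binary, cavity-like mask (an ellipse whose
    semi-axes depend on the noise). The real G_mask is a trained GAN generator.
    """
    yy, xx = np.mgrid[0:H, 0:W]
    ry = 30 + 10 * np.tanh(n[0])   # semi-axis in pixels, within (20, 40)
    rx = 40 + 10 * np.tanh(n[1])   # semi-axis in pixels, within (30, 50)
    inside = ((yy - H / 2) / ry) ** 2 + ((xx - W / 2) / rx) ** 2 <= 1.0
    return inside.astype(np.uint8)

def g_xe(mask: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Hypothetical stand-in for the mask-to-image translator G_Xe.

    Places signal only inside the mask, plus faint background noise, so the
    output image is aligned with its input mask pixel for pixel.
    """
    signal = mask * (0.8 + 0.2 * rng.random(mask.shape))
    return signal + 0.02 * rng.random(mask.shape)

def synthesize_pair(rng: np.random.Generator):
    n = rng.standard_normal(LATENT_DIM)   # random noise vector n
    mask = g_mask(n)                      # G_mask(n)
    image = g_xe(mask, rng)               # G_Xe(G_mask(n))
    return image, mask

rng = np.random.default_rng(0)
pairs = [synthesize_pair(rng) for _ in range(4)]
```

Replacing the stand-ins with trained generators would leave `synthesize_pair` unchanged, which is the appeal of the two-stage design: each stage can be trained and swapped independently.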

Data collection and preparation

We collected and manually segmented 2D 129Xe ventilation MRI (128x128x14) from 73 subjects, a mix of healthy volunteers and patients, acquired on a Siemens Magnetom Trio (VB19A). The 3D volumes were first split into 5 folds for cross-validation and then separated into their 14 individual coronal slices. Only slices that contained the lung cavity were included (924 slices total).
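A minimal sketch of this preparation step follows, under assumptions the abstract does not state: slices are stored along the last array axis, subjects are shuffled and assigned to folds round-robin, and a slice "contains the lung cavity" if its manual mask has any nonzero pixel. Splitting whole volumes into folds before slicing keeps every slice of a subject in the same fold, which avoids leakage between training and validation. The function name `make_folds` and the toy data are hypothetical.

```python
import numpy as np

def make_folds(volumes, masks, n_folds=5, seed=0):
    """Split 3D volumes into folds, then expand each fold into 2D coronal slices.

    volumes/masks: lists of arrays shaped (H, W, n_slices), slice axis last (assumed).
    Returns n_folds lists of (image_slice, mask_slice, slice_number) tuples.
    """
    rng = np.random.default_rng(seed)
    order = rng.permutation(len(volumes))
    folds = [[] for _ in range(n_folds)]
    for rank, subj in enumerate(order):
        fold = folds[rank % n_folds]            # round-robin assignment of subjects
        vol, msk = volumes[subj], masks[subj]
        for k in range(vol.shape[-1]):
            if msk[..., k].any():               # keep only slices containing lung cavity
                fold.append((vol[..., k], msk[..., k], k + 1))  # 1-based slice number
    return folds

# Toy data: 73 small volumes with "lung" present in slices 2-13 only.
rng = np.random.default_rng(1)
vols, msks = [], []
for _ in range(73):
    vols.append(rng.random((16, 16, 14)))
    m = np.zeros((16, 16, 14), dtype=bool)
    m[4:12, 3:13, 1:13] = True
    msks.append(m)
folds = make_folds(vols, msks)
```

With 73 subjects, round-robin assignment gives folds of 15, 15, 15, 14, and 14 subjects; in the toy data each contributes 12 slices.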

Model selection, training, and evaluation

For the generative model $$$G_{mask}$$$, we selected the Progressive Growing GAN model8 and modified it to encode the coronal slice number (1-14) of the generated output mask. The model was trained with 5-fold cross-validation on the manual thoracic cavity masks for 30,000 steps at each resolution level, starting at 8x8 and progressively increasing to 128x128. The noise vector $$$n$$$ was a randomly sampled vector of length 128. All other hyperparameters were left at the defaults of the implementation used9.
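The abstract does not say how the slice number was encoded. One common option, assumed here purely for illustration, is to concatenate a one-hot slice code to the length-128 noise vector before it enters the generator. The sketch also lists the resolution levels from 8x8 to 128x128, assuming the usual doubling per level; if each level runs the full 30,000 steps, training totals 150,000 steps. All names are hypothetical.

```python
import numpy as np

LATENT_DIM = 128          # length of noise vector n (from the abstract)
N_SLICES = 14             # coronal slice numbers 1-14
STEPS_PER_LEVEL = 30_000  # training steps per resolution level

def resolution_schedule(start=8, end=128):
    """Progressive-growing resolutions, doubling each level (assumed): 8, 16, ..., 128."""
    res = [start]
    while res[-1] < end:
        res.append(res[-1] * 2)
    return res

def conditioned_latent(rng, slice_number):
    """Hypothetical conditioning: concatenate n with a one-hot slice code."""
    if not 1 <= slice_number <= N_SLICES:
        raise ValueError("slice_number must be in 1..14")
    n = rng.standard_normal(LATENT_DIM)
    one_hot = np.zeros(N_SLICES)
    one_hot[slice_number - 1] = 1.0
    return np.concatenate([n, one_hot])

levels = resolution_schedule()
total_steps = STEPS_PER_LEVEL * len(levels)
z = conditioned_latent(np.random.default_rng(0), slice_number=7)
```

Conditioning this way lets the same generator be asked for an anterior, central, or posterior mask simply by changing the one-hot code.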

For the image translation network $$$G_{Xe}$$$, we selected the pix2pix model10, which was again trained with 5-fold cross-validation using the manually segmented image-mask pairs. Ultimately, 3500 synthetic image-mask pairs were generated and used to augment the training (100 epochs, batch size 16) of a standard U-net segmentation network11. To evaluate the quality of the generated images, we calculated the Fréchet Inception Distance (FD)12 between the original and synthetic images. The FD measures the distance between the distributions of feature vectors extracted from the original and generated images by a pretrained Inception network; a lower score generally indicates higher quality and diversity. The effect of the synthetic images on segmentation performance, measured by the Dice score, was evaluated using a paired t-test.
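For reference, the FD is the Fréchet distance between Gaussians fitted to the two feature sets: $$$\mathrm{FD} = \lVert \mu_r - \mu_g \rVert_2^2 + \mathrm{Tr}\left(\Sigma_r + \Sigma_g - 2(\Sigma_r \Sigma_g)^{1/2}\right)$$$, where $$$\mu$$$ and $$$\Sigma$$$ are the mean and covariance of the features of the real ($$$r$$$) and generated ($$$g$$$) images. The NumPy sketch below computes this from precomputed feature arrays. Extracting Inception features is omitted, and the matrix square root is taken through a symmetric eigendecomposition rather than `scipy.linalg.sqrtm`, which common FID implementations use; the function names are hypothetical.

```python
import numpy as np

def _sqrtm_psd(a):
    """Square root of a symmetric positive semi-definite matrix via eigendecomposition."""
    w, v = np.linalg.eigh(a)
    return (v * np.sqrt(np.clip(w, 0.0, None))) @ v.T

def frechet_distance(feat_real, feat_gen):
    """Frechet distance between Gaussians fitted to two feature sets.

    feat_real, feat_gen: arrays of shape (num_images, feature_dim), e.g.
    Inception pool features (feature extraction not shown).
    """
    mu_r, mu_g = feat_real.mean(axis=0), feat_gen.mean(axis=0)
    cov_r = np.cov(feat_real, rowvar=False)
    cov_g = np.cov(feat_gen, rowvar=False)
    # Tr((S_r S_g)^{1/2}) equals Tr((S_r^{1/2} S_g S_r^{1/2})^{1/2}); the inner
    # matrix is symmetric PSD, so its eigenvalues are real and non-negative.
    root_r = _sqrtm_psd(cov_r)
    cross = root_r @ cov_g @ root_r
    tr_cross = np.sqrt(np.clip(np.linalg.eigvalsh(cross), 0.0, None)).sum()
    diff = mu_r - mu_g
    return float(diff @ diff + np.trace(cov_r) + np.trace(cov_g) - 2.0 * tr_cross)

rng = np.random.default_rng(0)
a = rng.standard_normal((500, 8))
fd_same = frechet_distance(a, a)           # identical feature sets
fd_shift = frechet_distance(a, a + 3.0)    # every feature shifted by 3
```

In the toy check, the shift leaves the covariance unchanged, so the FD reduces to the squared mean shift, $$$8 \times 3^2 = 72$$$, while identical sets give an FD of essentially zero.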

Results

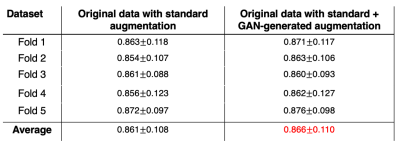

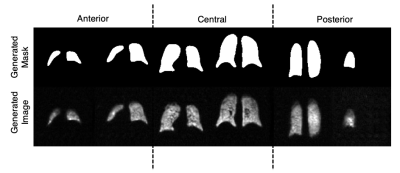

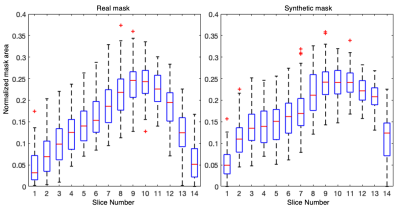

Figure 2 shows a montage of randomly generated synthetic mask-image pairs, grouped into anterior, central, and posterior slices. Figure 3 shows boxplots of the normalized mask area as a function of slice number for the original and generated masks. Both share the same pattern: mask area increases from the anterior slices to the central slice and then decreases sharply toward the posterior. Moreover, the generated masks have a slice-specific standard deviation in mask area similar to that of the original masks, suggesting that the network successfully generated a diverse dataset. The FD between the original and synthetic datasets was 68.71, which falls within the range of values (1-78) reported for other modern GANs applied to MRI data13.

Table 1 summarizes the segmentation performance of the U-net model when trained using 1) the original dataset with regular augmentation methods (translation, rotation, etc.) and 2) the original dataset with augmentation, supplemented with the 3500 synthetically generated images. The latter approach modestly but significantly increased the Dice score from 0.861$$$\pm$$$0.108 to 0.866$$$\pm$$$0.110 (p<.001).
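The Dice score and the paired comparison can be sketched as follows. The abstract does not specify the pairing unit (per slice or per subject), so the hypothetical `paired_t` simply takes two aligned lists of per-case Dice scores from the two training conditions and returns the t statistic and degrees of freedom; `scipy.stats.ttest_rel` would additionally report the p-value. The scores in the example are toy values, not results from this study.

```python
import math
import statistics

import numpy as np

def dice(pred, truth):
    """Dice = 2|A ∩ B| / (|A| + |B|) for binary masks; 1.0 if both are empty."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    denom = pred.sum() + truth.sum()
    if denom == 0:
        return 1.0
    return float(2.0 * np.logical_and(pred, truth).sum() / denom)

def paired_t(a, b):
    """Paired t statistic and degrees of freedom for aligned per-case scores a, b."""
    d = [y - x for x, y in zip(a, b)]
    t = statistics.mean(d) / (statistics.stdev(d) / math.sqrt(len(d)))
    return t, len(d) - 1

truth = np.zeros((4, 4), dtype=bool)
truth[1:3, 1:3] = True           # 4 ground-truth pixels
pred = np.zeros((4, 4), dtype=bool)
pred[1:3, 1:4] = True            # 6 predicted pixels, 4 of them overlapping
score = dice(pred, truth)

baseline = [0.80, 0.85, 0.90]    # toy per-case Dice, regular augmentation only
augmented = [0.82, 0.86, 0.93]   # toy per-case Dice, with synthetic pairs added
t_stat, dof = paired_t(baseline, augmented)
```

Here the prediction covers 6 pixels, 4 of which overlap the 4-pixel ground truth, so Dice = 2×4/(6+4) = 0.8.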

Discussion

We developed a rapid, automated method to synthesize mask-image pairs for data augmentation. This stands in contrast to other proposed strategies, such as template-based data augmentation14, which do not leverage GPU acceleration and thus do not scale well to producing many thousands of datasets. The proposed strategy is intuitive and produces realistic-looking mask-image pairs, as supported by the FD score. Although these methods can complement traditional data augmentation techniques, they could be further improved by synthesizing ventilation defects in a controlled fashion, since images with ventilation defects are the most prone to low segmentation accuracy.

For simplicity, this study was limited to 2D image generation and segmentation models, but these methods could be extended to 3D to leverage inter-slice information. GANs operating in 3D would not only require high-quality segmented volumes, which are tedious to create, but would also be susceptible to training instability15. Other limitations include the use of a simple U-net model for segmentation, compared to more sophisticated models, such as those developed by Oktay et al., that incorporate anatomical prior knowledge16.

Acknowledgements

Supported by R01HL105643, R01HL12677, NSF GRFP DGE-1644868

References

1. Thomen, R. P. et al. Hyperpolarized 129Xe for investigation of mild cystic fibrosis lung disease in pediatric patients. J. Cyst. Fibros. 16, 275–282 (2017).

2. Wang, Z. et al. Diverse cardiopulmonary diseases are associated with distinct xenon magnetic resonance imaging signatures. Eur. Respir. J. 54, (2019).

3. Tustison, N. J. et al. Image- versus histogram-based considerations in semantic segmentation of pulmonary hyperpolarized gas images. Magn. Reson. Med. 1–15 (2021) doi:10.1002/mrm.28908.

4. Astley, J. R. et al. Comparison of 3D convolutional neural networks and loss functions for ventilated lung segmentation using multi-nuclear hyperpolarized gas MRI. ISMRM Proc. 9–11 (2021).

5. Leewiwatwong, S. et al. Deep learning-based thoracic cavity segmentation for hyperpolarized 129Xe MRI. ISMRM Proc. 9–11 (2021).

6. Shin, H. C. et al. Medical image synthesis for data augmentation and anonymization using generative adversarial networks. Int. Work. Simul. Synth. Med. Imaging 11037 LNCS, 1–11 (2018).

7. Goodfellow, I. J. et al. Generative Adversarial Networks. Adv. Neural Inf. Process. Syst. (2014).

8. Karras, T., Aila, T., Laine, S. & Lehtinen, J. Progressive growing of GANs for improved quality, stability, and variation. 6th Int. Conf. Learn. Represent. ICLR 2018 - Conf. Track Proc. 1–26 (2018).

9. Progressive-GAN-pytorch. https://github.com/odegeasslbc/Progressive-GAN-pytorch.

10. Isola, P., Zhu, J. Y., Zhou, T. & Efros, A. A. Image-to-image translation with conditional adversarial networks. Proc. - 30th IEEE Conf. Comput. Vis. Pattern Recognition, CVPR 2017 2017-January, 5967–5976 (2017).

11. Ronneberger, O., Fischer, P. & Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. in Medical Image Computing and Computer-Assisted Intervention – MICCAI 2015 (eds. Navab, N., Hornegger, J., Wells, W. M. & Frangi, A. F.) vol. 9351 234–241 (Springer International Publishing, 2015).

12. Heusel, M., Ramsauer, H., Unterthiner, T., Nessler, B. & Hochreiter, S. GANs Trained by a Two Time-Scale Update Rule Converge to a Local Nash Equilibrium. Adv. Neural Inf. Process. Syst. (2017).

13. Skandarani, Y., Jodoin, P. & Lalande, A. GANs for Medical Image Synthesis: An Empirical Study. 1–24.

14. Tustison, N. J. et al. Convolutional Neural Networks with Template-Based Data Augmentation for Functional Lung Image Quantification. Acad. Radiol. 26, 412–423 (2019).

15. Jabbar, A., Li, X. & Omar, B. A Survey on Generative Adversarial Networks: Variants, Applications, and Training. ACM Comput. Surv. 54, (2021).

16. Oktay, O. et al. Anatomically Constrained Neural Networks (ACNNs): Application to Cardiac Image Enhancement and Segmentation. IEEE Trans. Med. Imaging 37, 384–395 (2018).

Figures

Figure 1. Illustration of paired generation of synthetic thoracic cavity masks and 129Xe ventilation images. In step 1, a Progressive GAN ($$$G_{mask}$$$) is trained on real masks to generate a synthetic mask from a latent noise vector n. In step 2, manually segmented masks and their images are used to train a conditional GAN (i.e., pix2pix, $$$G_{Xe}$$$) to generate a corresponding registered 129Xe ventilation image. To generate a synthetic mask-image pair, the vector n is first passed to $$$G_{mask}$$$ to generate the mask, which is then fed into $$$G_{Xe}$$$ to generate the image.

Figure 3. Boxplot of the normalized area of the real and synthetic masks at each slice index. The area gradually increases from the anterior to the central part of the lung and then decreases towards the posterior. This desirable behavior suggests that the network successfully learned to encode slice number. Moreover, the similar standard deviations in mask area suggest that diverse masks can be produced.
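The per-slice statistics summarized in Figure 3 can be sketched as below. The abstract does not define the normalization, so this sketch assumes the area is the fraction of in-plane pixels inside the mask; `area_by_slice` is a hypothetical helper returning, for each slice number, the mean and population standard deviation of the normalized area.

```python
import statistics
from collections import defaultdict

import numpy as np

def area_by_slice(masks, slice_numbers):
    """Mean and population SD of normalized mask area, grouped by slice number.

    Normalized area is assumed to be (pixels inside mask) / (total in-plane pixels).
    """
    groups = defaultdict(list)
    for m, s in zip(masks, slice_numbers):
        m = np.asarray(m, dtype=bool)
        groups[s].append(float(m.sum() / m.size))
    return {s: (statistics.mean(v), statistics.pstdev(v))
            for s, v in sorted(groups.items())}

m1 = np.zeros((4, 4)); m1[:2, :2] = 1   # area 4/16 = 0.25
m2 = np.zeros((4, 4)); m2[:2, :] = 1    # area 8/16 = 0.5
m3 = np.ones((4, 4))                    # area 16/16 = 1.0
stats = area_by_slice([m1, m2, m3], [1, 1, 7])
```

Applying the same helper separately to real and synthetic masks gives the two sets of per-slice distributions that Figure 3 compares.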