0964

Time-segmented and contrast-neutral motion correction using an end-to-end deep learning approach

1 Diagnostic and Interventional Imaging, University of Texas Health Science Center at Houston, Houston, TX, United States, 2 United Imaging Healthcare, Houston, TX, United States, 3 University of Texas Health Science Center at Houston, Houston, TX, United States

Synopsis

We developed an end-to-end deep learning-based motion correction technique that utilized the time-segmented nature of MRI data acquisition. Results of computer simulations and in vivo studies show the network to be highly effective in correcting motion artifacts.

Introduction

Motion artifacts affect ~30% of MRI scans (1), typically manifesting as image blurring, signal ghosting, and ringing that obscure the true anatomy. Severe motion artifacts can render images uninterpretable or lead to misinterpretation, delaying diagnosis and treatment and adversely affecting quantitative MRI such as morphometric measurements. Several retrospective and prospective approaches have been proposed for motion correction. Recently, deep learning (DL) has shown promising results for retrospective motion correction (2–7). In this work, we introduce a DL-based approach that uses the time-segmented nature of many MRI pulse sequences to discretize the motion trajectory and train an end-to-end DL model for motion correction. Because image phase is highly sensitive to motion, we also incorporated phase information in the input to the DL model. To reduce dependence on image contrast, the model was trained on multi-contrast images. This produces a contrast-neutral model that can be applied to multiple MRI sequences without modification.

Methods

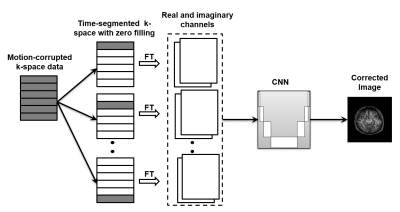

Time-segmented acquisition arises naturally in MRI when data are acquired using multi-shot fast spin echo, fast gradient echo, spiral, and similar sequences. A common and reasonable assumption is that patient motion occurs primarily between shots, with minimal intra-shot motion. Under this piecewise motion model with Nshot segments, Nshot separate complex images can be reconstructed, one from the k-space data of each shot after zero filling. These individual images serve as separate input channels to the DL model. To retain the motion-information-rich phase component, each complex image is split into real and imaginary components, for a total of 2Nshot input channels. The proposed end-to-end motion correction approach is illustrated in Fig. 1.

To show the feasibility of this approach, we trained a neural network on randomly generated, simulated 2D rigid-body time-segmented motion trajectories. A linear, interleaved k-space filling was used, similar to a clinical multislice pulse sequence. The DL model was developed for a rectilinear MRI protocol with 16 k-space segments and 16 k-space lines per shot, giving an input size of 256 x 256 x 32. The MRI data used for model training and testing were either pre-acquired data from public or private repositories or acquired specifically for this study. The pre-acquired data included 62 T1w volumes, 68 T2w volumes, and 196 FLAIR volumes, for a total of 25,550 image slices.
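The input construction described above can be sketched in NumPy as follows. This is a minimal illustration and not the authors' code. The function names are hypothetical. The interleaving rule (shot s holds lines s, s + 16, s + 32, ...) and the choice of axis 0 as the phase-encode direction are assumptions. For brevity, the simulated motion is limited to one in-plane translation per shot applied through the Fourier shift theorem, whereas the study used full 2D rigid-body motion, which also includes rotation.

```python
import numpy as np

def fft2c(image):
    """Centered 2D FFT: image -> k-space with DC at the array center."""
    return np.fft.fftshift(np.fft.fft2(np.fft.ifftshift(image)))

def ifft2c(kspace):
    """Centered 2D inverse FFT: k-space -> image."""
    return np.fft.fftshift(np.fft.ifft2(np.fft.ifftshift(kspace)))

def apply_shot_translations(kspace, shifts, n_shots=16):
    """Corrupt k-space with one translation (dy, dx), in pixels, per shot.

    Simplification: translation only, no rotation. Assumes shot s
    acquired phase-encode lines s, s + n_shots, ... along axis 0.
    """
    ny, nx = kspace.shape
    ky = np.arange(-(ny // 2), ny - ny // 2)[:, None]  # centered frequency indices
    kx = np.arange(-(nx // 2), nx - nx // 2)[None, :]
    corrupted = kspace.copy()
    for s, (dy, dx) in enumerate(shifts):
        # Fourier shift theorem: a translation is a linear phase ramp in k-space.
        ramp = np.exp(-2j * np.pi * (ky * dy / ny + kx * dx / nx))
        corrupted[s::n_shots, :] = (kspace * ramp)[s::n_shots, :]
    return corrupted

def shot_images(kspace, n_shots=16):
    """Reconstruct one zero-filled complex image per shot."""
    images = []
    for s in range(n_shots):
        segment = np.zeros_like(kspace)
        segment[s::n_shots, :] = kspace[s::n_shots, :]  # keep only this shot's lines
        images.append(ifft2c(segment))
    return np.stack(images, axis=-1)  # (ny, nx, n_shots), complex

def to_channels(images):
    """Split complex shot images into real and imaginary channels (2 * n_shots)."""
    return np.concatenate([images.real, images.imag], axis=-1).astype(np.float32)

# Toy example: a random 256 x 256 "image" with a random shift for each of 16 shots.
rng = np.random.default_rng(0)
image = rng.standard_normal((256, 256)).astype(complex)
shifts = rng.integers(-4, 5, size=(16, 2))
corrupted = apply_shot_translations(fft2c(image), shifts)
x = to_channels(shot_images(corrupted))
print(x.shape)  # (256, 256, 32)
```

Because the shots partition the k-space lines, the per-shot images sum to the full reconstruction. Each channel is therefore a heavily aliased view of the object in a single motion state.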

An encoder-decoder convolutional neural network, U-Net (8), was used. The number of filters doubled over four levels, starting at 32. The Adam optimizer was used with mean absolute error as the loss function. Training used a batch size of 16 for a maximum of 200 epochs. The data were split into training, validation, and testing sets in a ratio of 0.8:0.1:0.1. The original motion-free image was used as the target.
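The training settings above translate into a few small helpers, sketched here in NumPy. The names are hypothetical, and two points are assumptions because the abstract does not specify them: that the four levels include the base level (giving 32, 64, 128, and 256 filters), and that the split is made by random permutation over slices.

```python
import numpy as np

def encoder_filters(base=32, levels=4):
    """Filter count per level when the count doubles at each level."""
    return [base * 2 ** i for i in range(levels)]

def split_indices(n_items, fractions=(0.8, 0.1, 0.1), seed=0):
    """Randomly split item indices into training, validation, and test sets."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_items)
    n_train = round(fractions[0] * n_items)
    n_val = round(fractions[1] * n_items)
    return order[:n_train], order[n_train:n_train + n_val], order[n_train + n_val:]

def mean_absolute_error(pred, target):
    """Mean absolute error, the loss named in the text."""
    return float(np.mean(np.abs(pred - target)))

train, val, test = split_indices(25550)
print(encoder_filters())                 # [32, 64, 128, 256]
print(len(train), len(val), len(test))   # 20440 2555 2555
```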

Reconstructed images were quantitatively compared to the reference images using the normalized root mean square error (RMSE) and the structural similarity index (SSIM).
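Both metrics can be sketched in NumPy as shown below. The abstract does not state how the RMSE was normalized, so dividing by the root mean square of the reference and reporting a percentage is an assumption. The SSIM here is a simplified single-window (global) form that computes the statistics over the whole image. The standard SSIM averages the same expression over local windows, so the values will generally differ from those reported.

```python
import numpy as np

def nrmse_percent(image, reference):
    """RMSE normalized by the RMS of the reference, in percent (assumed normalization)."""
    err = np.sqrt(np.mean(np.abs(image - reference) ** 2))
    ref = np.sqrt(np.mean(np.abs(reference) ** 2))
    return 100.0 * err / ref

def global_ssim(image, reference, data_range=1.0, k1=0.01, k2=0.03):
    """Single-window SSIM over the whole image (simplified; not the windowed SSIM)."""
    c1 = (k1 * data_range) ** 2
    c2 = (k2 * data_range) ** 2
    mu_x, mu_y = image.mean(), reference.mean()
    var_x, var_y = image.var(), reference.var()
    cov = np.mean((image - mu_x) * (reference - mu_y))
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return numerator / denominator

# Toy check: a noisy copy scores worse than the reference itself.
rng = np.random.default_rng(0)
reference = rng.random((64, 64))
noisy = reference + 0.1 * rng.standard_normal((64, 64))
print(nrmse_percent(reference, reference))  # 0.0
print(global_ssim(noisy, reference) < global_ssim(reference, reference))  # True
```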

Finally, three volunteer studies were conducted with acquisitions performed with the subject at rest and during voluntary head motion simulating a restless patient. The studies were performed on 1.5T and 3.0T United Imaging uMR570 and uMR790 MRI systems (United Imaging Healthcare America, Houston, Texas), using dedicated head coils.

Results

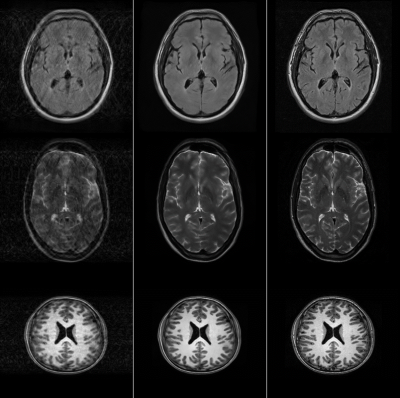

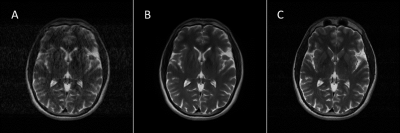

Visually, the proposed DL model effectively corrected the motion-corrupted images in the test set, as judged by the reduced motion-induced ghosting and blurring. This was also quantitatively evident in the reduced RMSE and improved SSIM of the corrected images (RMSE=23.76±13.83%; SSIM=0.94±0.04) compared to the motion-corrupted images (RMSE=40.85±24.39%; SSIM=0.75±0.14). Fig. 2 shows representative images from the test set, demonstrating excellent performance across multiple MRI image contrasts. When the model was applied to prospectively acquired data from volunteers, a large improvement in image quality was observed (Fig. 3).

Conclusions

The proposed approach shows promising performance in retrospectively correcting motion-corrupted images. This approach can help avoid the need for re-scanning patients, leading to improved patient experience and better scanner economics. Future developments will focus on further model refinement to improve performance, testing on a large cohort of patients, and addressing generalization and clinical implementation.

Acknowledgements

No acknowledgement found.

References

(1) Andre, J. B.; Bresnahan, B. W.; Mossa-Basha, M.; Hoff, M. N.; Smith, C. P.; Anzai, Y.; Cohen, W. A. Towards Quantifying the Prevalence, Severity, and Cost Associated With Patient Motion During Clinical MR Examinations. J. Am. Coll. Radiol. 2015, 12 (7), 689–695. https://doi.org/10.1016/j.jacr.2015.03.007.

(2) Duffy, B. A.; Zhang, W.; Tang, H.; Zhao, L.; Law, M.; Toga, A. W.; Kim, H. Retrospective Correction of Motion Artifact Affected Structural MRI Images Using Deep Learning of Simulated Motion. 2018.

(3) Johnson, P. M.; Drangova, M. Conditional Generative Adversarial Network for 3D Rigid-Body Motion Correction in MRI. Magn. Reson. Med. 2019, 82 (3), 901–910.

(4) Sommer, K.; Saalbach, A.; Brosch, T.; Hall, C.; Cross, N. M.; Andre, J. B. Correction of Motion Artifacts Using a Multiscale Fully Convolutional Neural Network. Am. J. Neuroradiol. 2020, 41 (3), 416–423.

(5) Wang, C.; Liang, Y.; Wu, Y.; Zhao, S.; Du, Y. P. Correction of Out-of-FOV Motion Artifacts Using Convolutional Neural Network. Magn. Reson. Imaging 2020, 71, 93–102.

(6) Liu, J.; Kocak, M.; Supanich, M.; Deng, J. Motion Artifacts Reduction in Brain MRI by Means of a Deep Residual Network with Densely Connected Multi-Resolution Blocks (DRN-DCMB). Magn. Reson. Imaging 2020, 71, 69–79.

(7) Küstner, T.; Armanious, K.; Yang, J.; Yang, B.; Schick, F.; Gatidis, S. Retrospective Correction of Motion-Affected MR Images Using Deep Learning Frameworks. Magn. Reson. Med. 2019, 82 (4), 1527–1540.

(8) Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In International Conference on Medical Image Computing and Computer-Assisted Intervention; 2015; pp 234–241. https://doi.org/10.1007/978-3-319-24574-4_28.

Figures

Figure 1: Proposed end-to-end deep learning model for motion correction. K-space data are clustered into segments with similar time of acquisition. Zero-filling is used to produce complex images which are stacked together to form the input to a convolutional neural network (CNN) to predict the motion-free image.