0962

Improved Detection and Localization of Artifacts using CutArt Augmentation

¹Subtle Medical Inc., Menlo Park, CA, United States, ²Stanford University, Stanford, CA, United States

Synopsis

To improve the performance of an automated quality control system, we propose a data augmentation technique (CutArt) that uses cropped patches of simulated artifacts instead of artifacts distributed across the entire image. This improves both artifact localization and quality control classification performance, as assessed by experiments on simulated as well as real artifact-affected data. Localization experiments suggested that the CutArt model learns to focus on the tissue of interest instead of the image background.

Introduction

Quality control (QC) is an important safeguard against erroneous diagnoses in the clinic and an essential first step in most research studies. Because manual QC is extremely laborious and highly subjective, a fully automated solution based on deep learning (DL) is desirable. Here, we evaluate the benefits of combining artifact simulation with CutArt augmentation as a simple method for improving both the detection and localization performance of a DL-based quality control system. CutArt data augmentation is based on inserting cropped regions of simulated artifacts into corresponding locations in the input image. We consider motion artifacts only, as these are the most common cause of quality control failures. However, the method can easily be extended to other types of artifact.

Methods

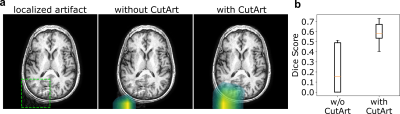

Datasets: Three separate databases containing T1w and T2w brain images were combined. Two were publicly available, IXI [1] and OpenNeuro [2], and one was in-house. These contained images of diverse clinical indications from multiple scanner manufacturers and sites, in all 3 orientations (axial, coronal, sagittal), with and without gadolinium contrast agent. The combined dataset was manually assigned pass/fail image quality scores and split into train (n=2234), validation (n=226) and test (n=426) datasets.

Artifact Simulation and CutArt: Motion artifacts were simulated in 2D by applying phase shifts at random locations in the Fourier domain, followed by an inverse Fourier transform to the image domain [3]. The severity of the artifact was determined by the percentage of corrupted lines. Here we tested two severity ranges: 20-30% and 30-40% of corrupted lines. CutArt is illustrated in Fig. 1 and is a modified version of CutMix [4] data augmentation. Briefly, it involves selecting a random patch from the artifact-corrupted image and inserting it into the corresponding location in the input image to generate an image with a QC 'fail' label. The patch size was selected from a uniform distribution between 30% and 50% of the image size. CutArt differs from CutMix in that whenever an artifact is introduced the image is assigned a 'fail' label, whereas in CutMix the ground-truth labels are linearly combined.
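The simulation and patch insertion described above can be sketched in NumPy as follows. This is a minimal illustration rather than the authors' implementation. Several details are assumptions not stated in the abstract: the function names `simulate_motion` and `cutart`, the choice of rows as the corrupted k-space lines, modelling motion as a random in-plane translation of up to `max_shift` pixels (a linear phase ramp per line), and interpreting the 30-50% patch size as a fraction of each side length.

```python
import numpy as np

def simulate_motion(image, frac_lines, max_shift=5.0, rng=None):
    """Corrupt a fraction of k-space lines with random phase ramps.

    Assumption: each corrupted line gets the phase ramp of a random
    translation (in pixels) along the other image axis.
    """
    rng = np.random.default_rng() if rng is None else rng
    n_rows, n_cols = image.shape
    kspace = np.fft.fftshift(np.fft.fft2(image))
    # Severity = fraction of k-space lines (rows) that are corrupted.
    n_corrupt = int(round(frac_lines * n_rows))
    rows = rng.choice(n_rows, size=n_corrupt, replace=False)
    # Spatial frequencies (cycles per pixel) along each line.
    kx = np.fft.fftshift(np.fft.fftfreq(n_cols))
    shifts = rng.uniform(-max_shift, max_shift, size=n_corrupt)
    kspace[rows, :] *= np.exp(-2j * np.pi * shifts[:, None] * kx[None, :])
    # Back to the image domain; keep the magnitude image.
    return np.abs(np.fft.ifft2(np.fft.ifftshift(kspace)))

def cutart(clean, corrupted, rng=None, min_frac=0.3, max_frac=0.5):
    """Paste a random patch of the corrupted image into the clean image.

    Returns the augmented image, the patch mask, and the QC label
    (1 = 'fail'), which is always 'fail' once an artifact is inserted.
    """
    rng = np.random.default_rng() if rng is None else rng
    h, w = clean.shape
    # Assumption: one fraction, applied to both side lengths.
    frac = rng.uniform(min_frac, max_frac)
    ph, pw = int(round(frac * h)), int(round(frac * w))
    top = rng.integers(0, h - ph + 1)
    left = rng.integers(0, w - pw + 1)
    out = clean.copy()
    out[top:top + ph, left:left + pw] = corrupted[top:top + ph, left:left + pw]
    mask = np.zeros((h, w), dtype=bool)
    mask[top:top + ph, left:left + pw] = True
    return out, mask, 1
```

Because the patch is copied from the same coordinates in the corrupted image, the anatomy stays continuous across the patch border and only the artifact is localized. The returned mask is not needed for training, but it gives a ground-truth region against which a localization map can be compared.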

Model training: A pre-trained 2D ResNet34 was fine-tuned for 80 epochs using an Adam optimizer and a binary cross-entropy loss to predict the pass/fail (0/1) QC category. Motion simulation was applied with a probability of 0.3. Images with simulated motion were then passed through CutArt with a probability of 0.5.
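Read as nested probabilities, these settings imply that roughly 70% of training samples are left unchanged, 15% receive whole-image simulated motion, and 15% receive a CutArt patch (0.3 × 0.5 = 0.15). The sketch below illustrates that sampling logic using only the standard library. The function name `sample_augmentation` and the string labels are hypothetical, and the abstract does not state which images (for example, only those that passed QC) were eligible for simulation.

```python
import random

P_MOTION = 0.3   # probability of simulating motion on a sample
P_CUTART = 0.5   # probability of applying CutArt, given simulated motion

def sample_augmentation(rng):
    """Choose the augmentation for one training sample."""
    if rng.random() >= P_MOTION:
        return "none"          # image and label left unchanged
    if rng.random() < P_CUTART:
        return "cutart"        # artifact patch inserted, label set to 'fail'
    return "full_motion"       # whole-image artifact, label set to 'fail'
```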

Localization prediction: Grad-CAM [5] was performed at inference time using the final layer of the network. Output localization maps (scaled between 0 and 1) were thresholded at 0.4 across all images to produce binary masks.
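The scaling and thresholding step might look like the sketch below. It assumes per-image min-max scaling and an inclusive threshold, neither of which is specified in the abstract, and `cam_to_mask` is a hypothetical helper name. Computing the Grad-CAM map itself is outside the scope of this sketch.

```python
import numpy as np

def cam_to_mask(cam, threshold=0.4):
    """Scale a localization map to [0, 1] and threshold it to a binary mask."""
    cam = np.asarray(cam, dtype=float)
    lo, hi = cam.min(), cam.max()
    if hi == lo:
        # A constant map carries no localization information.
        return np.zeros(cam.shape, dtype=bool)
    scaled = (cam - lo) / (hi - lo)
    return scaled >= threshold
```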

Evaluation: Due to class imbalance, average precision (area under the precision-recall curve) was used as the evaluation metric for the QC classification task. To assess localization performance with respect to a known ground truth, the Dice index was calculated on a simulated validation set. This was created by adding simulated motion artifacts to a subset of n=8 images from the validation set that passed QC.
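For reference, both metrics can be computed in a few lines of NumPy. This is a generic sketch, not the authors' evaluation code. The Dice index is 2|A∩B| / (|A| + |B|) for binary masks A and B, and average precision is computed here as the mean of the precision values at the rank of each positive sample, which matches the usual step-wise definition when scores are untied. Returning 1.0 when both masks are empty is a convention chosen for this example.

```python
import numpy as np

def dice_index(pred_mask, true_mask):
    """Dice overlap between two binary masks."""
    pred = np.asarray(pred_mask, dtype=bool)
    true = np.asarray(true_mask, dtype=bool)
    total = pred.sum() + true.sum()
    if total == 0:
        return 1.0  # assumption: two empty masks count as perfect agreement
    return 2.0 * np.logical_and(pred, true).sum() / total

def average_precision(y_true, scores):
    """Mean precision at the rank of each positive (label 1 = 'fail')."""
    y_true = np.asarray(y_true)
    order = np.argsort(-np.asarray(scores, dtype=float), kind="stable")
    hits = y_true[order] == 1
    if not hits.any():
        raise ValueError("average precision is undefined without positives")
    precision_at_k = np.cumsum(hits) / np.arange(1, len(hits) + 1)
    return float(precision_at_k[hits].mean())
```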

Results

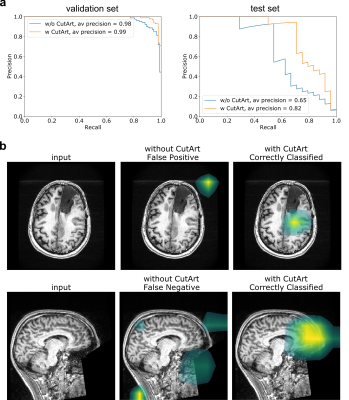

Localization performance: Visually, models trained with CutArt localized motion artifacts well on the simulated validation dataset (Fig. 2). The average Dice score improved significantly, from 0.23±0.08 without CutArt to 0.58±0.04 with CutArt (p=0.002, unpaired t-test). Models trained without CutArt were unable to localize the simulated artifact and tended to focus on background information rather than the foreground tissue of interest.

QC classification performance: On both the validation and test sets, the model trained with CutArt outperformed the model trained without it. For the 30-40% artifact severity model, average precision increased from 0.98 to 0.99 on the validation set and from 0.65 to 0.82 on the test set (Fig. 3a). For the 20-30% severity model, it increased from 0.61 to 0.82, demonstrating that the effect was robust to the simulated artifact severity and indicating that the improved performance was due to CutArt, and not simply because the artifact simulation parameters were unrealistic. In agreement with the simulated validation assessment, models trained without CutArt tended to focus on artifacts present in the image background rather than in the brain (Fig. 3b).

Discussion

Our results demonstrate significantly improved performance on the QC classification task when cropped patches of simulated motion artifact are used instead of entire corrupted slices. One likely explanation is that the model learns to pay more attention to the more important parts of the image, i.e. the brain tissue, rather than predominantly the image background, which is not always a reliable feature for determining the presence of artifacts in the input image. This explanation is supported by the artifact localization results, which tended to focus on the brain tissue itself rather than the image background. Another reason for the improved performance could be the prevalence of localized artifacts in real artifact-affected data. More precise artifact segmentation maps could be generated using test-time augmentation and other more fine-grained localization techniques such as guided backpropagation.

Conclusions

In conclusion, CutArt is a simple and effective method for improving both classification and localization performance for medical image QC. Future work could investigate whether further improvements are possible by constraining the artifact patches to the tissue of interest.

Acknowledgements

We would like to acknowledge the grant support of NIH R44EB027560.

References

1. https://brain-development.org/ixi-dataset/

2. https://openneuro.org/

3. Duffy, B. A., Zhang, W., Tang, H., Zhao, L., Law, M., Toga, A. W., & Kim, H. (2018). Retrospective correction of motion artifact affected structural MRI images using deep learning of simulated motion.

4. Yun, S., Han, D., Oh, S. J., Chun, S., Choe, J., & Yoo, Y. (2019). CutMix: Regularization strategy to train strong classifiers with localizable features. In Proceedings of the IEEE/CVF International Conference on Computer Vision (pp. 6023-6032).

5. Selvaraju, R. R., Cogswell, M., Das, A., Vedantam, R., Parikh, D., & Batra, D. (2017). Grad-CAM: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE International Conference on Computer Vision (pp. 618-626).
