0959

A Deep Forward-Distortion Model for Unsupervised Correction of Susceptibility Artifacts in EPI

1Department of Electrical and Electronics Engineering, Bilkent University, Ankara, Turkey, 2National Magnetic Resonance Research Center (UMRAM), Bilkent University, Ankara, Turkey, 3Neuroscience Graduate Program, Bilkent University, Ankara, Turkey

Synopsis

Echo planar imaging (EPI) requires the correction of susceptibility artifacts for further quantitative analyses. Images acquired in reversed phase-encode (PE) directions are typically used to estimate the susceptibility-induced field from EPI data. In this work, an unsupervised deep Forward-Distortion Network (FD-Net) is proposed for the correction of susceptibility artifacts in EPI: The field and underlying anatomically-correct image are predicted, subject to the constraint that forward-distortion of this image with the field explains the input warped images. This approach provides rapid correction of susceptibility artifacts, with superior performance over deep learning methods that unwarp input images based on a predicted field.

Introduction

Echo planar imaging (EPI) is the most commonly used sequence for diffusion-weighted imaging (DWI) and functional MRI (fMRI), due to its rapid k-space acquisition capability. However, susceptibility artifacts reduce the usability of acquired images [1], and correction is necessary for further quantitative analyses [2]. Leading methods use images acquired in reversed phase-encode (PE) directions to estimate the susceptibility-induced displacement field from data [3].

In this work, we propose a deep Forward-Distortion Network (FD-Net) for correcting susceptibility artifacts by predicting the field and underlying anatomically-correct image, such that forward-distorting this image with the field explains the input warped images. We validate FD-Net with respect to TOPUP, and show the superiority of its forward-distortion approach with respect to prior deep learning methods that instead aim to unwarp input images based on a predicted field.

Methods

Classic Correction Approach

Field-map based susceptibility correction assumes that distortions are in opposite directions in reversed-PE images (i.e., blip-up/-down EPI images) and that only displacement along the PE direction is significant [4]. In this classic approach, the estimated field is used in an inverse problem to estimate a corrected image that best explains the distorted measurements. This approach has been shown to outperform registration-based/measured-field techniques [5]. Here, we take TOPUP from FSL [3] as a classic “reference” method. TOPUP uses the reversed-PE image pairs to estimate the field [6], and outputs a single corrected image. It is often considered a gold standard for EPI distortion correction.
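As a hedged illustration of this inverse problem, the relation below follows the general least-squares form described in reference 6. The symbols are notation chosen for this sketch rather than taken from the abstract: for one image column along the PE direction, $f_{+}$ and $f_{-}$ are the blip-up and blip-down measurements, $\rho$ is the undistorted column, $d$ is the displacement field, and $K_{\pm}(d)$ are the distortion matrices for the two PE polarities.

$$
f_{+} = K_{+}(d)\,\rho, \qquad f_{-} = K_{-}(d)\,\rho, \qquad
\hat{\rho} = \arg\min_{\rho} \left\| \begin{bmatrix} f_{+} \\ f_{-} \end{bmatrix} - \begin{bmatrix} K_{+}(d) \\ K_{-}(d) \end{bmatrix} \rho \right\|_2^2
$$

Because both measurements share the same $\rho$ and a field of opposite sign, the stacked system yields a single corrected image from the image pair.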

Learning-based Correction

Previous deep-learning methods in the literature commonly utilize the predicted field to correct distorted images. S-Net [7] uses a 3D U-Net [8] to predict the field and unwarps using bilinear interpolation. For training, S-Net uses local cross-correlation of the corrected blip-up/-down images as the similarity loss, with a diffusion regularizer for field smoothness.
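The two loss terms named above can be sketched in one dimension along the PE direction. This is a minimal illustration in plain Python, not the S-Net implementation: the squared, window-normalized form of local cross-correlation, the window size, the `eps` stabilizer, the weight `lam`, and all function names are assumptions made for this sketch.

```python
def local_cc(a, b, window=3, eps=1e-8):
    """Mean squared local cross-correlation of two lines (odd window assumed)."""
    half = window // 2
    scores = []
    for c in range(half, len(a) - half):
        wa = a[c - half:c + half + 1]
        wb = b[c - half:c + half + 1]
        ma, mb = sum(wa) / window, sum(wb) / window
        cross = sum((x - ma) * (y - mb) for x, y in zip(wa, wb))
        var_a = sum((x - ma) ** 2 for x in wa)
        var_b = sum((y - mb) ** 2 for y in wb)
        # eps keeps flat windows from dividing by zero.
        scores.append(cross * cross / (var_a * var_b + eps))
    return sum(scores) / len(scores)


def diffusion_penalty(field):
    """Mean squared first difference of the field (penalizes rough fields)."""
    grads = [field[i + 1] - field[i] for i in range(len(field) - 1)]
    return sum(g * g for g in grads) / len(grads)


def snet_style_loss(up_corrected, down_corrected, field, lam=0.01):
    """Negative similarity of the two corrected lines plus weighted smoothness."""
    return -local_cc(up_corrected, down_corrected) + lam * diffusion_penalty(field)
```

Local cross-correlation is insensitive to local linear intensity changes, which is one reason it is a common similarity choice when the two corrected images may differ in brightness.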

Deepflow-net [9] instead uses a 2D U-Net architecture and a multi-resolution scheme. Mean Squared Error (MSE) between the corrected blip-up/-down images is used as the similarity measure, and total variation is used for field smoothness regularization. The correction is performed via cubic interpolation, but an intensity correction approach similar to that in TOPUP is introduced to compensate for pile-ups at dense field locations. While both methods enable a notable speed-up compared to classic methods, their reverse-distortion model (i.e., unwarping) cannot directly constrain fidelity to measurements.
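To make the reverse-distortion (unwarping) idea concrete, the sketch below resamples a single PE line at positions shifted by the field. It is an illustration under stated assumptions, not code from either method: linear interpolation is used for brevity (Deepflow-net is described as using cubic interpolation), the sign convention and edge clamping are arbitrary choices, and the optional Jacobian scaling is only one common form of intensity correction.

```python
import math


def unwarp_1d(signal, field, sign=1.0, jacobian=False):
    """Resample one PE line at y + sign * field[y] using linear interpolation."""
    n = len(signal)
    out = []
    for y in range(n):
        pos = min(max(y + sign * field[y], 0.0), n - 1.0)  # clamp to the line
        lo = int(math.floor(pos))
        hi = min(lo + 1, n - 1)
        w = pos - lo
        value = (1.0 - w) * signal[lo] + w * signal[hi]
        if jacobian:
            # Central difference of the field along PE (one-sided at the edges).
            prev, nxt = max(y - 1, 0), min(y + 1, n - 1)
            dfield = (field[nxt] - field[prev]) / (nxt - prev) if nxt > prev else 0.0
            value *= 1.0 + sign * dfield  # intensity scaling for stretch/compression
        out.append(value)
    return out


def mse(a, b):
    """Mean squared error between two lines of equal length."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


# The same field is applied with opposite signs to the two PE polarities.
line = [0.0, 1.0, 2.0, 3.0, 4.0]
shift = [1.0] * 5
up_corrected = unwarp_1d(line, shift, sign=+1.0)    # [1.0, 2.0, 3.0, 4.0, 4.0]
down_corrected = unwarp_1d(line, shift, sign=-1.0)  # [0.0, 0.0, 1.0, 2.0, 3.0]
```

In an unwarping model the training signal is the agreement between the two corrected lines, for example `mse(up_corrected, down_corrected)`, so the measured inputs enter the loss only after resampling.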

Proposed Method

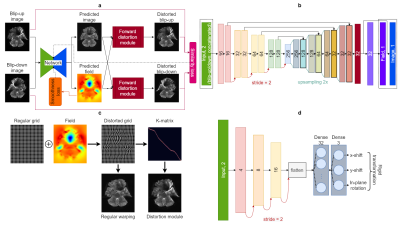

We propose a novel forward-distortion model, FD-Net, to explicitly constrain measurement fidelity for enhanced correction performance. FD-Net, outlined in Fig. 1, uses a 2D U-Net to estimate both a displacement field and a corrected image. These estimates are then used to enforce consistency to input data: if we forward-distort the corrected image with the field in reversed directions, the results should match the input blip-up/-down EPI images. Analogous to the sensitivity-encoding formulation in parallel imaging, this forward approach is motivated by its SNR benefits [10].

FD-Net is an unsupervised model that can be trained via MSE loss to measure similarity between the input and distorted images, with second-order finite differences for regularizing field smoothness. Inspired by the "K-matrix" approach of TOPUP [6], both distortions and pile-ups are implemented with a single matrix operation based on a Gaussian kernel to avoid potential ringing artifacts. To compensate for rigid-body motion between measurements, an affine transformation is employed to align one of the PE directions as in TOPUP. Forward-distortion is performed on a 2x grid to improve performance.
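The forward-distortion step and the training objective described above can be sketched for a single PE line as follows. This is a simplified illustration, not the FD-Net implementation: the kernel width `sigma`, the per-column normalization, the squared form of the second-difference penalty, the weight `lam`, and all function names are assumptions, and the affine alignment and 2x grid are omitted.

```python
import math


def k_matrix(field, sign=1.0, sigma=0.5):
    """Matrix whose column j spreads true sample j around j + sign * field[j]."""
    n = len(field)
    K = [[0.0] * n for _ in range(n)]
    for j in range(n):
        centre = j + sign * field[j]
        column = [math.exp(-0.5 * ((i - centre) / sigma) ** 2) for i in range(n)]
        total = sum(column)
        for i in range(n):
            # Normalize each column so the signal of sample j is conserved.
            K[i][j] = column[i] / total if total > 1e-12 else 0.0
    return K


def forward_distort(image_line, field, sign=1.0, sigma=0.5):
    """Apply displacement and pile-up in one matrix-vector product."""
    K = k_matrix(field, sign, sigma)
    return [sum(k * v for k, v in zip(row, image_line)) for row in K]


def mse(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def second_diff_penalty(field):
    """Mean squared second-order finite difference of the field."""
    diffs = [field[i - 1] - 2.0 * field[i] + field[i + 1]
             for i in range(1, len(field) - 1)]
    return sum(d * d for d in diffs) / max(len(diffs), 1)


def fd_loss(image_line, field, blip_up, blip_down, lam=0.1):
    """Measurement fidelity for both PE polarities plus field smoothness."""
    up_hat = forward_distort(image_line, field, +1.0)
    down_hat = forward_distort(image_line, field, -1.0)
    return (mse(up_hat, blip_up) + mse(down_hat, blip_down)
            + lam * second_diff_penalty(field))
```

Where the field maps several true samples onto the same location, the corresponding columns of the matrix overlap and their intensities add, which is how a single operation represents both displacement and pile-up. The loss compares predictions directly against the measured blip-up/-down lines, in contrast to an unwarping model that compares two resampled outputs with each other.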

A competing method based on Deepflow-net was considered without a multi-resolution scheme. For fair comparison, implementation was based on an identical architecture to FD-Net, but the forward-distortion module was replaced with a correction (i.e., unwarping) module [9]. The model was trained on MSE between the two corrected images, and the model output was formed by averaging the corrected images.

Learning procedures

We used randomly selected unprocessed DWI data from the Human Connectome Project's 1200 Subjects Data Release [11]. A total of 20 subjects were selected, 12 for training and 8 for testing. For each subject, a single b0-volume consisting of 111 slices with a 168x144 image matrix was used. TOPUP correction was applied for reference.

Both FD-Net and Deepflow-net were implemented in TensorFlow/Keras and trained using the Adam optimizer for 100 epochs until convergence. Correction of a volume took on average ~7.5 seconds for each network.

Results

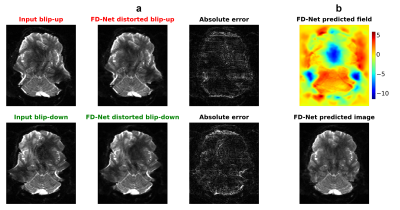

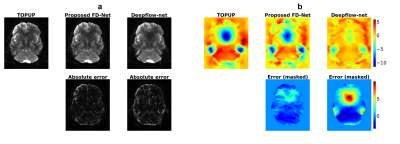

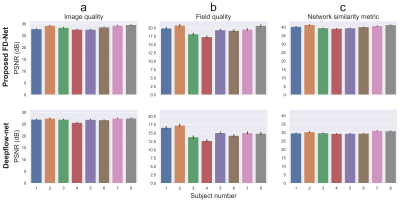

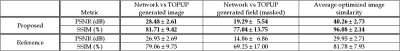

Representative results given in Fig. 2 show that FD-Net can successfully predict the corrected image and field by maximizing similarity between the forward-distorted images and inputs. Fig. 3 visually compares TOPUP and both learning-based methods. FD-Net outperforms Deepflow-net in terms of both the generated image and field quality when compared to TOPUP. A comprehensive quantitative comparison between the two methods is provided in Fig. 4. The table in Fig. 5 quantifies the superiority of the FD-Net approach, with 1.55 dB and 4.43 dB improvements in PSNR compared to Deepflow-net for the generated image and field, respectively. Fig. 4c and the last column of Fig. 5 also show that FD-Net matches its forward-distorted images to the inputs more closely than Deepflow-net matches its two corrected images to each other.
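For readers who want to reproduce this kind of comparison, a minimal PSNR sketch is given below. The abstract does not state the peak value or any masking used, so taking the peak as the maximum absolute value of the reference (here, the TOPUP output) is an assumption, as are the function and parameter names.

```python
import math


def psnr(reference, estimate, peak=None):
    """Peak signal-to-noise ratio in dB between two flattened images."""
    err = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if peak is None:
        peak = max(abs(r) for r in reference)  # assumed peak definition
    if err == 0.0:
        return math.inf
    return 10.0 * math.log10(peak ** 2 / err)
```

Under a fixed peak value, a PSNR difference of x dB corresponds to an MSE ratio of 10^(x/10), so the reported 1.55 dB and 4.43 dB gains would correspond to roughly 1.43 times and 2.77 times lower MSE, respectively.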

Conclusion

The proposed FD-Net provides results comparable to TOPUP by predicting a self-consistent image and field. This unsupervised deep learning approach provides rapid correction of susceptibility artifacts in EPI, while maintaining high performance. Our results indicate that the forward-distortion model poses a better-conditioned problem by enforcing consistency to measurement data.

Acknowledgements

This work was supported by the Scientific and Technological Research Council of Turkey (TUBITAK) via Grant 117E116. Data were provided by the Human Connectome Project, WU-Minn Consortium (Principal Investigators: David Van Essen and Kamil Ugurbil; 1U54MH091657) funded by the 16 NIH Institutes and Centers that support the NIH Blueprint for Neuroscience Research; and by the McDonnell Center for Systems Neuroscience at Washington University.

References

1. P. Mansfield, “Multi-planar image formation using NMR spin echoes,” J. Phys. C: Solid State Phys., vol. 10, no. 3, pp. L55–L58, 1977.

2. J.-D. Tournier, S. Mori, and A. Leemans, “Diffusion tensor imaging and beyond,” Magn. Reson. Med., vol. 65, no. 6, pp. 1532–1556, 2011.

3. S. M. Smith et al., “Advances in functional and structural MR image analysis and implementation as FSL,” Neuroimage, vol. 23 Suppl 1, pp. S208–S219, 2004.

4. D. Holland, J. M. Kuperman, and A. M. Dale, “Efficient correction of inhomogeneous static magnetic field-induced distortion in Echo Planar Imaging,” Neuroimage, vol. 50, no. 1, pp. 175–183, 2010.

5. M. S. Graham, I. Drobnjak, M. Jenkinson, and H. Zhang, “Quantitative assessment of the susceptibility artefact and its interaction with motion in diffusion MRI,” PLoS One, vol. 12, no. 10, p. e0185647, 2017.

6. J. L. R. Andersson, S. Skare, and J. Ashburner, “How to correct susceptibility distortions in spin-echo echo-planar images: application to diffusion tensor imaging,” Neuroimage, vol. 20, no. 2, pp. 870–888, 2003.

7. S. T. M. Duong, S. L. Phung, A. Bouzerdoum, and M. M. Schira, “An unsupervised deep learning technique for susceptibility artifact correction in reversed phase-encoding EPI images,” Magn. Reson. Imaging, vol. 71, pp. 1–10, 2020.

8. O. Ronneberger, P. Fischer, and T. Brox, “U-Net: Convolutional Networks for Biomedical Image Segmentation,” arXiv [cs.CV], 2015.

9. B. Zahneisen, K. Baeumler, G. Zaharchuk, D. Fleischmann, and M. Zeineh, “Deep flow-net for EPI distortion estimation,” Neuroimage, vol. 217, p. 116886, 2020.

10. K. P. Pruessmann, M. Weiger, M. B. Scheidegger, and P. Boesiger, “SENSE: sensitivity encoding for fast MRI,” Magn. Reson. Med., vol. 42, no. 5, pp. 952–962, 1999.

11. D. C. Van Essen, S. M. Smith, D. M. Barch, T. E. J. Behrens, E. Yacoub, and K. Ugurbil, “The WU-Minn Human Connectome Project: An overview,” Neuroimage, vol. 80, pp. 62–79, 2013.

Figures