0953

Hybrid image and k-space deep learning reconstruction exploiting spatio-temporal redundancies for 2D cardiac CINE

Siying Xu1, Patrick Krumm2, Andreas Lingg2, Haikun Qi3, Kerstin Hammernik4,5, and Thomas Küstner1

1Medical Image And Data Analysis (MIDAS.lab), Department of Interventional and Diagnostic Radiology, University Hospital of Tuebingen, Tuebingen, Germany, 2Department of Radiology, University Hospital of Tuebingen, Tuebingen, Germany, 3School of Biomedical Engineering, ShanghaiTech University, Shanghai, China, 4Lab for AI in Medicine, Technical University of Munich, Munich, Germany, 5Department of Computing, Imperial College London, London, United Kingdom


Synopsis

Cardiac CINE MR imaging allows for accurate and reproducible measurement of cardiac function but requires long scanning times. Parallel imaging and compressed sensing (CS) have reduced the acquisition time, but the achievable acceleration has remained limited. Deep learning-based MR image reconstruction can further increase the acceleration rates with improved image quality. In this work, we propose a novel network for retrospectively undersampled 2D cardiac CINE that a) operates in the image and k-space domains with interleaved architectures and b) utilizes spatio-temporal filters for multi-coil dynamic data. The proposed network outperforms CS and the other evaluated neural networks in both qualitative and quantitative evaluations.

Introduction

Cardiac CINE MR imaging allows for accurate and reproducible measurement of cardiac function1. Conventionally, multi-slice 2D CINE images are acquired under multiple breath-holds, leading to patient discomfort and imprecise assessment. Parallel imaging2,3 and compressed sensing (CS)4-8 have enabled the reduction of scan time, but the achievable acceleration has remained limited.

Recently, deep learning-based MR image reconstruction methods have shown potential to further increase the acceleration rates while maintaining or improving spatial and temporal resolution. Image enhancement9-11, physics-based unrolled learning12-14, k-space learning15,16 and hybrid learning17,18 networks have been proposed. These networks operate in the image9-14 domain, in the k-space15,16 domain, or in both17,18. Moreover, the spatio-temporal cardiac dynamics can be exploited using recurrent19, low-rank20 or spatio-temporal filter21,22 approaches. However, most approaches so far have focused on either spatial reconstruction in one or both domains18, or dynamic reconstruction in a single domain23-25.

In this work, we propose a novel complex-valued physics-based unrolled reconstruction network that a) operates in image and k-space domain and b) utilizes spatio-temporal filters for multi-coil dynamic data. An interleaved architecture with 2D+t filters is proposed to maximize the sharing of spatio-temporal k-space and image information. The proposed approach is investigated on in-house acquired 2D cardiac CINE MRI.

Methods

Our hybrid network adopts a mutual learning strategy to process the MR data in the k-space and image domains in parallel. The overall network architecture is depicted in Fig. 1. The network consists of blocks with interleaved k-space and image subnetworks, and intermittent data consistency (DC) steps. The final reconstructed image is the output of the last image DC layer, but the outputs of both branches, including the k-space branch, enter the loss calculation and thus affect back-propagation.

The image subnetwork (Fig. 1b) is a residual 2D+t UNet. It has three encoding and decoding stages with two 2D+t complex-valued convolutional layers ($$$t \times x \times y=9\times5\times5$$$) in each stage, and ModReLU activations between the two convolutions as well as after the temporal convolution to enhance the network's representation power21. Downsampling is achieved by striding, while upsampling is performed via transposed convolutions.

The k-space subnetwork (Fig. 1c) is inspired by Robust artificial-neural-network for k-space interpolation (RAKI)16. It consists of three complex-valued convolutional layers with ModReLU activations that operate on the multi-coil k-t-coil data.
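Both subnetworks rely on ModReLU activations for complex-valued features. The following is a minimal NumPy sketch of the commonly used ModReLU definition (thresholding the magnitude while preserving the phase); the function name, the fixed `bias` value and the `eps` stabilizer are illustrative assumptions and not taken from the authors' implementation, where the bias would typically be a learned parameter.

```python
import numpy as np

def modrelu(z, bias, eps=1e-8):
    """ModReLU sketch: ReLU(|z| + bias) * z / |z|.

    z    : complex array (e.g. a feature map)
    bias : real scalar or broadcastable array (assumed fixed here)
    eps  : small constant to avoid division by zero (assumption)
    """
    magnitude = np.abs(z)
    # Apply a ReLU to the shifted magnitude, then rescale z so its phase is unchanged.
    scale = np.maximum(magnitude + bias, 0.0) / (magnitude + eps)
    return z * scale

z = np.array([3 + 4j, 0.1 + 0.1j])
out = modrelu(z, bias=-1.0)
# The first entry keeps its phase with magnitude reduced from 5 to 4;
# the second entry falls below the threshold and is set to zero.
```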

Within one iteration, the outputs of the individual image and k-space subnetworks are exchanged and combined with each other. For the image branch, for example, the k-space subnetwork output is converted to the image domain through the multi-coil adjoint operator and added to the output of the image subnetwork.
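The exchange in the image branch can be sketched as follows. This is a simplified illustration, assuming Cartesian sampling, an orthonormal FFT without shifts, known coil sensitivity maps `smaps`, and no sampling mask inside the operator; the function names and array layouts are hypothetical and not taken from the authors' code.

```python
import numpy as np

def forward_op(image, smaps):
    """Image (t, y, x) -> multi-coil k-space (coil, t, y, x)."""
    coil_images = smaps[:, None] * image[None]          # weight by coil sensitivities
    return np.fft.fft2(coil_images, axes=(-2, -1), norm="ortho")

def adjoint_op(kspace, smaps):
    """Multi-coil k-space (coil, t, y, x) -> coil-combined image (t, y, x)."""
    coil_images = np.fft.ifft2(kspace, axes=(-2, -1), norm="ortho")
    return np.sum(np.conj(smaps)[:, None] * coil_images, axis=0)

def fuse_in_image_branch(image_net_out, kspace_net_out, smaps):
    """Add the k-space subnetwork output, mapped to image space, to the image subnetwork output."""
    return image_net_out + adjoint_op(kspace_net_out, smaps)

rng = np.random.default_rng(0)
smaps = rng.standard_normal((4, 8, 8)) + 1j * rng.standard_normal((4, 8, 8))
x = rng.standard_normal((3, 8, 8)) + 1j * rng.standard_normal((3, 8, 8))
k = rng.standard_normal((4, 3, 8, 8)) + 1j * rng.standard_normal((4, 3, 8, 8))
fused = fuse_in_image_branch(x, k, smaps)
```

A quick sanity check for such operator pairs is the dot-product test, i.e. verifying that `np.vdot(forward_op(x, smaps), k)` matches `np.vdot(x, adjoint_op(k, smaps))` up to numerical precision.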

A data consistency (DC) layer is applied in both the k-space and image domains. The DC layer of the image branch is implemented by a gradient descent algorithm. The k-space branch performs a null-space projection such that sampled k-space locations are replaced by the acquired values. The image and k-space after the DC layers are used as inputs for the next block. With six iterations, the overall network has 2,512,350 trainable parameters.
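The two DC variants described above can be illustrated with a short NumPy sketch. It assumes a single-coil masked FFT as the forward operator and a fixed step size purely for brevity; the function names, the `step` parameter and the toy operators are assumptions for illustration and do not reproduce the authors' multi-coil implementation.

```python
import numpy as np

def kspace_dc(k_pred, k_acq, mask):
    """Hard projection: acquired samples where mask is True, network output elsewhere."""
    return np.where(mask, k_acq, k_pred)

def image_dc_step(x, k_acq, forward, adjoint, step=1.0):
    """One gradient descent step on 0.5 * ||A x - y||^2, i.e. x - step * A^H (A x - y)."""
    return x - step * adjoint(forward(x) - k_acq)

# Toy single-coil operators (assumption): masked orthonormal FFT and its adjoint.
rng = np.random.default_rng(0)
mask = rng.random((8, 8)) < 0.4
forward = lambda x: mask * np.fft.fft2(x, norm="ortho")
adjoint = lambda k: np.fft.ifft2(mask * k, norm="ortho")

reference = rng.standard_normal((8, 8)) + 1j * rng.standard_normal((8, 8))
k_acq = forward(reference)                      # retrospectively undersampled data
x_net = rng.standard_normal((8, 8)) + 1j * rng.standard_normal((8, 8))
k_net = rng.standard_normal((8, 8)) + 1j * rng.standard_normal((8, 8))

x_dc = image_dc_step(x_net, k_acq, forward, adjoint, step=1.0)
k_dc = kspace_dc(k_net, k_acq, mask)
```

For this toy operator a unit step makes the image exactly consistent with the acquired samples; in an unrolled network the step size is usually smaller or learned, which is left open here.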

2D cardiac CINE datasets were acquired in-house in 104 subjects (66 patients and 38 healthy subjects) with a bSSFP sequence (TE/TR=1.06/2.12ms, flip angle 52°, bandwidth=915Hz/px, spatial resolution 1.9$$$\times$$$1.9mm$$$^2$$$, slice thickness 8mm, temporal resolution $$$\approx$$$40ms, 25 cardiac phases) on a 1.5T MRI scanner in eight breath-holds (14s each). Data were split into 83 training and 21 test subjects and retrospectively undersampled using VISTA26 with acceleration factors R=2 to 15. The mean absolute errors (MAE) between reconstructed image/k-space and reference image/k-space are minimized with ADAM27 (learning rate $$$10^{-4}$$$ in the first 30 epochs and $$$10^{-5}$$$ in another 30 epochs, batch size of 1). The impact of hybrid processing (hybrid vs. unrolled network) and of spatio-temporal filters is investigated, and the proposed approach is compared to a CS ($$$\ell_1$$$ wavelet regularized), a UNet-based image enhancement9 and a modified RAKI (our k-space subnetwork) reconstruction.
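The training objective and learning rate schedule described above can be sketched in a few lines. The equal weighting of the image and k-space terms (`k_weight=1.0`) is an assumption, since the relative weighting is not stated; the function names are likewise illustrative.

```python
import numpy as np

def complex_mae(pred, ref):
    """Mean absolute error for complex-valued arrays (mean of |pred - ref|)."""
    return float(np.mean(np.abs(pred - ref)))

def hybrid_loss(img_pred, img_ref, k_pred, k_ref, k_weight=1.0):
    """MAE in the image domain plus (assumed equally weighted) MAE in k-space."""
    return complex_mae(img_pred, img_ref) + k_weight * complex_mae(k_pred, k_ref)

def learning_rate(epoch):
    """1e-4 for the first 30 epochs, 1e-5 for the following 30 epochs (0-based epoch)."""
    return 1e-4 if epoch < 30 else 1e-5

img_ref = np.zeros(2, dtype=complex)
img_pred = np.array([3 + 4j, 0j])
loss = hybrid_loss(img_pred, img_ref, img_pred, img_ref)
```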

Results and Discussion

Animated Fig. 2 shows the cardiac time-resolved reconstructions by our proposed hybrid network for R=5, 10 and 15 retrospectively undersampled 2D CINE in one patient with cardiac lymphoma and potential myocarditis. A comparison to other reconstruction methods is shown in Fig. 3 (R=5) and Fig. 4 (R=15). The hybrid k-space and image network outperforms the other reconstruction methods for the presented acceleration factors. Even for high accelerations that would enable single breath-hold imaging, the proposed hybrid network can successfully reconstruct the images with good quality and sharp details. Fig. 5 depicts the quantitative assessment (PSNR, SSIM, MSE) over all test subjects, slices and cardiac phases. The hybrid network significantly outperforms all other networks regarding the PSNR, SSIM and MSE metrics.

We acknowledge limitations of this study. This work focused on retrospective undersampling. In the future, we plan to acquire prospectively undersampled data within a single breath-hold. Furthermore, we will try to adapt the proposed approach to 3D cardiac CINE imaging.
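For reference, two of the metrics used in the quantitative assessment (MSE and PSNR) can be computed as in the sketch below. It assumes that the metrics are evaluated on magnitude images and that the peak value is the maximum of the reference magnitude; both choices are assumptions, as the exact normalization is not stated. SSIM is omitted because it requires a windowed implementation.

```python
import numpy as np

def mse(recon, ref):
    """Mean squared error between magnitude images (assumption: magnitudes are compared)."""
    return float(np.mean((np.abs(recon) - np.abs(ref)) ** 2))

def psnr(recon, ref):
    """PSNR in dB, using the reference maximum as peak value (assumption)."""
    peak = np.abs(ref).max()
    return float(10.0 * np.log10(peak ** 2 / mse(recon, ref)))

ref = np.ones((4, 4))
recon = ref + 0.1          # constant error of 0.1 on a unit-intensity image
error = mse(recon, ref)
quality = psnr(recon, ref)
```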

Conclusion

Our novel hybrid multi-coil 2D+t deep learning-based reconstruction network can efficiently reconstruct highly undersampled 2D cardiac CINE with high image quality. The proposed hybrid network of interleaved and unrolled architecture with spatio-temporal kernels exploits the spatio-temporal redundancies in the k-space and image domain for dynamic image reconstruction, which enables high acceleration rates.

Acknowledgements

The authors would like to thank Jens Kübler and Stanislau Chekan for study coordination. This project was supported by Germany’s Excellence Strategy – EXC-Number 2064/1 – Project number 390727645 and EXC-Number 2180 – Project number 390900677.

References

1. American College of Cardiology Foundation Task Force on Expert Consensus Documents et al. Circulation 2010;121(22).

2. Kellman et al. Magn Reson Med 2008;59(4).

3. Kellman et al. Magn Reson Med 2009;62(6).

4. Chaâri et al. Med Image Anal 2011;15(2).

5. Block et al. Magn Reson Med 2007;57(6).

6. Osher et al. Multiscale Modeling and Simulation 2005;4(2).

7. Weller. IEEE Southwest Symposium on Image Analysis and Interpretation (SSIAI) 2016.

8. Liu et al. IEEE Trans Image Process 2013;22(12).

9. Hauptmann et al. Magn Reson Med 2019;81.

10. Kofler et al. IEEE Trans Med Imaging 2020;39(3).

11. Lee et al. IEEE Trans on Biomedical Engineering 2018;65(9).

12. Hammernik et al. Magn Reson Med 2018;79(6).

13. Schlemper et al. IEEE Trans Med Imaging 2018;37(2).

14. Aggarwal et al. IEEE Trans Med Imaging 2019;38(2).

15. Lee et al. Magn Reson Med 2016;76(6).

16. Akçakaya et al. Magn Reson Med 2019;81.

17. Eo et al. Magn Reson Med 2018;80(5).

18. El-Rewaidy et al. Magn Reson Med 2021;85(3).

19. Qin et al. IEEE Trans Med Imaging 2019;38(1).

20. Sandino et al. Proc Intl Soc Mag Reson Med 2021;29.

21. Sandino et al. Magn Reson Med 2021;85.

22. Küstner et al. Sci Rep 2020;10(1).

23. Biswas et al. Magn Reson Med 2019;82(1).

24. Hammernik et al. Proc ISMRM 27th Annu Meeting Exhibit 2019.

25. Qin et al. Lecture Notes in Computer Science (including subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics) 2019.

26. Ahmad et al. Magn Reson Med 2015;74(5).

27. Kingma et al. Proceedings of the 3rd International Conference on Learning Representations, San Diego, CA, United States 2015.

Figures

Fig. 1: (a) Proposed hybrid physics-based unrolled reconstruction network with interleaved (b) image $$$v_n$$$ and (c) k-space $$$u_n$$$ subnetworks. Complex-valued spatio-temporal convolutions are applied along the x-t and the k-t-coil dimensions. The DC layer of both branches receives the undersampled k-space and sampling mask, together with the currently reconstructed data in the respective branch. An MAE loss is applied to the reconstructed k-space and image to train the network end-to-end.

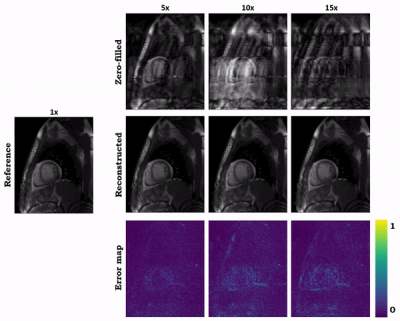

Fig. 2 (Animated): Reconstructions of a patient for retrospectively VISTA undersampled 2D CINE with accelerations R=5, R=10, and R=15 by the proposed hybrid network. The zero-filled input, reconstructed output and error maps to the fully-sampled reference image are shown.

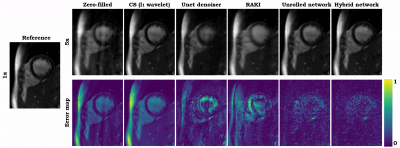

Fig. 3 (Animated): Reconstructions of a healthy subject for retrospectively VISTA undersampled 2D CINE (R=5). Results of zero-filled input, compressed sensing reconstruction (l1 wavelet), UNet denoiser9, modified RAKI, our physics-based unrolled network, and the proposed hybrid network are depicted in comparison to the fully-sampled reference image. Error maps show the deviation from the reference.

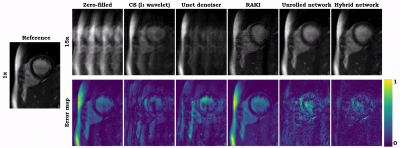

Fig. 4 (Animated): Reconstructions of a healthy subject for retrospectively VISTA undersampled 2D CINE (R=15). Results of zero-filled input, compressed sensing reconstruction (l1 wavelet), UNet denoiser9, modified RAKI, our physics-based unrolled network, and the proposed hybrid network are depicted in comparison to the fully-sampled reference image. Error maps show the deviation from the reference.

Fig. 5: Quantitative analysis in terms of peak signal-to-noise ratio (PSNR), structural similarity index (SSIM) and mean squared error (MSE) of the zero-filled, CS, UNet denoiser, modified RAKI, our physics-based unrolled network, and the proposed hybrid network reconstructions with respect to the fully-sampled reference. Results depict the mean (dot), median (horizontal line), 25% and 75% quantiles (boxes), standard deviation (whiskers) and outliers (diamonds) for the R=15 retrospectively undersampled 2D CINE.

DOI: https://doi.org/10.58530/2022/0953