0829

CycleSeg-v2: Improving unpaired MR-to-CT Synthesis and Segmentation with pseudo label and LPIPS loss

1Bio and Brain Engineering, Korea Advanced Institute of Science and Technology, Daejeon, Korea, Republic of, 2Department of Radiation Oncology, Samsung Medical Center, Seoul, Korea, Republic of

Synopsis

Radiotherapy treatment typically requires both CT and MRI as well as labor-intensive contouring for effective planning and treatment. Deep learning can enable an MR-only workflow by generating synthetic CT (sCT) and performing automatic segmentation on the MR data. However, MR and CT data are usually unpaired, and few contours are available for MR data. In this study, we proposed CycleSeg-v2, which extends the previously proposed CycleSeg to work with unpaired data. To ensure robust training, we employed the LPIPS loss in addition to pseudo labels. Experiments with data from prostate cancer patients showed that CycleSeg-v2 improved upon previous approaches.

Introduction

Radiotherapy treatment planning typically requires both CT and MRI for identifying the tumors and the surrounding organs as well as for radiation dose calculation. Manual, time-consuming contouring of relevant organs is also necessary for target delineation and treatment monitoring. Deep learning can generate both realistic synthetic CT (sCT)1,2 and segmentation3,4 from MR images to enable MR-only radiotherapy treatment planning, reducing the burden for both patients and clinicians. However, contouring is typically performed on the CT images, so often no contour is available for the MR images. Since MR and CT images are not acquired at the same time, previous approaches have used CycleGAN for unpaired training to generate sCT from MR images. This can introduce geometric distortion between the MRI and sCT images because cycle-consistent training alone does not penalize this error15. In this study, we proposed CycleSeg-v2, a CycleGAN-based network that performs both sCT synthesis and segmentation based on unpaired CT and MRI. To address the aforementioned obstacles, CycleSeg-v2 combines the Learned Perceptual Image Patch Similarity (LPIPS) loss5 with the pseudo-label approach15 to train with unpaired data.

Methods

Data: We utilized 115 sets of simulation CT scans of patients with prostate cancer. The simulation CT scans were performed with a GE Healthcare Discovery CT590 RT. The field of view (FOV) was 50 cm, the slice thickness between consecutive axial CT images was 2.5 mm, and the matrix size and in-plane resolution were 512×512 and 0.98×0.98 mm². Contours of organs of interest were manually annotated on the CT data. MRI scans were conducted immediately after the simulation CT scans with a Philips 3.0 T Ingenia MR. T2-weighted MR images were obtained with FOV = 400×400×200 mm³, matrix size = 960×960×80, and TR/TE = 6152 ms/100 ms. The data were manually inspected to remove those with unusual artifacts, resulting in 93 pairs of MRI/CT volumes. To process the data for training the networks, both MRI and CT volumes were resampled to a common 1.3×1.3×2.5 mm³ spacing, and the MRI volume was registered to the CT volume with an affine transformation in SimpleITK6-8. The central 352×352 pixels in the axial plane were cropped to remove the background. For intensity normalization, the Hounsfield units for CT were linearly mapped from [-1024, 3071] to the [-1, 1] range. The intensities of the MRI data were normalized by subtracting the mean, dividing by 2.5 times the standard deviation of the voxels inside the body, and clipping the normalized values to the [-1, 1] range. During training, MR and CT slices were randomly sampled from all training subjects.
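The intensity normalization described above can be sketched in NumPy as follows. This is a minimal illustration, not the authors' code: the function names and the `body_mask` argument are hypothetical, clipping CT values that fall outside [-1024, 3071] is an assumption, and the MRI mean is assumed to be computed over the same in-body voxels as the standard deviation.

```python
import numpy as np

HU_MIN, HU_MAX = -1024.0, 3071.0  # CT range stated in the text


def normalize_ct(hu):
    """Linearly map Hounsfield units from [HU_MIN, HU_MAX] to [-1, 1]."""
    hu = np.asarray(hu, dtype=np.float32)
    hu = np.clip(hu, HU_MIN, HU_MAX)  # assumption: out-of-range values are clipped
    return 2.0 * (hu - HU_MIN) / (HU_MAX - HU_MIN) - 1.0


def normalize_mri(volume, body_mask):
    """Subtract the mean, divide by 2.5 x std of in-body voxels, clip to [-1, 1].

    body_mask is a hypothetical boolean array marking voxels inside the body.
    """
    volume = np.asarray(volume, dtype=np.float32)
    body = volume[np.asarray(body_mask, dtype=bool)]
    scaled = (volume - body.mean()) / (2.5 * body.std())
    return np.clip(scaled, -1.0, 1.0)
```

With this scaling, MRI voxels more than 2.5 standard deviations from the in-body mean saturate at ±1, which keeps both modalities in the same [-1, 1] range.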

Networks:

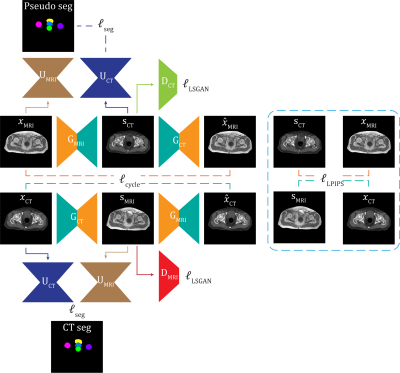

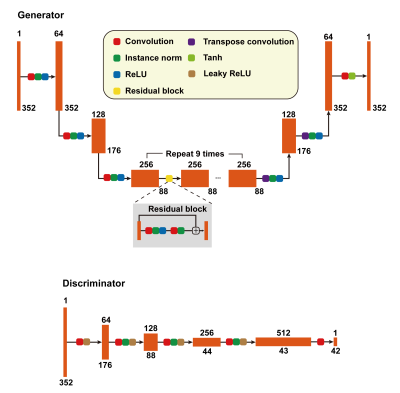

CycleSeg-v2 consists of a CycleGAN9 and 2 UNets10, whose structures are shown in Figures 1 and 2. Similar to Zhang et al.11 and Luu et al.15, CycleSeg-v2 enforces shape consistency through the segmentation networks to improve the synthesis. CycleSeg-v2 adopts the pseudo-label approach proposed in CycleSeg15: the UNet-CT is trained on sCT using the masks that the UNet-MRI generates from the input MR images. To reduce the geometric distortion caused by the unpaired training scheme, we used the LPIPS loss, which penalizes the perceptual distance between the input and the generated images as measured with a pretrained network (VGG16). The following loss is minimized during the training process:

$$\mathcal{L}(x_{MRI},x_{CT})=\mathcal{L}_{LSGAN}+10\mathcal{L}_{cycle}+\mathcal{L}_{seg}+\mathcal{L}_{LPIPS} \tag{1}$$
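As a rough illustration of how the terms in Equation 1 combine, the sketch below computes the weighted sum in NumPy. Only the weight of 10 on the cycle term comes from the text. The least-squares generator term and the L1 cycle term follow the usual CycleGAN formulation and are assumptions here, since the abstract does not spell them out; the segmentation and LPIPS terms are passed in as precomputed scalars, and all function names are hypothetical.

```python
import numpy as np

LAMBDA_CYCLE = 10.0  # weight on the cycle term in Equation 1


def lsgan_generator_loss(d_fake):
    """Least-squares GAN generator term (assumed form): push D(fake) toward 1."""
    d_fake = np.asarray(d_fake, dtype=np.float64)
    return float(np.mean((d_fake - 1.0) ** 2))


def cycle_loss(x, x_reconstructed):
    """L1 distance between an input and its cycle reconstruction (assumed form)."""
    x = np.asarray(x, dtype=np.float64)
    x_reconstructed = np.asarray(x_reconstructed, dtype=np.float64)
    return float(np.mean(np.abs(x - x_reconstructed)))


def total_loss(l_lsgan, l_cycle, l_seg, l_lpips):
    """Weighted sum of the four terms, matching Equation 1."""
    return l_lsgan + LAMBDA_CYCLE * l_cycle + l_seg + l_lpips
```

In an actual training loop these terms would be differentiable tensors in a deep learning framework; the NumPy version only shows the arithmetic of the weighting.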

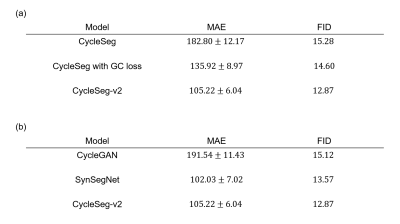

CycleSeg-v2 was compared with the baseline CycleGAN as well as SynSegNet12. To investigate the effect of the LPIPS loss, CycleSeg-v2 was also compared with CycleSeg, which does not have the LPIPS loss, and with a variant of CycleSeg that uses the gradient correlation loss13. Mean absolute error (MAE) in HU and Fréchet inception distance (FID)14 were used as quantitative metrics for sCT synthesis, and the 3D Dice coefficient was used to evaluate segmentation performance.
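For reference, the MAE and 3D Dice metrics named above can be computed as in the following NumPy sketch. The function names are hypothetical, the optional `body_mask` restriction for MAE is an assumption (the text does not say over which voxels MAE was averaged), and returning 1.0 when both masks are empty is a convention chosen here.

```python
import numpy as np


def mae_hu(sct_hu, ct_hu, body_mask=None):
    """Mean absolute error in HU between a synthetic CT and the real CT.

    body_mask (hypothetical, optional) restricts the average to selected voxels.
    """
    diff = np.abs(np.asarray(sct_hu, dtype=np.float64) - np.asarray(ct_hu, dtype=np.float64))
    if body_mask is not None:
        diff = diff[np.asarray(body_mask, dtype=bool)]
    return float(diff.mean())


def dice_3d(pred_mask, ref_mask):
    """3D Dice coefficient, 2|A and B| / (|A| + |B|), over whole binary volumes."""
    pred = np.asarray(pred_mask, dtype=bool)
    ref = np.asarray(ref_mask, dtype=bool)
    denom = int(pred.sum()) + int(ref.sum())
    if denom == 0:
        return 1.0  # convention assumed here: two empty masks agree perfectly
    return 2.0 * int(np.logical_and(pred, ref).sum()) / denom
```

Computing Dice over the full volume, rather than averaging per-slice scores, avoids undefined values on slices where an organ is absent.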

Results and Discussion

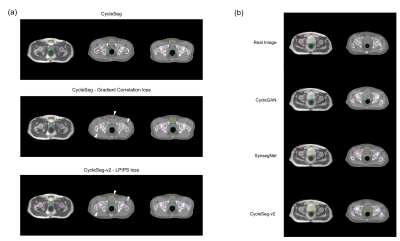

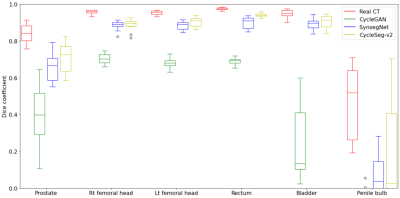

Table 1a and Figure 3a show the effect of the LPIPS loss in enforcing consistency between the input and the generated images. Adding the LPIPS loss in CycleSeg-v2 led to lower MAE and FID (105.22 and 12.87) than using no LPIPS loss (182.80 and 15.28) or the gradient correlation loss (135.92 and 14.60). Without any consistency loss, CycleSeg tended to produce sCT images that were slightly misaligned with the input MR images. Adding either the gradient correlation or the LPIPS loss largely mitigated this problem, but CycleSeg-v2 was better at generating correct CT intensities (white arrows). Table 1b compares CycleSeg-v2 with other approaches for generating sCT: CycleSeg-v2 had the lowest FID (12.87) and the second-lowest MAE (105.22). Figure 3b shows a representative real MR and CT slice with the sCT and segmentation generated by the 3 methods. The vanilla CycleGAN showed the misalignment problem and failed to segment the bladder. Both SynSegNet and CycleSeg-v2 showed better synthesis and segmentation performance, with slightly more accurate right femoral and rectum segmentation for CycleSeg-v2. Figure 4 shows the Dice scores for the 3 networks against a best-case reference, a UNet trained and tested on real CT. CycleSeg-v2 performed better than the other 2 networks.

Conclusion

CycleSeg-v2 incorporates the LPIPS loss and pseudo labels to enable simultaneous sCT synthesis and segmentation from MR images with unpaired training and no MR segmentation labels. The proposed method has the potential to streamline radiotherapy treatment planning with only MR acquisition.

Acknowledgements

No acknowledgement found.

References

1. Liu Y, Lei Y, Wang Y, Shafai-Erfani G et al. “Evaluation of a deep learning-based pelvic synthetic CT generation technique for MRI-based prostate proton treatment planning”. Phys. Med. Biol. 64 205022, 2019

2. Liu Y, Lei Y, Wang T, Kayode O et al. “MRI-based treatment planning for liver stereotactic body radiotherapy: validation of a deep learning-based synthetic CT generation method”. Br J Radiol. 2019 Aug;92(1100):20190067

3. S. Elguindi et al., "Deep learning-based auto-segmentation of targets and organs-at-risk for magnetic resonance imaging only planning of prostate radiotherapy," Phys Imaging Radiat Oncol, vol. 12, pp. 80-86, Oct 2019.

4. M. H. F. Savenije et al., "Clinical implementation of MRI-based organs-at-risk auto-segmentation with convolutional networks for prostate radiotherapy," Radiat Oncol, vol. 15, no. 1, p. 104, May 11 2020.

5. Zhang R, Isola P, Efros A, Shechtman E, Wang O, “The Unreasonable Effectiveness of Deep Features as a Perceptual Metric,” CVPR 2018.

6. R. Beare, B. C. Lowekamp, Z. Yaniv, "Image Segmentation, Registration and Characterization in R with SimpleITK", J Stat Softw, 86(8), 2018.

7. Z. Yaniv, B. C. Lowekamp, H. J. Johnson, R. Beare, "SimpleITK Image-Analysis Notebooks: a Collaborative Environment for Education and Reproducible Research", J Digit Imaging., 31(3): 290-303, 2018.

8. B. C. Lowekamp, D. T. Chen, L. Ibáñez, D. Blezek, "The Design of SimpleITK", Front. Neuroinform., 7:45, 2013.

9. Zhu J-Y, Park TS, Isola P, Efros A. "Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks", in IEEE International Conference on Computer Vision (ICCV), 2017.

10. Ronneberger O, Fischer P, Brox T. “U-Net: Convolutional Networks for Biomedical Image Segmentation”, Cham, Springer International Publishing, 2015.

11. Zhang Z, Yang L, Zheng Y. "Translating and Segmenting Multimodal Medical Volumes with Cycle- and Shape-Consistency Generative Adversarial Network." In Conference on Computer Vision and Pattern Recognition (CVPR), 2018

12. Huo, Y., Xu, Z., Moon, H., Bao, S., Assad, A., Moyo, T.K., Savona, M.R., Abramson, R.G., Landman, B.A.: SynSeg-Net: Synthetic Segmentation Without Target Modality Ground Truth. IEEE Transactions on Medical Imaging 38, 1016-1025 (2019)

13. Hiasa, Y., Otake, Y., Takao, M., Matsuoka, T., Takashima, K., Carass, A., Prince, J.L., Sugano, N., Sato, Y.: Cross-Modality Image Synthesis from Unpaired Data Using CycleGAN. pp. 31-41. Springer International Publishing, (2018)

14. Heusel M, Ramsauer H, Unterthiner T, Nessler B et al. “GANs Trained by a Two Time-Scale Update Rule Converge to a Local Nash Equilibrium”, in Advances in Neural Information Processing Systems (NIPS), 2017

15. Luu H.M et al., “CycleSeg: MR-to-CT synthesis and segmentation network for prostate radiotherapy treatment planning”. 30th Annual Meeting, ISMRM 2021. Program number 0813.

Figures