0686

Designing a Clinical Decision Support System for MRI Radiology Titles Using Machine Learning Techniques and Electronic Medical Records

¹Radiology, Stanford University, Stanford, CA, United States, ²GE Healthcare, Sunnyvale, CA, United States

Synopsis

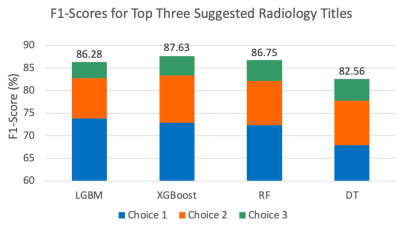

The use of inappropriate radiology protocols introduces a risk of missed and incomplete diagnoses, thus endangering patient health, potentially prolonging treatment, and increasing healthcare costs. A clinical decision support system based on machine learning and the electronic medical records of patients undergoing MRI was developed to predict radiology titles and their probabilities for radiologist review. A cumulative F1-score of ~85% was obtained for the top three predicted titles. The proposed system can guide physicians toward selecting appropriate titles and alert radiologists to potentially inappropriate selections, thereby improving imaging utility and increasing diagnostic accuracy, which favors better patient outcomes.

Introduction

Approximately 40 million MRI scans are performed annually in the USA [1]. Most studies examining machine learning (ML) applications in MRI have focused on developing algorithms for image acquisition and interpretation tasks, while few systems have been developed for pre-image acquisition tasks, such as protocol selection [2]. Exam-specific radiology protocols, in which a radiologist selects an anatomical region (e.g., abdomen), focus (e.g., liver), and pharmaceutical (e.g., intravenous contrast), are used to translate physician orders into radiological imaging tasks [3]. The selection of an appropriate radiology protocol enhances patient safety and experience, whereas inappropriate selection can produce incomplete diagnostic information, potentially jeopardizing health, delaying treatment, and increasing healthcare costs [2]. Accurate protocol selection is a time-consuming, cumbersome, and error-prone task [2].

To address this challenge, we developed a technique for predicting examination radiology titles, which are initially selected by referring physicians during radiology ordering and are the primary determinant used by radiologists for radiology protocol selection. The initially selected radiology titles, however, are frequently incorrect and are adjusted by radiologists. Prior efforts to address this problem have focused on text inputs [4,5] or limited their protocols to a specific subspecialty such as neuroradiology [2,3]. An intelligent system to predict these radiology titles could aid referring physicians in selecting them and/or alert radiologists to inconsistencies between the ordered radiology title and the rest of the patient’s electronic medical record (EMR).

Methods

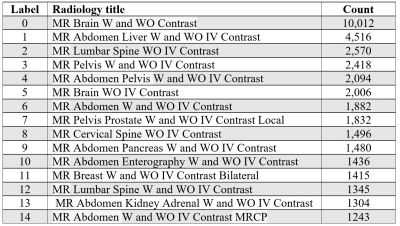

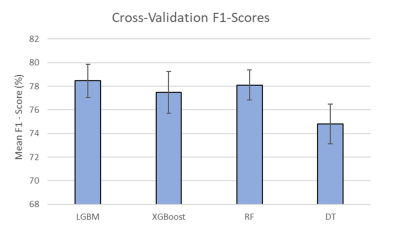

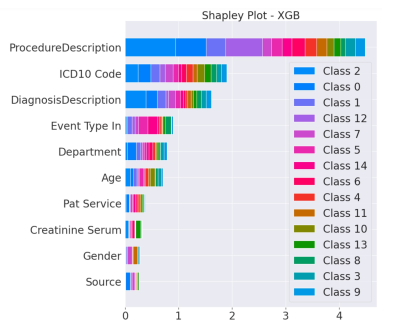

We used ML models [6] to develop a technique that utilizes patient EMRs to predict the most suitable radiology titles. We obtained the EMRs of patients undergoing MRI scans after 2017 for the abdomen or related conditions (e.g., metastasis), including additional scans of the brain, breast, abdomen, lumbar, and pelvic regions. The tabular dataset included EMR-extracted data relevant to protocol selection, such as admission information, demographic characteristics, diagnoses, laboratory findings, previous orders and procedures, and prior radiology findings. Selected attributes included age, race/ethnicity, height, weight, smoking history, insurance provider and type, ‘Event Type In’ (admit/transfer event), ‘Pat Service’ (type of provided hospital service), and ‘Source’ (diagnostic source, e.g., Professional Billing Code). Laboratory values were lemmatized to group similar clinical measurements (e.g., ‘urine creatinine’ and ‘creatinine, urine’). We then ranked the radiology titles by the number of times each was used in the entire dataset to identify the 15 most common radiology titles (Table 1).

In the next stage, patient data associated with the 15 most common titles were selected and extracted. The extracted data covered 20,117 patients, some of whom had multiple records, and formed the model input: 36,869 records of the selected titles, each described by 31 attributes. These counts reflect the exclusion of patients aged < 18 or > 90 years, duplicate records, and patients without any of the 15 most common radiology titles. The data were allocated by patient to training (80%) and test (20%) sets.

Four decision tree (DT)-based algorithms, namely light gradient boosting machine (LGBM), extreme gradient boosting (XGBoost), random forest (RF), and a single DT, were used for supervised learning because of their ability to model non-linear relationships in the EMRs [7]. Hyperparameters (maximum depth, learning rate, and number of leaves) were tuned using a grid search with five-fold cross-validation.
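The data preparation steps described above (grouping equivalent laboratory names, keeping the most common titles, and allocating records to training and test sets by patient) can be sketched as follows. This is a minimal illustration using only the Python standard library, not the pipeline used in this work: the field names `patient_id` and `title`, the toy title strings, and the helper names are all assumptions, and the token-sorting normalizer is a crude stand-in for lemmatization.

```python
import random
from collections import Counter


def normalize_lab_name(name):
    """Stand-in for lemmatization: lowercase, drop commas, sort the tokens."""
    tokens = name.lower().replace(",", " ").split()
    return " ".join(sorted(tokens))


def top_titles(records, k):
    """Return the k most frequently used radiology titles across all records."""
    counts = Counter(r["title"] for r in records)
    return [title for title, _ in counts.most_common(k)]


def split_by_patient(records, test_fraction=0.2, seed=0):
    """Assign whole patients to train or test so no patient appears in both."""
    patients = sorted({r["patient_id"] for r in records})
    random.Random(seed).shuffle(patients)
    n_test = max(1, round(len(patients) * test_fraction))
    test_ids = set(patients[:n_test])
    train = [r for r in records if r["patient_id"] not in test_ids]
    test = [r for r in records if r["patient_id"] in test_ids]
    return train, test


# Toy records; patient IDs and title strings are made up for illustration.
records = [
    {"patient_id": 1, "title": "MR ABDOMEN"},
    {"patient_id": 1, "title": "MR BRAIN"},
    {"patient_id": 2, "title": "MR ABDOMEN"},
    {"patient_id": 3, "title": "MR PELVIS"},
    {"patient_id": 4, "title": "MR ABDOMEN"},
    {"patient_id": 5, "title": "MR BRAIN"},
]
kept_titles = top_titles(records, k=2)  # the study keeps 15; 2 suits the toy data
selected = [r for r in records if r["title"] in kept_titles]
train_records, test_records = split_by_patient(selected, test_fraction=0.2)
```

Splitting on patient identifiers rather than on individual records keeps multiple records from the same patient out of both sets at once, which would otherwise risk inflating test performance.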
Model performance was evaluated with 10-fold cross-validation and reported as the F1-score, a metric appropriate for imbalanced data. Summary plots of Shapley Additive Explanations (SHAP) values were generated to explain the contribution of each feature to the prediction and to indicate the relative importance of each feature.
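To illustrate why the F1-score suits imbalanced title frequencies, the sketch below computes per-class F1 as 2TP / (2TP + FP + FN) and then averages it in two common ways. The abstract does not state which averaging was used, so both the macro and the support-weighted variants are shown as assumptions, and the function names are illustrative.

```python
from collections import Counter


def per_class_f1(y_true, y_pred):
    """Return {label: F1}, with F1 = 2*TP / (2*TP + FP + FN) for each label."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for truth, pred in zip(y_true, y_pred):
        if truth == pred:
            tp[truth] += 1
        else:
            fp[pred] += 1   # predicted label gains a false positive
            fn[truth] += 1  # true label gains a false negative
    scores = {}
    for label in set(y_true) | set(y_pred):
        denom = 2 * tp[label] + fp[label] + fn[label]
        scores[label] = 2 * tp[label] / denom if denom else 0.0
    return scores


def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1; every class counts equally."""
    scores = per_class_f1(y_true, y_pred)
    return sum(scores.values()) / len(scores)


def weighted_f1(y_true, y_pred):
    """Mean of per-class F1 weighted by the number of true samples per class."""
    scores = per_class_f1(y_true, y_pred)
    support = Counter(y_true)
    return sum(scores[label] * support[label] for label in scores) / len(y_true)


# A classifier that always predicts the majority class "A".
y_true = ["A"] * 8 + ["B"] * 2
y_pred = ["A"] * 10
accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
macro = macro_f1(y_true, y_pred)
weighted = weighted_f1(y_true, y_pred)
```

In this toy case the accuracy is 0.8 even though the minority class is never predicted, whereas the macro F1 drops to about 0.44 because the minority class contributes an F1 of zero.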

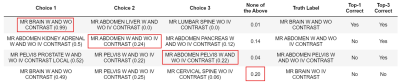

Finally, the tuned models were used to predict radiology titles and their probabilities. The pipeline produces a list of the top three radiology title suggestions along with their probabilities. We used F1-scores to evaluate the top-three selections.
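The top-three output described above can be sketched as a simple ranking over a model's per-title probabilities. This is an illustrative sketch only: the probability values and title strings are invented, and `ordered_title_needs_review` is a hypothetical rule (flag an order when the ordered title is not among the suggestions), not a rule stated in this work.

```python
def top_k_titles(probabilities, k=3):
    """Return the k (title, probability) pairs with the highest probability."""
    ranked = sorted(probabilities.items(), key=lambda item: item[1], reverse=True)
    return ranked[:k]


def ordered_title_needs_review(ordered_title, probabilities, k=3):
    """Hypothetical rule: flag the order if its title is not in the top k."""
    suggested = [title for title, _ in top_k_titles(probabilities, k)]
    return ordered_title not in suggested


# Invented class probabilities for a single exam order.
predicted = {
    "MR ABDOMEN": 0.55,
    "MR PELVIS": 0.25,
    "MR BRAIN": 0.12,
    "MR BREAST": 0.05,
    "MR LUMBAR SPINE": 0.03,
}
suggestions = top_k_titles(predicted)
for title, probability in suggestions:
    print(f"{title}: {probability:.2f}")
```

Any classifier that exposes class probabilities could feed such a ranking; the list of suggestions and their probabilities is what would be presented for radiologist review.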

Results and Discussion

For all models, we obtained an F1-score of approximately 78% in our cross-validation results (Fig. 1). SHAP plots indicated that Procedure Description and International Classification of Diseases (ICD) Code were the most important features; these features played significant roles in most models and correlated with routine protocols selected by radiologists (Fig. 2). The predicted titles and their probabilities were reported for radiologist review (Fig. 3). The most probable predicted radiology titles derived from these four models can be selected for radiologist review. We obtained a cumulative F1-score of 85% for the top three predicted titles (Fig. 4). The top three radiology titles, along with their probabilities, will be useful for selecting the most appropriate title in the context of a clinical decision support system. A threshold value can be defined to indicate the titles that require radiologist or technologist revision at a later stage.

Conclusions

We demonstrated that ML techniques can predict MRI radiology titles using EMR data and identified the most significant features in this process. The models predicted titles from a patient’s EMR data with a cumulative F1-score of approximately 85% for the top three predictions. The proposed approach introduces new avenues for guiding referring physicians toward correct radiology title selection and alerting radiologists and radiology technologists to inconsistencies between the radiology title in the exam order and the patient’s underlying conditions. When put into real-world use, we hope our approach will increase the accuracy of radiology protocol selection, and thereby improve imaging precision, reduce costs, and lead to better patient outcomes.

Acknowledgements

This work has been supported and funded by General Electric (GE) Healthcare.

References

1. Stewart C. MRI units per million: by country 2019. Statista. Oct 26, 2021:A1. https://www.statista.com/statistics/282401/density-of-magnetic-resonance-imaging-units-by-country/. Accessed November 1, 2021.

2. Brown A, Marotta T. A natural language processing-based model to automate MRI brain protocol selection and prioritization. Acad Radiol. 2017;24(2):160-166.

3. Lee Y. Efficiency improvement in a busy radiology practice: determination of musculoskeletal magnetic resonance imaging protocol using deep-learning convolutional neural networks. J Digit Imaging. 2018;31(5):604-610.

4. Kalra A, Chakraborty A, Fine B, et al. Machine learning for automation of radiology protocols for quality and efficiency improvement. J Am Coll Radiol. 2020;17(9):1149-1158.

5. López-Úbeda P, Díaz-Galiano M, Martín-Noguerol T, et al. Automatic medical protocol classification using machine learning approaches. Comput Meth Prog Bio. 2021;200:105939.

6. Ferreira A, Figueiredo M. Boosting Algorithms: A Review of Methods, Theory, and Applications. In: Zhang C, Ma Y (eds) Ensemble Machine Learning. Springer, Boston, MA, USA; 2012.

7. Klug M, Barash Y, Bechler S, et al. A gradient boosting machine learning model for predicting early mortality in the emergency department triage: devising a nine-point triage score. J Gen Intern Med. 2020;35(1):220-227.

Figures