0598

AI-based Automated Liver Image Prescription: Evaluation across Patients and Pathologies and Prospective Implementation and Validation

1Radiology, University of Wisconsin-Madison, Madison, WI, United States, 2Medical Physics, University of Wisconsin-Madison, Madison, WI, United States, 3Electrical and Computer Engineering, University of Wisconsin-Madison, Madison, WI, United States, 4Radiology and Nuclear Medicine, Universität zu Lübeck, Lübeck, Germany, 5Medicine, University of Wisconsin-Madison, Madison, WI, United States, 6Emergency Medicine, University of Wisconsin-Madison, Madison, WI, United States, 7Biomedical Engineering, University of Wisconsin-Madison, Madison, WI, United States

Synopsis

An automated AI-based method for liver image prescription from a localizer was recently proposed. In this work, the method was further evaluated in a larger retrospective patient cohort (1,039 patients for training/testing), across pathologies and field strengths, and against radiologists’ inter-reader reproducibility. AI-based 3D axial prescription achieved an S/I shift of <2.3 cm relative to manual prescription for 99.5% of the test datasets. The AI method performed well across all sub-cohorts and, for 3D axial prescription, better than radiologists’ inter-reader reproducibility. The AI method was successfully implemented on a clinical MR system and showed robust performance across localizer sequences.

Introduction

Artificial intelligence (AI) methods have enabled automated image prescription in the spine, heart, and brain1–3, with the potential to improve workflow, efficiency, and prescription accuracy and consistency4,5. However, AI-based image prescription for the liver remains an unmet need. Recent work6 has shown the potential of AI-based automated prescription for liver MRI in a limited number of datasets. The purpose of this work was to further develop and validate this AI method in a larger population, across sub-cohorts of patients and training sizes, to compare its performance with manual labeling, and to validate its prospective implementation.

Methods

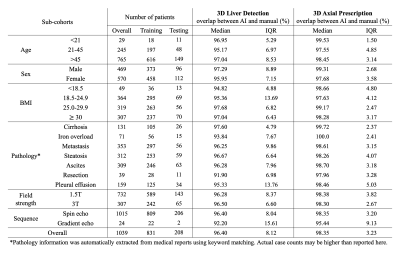

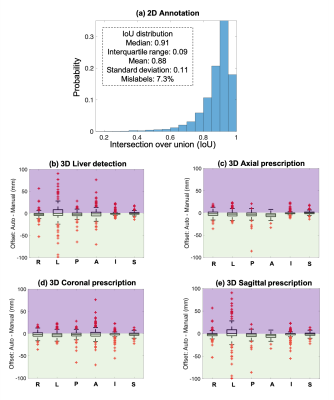

Data: In this IRB-approved study, data (localizer images) from 1,039 patient exams (composition shown in Table 1) were retrieved retrospectively, with a waiver of informed consent.

Manual labeling and inter-reader reproducibility: Six board-certified abdominal radiologists manually annotated seven classes of labels using bounding boxes that span the liver, torso, and arms to enable liver image prescription in any orientation (Figure 1). Furthermore, we conducted inter-reader reproducibility studies.

Training: We trained a convolutional neural network (CNN) based on the YOLOv3 architecture for detection and classification to estimate coordinates of the aforementioned bounding boxes10,11. Different training dataset sizes (5%-100% of the 831 training datasets) were used for training. Finally, a shallower network (YOLOv3-tiny) was trained for real-time scanner implementation.

3D image prescription: Based on the detected bounding boxes for each slice in the localizer, image prescription for whole-liver acquisition in each orientation was calculated as the minimum 3D bounding box needed to cover all the labeled 2D bounding boxes in the required volume (Figure 1). For example, 3D axial prescription covers the torso in A/P, R/L dimensions and the liver in S/I dimension.
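The minimum-enclosing-box step can be illustrated with a minimal sketch. It assumes that each detected 2D box has already been mapped into patient coordinates (in cm) as an axis-aligned box whose through-plane extent is just the slice position; the `Box3D` type and the function names are hypothetical and are not taken from the authors' implementation.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple

Span = Tuple[float, float]  # (min, max) along one patient axis, in cm


@dataclass(frozen=True)
class Box3D:
    """Axis-aligned box in patient coordinates (hypothetical helper type)."""
    rl: Span  # right/left extent
    ap: Span  # anterior/posterior extent
    si: Span  # superior/inferior extent


def enclosing_box(boxes: Iterable[Box3D]) -> Box3D:
    """Smallest axis-aligned box that covers every box in `boxes`."""
    boxes = list(boxes)
    if not boxes:
        raise ValueError("at least one detected box is required")

    def span(axis: str) -> Span:
        lows = [getattr(b, axis)[0] for b in boxes]
        highs = [getattr(b, axis)[1] for b in boxes]
        return (min(lows), max(highs))

    return Box3D(span("rl"), span("ap"), span("si"))


def axial_prescription(torso_boxes: Iterable[Box3D],
                       liver_boxes: Iterable[Box3D]) -> Box3D:
    """Axial volume: torso extent in R/L and A/P, liver extent in S/I."""
    torso = enclosing_box(torso_boxes)
    liver = enclosing_box(liver_boxes)
    return Box3D(rl=torso.rl, ap=torso.ap, si=liver.si)
```

In this sketch a box detected on a coronal slice contributes real R/L and S/I extents and a degenerate A/P extent; boxes from the other two planes fill in the remaining dimension.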

Evaluation: The performance of automated 2D object detection for each of the seven classes of labels was measured by intersection over union (IoU). To evaluate the performance of subsequent automated 3D prescription, the mismatch with manual prescription for each of the six edges of the 3D box was calculated. The performance was evaluated on the remaining 20% of all datasets across patients, pathologies, field strengths and sequences, with increasing training size, and against inter-reader reproducibility results.
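For reference, the two metrics named above can be sketched as follows. The box layouts (corner tuples for 2D boxes, a per-axis dictionary for 3D boxes) and the sign convention (automated minus manual) are assumptions made for illustration only.

```python
from typing import Dict, Tuple

Box2D = Tuple[float, float, float, float]   # (x_min, y_min, x_max, y_max)
Box3D = Dict[str, Tuple[float, float]]      # axis name -> (min, max), in cm


def iou_2d(a: Box2D, b: Box2D) -> float:
    """Intersection over union of two axis-aligned 2D boxes."""
    overlap_x = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    overlap_y = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    intersection = overlap_x * overlap_y
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


def edge_mismatch(auto: Box3D, manual: Box3D) -> Dict[str, float]:
    """Signed difference (automated minus manual) for each of the six edges."""
    return {
        f"{axis}_{name}": auto[axis][i] - manual[axis][i]
        for axis in ("rl", "ap", "si")
        for i, name in enumerate(("min", "max"))
    }
```

An IoU of 1 means the predicted and manual boxes coincide, while 0 means they do not overlap at all.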

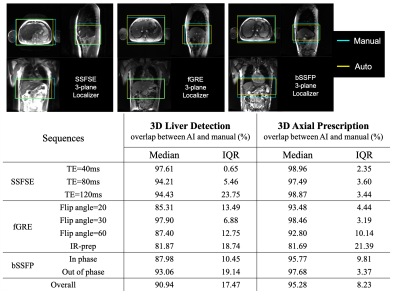

Online implementation & prospective study: The automated prescription was implemented on a 3T MR system (GE MR750), and evaluated prospectively on six healthy volunteers using a variety of localizer pulse sequences (SSFSE, fGRE, bSSFP) and acquisition parameters.

Results

Training with 80% of all data required ~40 hours on an NVIDIA Tesla V100 GPU to reach a mean average precision (mAP) of 91% at an IoU threshold of 0.5. For testing, object detection for one three-plane localizer (~30 images) on a GPU required ~0.3 seconds.

Figure 2 shows the accuracy of 2D (a) and 3D detection (b), and prescription (c-e). The histograms of IoU for each of the seven classes are qualitatively similar, with median IoU >0.91 and interquartile range <0.09. For 3D liver detection, 93% ± 9% of the manual volume was included in the automated prescription, while 3D axial prescription had 97% ± 3% inclusion, and 3D coronal and sagittal prescription had 96% ± 6% and 95% ± 7% inclusion, respectively. The shift in 3D axial prescription was less than 2.3 cm in the S/I dimension for 99.5% of the test datasets. This indicates that the addition of a narrow safety margin would ensure complete liver coverage in effectively all patients.
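The inclusion figures above describe the fraction of the manual volume that falls inside the automated volume. A minimal sketch of that ratio for axis-aligned boxes is shown below; the representation of a box as three `(min, max)` spans and the function name are assumptions for illustration.

```python
from typing import Sequence, Tuple

Span = Tuple[float, float]  # (min, max) along one axis, in cm


def inclusion_fraction(auto: Sequence[Span], manual: Sequence[Span]) -> float:
    """Fraction of the manual box volume that lies inside the automated box."""
    intersection = 1.0
    for (a_lo, a_hi), (m_lo, m_hi) in zip(auto, manual):
        overlap = min(a_hi, m_hi) - max(a_lo, m_lo)
        if overlap <= 0:
            return 0.0  # boxes do not overlap along this axis
        intersection *= overlap

    manual_volume = 1.0
    for m_lo, m_hi in manual:
        manual_volume *= (m_hi - m_lo)
    if manual_volume <= 0:
        raise ValueError("manual box must have positive volume")
    return intersection / manual_volume
```

Unlike IoU, this ratio does not penalize an automated box that is larger than the manual one, which matches the clinical goal of complete coverage.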

AI-based liver prescription for 3D liver detection and 3D axial prescription maintained high performance across sub-cohorts in age, sex, BMI, pathology, and acquisition field strength and sequence (Table 1). High overlap (>91%) between AI and manual labeling for 3D liver detection and 3D axial prescription was observed across all sub-cohorts.

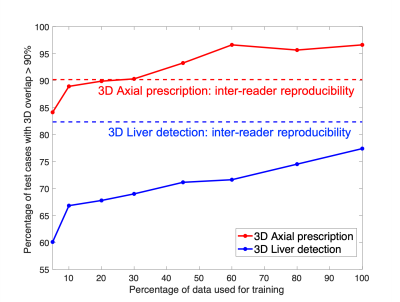

The performance of AI-based prescription improved with training dataset size (Figure 3), and was comparable to or better than inter-reader reproducibility for 3D axial prescription. For axial prescription, the inter-reader reproducibility study yielded a buffer of 2.4 cm in the S/I dimension that would cover 99.5% of the test patients, on par with the AI-based method.
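One way to read the buffer values quoted above is as the smallest S/I margin whose magnitude is not exceeded in a target fraction of cases. The sketch below uses a simple empirical quantile on absolute shifts; this is an assumed definition for illustration, since the abstract does not state how the buffer was computed.

```python
import math
from typing import Sequence


def margin_for_coverage(shifts_cm: Sequence[float], coverage: float) -> float:
    """Smallest margin m such that |shift| <= m in at least `coverage` of cases."""
    if not shifts_cm:
        raise ValueError("at least one shift is required")
    if not 0.0 < coverage <= 1.0:
        raise ValueError("coverage must be in (0, 1]")
    magnitudes = sorted(abs(s) for s in shifts_cm)
    needed = math.ceil(coverage * len(magnitudes))  # number of cases to cover
    return magnitudes[needed - 1]


def fraction_covered(shifts_cm: Sequence[float], margin_cm: float) -> float:
    """Fraction of cases whose |shift| is within the given margin."""
    return sum(abs(s) <= margin_cm for s in shifts_cm) / len(shifts_cm)
```

Applying `margin_for_coverage` to AI-versus-manual shifts and to reader-versus-reader shifts with the same coverage target would give two margins that can be compared directly.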

We successfully implemented the AI-based automated image prescription method with the full and tiny YOLOv3 networks on an MRI system at our site. Automated image prescription for one three-plane localizer on the console CPU required ~10 seconds with the full network and ~3 seconds with the tiny network. AI-based 3D axial prescription showed promising prospective performance (similar to that of the retrospective study) across the three localizer sequences acquired (Figure 4), except for fGRE with IR-prep. The AI method performed best for spin-echo acquisitions.

Discussion

This work demonstrated excellent performance of AI-based automated liver image prescription across patients, pathologies, and field strengths, as well as successful scanner implementation. Overall, the proposed AI method has the potential to standardize liver MR prescription. This study has several limitations, particularly the use of data from a single center and a single vendor and the limited number of subjects in the prospective study.

Conclusion

AI-based automated liver prescription has excellent performance across patients, pathologies, and field strengths, and against radiologists’ inter-reader reproducibility performance. Further, we demonstrated the successful evaluation on an MR system. This method has the potential to advance the development of fully free-breathing, single button-push MRI of the liver.

Acknowledgements

We would like to thank Daryn Belden, Wendy Delaney, and Prof. John Garrett from UW Radiology for their assistance with data retrieval, and Dan Rettmann, Lloyd Estkowski, Naeim Bahrami, Ersin Bayram, and Ty Cashen from GE Healthcare for their assistance with implementation of our AI-based liver image prescription on one of the GE scanners at the University of Wisconsin Hospital. We also wish to acknowledge GE Healthcare and Bracco, which provide research support to the University of Wisconsin. Dr. Oechtering receives funding from the German Research Foundation (OE 746/1-1). Dr. Reeder is a Romnes Faculty Fellow and has received an award provided by the University of Wisconsin-Madison Office of the Vice Chancellor for Research and Graduate Education with funding from the Wisconsin Alumni Research Foundation.

References

1. GE Healthcare’s AIRx™ Tool Accelerates Magnetic Resonance Imaging. Intel. Accessed December 15, 2020. https://www.intel.com/content/www/us/en/artificial-intelligence/solutions/gehc-airx.html

2. Barral JK, Overall WR, Nystrom MM, et al. A novel platform for comprehensive CMR examination in a clinically feasible scan time. J Cardiovasc Magn Reson. 2014;16(Suppl 1):W10. doi:10.1186/1532-429X-16-S1-W10

3. De Goyeneche A, Peterson E, He JJ, Addy NO, Santos J. One-Click Spine MRI. 3rd Med Imaging Meets NeurIPS Workshop Conf Neural Inf Process Syst NeurIPS 2019 Vanc Can.

4. Itti L, Chang L, Ernst T. Automatic scan prescription for brain MRI. Magn Reson Med. 2001;45(3):486-494. doi:10.1002/1522-2594(200103)45:3<486::AID-MRM1064>3.0.CO;2-#

5. Lecouvet FE, Claus J, Schmitz P, Denolin V, Bos C, Berg BCV. Clinical evaluation of automated scan prescription of knee MR images. J Magn Reson Imaging. 2009;29(1):141-145. doi:10.1002/jmri.21633

6. Geng R, Sundaresan M, Starekova J, et al. Automated Image Prescription for Liver MRI using Deep Learning. Int Soc Magn Reson Med Annu Meet 2021 Virtual Meet.

7. Chandarana H, Feng L, Block TK, et al. Free-Breathing Contrast-Enhanced Multiphase MRI of the Liver Using a Combination of Compressed Sensing, Parallel Imaging, and Golden-Angle Radial Sampling. Invest Radiol. 2013;48(1). doi:10.1097/RLI.0b013e318271869c

8. Choi JS, Kim MJ, Chung YE, et al. Comparison of breathhold, navigator-triggered, and free-breathing diffusion-weighted MRI for focal hepatic lesions. J Magn Reson Imaging. 2013;38(1):109-118. doi:10.1002/jmri.23949

9. Zhao R, Zhang Y, Wang X, et al. Motion-robust, high-SNR liver fat quantification using a 2D sequential acquisition with a variable flip angle approach. Magn Reson Med. 2020;84(4):2004-2017. doi:10.1002/mrm.28263

10. Redmon J, Divvala S, Girshick R, Farhadi A. You Only Look Once: Unified, Real-Time Object Detection. ArXiv150602640 Cs. Published online May 9, 2016. Accessed December 15, 2020. http://arxiv.org/abs/1506.02640

11. Pang S, Ding T, Qiao S, et al. A novel YOLOv3-arch model for identifying cholelithiasis and classifying gallstones on CT images. PloS One. 2019;14(6):e0217647. doi:10.1371/journal.pone.0217647

Figures