0555

ResoNet: Physics Informed Deep Learning based Off-Resonance Correction Trained on Synthetic Data

1 Electrical Engineering and Computer Sciences, University of California, Berkeley, Berkeley, CA, United States; 2 International Computer Science Institute, University of California, Berkeley, Berkeley, CA, United States

Synopsis

We propose a physics-inspired, unrolled deep-learning framework for off-resonance correction. Our forward model includes coil sensitivities, multiple frequency bins, and non-uniform Fourier transforms, and is therefore compatible with fat/water imaging and parallel-imaging acceleration. The network, which includes data-consistency terms and CNN modules serving as proximal operators, is trained end-to-end using only synthetic random field maps, coil sensitivities, and noise-like images whose statistics (smoothness) mimic natural signals. Our aim is to train the network to reverse off-resonance irrespective of the type of imaging, so that it generalizes to any anatomy and contrast without retraining. We demonstrate initial results in simulation, phantom, and in-vivo data.

Introduction

Non-Cartesian trajectories provide rapid imaging [1] and robustness to patient motion [2], but they can be susceptible to off-resonance blurring, especially when readout durations are long. Several analytical methods have been developed for off-resonance correction, but most are computationally slow and require accurate field-map estimates, often obtained from additional scans. Recent works have considered deep-learning solutions [3–5] that use convolutional neural networks (CNNs) to deblur images directly or to estimate field maps. However, these methods are trained on real MRI data and do not leverage the physics behind off-resonance blurring.

We propose a physics-inspired, unrolled deep-learning framework for off-resonance correction. Our model enforces data consistency with a forward model that includes coil sensitivities, multiple frequency bins, and non-uniform Fourier transforms. The model is trained end-to-end using only synthetic random field maps, coil sensitivities, and noise-like images, with the aim of learning to reverse off-resonance irrespective of the type of imaging and hence generalizing to any anatomy and contrast without retraining.

Methods

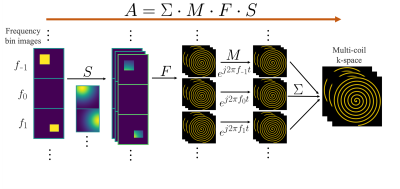

Forward model definition

We aim to solve for $$$x$$$ such that $$$A(x)=y$$$, where $$$A$$$ is the forward model, $$$y$$$ the multi-coil k-space measurements, and $$$x$$$ the clean image at each frequency bin. We approximate the forward model as follows (Figure 1): $$A=\Sigma\cdot M\cdot F\cdot S,$$ where $$$M=\exp{(j\cdot2\pi\cdot f\cdot t)}$$$ modulates the k-space of the bin at frequency $$$f$$$, with $$$t$$$ the acquisition time of each k-space sample.

We model the image as a stack of sharp images at different resonant frequencies around the center Larmor frequency, each containing the region of the object that is resonant at that frequency. The coil images for each frequency bin are obtained by multiplication with the coil sensitivity maps ($$$S$$$). A density-compensated NUFFT ($$$F$$$) then computes the non-Cartesian k-space of each bin, which is modulated at the corresponding frequency ($$$M$$$). Finally, the differently modulated k-spaces are summed ($$$\Sigma$$$) to obtain the complete acquired multi-coil k-space.
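Written per coil $$$c$$$ and k-space sample $$$s$$$, the operator chain reads $$y_c(t_s)=\sum_b e^{j2\pi f_b t_s}\sum_{\mathbf{r}}S_c(\mathbf{r})\,x_b(\mathbf{r})\,e^{-j2\pi\mathbf{k}_s\cdot\mathbf{r}}.$$ Below is a minimal NumPy sketch of $$$A$$$ and $$$A^H$$$ under simplifying assumptions: an explicit non-uniform DFT matrix stands in for the NUFFT, density compensation is omitted, and random k-space locations replace a real spiral. The helper names (`nudft_matrix`, `forward`, `adjoint`) are hypothetical and not the authors' code. The last lines run a dot-product test, a standard check that the adjoint is consistent with the forward operator.

```python
import numpy as np


def nudft_matrix(ktraj, n):
    """Explicit non-uniform DFT matrix: a slow stand-in for a NUFFT.

    ktraj: (ns, 2) k-space sample locations in cycles per FOV; image is n x n.
    """
    coords = np.arange(n) - n // 2
    yy, xx = np.meshgrid(coords, coords, indexing="ij")
    r = np.stack([yy.ravel(), xx.ravel()], axis=1)        # (n*n, 2) pixel positions
    return np.exp(-2j * np.pi * (ktraj @ r.T) / n)          # (ns, n*n)


def forward(x, sens, E, freqs, t):
    """A = Sigma . M . F . S for multi-bin images x of shape (nb, n, n)."""
    nb, nc = x.shape[0], sens.shape[0]
    coil_imgs = sens[None] * x[:, None]                     # S: (nb, nc, n, n)
    k = coil_imgs.reshape(nb, nc, -1) @ E.T                 # F: (nb, nc, ns), no density compensation
    mod = np.exp(2j * np.pi * freqs[:, None] * t[None])     # M: (nb, ns)
    return np.sum(k * mod[:, None, :], axis=0)              # Sigma: (nc, ns)


def adjoint(y, sens, E, freqs, t):
    """A^H: demodulate per bin, adjoint NUDFT, coil-combine with conj(S)."""
    n = sens.shape[-1]
    mod = np.exp(-2j * np.pi * freqs[:, None] * t[None])    # M^H: (nb, ns)
    k = y[None] * mod[:, None, :]                           # (nb, nc, ns)
    imgs = (k @ E.conj()).reshape(len(freqs), -1, n, n)     # F^H: (nb, nc, n, n)
    return np.sum(np.conj(sens)[None] * imgs, axis=1)       # S^H: (nb, n, n)


# Toy problem: 11 bins from -200 Hz to 200 Hz, 10.72 ms readout, random k-space samples.
rng = np.random.default_rng(0)
n, nc, ns = 8, 3, 60
freqs = np.linspace(-200.0, 200.0, 11)
t = np.linspace(0.0, 10.72e-3, ns)
ktraj = rng.uniform(-n / 2, n / 2, size=(ns, 2))
E = nudft_matrix(ktraj, n)
sens = rng.standard_normal((nc, n, n)) + 1j * rng.standard_normal((nc, n, n))
x = rng.standard_normal((11, n, n)) + 1j * rng.standard_normal((11, n, n))
y = forward(x, sens, E, freqs, t)

# Dot-product test: <A x, v> must equal <x, A^H v> for a correct adjoint.
v = rng.standard_normal((nc, ns)) + 1j * rng.standard_normal((nc, ns))
print(np.isclose(np.vdot(y, v), np.vdot(x, adjoint(v, sens, E, freqs, t))))
```

Applying `adjoint` to measured k-space produces the stack of images demodulated at each bin frequency, which is the input the network starts from.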

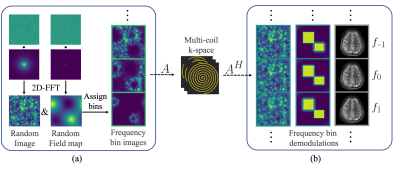

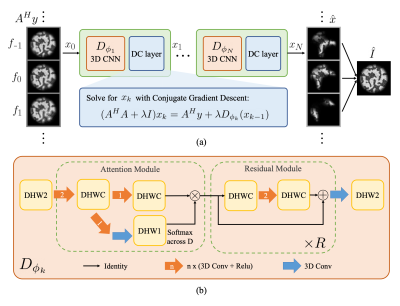

This problem is ill-posed, so we incorporate a neural network as a regularizer. We take a MoDL-inspired [6] approach: an unrolled iterative model trained end-to-end (Figure 3a). The input to our model is the stack of images demodulated at multiple frequencies, obtained by applying the adjoint operator $$$A^H$$$ to the raw multi-coil k-space. Each region of the object is sharp in the frequency bin matching its local resonant frequency, while the off-resonant regions appear blurred on top of it (Figure 2b). The objective of our model is to recover a sharp image at each frequency bin, as well as a final combined image free of off-resonance blurring.
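In a MoDL-style unroll, each iteration applies the CNN $$$D$$$ and then enforces data consistency by solving $$(A^HA+\lambda I)\,x_{k+1}=A^Hy+\lambda D(x_k)$$ with conjugate gradients, which is the minimizer of $$$\|Ax-y\|^2+\lambda\|x-D(x_k)\|^2$$$. The sketch below assumes generic callables `A` and `AH` for any linear operator and its adjoint, and a placeholder `denoiser` in place of the trained CNN; the defaults mirror the 4 unrolls, 12 CG iterations, and $$$\lambda=1$$$ reported in the experiments. Function names are illustrative, not taken from the authors' implementation.

```python
import numpy as np


def conjugate_gradient(normal_op, rhs, x0, n_iter=12, tol=1e-10):
    """Solve normal_op(x) = rhs for a Hermitian positive-definite operator."""
    x = x0.copy()
    r = rhs - normal_op(x)
    p = r.copy()
    rs_old = np.vdot(r, r).real
    stop = tol**2 * np.vdot(rhs, rhs).real       # relative stopping threshold
    for _ in range(n_iter):
        if rs_old <= stop:                       # converged
            break
        Ap = normal_op(p)
        alpha = rs_old / np.vdot(p, Ap).real
        x = x + alpha * p
        r = r - alpha * Ap
        rs_new = np.vdot(r, r).real
        p = r + (rs_new / rs_old) * p
        rs_old = rs_new
    return x


def unrolled_recon(A, AH, y, denoiser, n_unrolls=4, lam=1.0, n_cg=12):
    """Alternate a learned prior step with a CG data-consistency step."""
    AHy = AH(y)
    x = AHy.copy()                               # start from the adjoint image

    def normal_op(v):
        return AH(A(v)) + lam * v                # (A^H A + lam I) v

    for _ in range(n_unrolls):
        z = denoiser(x)                          # CNN proximal step (placeholder here)
        x = conjugate_gradient(normal_op, AHy + lam * z, x, n_iter=n_cg)
    return x


# Check on a small dense problem with an identity "denoiser" and one unroll.
rng = np.random.default_rng(1)
Amat = rng.standard_normal((20, 10)) + 1j * rng.standard_normal((20, 10))
y = rng.standard_normal(20) + 1j * rng.standard_normal(20)
x_hat = unrolled_recon(lambda v: Amat @ v, lambda v: Amat.conj().T @ v, y,
                       denoiser=lambda v: v, n_unrolls=1, n_cg=50)
```

With an identity denoiser and a single unroll the update reduces to a regularized least-squares solve, so it can be checked against a dense solver; in the trained model, `denoiser` is the 3D CNN and gradients flow through all unrolls during end-to-end training.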

CNN architecture

The 3D CNN architecture is depicted in Figure 3b. The network takes the complex images obtained by applying $$$A^H$$$ to the multi-coil k-space, with the real and imaginary parts as separate channels. Convolutions span both the image dimensions and the frequency-bin dimension. We combine an attention module across frequencies with residual modules [7]. The attention module lets the network focus, at each spatial location, on features from a particular frequency range. The attention module is followed by $$$R$$$ residual blocks, and a final convolution outputs the cleaner complex images at each frequency.
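The channel layout and the frequency attention can be illustrated with NumPy alone. The abstract does not specify how the attention weights are computed, so this sketch assumes a softmax over the frequency-bin axis at every pixel, which makes the weights at each location a distribution over bins. The attention logits are passed in directly rather than produced by learned convolutions, and all function names are hypothetical.

```python
import numpy as np


def complex_to_channels(x):
    """(nb, n, n) complex -> (2, nb, n, n) real, with real/imag as input channels."""
    return np.stack([x.real, x.imag], axis=0)


def channels_to_complex(c):
    """(2, nb, n, n) real -> (nb, n, n) complex."""
    return c[0] + 1j * c[1]


def frequency_attention(features, logits):
    """Softmax over the frequency-bin axis at each pixel, then reweight features.

    features: (ch, nb, n, n); logits: (nb, n, n). In a trained network the logits
    would come from learned 3D convolutions; here they are simply given.
    """
    logits = logits - logits.max(axis=0, keepdims=True)   # numerical stability
    weights = np.exp(logits)
    weights /= weights.sum(axis=0, keepdims=True)          # sums to 1 over bins
    return features * weights[None], weights


rng = np.random.default_rng(2)
x = rng.standard_normal((11, 16, 16)) + 1j * rng.standard_normal((11, 16, 16))
feats = complex_to_channels(x)
attended, att = frequency_attention(feats, rng.standard_normal((11, 16, 16)))
```

Because the weights form a per-pixel distribution over bins, they have the same form as frequency-bin weights derived from a field map, which is what allows the attention maps to be supervised directly.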

Training data simulation

Inspired by [8], all training data are synthetic: random field maps, coil sensitivities, and noise-like images whose statistics (smoothness) mimic natural signals. Random images and field maps are generated by applying a 2D FFT to exponentially weighted random complex data, where the weighting radius controls the level of smoothness. Similarly, random sensitivity maps are generated from weighted random SPIRiT kernels [9]. The per-bin images $$$x$$$ are obtained by splitting a random image $$$I$$$ into the bins according to a random field map $$$F$$$ and the bin frequency values. The sensitivity maps and $$$x$$$ are then passed through the forward model to obtain the multi-coil k-space $$$y$$$.
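A minimal sketch of the image and field-map part of this recipe follows. The exact exponential weighting and the rule for splitting $$$I$$$ across bins are not given in the abstract, so both are assumptions here: the weight is $$$e^{-|k|/r}$$$ with radius $$$r$$$, and each pixel is shared between its two nearest bins with linear-interpolation weights, which guarantees $$$\sum_i x_{f_i}=I$$$. The SPIRiT-kernel sensitivity maps are not reproduced, and the function names are hypothetical.

```python
import numpy as np


def random_smooth_image(n, radius, rng):
    """Noise-like image: 2D FFT of exponentially weighted random complex data.

    The weight exp(-|k| / radius) is an assumed form; a smaller radius
    suppresses high frequencies more strongly, giving a smoother image.
    """
    k = np.fft.fftfreq(n) * n                          # integer frequency indices
    ky, kx = np.meshgrid(k, k, indexing="ij")
    weight = np.exp(-np.sqrt(kx**2 + ky**2) / radius)
    noise = rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n))
    return np.fft.fft2(weight * noise)


def split_into_bins(image, fieldmap, freqs):
    """Spread each pixel over its two nearest (uniformly spaced) bins using linear
    interpolation weights (an assumed scheme); the bins then sum back to `image`."""
    fm = np.clip(fieldmap, freqs[0], freqs[-1])
    pos = (fm - freqs[0]) / (freqs[1] - freqs[0])      # fractional bin index
    lo = np.clip(np.floor(pos).astype(int), 0, len(freqs) - 2)
    frac = pos - lo
    rows, cols = np.indices(image.shape)
    weights = np.zeros((len(freqs),) + image.shape)
    weights[lo, rows, cols] = 1.0 - frac
    weights[lo + 1, rows, cols] += frac
    return weights * image[None], weights


rng = np.random.default_rng(3)
freqs = np.linspace(-200.0, 200.0, 11)                 # 11 bins, 40 Hz apart
I = random_smooth_image(64, radius=4.0, rng=rng)
field = random_smooth_image(64, radius=2.0, rng=rng).real
field = 200.0 * field / np.abs(field).max()            # scale to [-200, 200] Hz
x, bin_weights = split_into_bins(I, field, freqs)
```

The returned `bin_weights` are one possible form of the field-map-derived frequency-bin weights; `x` together with sensitivity maps would then be passed through the forward model to produce `y`.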

This provides all the elements needed to supervise the model. The per-bin output $$$\hat{x}$$$ can be supervised with the ground truth $$$x$$$, and the combined output image $$$\hat{I}=\sum_i\hat{x}_{f_i}$$$ can be supervised with $$$I$$$. Additionally, learning can be aided by supervising the attention maps with the frequency-bin weights obtained from the field map.
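The supervision described above can be written as a sum of L1 terms (the experiments report L1 losses). The relative weighting of the three terms is not stated, so equal weights are assumed here and the attention term is optional. This NumPy version only evaluates the loss; in training it would be expressed in an autodiff framework. The function names are hypothetical.

```python
import numpy as np


def l1(a, b):
    """Mean absolute error; for complex arrays this uses the magnitude of the difference."""
    return np.mean(np.abs(a - b))


def supervision_loss(x_hat, x, image, att=None, att_target=None, w=(1.0, 1.0, 1.0)):
    """L1 on per-bin images, on the combined image I_hat = sum_i x_hat_{f_i},
    and optionally on attention maps vs. field-map bin weights.
    The term weights `w` are hypothetical."""
    loss = w[0] * l1(x_hat, x) + w[1] * l1(x_hat.sum(axis=0), image)
    if att is not None:
        loss += w[2] * l1(att, att_target)
    return loss


rng = np.random.default_rng(4)
x = rng.standard_normal((11, 8, 8)) + 1j * rng.standard_normal((11, 8, 8))
image = x.sum(axis=0)
perfect = supervision_loss(x, x, image)        # exact prediction
offset = supervision_loss(x + 0.1, x, image)   # constant 0.1 error in every bin
```

A constant error of 0.1 in each of the 11 bins costs 0.1 in the per-bin term but 1.1 in the combined-image term, since the errors add up in $$$\hat{I}$$$.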

Experiments & Results

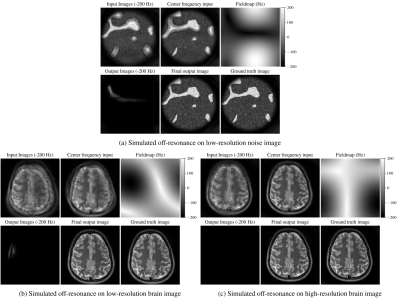

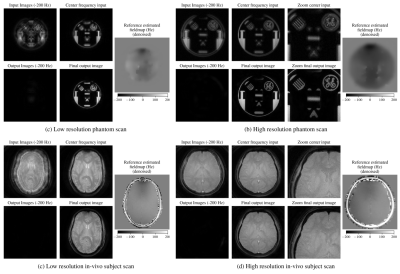

To evaluate our approach, we trained a model simulating a spiral trajectory with 4 interleaves, a 10.72 ms readout, $$$2.2\text{mm}^2$$$ resolution, and off-resonance field maps in the range of -200 Hz to 200 Hz (about 2.14 phase cycles over the readout). We used 11 frequency bins spanning -200 Hz to 200 Hz (40 Hz apart) in the model approximation, and simulated 6 coils during training. We used the Adam optimizer [10] with L1 losses. For the unrolled model, we used 4 unrolls, 2 residual blocks in each network, and 12 conjugate-gradient iterations with $$$\lambda=1$$$.

Results on noise-like data and on real brain images with simulated off-resonance are shown in Figure 4. Results of applying our noise-trained network to acquired phantom and in-vivo brain data are shown in Figure 5. These data were acquired with a spiral GRE sequence using a spectral-spatial pulse for water-only excitation. We scanned with the same readout trajectory as in training, and also acquired a higher-resolution ($$$1\text{mm}^2$$$) scan with a different spiral trajectory (13 interleaves, 10.72 ms readout). Sensitivity maps for the acquired data were estimated with ESPIRiT [11] using BART [12].
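The bin spacing and the phase accrued over the readout follow directly from the training parameters: 11 bins over 400 Hz are 40 Hz apart, and a 200 Hz offset accumulates $$$200\,\text{Hz}\times10.72\,\text{ms}\approx2.14$$$ cycles. A quick check, also computing the phase accrued by an offset of half the bin spacing (the largest distance from a pixel's frequency to the nearest bin center):

```python
import numpy as np

readout = 10.72e-3                          # s, spiral readout duration
freqs = np.linspace(-200.0, 200.0, 11)      # bin centers in Hz
spacing = freqs[1] - freqs[0]               # Hz between neighboring bins
max_cycles = 200.0 * readout                # phase cycles accrued at 200 Hz over the readout
half_bin_cycles = (spacing / 2) * readout   # cycles accrued at half a bin spacing
print(spacing, round(max_cycles, 3), round(half_bin_cycles, 4))
```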

Conclusion

We introduce a physics-inspired, unrolled deep-learning framework for off-resonance correction. Our model is trained purely on synthetic multi-coil data, with the aim of generalizing to any anatomy and contrast without retraining.

References

[1] M. A. Bernstein, K. F. King, and X. J. Zhou, Handbook of MRI Pulse Sequences. Elsevier, 2004.

[2] Y. Yang, G. H. Glover, P. van Gelderen, A. C. Patel, V. S. Mattay, J. A. Frank, and J. H. Duyn, “A comparison of fast MR scan techniques for cerebral activation studies at 1.5 Tesla,” Magnetic Resonance in Medicine, vol. 39, no. 1, pp. 61–67, Jan. 1998.

[3] D. Y. Zeng, J. Shaikh, S. Holmes, R. L. Brunsing, J. M. Pauly, D. G. Nishimura, S. S. Vasanawala, and J. Y. Cheng, “Deep residual network for off-resonance artifact correction with application to pediatric body MRA with 3D cones,” Magnetic Resonance in Medicine, vol. 82, no. 4, pp. 1398–1411, 2019. [Online]. Available: https://onlinelibrary.wiley.com/doi/abs/10.1002/mrm.27825

[4] Y. Lim, Y. Bliesener, S. Narayanan, and K. S. Nayak, “Deblurring for spiral real-time MRI using convolutional neural networks,” Magnetic Resonance in Medicine, vol. 84, no. 6, pp. 3438–3452, 2020. [Online]. Available: https://onlinelibrary.wiley.com/doi/abs/10.1002/mrm.28393

[5] M. W. Haskell, A. A. Cao, D. C. Noll, and J. A. Fessler, “Deep learning field map estimation with model-based image reconstruction for off-resonance correction of brain images using a spiral acquisition.”

[6] H. K. Aggarwal, M. P. Mani, and M. Jacob, “MoDL: Model-based deep learning architecture for inverse problems,” IEEE Transactions on Medical Imaging, vol. 38, no. 2, pp. 394–405, Feb. 2019. [Online]. Available: http://dx.doi.org/10.1109/TMI.2018.2865356

[7] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” 2015.

[8] B. S. Hu and J. Y. Cheng, “System and method for noise-based training of a prediction model,” Mar. 2020.

[9] M. Lustig and J. M. Pauly, “SPIRiT: Iterative self-consistent parallel imaging reconstruction from arbitrary k-space,” Magnetic Resonance in Medicine, vol. 64, no. 2, pp. 457–471, 2010. [Online]. Available: https://onlinelibrary.wiley.com/doi/abs/10.1002/mrm.22428

[10] D. P. Kingma and J. Ba, “Adam: A method for stochastic optimization,” in 3rd International Conference on Learning Representations, ICLR 2015, San Diego, CA, USA, May 7-9, 2015, Conference Track Proceedings, Y. Bengio and Y. LeCun, Eds., 2015. [Online]. Available: http://arxiv.org/abs/1412.6980

[11] M. Uecker, P. Lai, M. J. Murphy, P. Virtue, M. Elad, J. M. Pauly, S. S. Vasanawala, and M. Lustig, “ESPIRiT—an eigenvalue approach to autocalibrating parallel MRI: Where SENSE meets GRAPPA,” Magnetic Resonance in Medicine, vol. 71, 2014.

[12] M. Uecker, F. Ong, J. I. Tamir, D. Bahri, P. Virtue, J. Y. Cheng, T. Zhang, and M. Lustig, “Berkeley Advanced Reconstruction Toolbox,” in Proc. Intl. Soc. Mag. Reson. Med., vol. 23, no. 2486, 2015.
