0306

Mitigating synthetic T2-FLAIR artifacts in 2D MAGiC using keyhole and deep learning based image reconstruction

Sudhanya Chatterjee¹, Naoyuki Takei², Rohan Patil¹, Sugmin Gho³, Suchandrima Banerjee⁴, Florian Wiesinger⁵, and Dattesh Dayanand Shanbhag¹

¹GE Healthcare, Bengaluru, India; ²GE Healthcare, Tokyo, Japan; ³GE Healthcare, Seoul, Republic of Korea; ⁴GE Healthcare, Menlo Park, CA, United States; ⁵GE Healthcare, Munich, Germany


Synopsis

Magnetic resonance image compilation (MAGiC) is a single-click scan that provides multiple contrast-weighted images in around 5 minutes. This makes it an effective diagnostic option in clinical settings. However, synthetic T2-FLAIR is known to have partial-volume artifacts that degrade its diagnostic performance. In this work, we propose a method to mitigate partial-volume artifacts in synthetic T2-FLAIR. The method uses contrast information from a separately acquired fast T2-FLAIR, combined with keyhole and deep learning-based image reconstruction.

Introduction

MAGnetic resonance image Compilation (MAGiC) is a synthetic MRI technique that generates multiple contrast-weighted images from quantitative tissue parameters¹. It is a single-click sequence with a typical scan time of around 5 minutes, which makes it an effective in-vivo MRI diagnostic option in clinical settings. However, synthetic T2-FLAIR images are known to suffer from partial-volume artifacts, such as brightening in ventricular and cortical regions, which severely impact diagnostic performance²⁻⁴. T2-FLAIR is an essential contrast for the diagnostic brain MRI assessment of most neurological conditions. However, synthesizing diagnostic-quality T2-FLAIR images with multiparametric approaches (e.g., synthetic MRI, MRF, QALAS) is challenging, primarily because of partial-volume effects.

Recently, several deep learning (DL) solutions have been proposed to resolve this issue in MAGiC⁵⁻⁷. These methods largely rely on DL to correct the synthetic T2-FLAIR contrast. They use information from the multi-delay multi-echo data and/or the synthetic and quantitative maps generated by the synthetic MR workflow. Hence, they remain within the realm of contrast synthesis. The widely employed and reliable workaround for obtaining diagnostic-quality T2-FLAIR has been to acquire a standalone T2-FLAIR sequence in addition to the multiparametric scan. However, this adds a time penalty to the examination and dilutes the time savings and streamlined workflow that these techniques offer. In this work, we propose to reduce this time penalty by acquiring a highly accelerated separate T2-FLAIR image in addition to MAGiC. MAGiC is the multi-delay multi-echo fast spin echo sequence used for synthetic MR image generation on GE scanners².

Method

In the proposed method, the separate T2-FLAIR acquisition is accelerated by acquiring k-space lines only around the center of k-space, with the number of lines set by the acceleration factor (Figure-1(b)). This band-limited sampling scheme prioritizes retention of the T2-FLAIR contrast, which is carried mainly by the low spatial frequencies. The (fully sampled) synthetic MR images and the separately acquired accelerated T2-FLAIR image are obtained from the same subject. Cross-contrast keyholing is therefore performed to share structural information between the accelerated T2-FLAIR and a synthetic MR contrast-weighted image (illustrated in Figure-1(c)). Cross-contrast keyholing followed by DL reconstruction has been shown to be an effective way to accelerate multi-contrast MRI examinations⁸. In our work, we chose the fully sampled synthetic T2-w image as the reference image (another synthetic MR image could also be used). This step restores the structural detail in regions blurred by the band-limited sampling. However, it also introduces high-frequency contrast artifacts from the reference image. These artifacts are then removed using a DL artifact correction and image enhancement module.

Deep learning for image reconstruction

The objective of the reconstruction unit is to obtain high image quality (IQ) T2-FLAIR images from the naively keyhole-combined initial T2-FLAIR image. In the first stage of image reconstruction, a two-channel-input DL network (DenseNet architecture⁹) removes the high-frequency contrast artifacts introduced by the cross-contrast keyholing step. This network is trained with a combined loss of mean absolute error (MAE) and structural similarity index (SSIM), weighted 1.0 and 0.2, respectively. The second stage uses a DL network based on the residual channel attention network architecture¹⁰ to further enhance the artifact-corrected images from the first stage. This network is trained with an MAE loss. In this work, we demonstrate reconstruction results for the additional T2-FLAIR image acquired with an acceleration factor of 3 (retrospective undersampling).
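The first-stage objective can be written as a weighted sum, L = 1.0·MAE + 0.2·(1 − SSIM). The sketch below is not the authors' training code, and several details are assumptions:

- The SSIM term is assumed to enter as (1 − SSIM), so that lower is better.
- For brevity, SSIM is computed with a single global window rather than the usual local Gaussian window.
- C1 = (0.01·L)² and C2 = (0.03·L)² are the standard SSIM defaults for dynamic range L.
- The function names `global_ssim` and `stage1_loss` are hypothetical.

```python
import numpy as np


def global_ssim(x: np.ndarray, y: np.ndarray, data_range: float = 1.0) -> float:
    """Single-window SSIM over the whole image (a simplification of windowed SSIM)."""
    c1 = (0.01 * data_range) ** 2
    c2 = (0.03 * data_range) ** 2
    mu_x, mu_y = x.mean(), y.mean()
    var_x, var_y = x.var(), y.var()
    cov_xy = ((x - mu_x) * (y - mu_y)).mean()
    num = (2 * mu_x * mu_y + c1) * (2 * cov_xy + c2)
    den = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float(num / den)


def stage1_loss(pred: np.ndarray, target: np.ndarray,
                w_mae: float = 1.0, w_ssim: float = 0.2) -> float:
    """Weighted MAE + SSIM loss; the (1 - SSIM) form is an assumption."""
    mae = float(np.abs(pred - target).mean())
    return w_mae * mae + w_ssim * (1.0 - global_ssim(pred, target))
```

Setting `w_ssim=0` reduces this to the MAE-only loss that the abstract uses for the second-stage network.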

Data

All data were collected on a GE Discovery MR 750w 3.0T scanner using a 24-channel head coil (GE Healthcare, Waukesha, WI). Synthetic MRI was acquired using the multiple-dynamic multiple-echo sequence² with the following acquisition parameters: TE = 16.8 and 92.4 ms; delay times = 210, 610, 1810, and 3810 ms; TR = 4000 ms; FOV = 220×194 mm; matrix size = 320×288; ETL = 16; bandwidth = ±35.71 kHz; slice thickness = 5 mm; total acquisition time = 4 min 32 s. Synthetic T2-w and T2-FLAIR images were obtained from SyMRI software (v8.0, SyntheticMR, Linköping, Sweden). The settings were TR/TE/TI = 10000/118/2566 ms for T2-FLAIR and TR/TE = 5000/70 ms for T2-w. A conventional 2D axial T2-FLAIR was acquired separately with TR/TE/TI = 10000/118.5/2566.65 ms and ETL = 27. Other parameters, such as FOV and resolution, were identical to those of the synthetic images. The study included 96 participants, of whom 58, 7, and 31 were used for training, validation, and testing, respectively. This study was reviewed and approved by an Institutional Review Board.
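The separately acquired T2-FLAIR shares FOV and matrix size (320×288) with the synthetic images, so their k-spaces can be combined line by line. The NumPy sketch below shows retrospective undersampling followed by cross-contrast keyholing. It is a minimal illustration under stated assumptions, not the authors' implementation:

- Images are single-coil 2D magnitude images.
- The 288 lines along axis 0 are the phase-encode direction.
- At acceleration factor 3, only the central 288 // 3 = 96 T2-FLAIR lines are kept.
- The peripheral k-space is taken from the synthetic T2-w reference.
- The helper names `center_mask`, `keyhole_kspace`, and `keyhole_image` are hypothetical.

```python
import numpy as np


def center_mask(n_pe: int, accel: int) -> np.ndarray:
    """Hypothetical helper: True for the central n_pe // accel phase-encode lines."""
    n_keep = n_pe // accel
    start = (n_pe - n_keep) // 2
    mask = np.zeros(n_pe, dtype=bool)
    mask[start:start + n_keep] = True
    return mask


def keyhole_kspace(flair_img: np.ndarray, ref_img: np.ndarray, accel: int = 3) -> np.ndarray:
    """Centered k-space: central lines from T2-FLAIR, periphery from the reference.

    Assumes single-coil 2D images with phase encoding along axis 0.
    """
    if flair_img.shape != ref_img.shape:
        raise ValueError("keyholing requires matched FOV and matrix size")
    k_flair = np.fft.fftshift(np.fft.fft2(flair_img))
    k_ref = np.fft.fftshift(np.fft.fft2(ref_img))
    # Retrospective undersampling: only the central T2-FLAIR lines are kept.
    mask = center_mask(flair_img.shape[0], accel)[:, None]  # broadcast across readout
    return np.where(mask, k_flair, k_ref)


def keyhole_image(flair_img: np.ndarray, ref_img: np.ndarray, accel: int = 3) -> np.ndarray:
    """Magnitude image of the keyhole-combined k-space (input to the DL stage)."""
    k = keyhole_kspace(flair_img, ref_img, accel)
    return np.abs(np.fft.ifft2(np.fft.ifftshift(k)))


# Usage with placeholder arrays shaped like the 320x288 matrix.
rng = np.random.default_rng(0)
flair = rng.random((288, 320))
synthetic_t2w = rng.random((288, 320))
initial_flair = keyhole_image(flair, synthetic_t2w, accel=3)
print(initial_flair.shape)  # (288, 320)
```

In this form, the low-frequency contrast comes entirely from the T2-FLAIR, while the high-frequency detail (and its contrast mismatch) comes from the reference. That mismatch is what the first DL stage is meant to remove.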

Results

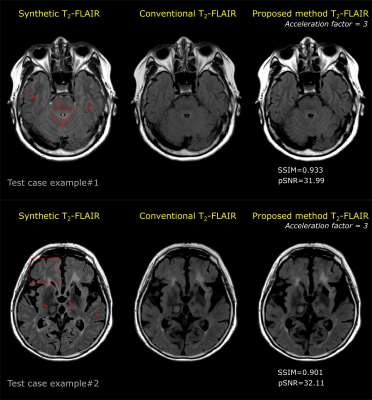

Results from two test cases are shown in Figure-3 and Figure-4. The proposed method mitigates synthetic T2-FLAIR artifacts, such as the appearance of artificial cortical thickening and brightening around partial-volume regions, while preserving the T2-FLAIR contrast. The reconstructed T2-FLAIR images have high IQ. This is indicated by SSIM values above 0.95 and pSNR values above 32 dB relative to the fully sampled, separately acquired T2-FLAIR. SSIM and pSNR for the 31 test cases are shown in Figure-5.

Conclusion

Synthetic MR has emerged as an important option for clinical MR imaging. In this work, we proposed a framework to mitigate artifacts in synthetic T2-FLAIR images through cross-contrast keyholing and DL reconstruction of an additional accelerated T2-FLAIR image.

Acknowledgements

The authors thank SyntheticMR AB (Linköping, Sweden) for support with the post-processing software and Gyeongsang National University Changwon Hospital for support during image acquisition.

References

- Warntjes, J. B. M., O. Dahlqvist Leinhard, J. West, and P. Lundberg. "Rapid magnetic resonance quantification on the brain: optimization for clinical usage." Magnetic Resonance in Medicine: An Official Journal of the International Society for Magnetic Resonance in Medicine 60, no. 2 (2008): 320-329.

- Tanenbaum, Lawrence N., Apostolos John Tsiouris, Angela N. Johnson, Thomas P. Naidich, Mark C. DeLano, Elias R. Melhem, Patrick Quarterman et al. "Synthetic MRI for clinical neuroimaging: results of the magnetic resonance image compilation (MAGiC) prospective, multicenter, multireader trial." American Journal of Neuroradiology 38, no. 6 (2017): 1103-1110.

- Granberg, T., M. Uppman, F. Hashim, C. Cananau, L. E. Nordin, S. Shams, J. Berglund et al. "Clinical feasibility of synthetic MRI in multiple sclerosis: a diagnostic and volumetric validation study." American Journal of Neuroradiology 37, no. 6 (2016): 1023-1029.

- Blystad, Ida, Jan Bertus Marcel Warntjes, O. Smedby, Anne-Marie Landtblom, Peter Lundberg, and Elna-Marie Larsson. "Synthetic MRI of the brain in a clinical setting." Acta Radiologica 53, no. 10 (2012): 1158-1163.

- Ryu, Kyeong Hwa, Hye Jin Baek, Sung-Min Gho, Kanghyun Ryu, Dong-Hyun Kim, Sung Eun Park, Ji Young Ha, Soo Buem Cho, and Joon Sung Lee. "Validation of deep learning-based artifact correction on synthetic FLAIR images in a different scanning environment." Journal of Clinical Medicine 9, no. 2 (2020): 364.

- Ryu, Kanghyun, Yoonho Nam, Sung‐Min Gho, Jinhee Jang, Ho‐Joon Lee, Jihoon Cha, Hye Jin Baek, Jiyong Park, and Dong‐Hyun Kim. "Data‐driven synthetic MRI FLAIR artifact correction via deep neural network." Journal of Magnetic Resonance Imaging 50, no. 5 (2019): 1413-1423.

- Wang, Guanhua, Enhao Gong, Suchandrima Banerjee, Dann Martin, Elizabeth Tong, Jay Choi, Huijun Chen, Max Wintermark, John M. Pauly, and Greg Zaharchuk. "Synthesize high-quality multi-contrast magnetic resonance imaging from multi-echo acquisition using multi-task deep generative model." IEEE Transactions on Medical Imaging 39, no. 10 (2020): 3089-3099.

- Chatterjee, Sudhanya, Suresh Emmanuel Joel, Ramesh Venkatesan, and Dattesh Dayanand Shanbhag. "Acceleration by Deep-Learnt Sharing of Superfluous Information in Multi-contrast MRI." In International Conference on Medical Image Computing and Computer-Assisted Intervention, pp. 392-401. Springer, Cham, 2021.

- Huang, Gao, Zhuang Liu, Laurens Van Der Maaten, and Kilian Q. Weinberger. "Densely connected convolutional networks." In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 4700-4708. 2017.

- Zhang, Yulun, Kunpeng Li, Kai Li, Lichen Wang, Bineng Zhong, and Yun Fu. "Image super-resolution using very deep residual channel attention networks." In Proceedings of the European Conference on Computer Vision (ECCV), pp. 286-301. 2018.

Figures

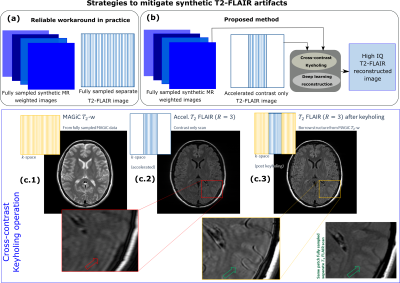

(a) Current clinical practice for mitigating artifacts in synthetic T2-FLAIR is to acquire a separate T2-FLAIR. (b) The proposed method reduces the time penalty of the extra T2-FLAIR acquisition through cross-contrast keyholing and DL reconstruction. (c.2) The extra T2-FLAIR images are accelerated by acquiring only the contrast information (central k-space); the synthetic MR images are fully sampled. (c.3) After cross-contrast keyholing, the structural composition improves. The problem for DL then becomes identifying and removing the high-frequency contrast information introduced by the keyholing step.

Deep learning module for artifact correction and image enhancement. In the first stage, the artifact removal network removes the cross-contrast keyholing artifact. In the second stage, the output of the previous network is refined.


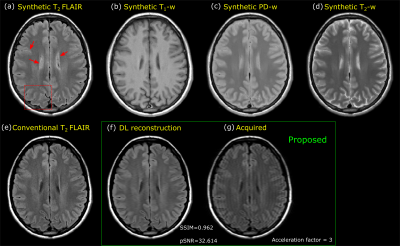

Synthetic contrast-weighted images (a-d), a fully sampled separately acquired T2-FLAIR (e), and T2-FLAIR obtained with the proposed method at acceleration factor = 3 (f). The synthetic T2-FLAIR image shows brightening at CSF and partial-volume interfaces, as highlighted. The T2-FLAIR image for the proposed method is acquired with an acceleration factor of 3 (scan time of approximately 1 minute). Images obtained with the proposed method mitigate the artifacts observed in synthetic T2-FLAIR.

Results from two different test cases. Artifacts in the synthetic T2-FLAIR images are highlighted with red arrows and boxes. T2-FLAIR images reconstructed with the proposed method show mitigation of these artifacts. SSIM and pSNR, computed against the fully sampled, separately acquired T2-FLAIR images, indicate high-IQ reconstruction with the proposed method.

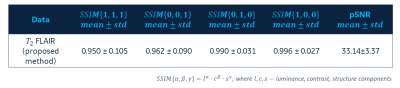

Consolidated statistics for the 31 test cases. A high luminance value suggests a good contrast match between the T2-FLAIR images reconstructed with the proposed method and the conventional T2-FLAIR images.
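The per-case metrics consolidated in Figure-5 can be computed along the lines of the sketch below, with the fully sampled conventional T2-FLAIR as the reference. Two choices are assumptions not stated in the abstract:

- The pSNR peak is taken as the maximum of the reference image.
- The "luminance" value is interpreted as the luminance term of SSIM, l(x, y) = (2μxμy + C1)/(μx² + μy² + C1), computed globally with C1 = (0.01·L)².

The function names are hypothetical.

```python
import numpy as np


def psnr(recon: np.ndarray, reference: np.ndarray) -> float:
    """pSNR in dB, with the peak taken as the reference maximum (assumption)."""
    mse = float(np.mean((recon - reference) ** 2))
    if mse == 0.0:
        return float("inf")  # identical images
    peak = float(reference.max())
    return float(10.0 * np.log10(peak ** 2 / mse))


def ssim_luminance(recon: np.ndarray, reference: np.ndarray, data_range: float = 1.0) -> float:
    """Global luminance term of SSIM; 1.0 means identical mean intensity."""
    c1 = (0.01 * data_range) ** 2
    mu_x, mu_y = recon.mean(), reference.mean()
    return float((2 * mu_x * mu_y + c1) / (mu_x ** 2 + mu_y ** 2 + c1))


def summarize(values) -> tuple:
    """Mean and sample standard deviation of a metric across test cases."""
    arr = np.asarray(values, dtype=float)
    return float(arr.mean()), float(arr.std(ddof=1))
```

Per-case values from the 31 test cases would be collected into lists and passed to `summarize`. Under these assumptions, a luminance value close to 1 corresponds to a close match in mean intensity between the reconstructed and conventional T2-FLAIR.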

DOI: https://doi.org/10.58530/2022/0306