0301

Motion2Recon: A Motion-Robust Semi-Supervised Framework for MR Reconstruction

Harris Beg1,2, Beliz Gunel2,3, Batu M Ozturkler2,3, Christopher M Sandino3, John M Pauly3, Shreyas Vasanawala4, Akshay S Chaudhari4,5, and Arjun D Desai3

1Computing and Mathematical Sciences, California Institute of Technology, Pasadena, CA, United States, 2Equal Contribution, Stanford University, Stanford, CA, United States, 3Electrical Engineering, Stanford University, Stanford, CA, United States, 4Radiology, Stanford University, Stanford, CA, United States, 5Biomedical Data Science, Stanford University, Stanford, CA, United States

Synopsis

In this study, we propose Motion2Recon, a semi-supervised consistency-based approach for robust accelerated MR reconstruction of motion-corrupted images. Motion2Recon reduced dependence on fully-sampled (supervised) training data and improved reconstruction performance among motion-corrupted scans. It also maintained superior performance among non-motion, in-distribution scans, which may help eliminate the need for manual motion detection. All code and experimental configurations are openly available in Meddlr (https://github.com/ad12/meddlr).

Introduction

Accelerated MRI is critical for alleviating high costs and slow scan times in MR imaging. Parallel imaging, compressed sensing, and deep learning (DL) have all shown promise for high quality reconstructions from accelerated acquisitions1,2,3. However, these methods are sensitive to out-of-distribution (OOD) acquisition artifacts, particularly those induced by patient motion4. While motion correction techniques may quell motion artifacts, these algorithms often require access to additional motion-tracking apparatuses or rely on empirical motion states or navigators, which require collecting additional data or changing the underlying sequence5,6,7. Additionally, scans must often be manually triaged to determine if motion correction must be applied, which can be time-consuming8. Thus, a unified framework that can eliminate manual triaging and can reconstruct high-quality images from accelerated, motion-corrupted acquisitions is desirable.

In this study, we propose Motion2Recon, a semi-supervised consistency-based approach for robust accelerated MR reconstruction of motion-corrupted images. Motion2Recon reduced dependence on fully-sampled (supervised) training data and improved reconstruction performance among motion-corrupted scans. It also maintained superior performance among non-motion, in-distribution scans, which may help eliminate the need for manual motion detection. All code and experimental configurations are openly available in Meddlr (https://github.com/ad12/meddlr).

Theory

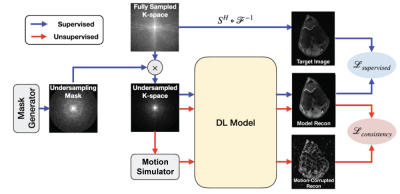

Motion2Recon extends the semi-supervised consistency framework proposed in Noise2Recon to include robustness to motion artifacts9 (Figure 1). Fully-sampled (supervised) data are used for standard supervised training3. For scans without fully-sampled references (unsupervised), examples are augmented with motion. The model generates reconstructions for both non-augmented unsupervised images and motion-augmented unsupervised images. To build invariance to motion, consistency is enforced between the two reconstructions. The total loss is the weighted sum of the supervised loss $$$L_{sup}$$$ (applied for fully-sampled data) and consistency loss $$$L_{cons}$$$.

To simulate motion-corrupted acquisitions, k-space samples were augmented by applying phase shifts with amplitude $$$\alpha$$$ along odd and even readout lines, modeled after affine motion in two-shot acquisitions. For even and odd numbered lines, we drew $$$m_{e}, m_{o} \sim U(-1, 1)$$$ and chose $$$\alpha$$$, which allowed us to control the extent of motion. For each i-th volume, we applied translational motion by multiplying each line with $$$e^{-j\phi_i}$$$, where $$$\phi_i$$$ is given by $$$\pi\alpha{m_e}$$$ and $$$\pi\alpha{m_o}$$$ for even and odd numbered lines, respectively. Figure 2 shows an example of simulated motion artifacts at different motion extents $$$\alpha$$$.
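The motion augmentation and the combined loss described above can be illustrated with a minimal NumPy sketch. It is not the Meddlr implementation: the function names `add_motion` and `semi_supervised_loss`, the `axis` and `weight` parameters, the use of 0-based indexing to decide which lines are even, the L1 distance for both loss terms, and the zero-filled inverse FFT standing in for the reconstruction network are all assumptions made for illustration.

```python
import numpy as np


def add_motion(kspace, alpha, rng, axis=0):
    """Multiply even/odd k-space lines by exp(-j*pi*alpha*m_e) / exp(-j*pi*alpha*m_o)."""
    # One random draw per volume: m_e, m_o ~ U(-1, 1).
    m_e, m_o = rng.uniform(-1.0, 1.0, size=2)
    n_lines = kspace.shape[axis]
    # Assumption: lines are indexed from 0, so index 0 counts as an even line.
    is_even = np.arange(n_lines) % 2 == 0
    phase = np.pi * alpha * np.where(is_even, m_e, m_o)
    # Reshape so the per-line phase broadcasts along `axis` only.
    shape = [1] * kspace.ndim
    shape[axis] = n_lines
    return kspace * np.exp(-1j * phase.reshape(shape))


def semi_supervised_loss(model, ksp_sup, target, ksp_unsup, alpha, rng, weight=1.0):
    """Weighted sum of a supervised loss and a motion-consistency loss."""
    # Supervised term: reconstruction vs. fully-sampled reference (L1 is an assumption).
    l_sup = np.mean(np.abs(model(ksp_sup) - target))
    # Consistency term: reconstruction of the motion-augmented input vs. the clean input.
    recon_clean = model(ksp_unsup)
    recon_motion = model(add_motion(ksp_unsup, alpha, rng))
    l_cons = np.mean(np.abs(recon_motion - recon_clean))
    return l_sup + weight * l_cons


# Toy usage: a 2D complex "k-space" and an inverse FFT in place of a trained network.
rng = np.random.default_rng(0)
kspace = rng.standard_normal((8, 6)) + 1j * rng.standard_normal((8, 6))
corrupted = add_motion(kspace, alpha=0.4, rng=rng)
loss = semi_supervised_loss(np.fft.ifft2, kspace, np.fft.ifft2(kspace), kspace, 0.4, rng)
```

Because each line is only multiplied by a unit-magnitude phase factor, the augmentation leaves k-space magnitudes unchanged, and setting $$$\alpha = 0$$$ returns the input unmodified.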

Methods

Nineteen fully-sampled 3D fast-spin-echo knee scans from mridata.org were split into 14, 2, and 3 subjects for training, validation, and testing, respectively10,11. Ground truth images for evaluation were reconstructed from the fully-sampled data using JSENSE12 with kernel width 8 and a $$$20 \times 20$$$ auto-calibration region. Data were retrospectively undersampled using Poisson disc undersampling.

We compared Motion2Recon to two baselines: supervised training without and with motion augmentations (termed Supervised and Supervised+Aug, respectively). All reconstruction methods used a U-Net architecture13. Experiments were conducted at both 4x and 16x acceleration, and methods were evaluated at motion levels $$$\alpha_{test} = 0$$$ (no motion), 0.2 (light motion), and 0.4 (heavy motion). Motion2Recon and Supervised+Aug methods were trained with both light and heavy motion levels.
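Retrospective undersampling at a given acceleration can be sketched as masking the fully-sampled k-space. The example below deliberately swaps in a uniform random mask as a simple stand-in; it is not Poisson disc sampling and does not reproduce the sampling patterns used in the experiments. The names `random_mask` and `undersample` are hypothetical.

```python
import numpy as np


def random_mask(shape, acceleration, rng):
    """Boolean mask keeping 1/acceleration of the k-space locations.

    Stand-in only: uniform random sampling, not Poisson disc sampling.
    """
    n_total = int(np.prod(shape))
    n_keep = n_total // acceleration
    flat = np.zeros(n_total, dtype=bool)
    # Sample without replacement so exactly n_keep locations are retained.
    flat[rng.choice(n_total, size=n_keep, replace=False)] = True
    return flat.reshape(shape)


def undersample(kspace, mask):
    """Zero out the k-space samples that the mask does not retain."""
    return kspace * mask


rng = np.random.default_rng(0)
kspace = rng.standard_normal((32, 32)) + 1j * rng.standard_normal((32, 32))
mask_4x = random_mask(kspace.shape, 4, rng)
mask_16x = random_mask(kspace.shape, 16, rng)
undersampled = undersample(kspace, mask_4x)
```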

To explore reconstruction performance when supervised data are scarce, all methods were evaluated in a data-limited regime with 1 supervised volume. Image quality was measured using structural similarity (SSIM)14 and complex peak-signal-to-noise ratio (cPSNR).
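The abstract does not spell out how cPSNR is computed. A minimal sketch under one common convention is given below: the error is taken on the complex-valued difference (so phase errors are penalized, unlike magnitude-only PSNR) and the peak is the maximum magnitude of the reference. Both choices, and the function name `cpsnr`, are assumptions.

```python
import numpy as np


def cpsnr(pred, ref):
    """PSNR in dB computed on complex-valued images."""
    # Mean squared error of the complex difference (magnitude and phase errors both count).
    mse = np.mean(np.abs(pred - ref) ** 2)
    # Assumption: the peak signal is the maximum magnitude of the reference image.
    peak = np.max(np.abs(ref))
    return 20.0 * np.log10(peak / np.sqrt(mse))


ref = np.ones((4, 4), dtype=complex)
# A constant offset of 0.1 against a peak of 1 gives an RMSE of 0.1, i.e. 20 dB.
offset_db = cpsnr(ref + 0.1, ref)
# A pure phase error leaves the magnitude image untouched but still lowers cPSNR.
phase_db = cpsnr(ref * np.exp(1j * 0.5), ref)
```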

Results

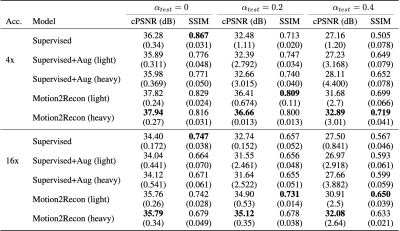

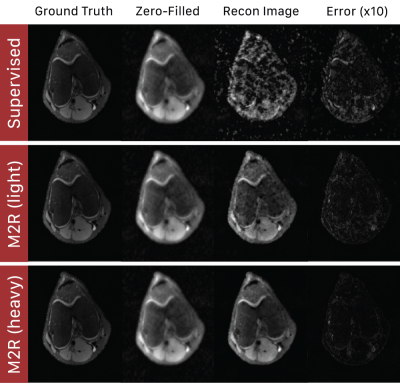

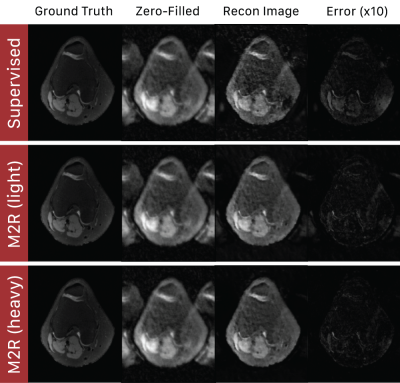

Motion2Recon consistently achieved higher cPSNR and SSIM in comparison to all baselines in motion-free, light, and heavy motion settings (Table 1). Visual inspection of Motion2Recon reconstructions compared to those of Supervised and zero-filled images corroborates the superior performance of Motion2Recon in all three settings (Figs. 3, 4). Motion2Recon also outperformed naïve Supervised+Aug methods at both light and heavy motion levels despite both methods being trained with the same set of motion augmentations. Unlike Supervised+Aug, Motion2Recon maintained high SSIM values at increasing motion levels. Supervised+Aug models slightly outperformed Supervised models in light and heavy motion settings, but underperformed in settings with no motion.

Discussion

Motion2Recon achieved superior performance compared to both supervised baselines in both in-distribution (no motion) and out-of-distribution (light/heavy motion) settings. This suggests that in limited data scenarios, consistency may reduce network overfitting and increase generalizability to both in-distribution and OOD motion-corrupted data.

As expected, adding motion augmentations during training increased robustness for reconstructing motion-corrupt scans. However, applying these augmentations in a supervised framework (Supervised+Aug) degraded reconstruction performance among in-distribution (motion-free) scans. Motion2Recon achieved high performance on both in-distribution scans (no motion) and OOD motion-corrupt scans. Generalizability to both distributions is critical for building a unified framework for tackling both reconstruction and motion-correction without manual triaging of motion-corrupt scans. Additionally, Motion2Recon can be extended to simulate perturbations using different motion models, which may be beneficial for generalizing to different motion artifacts.

Conclusion

In this work, we presented Motion2Recon, a semi-supervised consistency-based approach for robust MR reconstruction for motion-corrupt scans with limited supervised data. This approach is capable of achieving high performance on motion-corrupt scans without compromising performance on motion-free scans.

Acknowledgements

This work was supported by R01 AR063643, R01 EB002524, R01 EB009690, R01 EB026136, K24 AR062068, and P41 EB015891 from the NIH; the Precision Health and Integrated Diagnostics Seed Grant from Stanford University; DOD – National Science and Engineering Graduate Fellowship (ARO); National Science Foundation (GRFP-DGE 1656518, CCF1763315, CCF1563078); Stanford Artificial Intelligence in Medicine and Imaging GCP grant; Stanford Human-Centered Artificial Intelligence GCP grant; Summer Undergraduate Research Fellowship at Caltech; GE Healthcare and Philips.

References

1. Klaas P Pruessmann, Markus Weiger, Markus B Scheidegger, and Peter Boesiger. Sense: sensitivity encoding for fast mri. Magnetic Resonance in Medicine: An Official Journal of the International Society for Magnetic Resonance in Medicine, 42(5):952–962, 1999.

2. Michael Lustig, David Donoho, and John M Pauly. Sparse mri: The application of compressed sensing for rapid mr imaging. Magnetic Resonance in Medicine: An Official Journal of the International Society for Magnetic Resonance in Medicine, 58(6):1182–1195, 2007.

3. Christopher M Sandino, Joseph Y Cheng, Feiyu Chen, Morteza Mardani, John M Pauly, and Shreyas S Vasanawala. Compressed sensing: From research to clinical practice with deep neural networks: Shortening scan times for magnetic resonance imaging. IEEE signal processing magazine, 37(1):117–127, 2020.

4. Inger Havsteen, Anders Ohlhues, Kristoffer H Madsen, Janus Damm Nybing, Hanne Christensen, and Anders Christensen. Are movement artifacts in magnetic resonance imaging a real problem?—a narrative review. Frontiers in neurology, 8:232, 2017.

5. Frank Godenschweger, Urte Kägebein, Daniel Stucht, Uten Yarach, Alessandro Sciarra, Renat Yakupov, Falk Lüsebrink, Peter Schulze, and Oliver Speck. Motion correction in mri of the brain. Physics in Medicine & Biology, 61(5):R32, 2016.

6. Maxim Zaitsev, Burak Akin, Pierre LeVan, and Benjamin R Knowles. Prospective motion correction in functional mri. Neuroimage, 154:33–42, 2017.

7. Li Feng, Leon Axel, Hersh Chandarana, Kai Tobias Block, Daniel K Sodickson, and Ricardo Otazo. Xd-grasp: golden-angle radial mri with reconstruction of extra motion-state dimensions using compressed sensing. Magnetic Resonance in Medicine: An Official Journal of the International Society for Magnetic Resonance in Medicine, 75(2):775–788, 2016.

8. Aiping Jiang, David N Kennedy, John R Baker, Robert M Weisskoff, Roger BH Tootell, Roger P Woods, Randall R Benson, Kenneth K Kwong, Thomas J Brady, Bruce R Rosen, et al. Motion detection and correction in functional mr imaging. Human Brain Mapping, 3(3):224–235, 1995.

9. Arjun D Desai, Batu M Ozturkler, Christopher M Sandino, Shreyas Vasanawala, Brian A Hargreaves, Christopher M Re, John M Pauly, and Akshay S Chaudhari. Noise2recon: A semi-supervised framework for joint mri reconstruction and denoising. arXiv preprint arXiv:2110.00075, 2021.

10. Anne Marie Sawyer, Michael Lustig, Marcus Alley, Martin Uecker, Patrick Virtue, Peng Lai, and Shreyas Vasanawala. Creation of fully sampled mr data repository for compressed sensing of the knee. 2013.

11. F Ong, S Amin, S Vasanawala, and M Lustig. Mridata.org: An open archive for sharing mri raw data. In Proc. Intl. Soc. Mag. Reson. Med, volume 26, 2018.

12. Leslie Ying and Jinhua Sheng. Joint image reconstruction and sensitivity estimation in sense (jsense). Magnetic Resonance in Medicine, 57(6):1196–1202, 2007. doi: https://doi.org/10.1002/mrm.21245. URL https://onlinelibrary.wiley.com/doi/abs/10.1002/mrm.21245.

13. Olaf Ronneberger, Philipp Fischer, and Thomas Brox. U-net: Convolutional networks for biomedical image segmentation. In International Conference on Medical image computing and computer-assisted intervention, pages 234–241. Springer, 2015.

14. Zhou Wang, Alan C Bovik, Hamid R Sheikh, and Eero P Simoncelli. Image quality assessment: from error visibility to structural similarity. IEEE transactions on image processing, 13(4): 600–612, 200

Figures

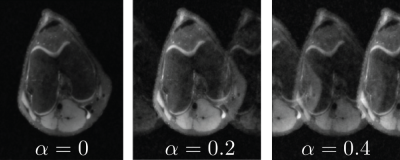

Fig. 1: A simulation of odd and even phase errors across one axis with varying motion levels, denoted by $$$\alpha$$$. This simulation was generated by our motion artifact simulation framework, which uses random uniformly generated phase errors along odd and even lines of the k-space in order to simulate affine motion in two-shot acquisitions.

Fig. 2: The Motion2Recon framework. Scans with fully-sampled references can be trained in a supervised manner with loss $$$L_{supervised}$$$. For prospectively undersampled scans (i.e. unsupervised), the motion simulation framework augments the undersampled k-space with affine motion. Consistency is enforced between the model reconstruction of the motion-corrupted image and the reconstruction of the undersampled image. Thus, undersampled scans without fully-sampled references can be leveraged during training.

Table 1: Mean (standard deviation) reconstruction performance of Supervised, Supervised+Aug, and Motion2Recon measured by cPSNR and SSIM. Supervised+Aug and Motion2Recon models were each trained at light ($$$\alpha=0.2$$$) and heavy ($$$\alpha=0.4$$$) motion levels. Bolded values show the best model at different motion levels during evaluation ($$$\alpha_{test}$$$). Motion2Recon consistently outperforms baselines among motion-corrupted scans with minimal performance loss among motion-free scans.

Fig. 3: Sample reconstructions of Supervised and Motion2Recon methods on a heavily motion-corrupt scan ($$$\alpha_{test}=0.4$$$) at 16x acceleration. Supervised methods amplified motion artifacts, resulting in lack of visible anatomy in the image. In contrast, Motion2Recon trained at light and heavy motion levels successfully removed motion artifacts while maintaining sharp image quality.

Fig. 4: Sample reconstructions of Supervised and Motion2Recon methods on a heavily motion-corrupt scan ($$$\alpha_{test}=0.4$$$) at 16x acceleration. Supervised methods cannot remove coherent ghosting artifacts characteristic of bulk affine motion. Motion2Recon methods preserved image quality while resolving and removing these coherent artifacts, as shown in the error maps.

DOI: https://doi.org/10.58530/2022/0301