0080

FlowSeg: Joint deep artifact suppression and segmentation network for low latency beat-to-beat assessment of cardiac aortic flow

1 Institute of Cardiovascular Sciences, University College London, London, United Kingdom
2 Department of Computer Science, University College London, London, United Kingdom

Synopsis

Real-time cardiac output monitoring is desirable but requires low latency reconstruction and segmentation. A framework combining a spiral real-time flow acquisition with joint deep artifact suppression and segmentation was developed to provide flow volumes with low latency at the scanner. The proposed network was characterized in simulations, and the full framework was tested at the scanner, providing real-time monitoring of flow during exercise. Future work will aim to validate the method in a larger cohort.

Introduction

Real-time cardiac output monitoring is desirable for many stress protocols. This requires both low latency reconstruction and segmentation. We recently proposed a fast reconstruction method for real-time flow imaging1 relying on a NUFFT followed by a U-Net deep artifact suppression step. However, the analysis remains time-consuming because it requires segmentation of the vessels, which hampers real-time feedback of flow volumes. Here, the deep artifact suppression network is extended to jointly output the restored images and the segmentation of the aorta, enabling low latency beat-to-beat flow quantification during exercise.

Methods

Real-time acquisition:

Real-time flow was acquired using a golden angle variable density spiral (central acceleration R=26) with alternating flow-encoded and flow-compensated readouts. Other parameters included: FOV=400×400 mm², pixel resolution=2.08×2.08 mm², 3 spiral arms per contrast image (6 per velocity map), temporal resolution=35 ms, FA=20°, Venc=200 cm/s.
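To make the interleaving concrete, the sketch below generates per-arm rotation angles and flow-encoding flags and groups consecutive arms into velocity frames. It is a minimal illustration under stated assumptions, not the sequence implementation: the golden angle increment (here π(3−√5) ≈ 137.5°), the even/odd assignment of compensated/encoded arms and the helper names `spiral_arm_schedule` and `group_into_frames` are hypothetical.

```python
import numpy as np

# Assumption: arms rotate by the golden angle pi * (3 - sqrt(5)) rad (about 137.5 deg).
GOLDEN_ANGLE = np.pi * (3.0 - np.sqrt(5.0))


def spiral_arm_schedule(n_arms):
    """Rotation angle (rad, in [0, 2*pi)) and flow-encoding flag for each arm.
    Assumption: even arms are flow-compensated, odd arms are flow-encoded."""
    idx = np.arange(n_arms)
    angles = np.mod(idx * GOLDEN_ANGLE, 2.0 * np.pi)
    flow_encoded = (idx % 2) == 1
    return angles, flow_encoded


def group_into_frames(n_arms, arms_per_frame=6):
    """Arm indices used for each velocity frame (6 arms = 3 compensated + 3 encoded).
    Trailing arms that do not fill a complete frame are dropped."""
    n_frames = n_arms // arms_per_frame
    return np.arange(n_frames * arms_per_frame).reshape(n_frames, arms_per_frame)
```

With alternating readouts, each group of 6 consecutive arms contains 3 flow-compensated and 3 flow-encoded arms, matching the 3 arms per contrast image stated above.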

Training Data:

The training dataset included 500 magnitude and phase image series (DICOM format) of aortic gated spiral Phase Contrast MR (PCMR) from a cohort of congenital heart disease patients. Of these, 100 series were segmented semi-automatically2 by an expert (V.M.). Non-segmented/segmented datasets were split as 400/70 for training, 0/10 for validation and 0/16 for testing.

Network and training:

The network architecture consists of a single 3D U-Net with 20 initial filters and 3 downsampling/upsampling levels. It takes as input the real and imaginary parts of 24 frames of cropped (128×128) undersampled gridded images, and outputs the restored real and imaginary images1 together with an additional segmentation probability map3 (using a sigmoid output activation function).
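A minimal NumPy sketch of the input preparation and of the feature-map sizes through the encoder is given below. The real/imaginary channel layout, the 2× pooling in all three dimensions, the filter doubling per level and the 192×192 gridded matrix used in the example (400 mm / 2.08 mm ≈ 192) are assumptions for illustration; the function names are hypothetical.

```python
import numpy as np


def center_crop(images, size=128):
    """Center-crop the last two (spatial) axes of a (frames, H, W) array."""
    h, w = images.shape[-2:]
    top, left = (h - size) // 2, (w - size) // 2
    return images[..., top:top + size, left:left + size]


def to_network_input(gridded, n_frames=24, size=128):
    """Stack real and imaginary parts of complex gridded images as two channels.
    Returns a float32 array of shape (n_frames, size, size, 2)."""
    x = center_crop(gridded[:n_frames], size)
    return np.stack([x.real, x.imag], axis=-1).astype(np.float32)


def unet_level_shapes(frames=24, size=128, base_filters=20, depth=3):
    """(frames, H, W, filters) at each encoder level, assuming 2x pooling in all
    three dimensions and filter doubling per level (both assumptions)."""
    return [(frames // 2 ** k, size // 2 ** k, size // 2 ** k, base_filters * 2 ** k)
            for k in range(depth + 1)]
```

Under these assumptions the bottleneck after 3 downsamplings would be 3×16×16 with 160 filters; pooling only in-plane would instead keep all 24 frames at every level.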

The loss function L between target image/segmentation ($$$y_{ref}$$$/$$$seg_{ref}$$$) and prediction ($$$y_{pred}$$$/$$$seg_{pred}$$$) is defined as follows:

$$L=(1-SSIM(y_{ref},y_{pred}))+\delta\ast\alpha\ast BCE(seg_{ref},seg_{pred})$$

where SSIM is the average of the real and imaginary 2D-SSIM, $$$\delta$$$=0/1 for non-segmented/segmented datasets, BCE is the binary cross-entropy and $$$\alpha$$$ is a weighting factor between the segmentation and artifact suppression losses3.
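The loss can be sketched in NumPy as follows. This is a simplified, non-differentiable illustration rather than the training code: SSIM is computed with a single global window per 2D frame instead of the usual local sliding window, the SSIM data range is taken from the reference, and $$$\alpha$$$ defaults to the value 5 used in the simulations. Function names are hypothetical.

```python
import numpy as np


def global_ssim(x, y, data_range, k1=0.01, k2=0.03):
    """SSIM of two 2D images using one global window (a simplification:
    standard SSIM averages this statistic over local sliding windows)."""
    c1, c2 = (k1 * data_range) ** 2, (k2 * data_range) ** 2
    mx, my = x.mean(), y.mean()
    cov = ((x - mx) * (y - my)).mean()
    num = (2 * mx * my + c1) * (2 * cov + c2)
    den = (mx ** 2 + my ** 2 + c1) * (x.var() + y.var() + c2)
    return num / den


def avg_ssim(y_ref, y_pred):
    """Average 2D SSIM over frames and over real/imaginary parts.
    y_ref, y_pred: complex arrays of shape (frames, H, W)."""
    scores = []
    for part in (np.real, np.imag):
        ref, pred = part(y_ref), part(y_pred)
        # Assumption: data range taken from the reference, guarded against zero.
        data_range = max(float(ref.max() - ref.min()), 1e-8)
        scores += [global_ssim(ref[t], pred[t], data_range) for t in range(ref.shape[0])]
    return float(np.mean(scores))


def bce(seg_ref, seg_pred, eps=1e-7):
    """Binary cross-entropy between a binary mask and a probability map."""
    p = np.clip(seg_pred, eps, 1.0 - eps)
    return float(-np.mean(seg_ref * np.log(p) + (1.0 - seg_ref) * np.log(1.0 - p)))


def flowseg_loss(y_ref, y_pred, seg_ref=None, seg_pred=None, delta=0, alpha=5.0):
    """L = (1 - SSIM) + delta * alpha * BCE; delta=0 skips the segmentation term
    for datasets without a reference segmentation."""
    loss = 1.0 - avg_ssim(y_ref, y_pred)
    if delta:
        loss += alpha * bce(seg_ref, seg_pred)
    return loss
```

Gating the BCE term with $$$\delta$$$ is what allows the 400 non-segmented series to contribute to the artifact suppression objective without requiring a reference segmentation.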

Data augmentation was performed during training and included random flips, rotations and translational motion (applied with a probability of 0.5), followed by undersampling with the proposed spiral trajectory and center cropping to obtain the training pairs.
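The augmentation step can be sketched as follows, assuming complex image series of shape (frames, N, N) with square N and applying each transform independently with probability p (an assumption, as the text does not say whether 0.5 applies per transform). Rotations are restricted to multiples of 90° via `np.rot90`, and translational motion is modelled as a sinusoidal per-frame integer shift; both are hypothetical choices. The spiral undersampling, which requires a NUFFT with the acquisition trajectory, is omitted, and center cropping would be applied to its output.

```python
import numpy as np


def augment(series, rng, p=0.5, max_shift=5.0):
    """Randomly flip, rotate and add translational motion to a complex image
    series of shape (frames, N, N). Each transform is applied with probability p."""
    out = series
    if rng.random() < p:
        # Random flip along one of the two spatial axes.
        out = np.flip(out, axis=int(rng.integers(1, 3)))
    if rng.random() < p:
        # Assumption: rotations restricted to multiples of 90 degrees.
        out = np.rot90(out, k=int(rng.integers(1, 4)), axes=(1, 2))
    if rng.random() < p:
        # Hypothetical motion model: sinusoidal integer shift along one spatial axis.
        n_frames = out.shape[0]
        amplitude = rng.uniform(1.0, max_shift)
        period = rng.uniform(8.0, 24.0)  # in frames
        axis = int(rng.integers(0, 2))  # spatial axis of a single 2D frame
        shifts = np.round(amplitude * np.sin(2 * np.pi * np.arange(n_frames) / period)).astype(int)
        out = np.stack([np.roll(frame, int(s), axis=axis) for frame, s in zip(out, shifts)])
    return np.ascontiguousarray(out)
```

Applying motion before undersampling, as described above, means the simulated motion also propagates through the spiral aliasing pattern, rather than being added to already-undersampled images.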

Each network was trained for 200 epochs using an Adam optimizer (initial learning rate of 5E-4).
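For reference, the update rule Adam applies at each step is sketched below with the stated initial learning rate of 5E-4. The remaining hyperparameters ($$$\beta_1$$$=0.9, $$$\beta_2$$$=0.999, $$$\epsilon$$$=1E-7, Keras-style defaults) are assumptions, as they are not reported, and any learning rate schedule is not modelled.

```python
import numpy as np


def adam_step(param, grad, state, lr=5e-4, beta1=0.9, beta2=0.999, eps=1e-7):
    """One Adam update. `state` holds the step count t and the moment estimates m, v."""
    state["t"] += 1
    state["m"] = beta1 * state["m"] + (1.0 - beta1) * grad        # first moment
    state["v"] = beta2 * state["v"] + (1.0 - beta2) * grad ** 2   # second moment
    m_hat = state["m"] / (1.0 - beta1 ** state["t"])              # bias correction
    v_hat = state["v"] / (1.0 - beta2 ** state["t"])
    return param - lr * m_hat / (np.sqrt(v_hat) + eps), state
```

On the first step the bias-corrected ratio is ±1, so each parameter moves by roughly the learning rate (5E-4) regardless of the gradient magnitude.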

Simulations:

Three networks were compared in simulations: 1) deep artifact suppression only ($$$\alpha$$$=0) with motion augmentation (Denoising only), 2) joint segmentation ($$$\alpha$$$=5) without motion augmentation at training (FlowSeg no motion) and 3) joint segmentation ($$$\alpha$$$=5) with motion augmentation (FlowSeg). Evaluation was performed on the test set (including motion in all 16 cases) using MAE, PSNR, SSIM, BCE and Dice metrics.
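The image and segmentation metrics can be sketched as follows. The PSNR data range (maximum absolute reference value) and the 0.5 threshold used to binarize the probability map for Dice are assumptions; the exact conventions behind the reported scores are not specified. BCE is computed as in the loss function.

```python
import numpy as np


def mae(ref, pred):
    """Mean absolute error (works for real or complex arrays)."""
    return float(np.mean(np.abs(ref - pred)))


def psnr(ref, pred, data_range=None):
    """Peak signal-to-noise ratio in dB."""
    if data_range is None:
        data_range = np.abs(ref).max()  # assumption: peak taken from the reference
    mse = np.mean(np.abs(ref - pred) ** 2)
    return float(10.0 * np.log10(data_range ** 2 / mse))


def dice(seg_ref, seg_prob, threshold=0.5):
    """Dice overlap between a binary reference mask and a thresholded probability map."""
    ref = seg_ref.astype(bool)
    pred = seg_prob >= threshold
    denom = ref.sum() + pred.sum()
    if denom == 0:
        return 1.0  # both masks empty: treated as perfect agreement
    return float(2.0 * np.logical_and(ref, pred).sum() / denom)
```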

Prospective experiments:

As proof of concept, data from two healthy subjects were prospectively acquired (at rest and during exercise) and reconstructed at the scanner (Aera 1.5T, Siemens Healthineers) using Gadgetron4 and TensorFlow MRI5, applying the proposed network in a sliding window fashion (window of 24 frames, step size of 18 frames, keeping only the central frames of each window). Images were additionally reconstructed offline to compare the three networks in one subject.
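The sliding window scheme can be sketched as below. It assumes that keeping only the central frames means discarding (24 − 18)/2 = 3 frames at each edge of every window, so that the kept 18-frame blocks tile the series without gaps or overlap; `network` stands for any callable mapping a 24-frame block to a 24-frame output and `sliding_window_apply` is a hypothetical name.

```python
import numpy as np


def sliding_window_apply(frames, network, window=24, step=18):
    """Apply `network` to overlapping windows of `frames` (frames on axis 0) and keep
    only the central `step` frames of each window output.
    Returns the stitched output and the (first, last) frame indices it covers."""
    margin = (window - step) // 2  # 3 frames discarded at each window edge
    kept = []
    start = 0
    while start + window <= frames.shape[0]:
        out = network(frames[start:start + window])
        kept.append(out[margin:margin + step])
        start += step
    if not kept:
        return frames[:0], (margin, margin)
    return np.concatenate(kept, axis=0), (margin, margin + step * len(kept))
```

Discarding the window edges avoids using the frames where the 3D network sees the least temporal context. Under this reading, each 18-frame block is produced once its window is complete, so a kept frame lags its acquisition by between 3 and 20 frames (roughly 0.1 to 0.7 s at 35 ms/frame) before processing time.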

Both flow-encoded and flow-compensated images were artifact suppressed and segmented using the same network. The images were combined (average of magnitudes and subtraction of phases), and the union of both segmentations was used as the final segmentation. From these, a flow curve was extracted and peak detection was performed for real-time monitoring of heart rate, stroke volume and cardiac output. The resulting images, segmentations and flow curves were displayed in real time at the scanner.
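The post-processing chain can be sketched as follows. The velocity conversion uses the standard phase contrast relation v = Venc·Δφ/π. The 0.5 segmentation threshold, the sign convention (forward flow positive), the simple threshold-plus-refractory-period peak detector and the peak-to-peak integration window for stroke volume are assumptions, and all function names are hypothetical.

```python
import numpy as np


def combine(enc, comp, seg_enc, seg_comp, venc=200.0, threshold=0.5):
    """Combine flow-encoded and flow-compensated complex images (frames, H, W).
    Returns average magnitude, velocity (cm/s) and union segmentation mask."""
    magnitude = 0.5 * (np.abs(enc) + np.abs(comp))
    phase_diff = np.angle(enc * np.conj(comp))   # phase subtraction, in (-pi, pi]
    velocity = venc * phase_diff / np.pi          # standard PC conversion
    mask = (seg_enc >= threshold) | (seg_comp >= threshold)  # union of segmentations
    return magnitude, velocity, mask


def flow_curve(velocity, mask, pixel_mm=2.08):
    """Flow (ml/s) per frame: sum of velocity (cm/s) over the mask times pixel area (cm^2)."""
    pixel_area_cm2 = (pixel_mm / 10.0) ** 2
    return (velocity * mask).sum(axis=(1, 2)) * pixel_area_cm2


def detect_peaks(flow, rel_height=0.5, min_distance=8):
    """Local maxima above rel_height * max(flow), at least min_distance frames apart."""
    threshold = rel_height * flow.max()
    peaks = []
    for i in range(1, len(flow) - 1):
        is_max = flow[i] > flow[i - 1] and flow[i] >= flow[i + 1]
        if is_max and flow[i] >= threshold and (not peaks or i - peaks[-1] >= min_distance):
            peaks.append(i)
    return np.array(peaks, dtype=int)


def beat_metrics(flow, peaks, dt=0.035):
    """Per-beat heart rate (bpm), stroke volume (ml) and cardiac output (L/min),
    integrating flow between consecutive peaks (one full cardiac cycle)."""
    hr, sv = [], []
    for a, b in zip(peaks[:-1], peaks[1:]):
        hr.append(60.0 / ((b - a) * dt))
        sv.append(flow[a:b].sum() * dt)
    hr, sv = np.array(hr), np.array(sv)
    return hr, sv, hr * sv / 1000.0
```

Cardiac output follows as heart rate × stroke volume, converted from ml/min to L/min. Note that `detect_peaks` sets its threshold from the maximum of the whole buffer it is given; a truly causal monitor would instead derive the threshold from past frames only.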

Reconstruction timings were recorded during acquisition on a Linux workstation (NVIDIA GeForce RTX, 12 GB) over frames 100 to 500.

Results

Simulations:

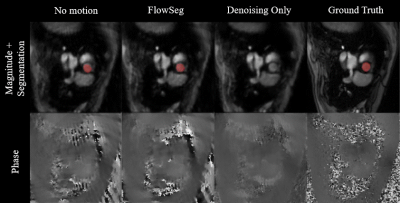

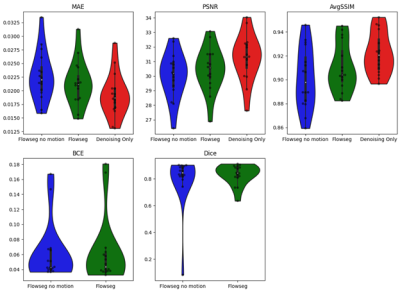

In-silico results for a representative test subject are shown in Figure 2, and quantitative results in Figure 3; the proposed model achieved mean scores of 0.021, 30.46, 0.91, 0.063 and 0.83 for MAE, PSNR, AvgSSIM, BCE and Dice, respectively. These comparisons indicate that our joint method provides segmentations in addition to the restored images, while only marginally decreasing image quality metrics (-0.003, -0.8 and -0.012 in MAE, PSNR and AvgSSIM, respectively). Motion augmentation led to more robust segmentation in the presence of motion (one severely failed case for the no motion model versus none for the motion model).

Prospective experiments:

The mean reconstruction time recorded at the scanner was 23.5 ms/frame, including 16.1 ms/frame for gridding and 7.1 ms/frame for joint deep artifact suppression and segmentation. This is shorter than the acquired temporal resolution of 35 ms/frame, making the method feasible for real-time visualization.
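A quick check of the timing budget from the reported values (the 0.3 ms/frame remainder is not itemized in the timings and presumably covers the remaining processing steps):

$$t_{\text{other}} = 23.5 - 16.1 - 7.1 = 0.3\ \text{ms/frame}$$

$$\frac{1000\ \text{ms/s}}{23.5\ \text{ms/frame}} \approx 42.6\ \text{frames/s} \;>\; \frac{1000\ \text{ms/s}}{35\ \text{ms/frame}} \approx 28.6\ \text{frames/s}$$

Processing therefore outpaces acquisition with about 35 − 23.5 = 11.5 ms/frame of headroom, so the reconstruction does not accumulate a backlog during scanning.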

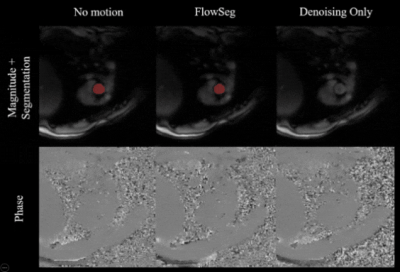

A short cine of a prospectively acquired subject at rest comparing the three different deep learning models (reconstructed offline) is shown in Figure 4. Results are in line with the ones obtained in simulations.

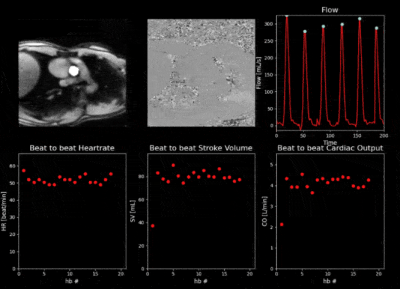

Finally, a video of the proposed interface for real-time monitoring, as recorded at the scanner during exercise, is provided in Figure 5. As expected, heart rate and cardiac output increase immediately at the start of exercise and decrease when exercise is interrupted, while stroke volume remains stable.

Conclusion

FlowSeg enables real-time joint deep artifact suppression and segmentation for low latency monitoring of aortic flow volumes. Adding the segmentation task only marginally affected final image quality, while motion data augmentation appeared to improve segmentation robustness. The framework could greatly facilitate workflow and provide a tool for real-time monitoring of flow volumes in stress applications (adenosine, dobutamine or exercise). Future work will aim to validate the method in a larger cohort.

Acknowledgements

This work was supported by the British Heart Foundation (grant: NH/18/1/33511). This work used the open source frameworks Gadgetron and TensorFlow MRI.

References

1. Jaubert, O. et al. Deep artifact suppression for spiral real-time phase contrast cardiac magnetic resonance imaging in congenital heart disease. Magn. Reson. Imaging 83, 125–132 (2021).

2. Odille, F., Steeden, J. A., Muthurangu, V. & Atkinson, D. Automatic segmentation propagation of the aorta in real-time phase contrast MRI using nonrigid registration. J. Magn. Reson. Imaging 33, 232–238 (2011).

3. Buchholz, T.-O., Prakash, M., Krull, A. & Jug, F. DenoiSeg: Joint Denoising and Segmentation. arXiv:2005.02987v2 (2020).

4. Hansen, M. S. & Sørensen, T. S. Gadgetron: An open source framework for medical image reconstruction. Magn. Reson. Med. 69, 1768–1776 (2013).

5. Montalt-Tordera, J. et al. TensorFlow MRI: A Library for Modern Computational MRI on Heterogenous Systems. Submitted to Proceedings of the ISMRM (2022).

Figures

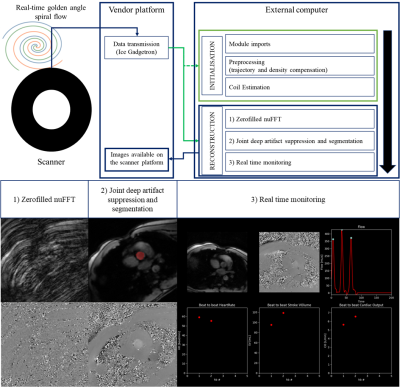

Figure 1. Proposed framework for low latency monitoring of aortic flow. Top: Real-time golden angle variable density spiral data are forwarded to an external computer using Gadgetron; the pipeline is initialized using the first 10 frames (i.e., module imports, trajectory, density compensation and coil estimation), and the proposed reconstruction and flow monitoring are performed during scanning. Flow maps and segmentations are sent back to the scanner. Bottom: Illustration of the different reconstruction steps.

Figure 2. Animated results from a representative test subject obtained with the three models and compared to the ground truth. The segmentation is overlaid on the magnitude images when available. Note the simulated translational motion (not included at training for the no motion model).

Figure 3. In-silico quantitative results obtained on the test set (16 subjects, all including motion). Top row: Violin plots comparing image quality metrics (MAE, PSNR and AvgSSIM) for three deep learning models: FlowSeg no motion (no motion augmentation at training), FlowSeg (proposed) and Denoising only. Bottom row: Comparison of segmentation metrics (BCE and Dice) for the models providing a segmentation output.

Figure 4. Animated cine of a prospectively acquired in-vivo subject reconstructed (offline) using the three different deep learning models. Top row: Combined magnitude and overlaid segmentation (in red). Bottom row: Combined flow maps.

Figure 5. Real-time flow monitoring during exercise, showing the first 5 seconds of exercise, 5 seconds just after interruption of exercise and the last 5 seconds of the acquisition. Top row: Magnitude with overlaid segmentation, phase, and extracted blood flow curve with detected peaks marked. Bottom row: Beat-to-beat heart rate, stroke volume and cardiac output as provided in real time. The increase/decrease in heart rate and cardiac output with stable stroke volume (as expected in healthy subjects) at the beginning/end of exercise is observed in real time.