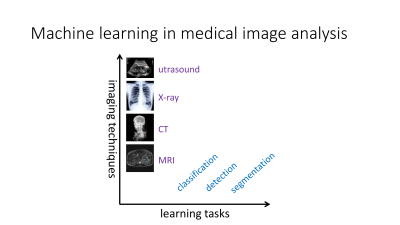

Perspectives on classification of 2D and 3D medical images

Krzysztof J. Geras¹

¹New York University, New York, NY, United States

Synopsis

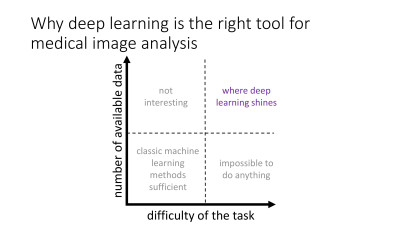

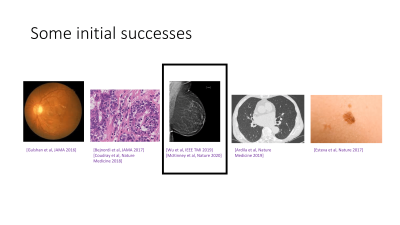

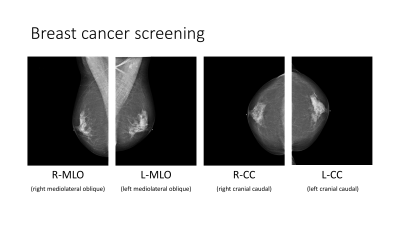

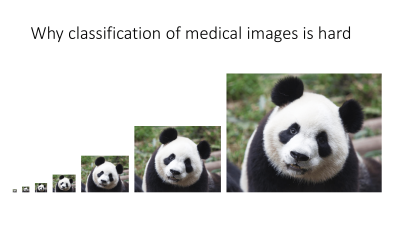

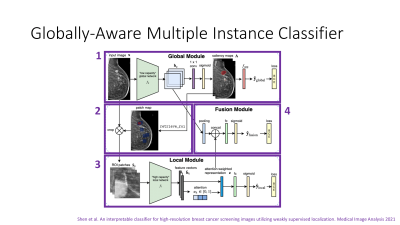

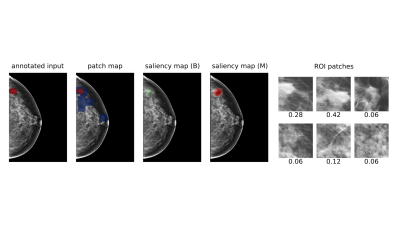

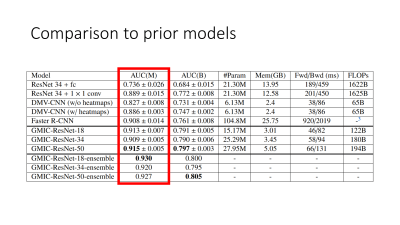

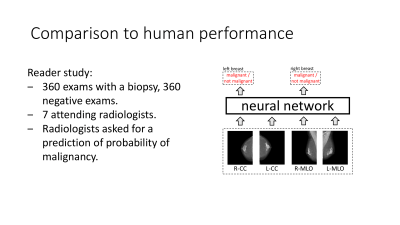

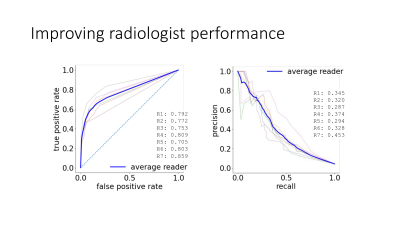

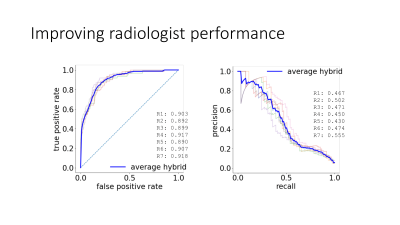

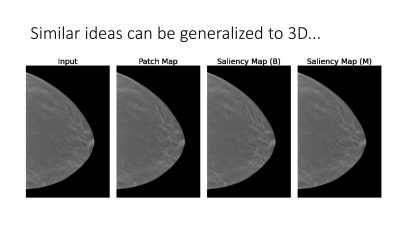

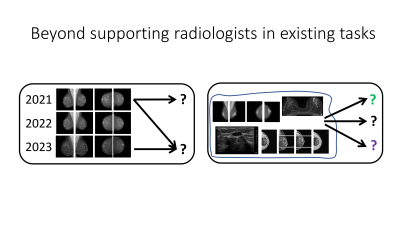

Although deep neural networks already perform well on many medical image analysis tasks, their clinical adoption has been slower than many anticipated a few years ago. One critical outstanding issue is the lack of explainability of the commonly used network architectures imported from computer vision. In my talk, I will explain how we created a new deep neural network architecture, tailored to medical image analysis, in which making a prediction is inseparable from explaining it. I will demonstrate how we used this architecture to build strong networks for interpreting breast cancer screening exams.
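To make the idea of a prediction that is "inseparable from explaining it" concrete, here is a minimal sketch of one general way such a coupling can be achieved. It is not the architecture presented in the talk, as the synopsis gives no details of it. The sketch assumes that a network outputs one logit per image location and that the image-level score is computed only by pooling the resulting saliency map. The function `predict_with_explanation`, the `top_k` parameter, and the toy input are hypothetical and chosen for illustration.

```python
import math


def sigmoid(x: float) -> float:
    """Map a logit to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def predict_with_explanation(logit_map, top_k=3):
    """Return (image_score, saliency_map) from per-location logits.

    logit_map is a 2D list of floats, standing in for the output of a
    convolutional backbone (an assumption; no backbone is modelled here).
    The image-level score is the mean of the top_k saliency values, so the
    map is the only route by which the score is produced.
    """
    # Per-location probability that the location contains a finding.
    saliency = [[sigmoid(v) for v in row] for row in logit_map]

    flat = sorted((s for row in saliency for s in row), reverse=True)
    if not flat:
        raise ValueError("logit_map must contain at least one value")

    # Top-k pooling: average the k most suspicious locations.
    k = max(1, min(top_k, len(flat)))
    score = sum(flat[:k]) / k
    return score, saliency


# Toy 2x2 "image" with one suspicious location in the bottom-right corner.
score, saliency = predict_with_explanation(
    [[-4.0, -4.0], [-4.0, 4.0]], top_k=1
)
```

With `top_k=1` the score equals the largest value in the saliency map, so the location that drives the prediction can be read directly from the map. Larger values of `top_k` average over several locations, which trades some of that directness for robustness to a single noisy location.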