Bayesian Approaches in ML

Archana Venkataraman1

1Johns Hopkins University, Baltimore, MD, United States

Synopsis

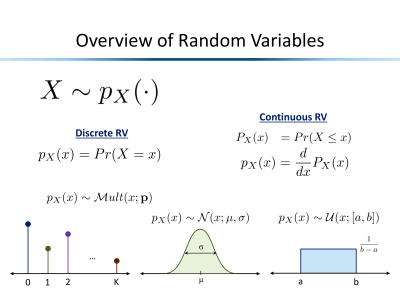

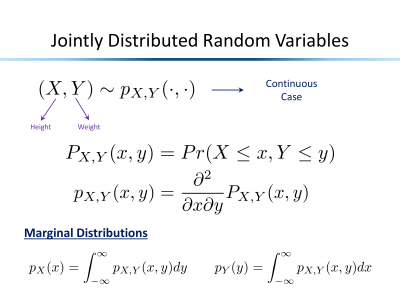

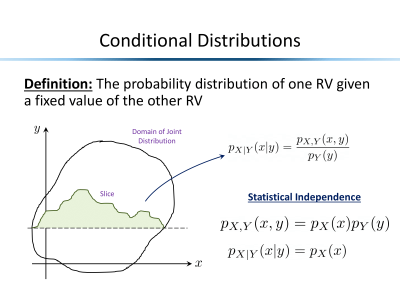

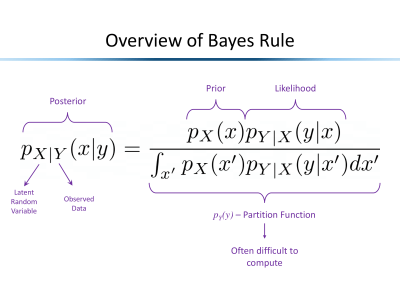

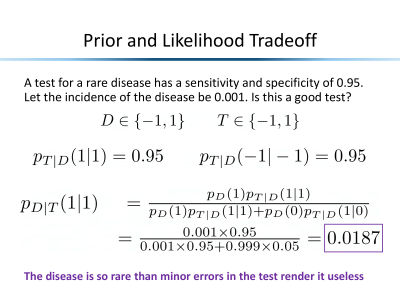

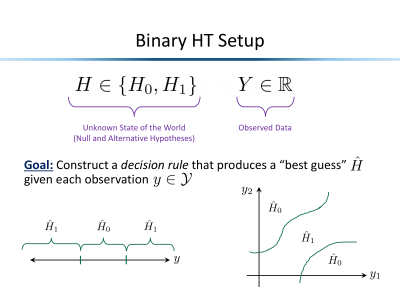

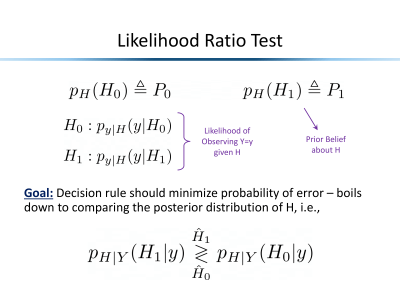

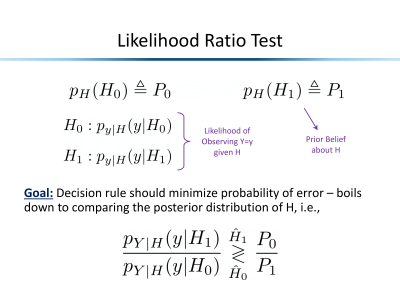

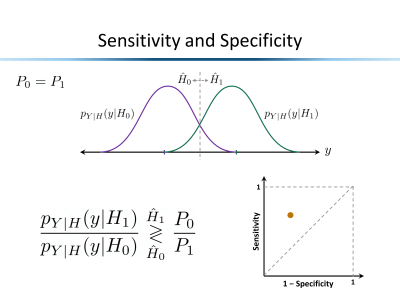

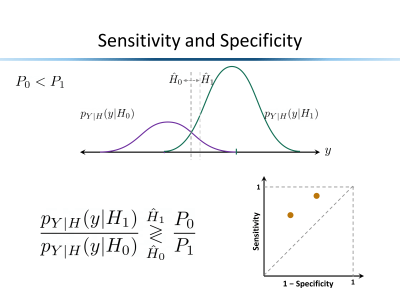

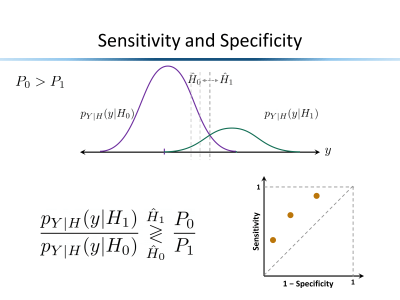

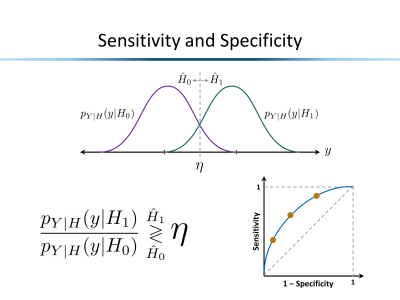

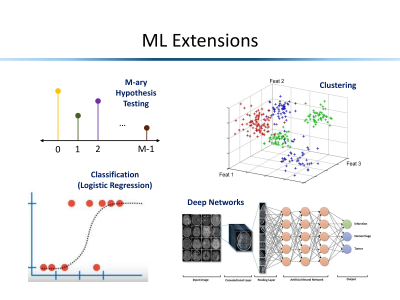

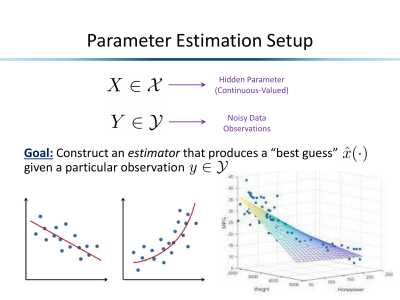

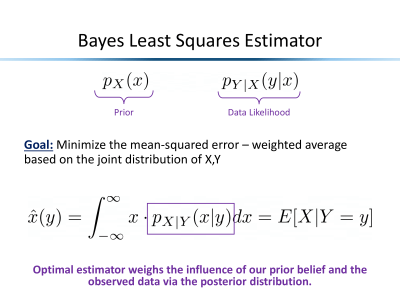

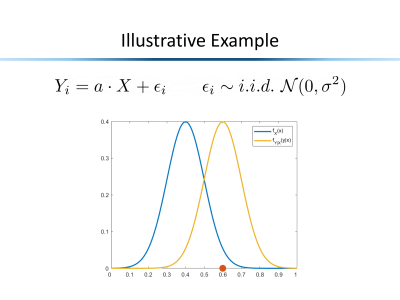

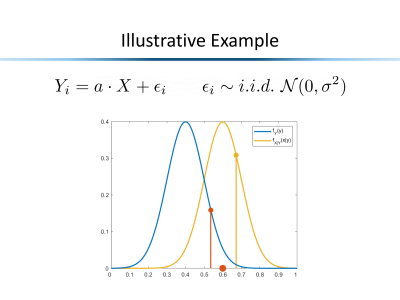

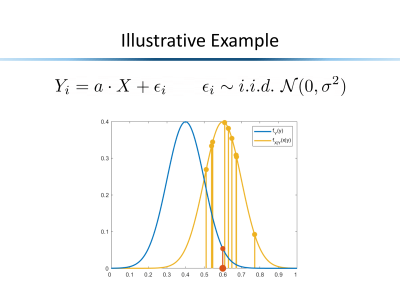

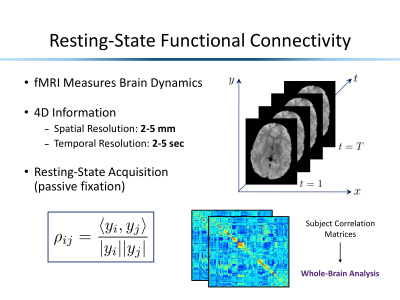

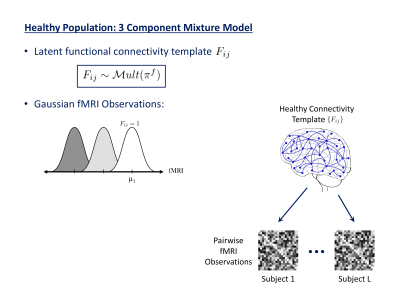

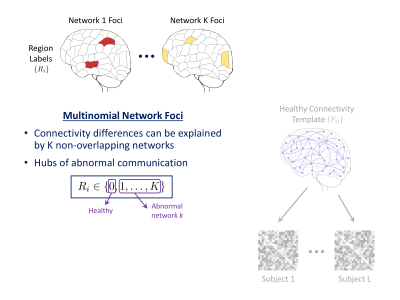

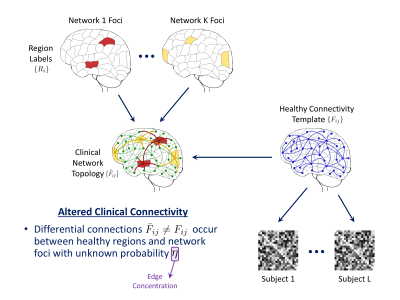

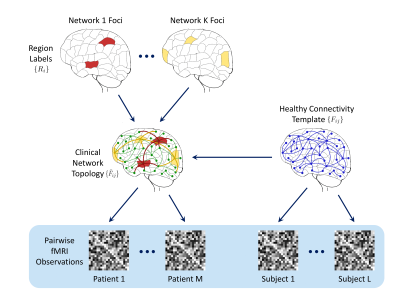

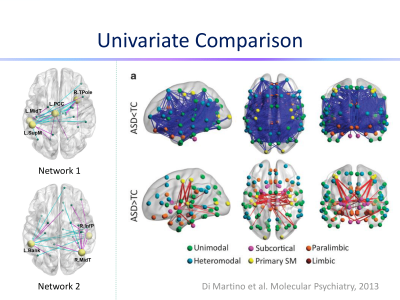

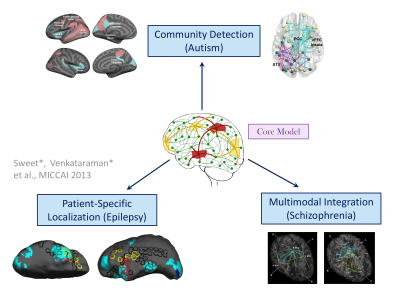

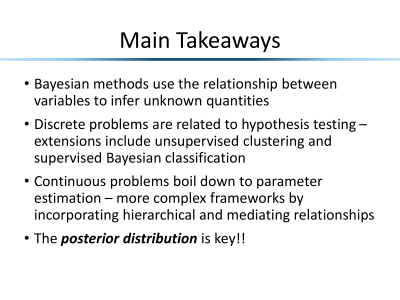

At its core, Bayesian machine learning (ML) is about making predictions from noisy, imperfect data. These predictions rely on the posterior distribution, which combines prior assumptions about the unknown quantities with a likelihood model for the observed data. This tutorial introduces classical themes in Bayesian analysis. We start with the fundamentals of random variables and conditional distributions and build up to the well-known Bayes' rule. From there, we turn to hypothesis testing and parameter estimation, including how to perform inference in each setting. Finally, we showcase a flexible and interpretable Bayesian model for functional connectomics.
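To make the role of the posterior concrete, here is a minimal Python sketch of the two settings named above. It is illustrative only and is not taken from the tutorial: the function names, the two-hypothesis example, and the Beta prior on a Bernoulli success probability are all assumptions chosen for simplicity. The first function applies Bayes' rule over a finite set of hypotheses (posterior proportional to prior times likelihood); the second performs a conjugate Beta-Bernoulli update as a simple case of parameter estimation.

```python
def discrete_posterior(prior, likelihood):
    """Bayes' rule over a finite set of hypotheses.

    prior[h]      : P(h), prior probability of hypothesis h
    likelihood[h] : P(data | h), likelihood of the observed data under h
    Returns P(h | data) for every h.
    """
    unnormalized = {h: prior[h] * likelihood[h] for h in prior}
    evidence = sum(unnormalized.values())  # P(data), the normalizing constant
    if evidence == 0:
        raise ValueError("observed data has zero probability under every hypothesis")
    return {h: value / evidence for h, value in unnormalized.items()}


def beta_bernoulli_update(alpha, beta, observations):
    """Conjugate update: Beta(alpha, beta) prior with 0/1 Bernoulli observations.

    Returns the parameters of the Beta posterior.
    """
    successes = sum(observations)
    failures = len(observations) - successes
    return alpha + successes, beta + failures


def beta_mean(alpha, beta):
    """Mean of a Beta(alpha, beta) distribution."""
    return alpha / (alpha + beta)


if __name__ == "__main__":
    # Hypothesis testing: two equally likely hypotheses (hypothetical numbers).
    posterior = discrete_posterior(
        prior={"H0": 0.5, "H1": 0.5},
        likelihood={"H0": 0.2, "H1": 0.6},
    )
    print(posterior)  # H1 receives about three times the posterior mass of H0

    # Parameter estimation: Beta(2, 2) prior, then 6 successes and 2 failures.
    a, b = beta_bernoulli_update(2.0, 2.0, [1, 0, 1, 1, 0, 1, 1, 1])
    print(a, b, beta_mean(a, b))
```

In the hypothesis-testing case, choosing the hypothesis with the largest posterior probability gives the maximum a posteriori decision. In the estimation case, the posterior mean sits between the prior mean (0.5) and the observed success fraction (0.75), which shows how the prior and the likelihood are traded off.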