4123

Reduction of B1-field induced inhomogeneity for body imaging at 7T using deep learning and synthetic training data

¹TU Eindhoven, Utrecht, Netherlands, ²UMC Utrecht, Utrecht, Netherlands, ³TU Eindhoven, Rossum, Netherlands

Synopsis

Ultra-high-field MR images suffer from severe image inhomogeneity and artefacts due to the B1 field. Deep learning is a potential solution to this problem, but training is difficult because no perfectly homogeneous 7T images exist that could serve as ground truth. In this work, artificial training data were created using numerically simulated 7T B1 fields, perfectly homogeneous 1.5T images and a signal model that adds typical 7T B1 inhomogeneity on top of the 1.5T images. A Pix2Pix model was trained and tested on out-of-domain data, where it outperforms classic bias-field-reducing algorithms.

Introduction

Ultra-high-field MRI is known to provide higher SNR and superior resolution compared with clinical field strengths. The downside is that the increased B1-field frequency causes strongly inhomogeneous transmit and receive fields, and therefore image artefacts and inhomogeneities. This effect is particularly pronounced for 7T body imaging, where the larger cross-sectional area allows for more extensive interference patterns within the field of view. Although these inhomogeneities can typically be avoided for smaller imaging targets, they make 7T images look less attractive, arguably impeding clinical adoption of 7T body imaging. Therefore, various techniques have been presented to address this inhomogeneity. For receive inhomogeneities, the standard approach is to measure the receive fields by comparison to a body coil image. Since 7T does not have a body coil image, this method is less effective. Other approaches include histogram-based methods such as bi-histogram equalization¹ and non-parametric approaches such as the N4 algorithm². On the transmit side, efforts have mostly focused on adapting the RF pulse such that more homogeneous flip angle distributions are obtained³,⁴. In this work we aim to use deep learning to train a network that adjusts T2w 7T prostate images and reduces or removes the B1-field induced image inhomogeneity and artefacts. The difficulty is that no perfectly homogeneous 7T images exist that could serve as ground truth for the training set. Therefore, artificial 7T images have been created using numerically simulated 7T B1 field patterns and existing 1.5T T2w prostate images.

Methods

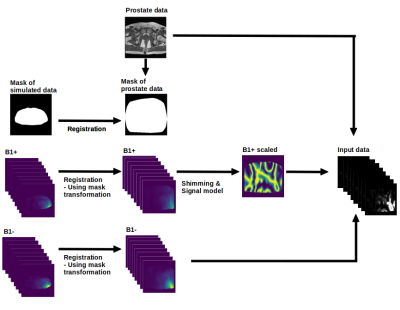

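The procedure outlined in Figure 1 and described in the paragraph that follows can be sketched roughly as below. This is a minimal illustration under stated assumptions, not the authors' implementation: the fields are assumed to be already registered to the 1.5T image, the shimming step is reduced to a simple phase shim over a region of interest, and the signal model is assumed to follow a spin-echo-like sin³ dependence on the local flip angle. The function names `phase_shim` and `synthesize_7t` and all parameters are hypothetical.

```python
import numpy as np

def phase_shim(b1_plus, roi_mask):
    """Combine transmit channels so that their phases align inside the ROI.

    b1_plus : complex array (n_tx, H, W), simulated B1+ per transmit channel
    roi_mask: boolean array (H, W), e.g. a prostate region (assumed given)
    """
    # Mean phase of each channel inside the ROI
    mean_phase = np.angle(np.sum(b1_plus * roi_mask, axis=(1, 2)))
    weights = np.exp(-1j * mean_phase)            # phase-only shim weights
    return np.tensordot(weights, b1_plus, axes=(0, 0))  # combined field (H, W)

def synthesize_7t(image, b1_plus, b1_minus, roi_mask, nominal_flip_deg=90.0):
    """Turn a homogeneous image into per-channel pseudo-7T images.

    image   : real array (H, W), homogeneous 1.5T image (training target)
    b1_minus: complex array (n_rx, H, W), simulated receive fields
    Returns a real array (n_rx, H, W), one image per receive channel.
    """
    shimmed = np.abs(phase_shim(b1_plus, roi_mask))
    # Scale so the nominal flip angle is reached on average inside the ROI
    flip = np.deg2rad(nominal_flip_deg) * shimmed / shimmed[roi_mask].mean()
    # ASSUMPTION: spin-echo-like transmit weighting, signal ~ sin^3(flip)
    transmit_weight = np.abs(np.sin(flip)) ** 3
    # Receive sensitivity scales each channel image by |B1-|
    return image[None] * transmit_weight[None] * np.abs(b1_minus)
```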
In this work we use 1.5T prostate images as a substitute for homogeneous 7T data. The 1.5T images are processed into seemingly realistic 7T images using numerically simulated B1 field distributions from 23 custom-made models⁵. These distributions, combined with 40 1.5T prostate images, result in around 1600 images without any data augmentation. The procedure for one image is outlined in Figure 1. First, we register the simulated B1+ and B1- fields to the shape of the 1.5T prostate image. Subsequently, we apply a shimming procedure to the registered B1+ data and, on top of that, a signal model that mimics the signal produced for a T2w image⁶. We then combine the mimicked T2w prostate image with the registered B1- data to obtain 8 quasi-realistic single-channel 7T prostate images. These images serve as input, while the undisturbed 1.5T image is the target. Finally, we choose Pix2Pix⁷ as the deep learning architecture, together with a perceptual-style loss⁸. The performance of the deep learning model is compared visually against N4² and BBHE¹.

Results

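For orientation, the BBHE baseline used in the comparison can be illustrated with a basic bi-histogram equalization: the histogram is split at the mean intensity and each half is equalized separately, so that the mean brightness is roughly preserved. The sketch below is an assumption-laden simplification for 8-bit images; it omits the adaptive sigmoid functions of the cited variant¹, and the function name `bbhe` is hypothetical.

```python
import numpy as np

def bbhe(image):
    """Basic bi-histogram equalization of an 8-bit image (uint8 expected)."""
    img = np.asarray(image, dtype=np.uint8)
    mean = int(img.mean())                      # split point of the histogram
    hist = np.bincount(img.ravel(), minlength=256).astype(float)
    lut = np.arange(256, dtype=float)           # identity mapping by default

    lower, upper = hist[:mean + 1], hist[mean + 1:]
    if lower.sum() > 0:
        # Equalize the dark half onto [0, mean]
        lut[:mean + 1] = mean * np.cumsum(lower) / lower.sum()
    if upper.sum() > 0:
        # Equalize the bright half onto [mean + 1, 255]
        lut[mean + 1:] = (mean + 1) + (254 - mean) * np.cumsum(upper) / upper.sum()

    return np.round(lut).astype(np.uint8)[img]  # apply the lookup table
```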
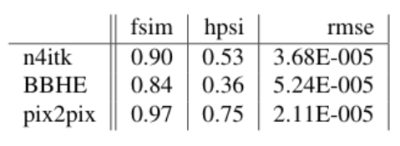

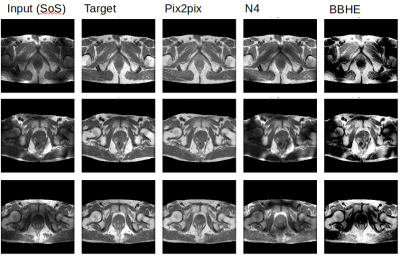

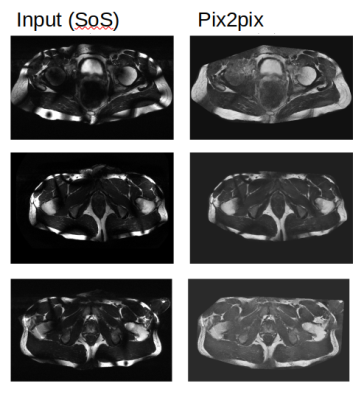

Figure 3 shows the results of the models on the test set. In the three examples shown, Pix2Pix clearly matches the target best. This visual assessment agrees with the metric values computed over the test set, shown in Figure 2. Further metrics, e.g. SSIM, were initially considered but did not give conclusive results. In Figure 4, Pix2Pix is applied to three real 7T T2w prostate images to show how well the model generalizes to measured data. The results show a clear reduction of image inhomogeneity, including a decrease in banding artefacts around the edges. Small edges seem to be preserved, as well as severe destructive interference bands. In addition, we apply the trained Pix2Pix model to three out-of-domain images, shown in Figure 5, where we notice an overall improvement in the visual appearance of the input.

Discussion

Based on visual inspection of the results, combined with the metrics shown in Figure 2, the deep learning model shows a clear improvement over standard image processing techniques. The same holds for the results on real measured 7T data, shown in Figure 4, and on the out-of-domain data in Figure 5. The results look promising but need careful validation before application. In a future step we will investigate whether key image performance metrics such as SNR, sharpness and resolution are preserved. The contrast also seems to be reduced in some of the images, which should preferably be avoided. Hardly any black bands are present in the resulting images, which increases the visual appeal of the prostate images. However, this could also be considered a drawback, since the algorithm may 'invent' parts of the image where no data is present, pretending to provide information from regions where none is available. Future work will aim to combine this approach with multiple shim settings in one acquisition⁴ to avoid signal voids altogether. An alternative solution is not to predict the undisturbed image directly but rather to predict the bias field and correct the original image accordingly. Although this alternative approach has been pursued in parallel, it has not yet been successful.

Conclusion

We have presented a method to create synthetic 7T imaging data from simulated B1 fields and 1.5T images. These images are used to train a Pix2Pix network to reduce B1-induced inhomogeneities in 7T images. The approach was applied to T2w prostate images, where the network clearly and reliably reduces the inhomogeneity of real 7T images. Without retraining, the network also performs well on out-of-domain 7T data such as T1w prostate, liver and knee images.

Acknowledgements

No acknowledgement found.

References

1. Arriaga-Garcia, E. F., Sanchez-Yanez, R. E. & Garcia-Hernandez, M. Image enhancement using bi-histogram equalization with adaptive sigmoid functions. In 2014 International Conference on Electronics, Communications and Computers (CONIELECOMP), 28–34 (IEEE, 2014).

2. Tustison, N. J. et al. N4ITK: improved N3 bias correction. IEEE Transactions on Medical Imaging 29, 1310–1320 (2010).

3. Adriany, G., Van de Moortele, P. F., Wiesinger, F., Moeller, S., Strupp, J. P., Andersen, P., Snyder, C., Zhang, X., Chen, W., Pruessmann, K. P. & Boesiger, P. Transmit and receive transmission line arrays for 7 Tesla parallel imaging. Magnetic Resonance in Medicine 53, 434–445 (2005).

4. Orzada, S., Maderwald, S., Poser, B. A., Bitz, A. K., Quick, H. H. & Ladd, M. E. RF excitation using time interleaved acquisition of modes (TIAMO) to address B1 inhomogeneity in high‐field MRI. Magnetic Resonance in Medicine 64, 327–333 (2010).

5. Meliadò, E. F., van den Berg, C. A., Luijten, P. R. & Raaijmakers, A. J. Intersubject specific absorption rate variability analysis through construction of 23 realistic body models for prostate imaging at 7T. Magnetic Resonance in Medicine 81, 2106–2119 (2019).

6. Wang, J., Qiu, M., Yang, Q. X., Smith, M. B. & Constable, R. T. Measurement and correction of transmitter and receiver induced nonuniformities in vivo. Magnetic Resonance in Medicine 53, 408–417 (2005).

7. Isola, P., Zhu, J.-Y., Zhou, T. & Efros, A. A. Image-to-image translation with conditional adversarial networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, 1125–1134 (2017).

8. Johnson, J., Alahi, A. & Fei-Fei, L. Perceptual losses for real-time style transfer and super-resolution. In European Conference on Computer Vision, 694–711 (Springer, 2016).

Figures