4115

Classification of Cancer at Prostate MRI: Artificial Intelligence versus Clinical Assessment and Human-Machine Synergy

1 Radiology, Renji Hospital, Shanghai Jiaotong University School of Medicine, Shanghai, China; 2 United Imaging Healthcare, Shanghai, China; 3 Shanghai United Imaging Intelligence Co. Ltd, Shanghai, China

Synopsis

The interpretation of multiparametric MRI (mpMRI) is limited by the expertise it requires and by interobserver variability. Here we present an AI model whose accuracy in diagnosing prostate cancer (PCa) is within the range of general radiologists; its low false-negative rate and high sensitivity could help reduce missed diagnoses of PCa, especially clinically significant PCa (csPCa). To assess its performance in a clinical setting, we performed internal and external validation and compared the performance of radiologists, the AI model, and human-machine synergy. Although the AI alone performed suboptimally, the human-led synergy method performed equivalently to clinical assessment with improved consistency, and it can serve as a comparison standard for more complex deep learning and synergy approaches in the future.

INTRODUCTION

MRI is currently recognized as the best imaging method for diagnosing prostate cancer (PCa), while the potential of artificial intelligence (AI) to provide diagnostic support for human interpretation requires further evaluation. The aim of our study was therefore to develop an AI model for prostate segmentation and PCa detection based on a multi-center cohort and to explore possible methods of human-machine synergy.

METHODS

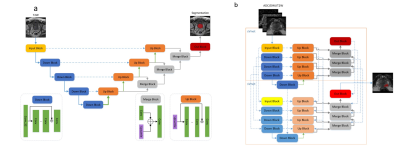

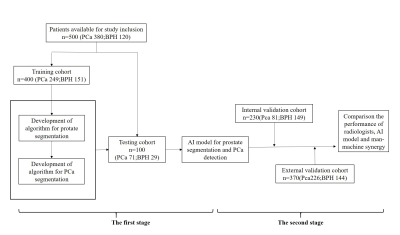

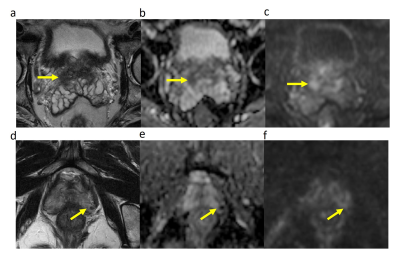
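The two human-machine synergy rules described in this section can be written as a short decision function. This is a minimal sketch under stated assumptions, not the authors' implementation: the `Case` fields, the `read` callback standing in for a radiologist's PI-RADS v2.1 reading, and especially the probability band used to flag "error-prone" cases (`error_prone=(0.5, 0.7)`) are hypothetical, because the abstract does not say how the error-prone areas were defined.

```python
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass
class Case:
    """Per-patient AI output (field names are hypothetical)."""
    case_id: str
    ai_positive: bool      # AI system's binary PCa call
    ai_probability: float  # AI output probability of PCa


# Hypothetical stand-in for a radiologist's PI-RADS v2.1 reading:
# returns True if the radiologist classifies the patient as positive.
RadiologistRead = Callable[[Case], bool]


def ai_led(case: Case, read: RadiologistRead,
           error_prone: Tuple[float, float] = (0.5, 0.7)) -> bool:
    """AI-led synergy: the radiologist reads only AI-positive cases inside an error-prone band."""
    if not case.ai_positive:
        return False               # AI 'negative' accepted (low FNR in the training set)
    low, high = error_prone        # assumed band; not defined in the abstract
    if low <= case.ai_probability <= high:
        return read(case)          # error-prone case: the radiologist decides
    return True                    # all other cases keep the AI result


def human_led(case: Case, read: RadiologistRead) -> bool:
    """Human-led synergy: every AI-positive case is reviewed by the radiologist."""
    if not case.ai_positive:
        return False               # AI 'negative' accepted
    return read(case)              # final category follows the radiologist


if __name__ == "__main__":
    def cautious_reader(case: Case) -> bool:
        return False               # toy reader that calls every case negative

    cases = [Case("a", False, 0.10), Case("b", True, 0.95), Case("c", True, 0.60)]
    print([ai_led(c, cautious_reader) for c in cases])     # [False, True, False]
    print([human_led(c, cautious_reader) for c in cases])  # [False, False, False]
```

Counting how often `read` is called gives a rough proxy for radiologist workload: under these assumptions the AI-led rule calls it only for AI-positive cases inside the band, whereas the human-led rule calls it for every AI-positive case.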

In this retrospective study, T2-weighted and diffusion-weighted prostate MRI sequences and apparent diffusion coefficient (ADC) maps from consecutive men examined between 2015 and 2018 on different 3.0-T MRI scanners with different protocols were manually segmented. Extended systematic prostate biopsy served as the ground truth. AI models with different architectures (Vnet, small Vnet [sVnet], and multi-small Vnet [msVnet]) were compared (Fig. 1), and the performance of the radiologists, the AI model, and human-machine synergy was compared in both the internal and external validation groups (Fig. 2). We tested two methods of human-machine synergy. Because the AI showed a low false-negative rate (FNR) in the training set, both methods accepted the cases labelled "negative" by the AI system. In the first method (AI-led), error-prone areas for the AI were identified according to its output probabilities and used as quick filters: the radiologists performed a clinical reading of test-set cases falling into these areas, and all other cases were assigned the AI result (Fig. 3). In the second method (human-led), the radiologists used PI-RADS v2.1 to review the patients labelled "positive" by the AI system, and each patient was classified according to the radiologist's result.

Results

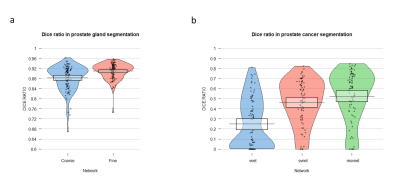
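Segmentation quality in this study is reported as the Dice similarity coefficient, DSC = 2|A ∩ B| / (|A| + |B|), where A and B are the predicted and reference voxel sets, summarised as mean and median over the test group. The sketch below assumes masks are flattened to 0/1 sequences; scoring two empty masks as 1.0 is a common convention assumed here, not something the abstract specifies.

```python
from statistics import mean, median
from typing import Sequence, Tuple


def dice(pred: Sequence[int], truth: Sequence[int]) -> float:
    """Dice similarity coefficient, DSC = 2|A ∩ B| / (|A| + |B|), for flattened binary masks."""
    if len(pred) != len(truth):
        raise ValueError("masks must contain the same number of voxels")
    overlap = sum(1 for p, t in zip(pred, truth) if p and t)         # |A ∩ B|
    total = sum(1 for p in pred if p) + sum(1 for t in truth if t)   # |A| + |B|
    if total == 0:
        return 1.0  # both masks empty: scored as full agreement (assumed convention)
    return 2.0 * overlap / total


def summarize(scores: Sequence[float]) -> Tuple[float, float]:
    """Mean and median DSC over test cases, the two statistics quoted in the Results."""
    return mean(scores), median(scores)


if __name__ == "__main__":
    predicted = [1, 1, 1, 0, 0, 0]
    reference = [0, 1, 1, 1, 0, 0]
    print(dice(predicted, reference))  # 2*2 / (3+3), about 0.667
```

In practice the same function applies to 3-D masks after flattening them (for example with NumPy's `ravel()`).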

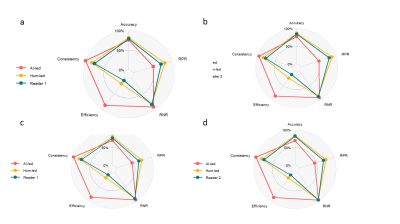
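The diagnostic figures in the Results are simple ratios over biopsy-positive patients: sensitivity = TP/(TP+FN) and FNR = FN/(TP+FN), so the two always sum to 1 (for example 73/81 and 8/81). The κ values are interpreted here as Cohen's kappa between two readings of the same cases, which is an assumption because the abstract does not name the statistic or the reading design; the descriptive labels follow the widely used Landis and Koch bands. All function names are illustrative.

```python
from collections import Counter
from typing import Hashable, Sequence, Tuple


def sensitivity_fnr(tp: int, fn: int) -> Tuple[float, float]:
    """Sensitivity = TP/(TP+FN); FNR = FN/(TP+FN) = 1 - sensitivity."""
    positives = tp + fn  # biopsy-proven cancers
    return tp / positives, fn / positives


def cohens_kappa(first: Sequence[Hashable], second: Sequence[Hashable]) -> float:
    """Cohen's kappa between two readings of the same cases."""
    n = len(first)
    if n == 0 or n != len(second):
        raise ValueError("readings must be non-empty and of equal length")
    p_observed = sum(a == b for a, b in zip(first, second)) / n
    c1, c2 = Counter(first), Counter(second)
    p_expected = sum(c1[k] * c2[k] for k in set(c1) | set(c2)) / n ** 2
    if p_expected == 1.0:
        return 1.0  # both readings constant and identical: kappa undefined, reported as 1
    return (p_observed - p_expected) / (1.0 - p_expected)


def landis_koch(kappa: float) -> str:
    """Landis-Koch descriptive band for a kappa value."""
    if kappa < 0:
        return "poor"
    for upper, label in [(0.20, "slight"), (0.40, "fair"),
                         (0.60, "moderate"), (0.80, "substantial")]:
        if kappa <= upper:
            return label
    return "almost perfect"


if __name__ == "__main__":
    # AI counts from the Results: internal test set, all PCa (TP = 73, FN = 8)
    sens, fnr = sensitivity_fnr(tp=73, fn=8)
    print(f"sensitivity={sens:.3f}, FNR={fnr:.3f}")  # sensitivity=0.901, FNR=0.099
    print(landis_koch(0.836), landis_koch(0.748))    # almost perfect substantial
```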

A total of 1100 patients (median age, 68 years; interquartile range [IQR], 60–73 years) were included. For prostate gland segmentation, the coarse segmentation achieved a mean/median Dice similarity coefficient (DSC) of 0.88/0.89, whereas the fine segmentation performed better, with a mean/median DSC of 0.91/0.91 in the testing group (Fig. 4a). For PCa segmentation, msVnet performed best among the three networks (Fig. 4b). In PCa diagnosis, the accuracy of the AI was comparable to that of one radiologist and inferior to that of the other in the internal test set (182/230 vs 195/230, P = 0.079; 182/230 vs 199/230, P = 0.027), and inferior to both radiologists in the external test set (268/370 vs 294/370, P = 0.01; 268/370 vs 318/370, P < 0.001). However, the AI achieved a low FNR and high sensitivity (FNR 8/81 and sensitivity 73/81 in the internal test set; 16/226 and 210/226 in the external test set), especially for clinically significant PCa (csPCa) (2/69 and 67/69; 4/176 and 172/176). In the external test set, the human-led synergy method (306/370 and 326/370) was superior to the AI-led method (284/370; P = 0.012 and P < 0.001) and equal to or better than the radiologists (326/370 vs 318/370, P = 0.077; 306/370 vs 294/370, P = 0.004). Regarding the internal consistency of PCa assessment, the AI-led and human-led methods showed almost perfect internal consistency in both the internal validation cohort (κ = 0.991 and 0.836) and the external validation cohort (κ = 1.000 and 0.828), whereas the two radiologists showed lower internal consistency (κ = 0.748 and 0.770).

DISCUSSION

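The discussion below attributes part of the model's behaviour to a two-stage cascade: one network separates the whole gland from background, and a second segments PCa within the gland. The sketch shows that control flow only. `gland_net` and `lesion_net` are hypothetical callables standing in for trained networks such as the Vnet variants; the single-channel input, the 0.5 threshold, and cropping the second stage to a padded gland bounding box are assumptions, not details given in the abstract.

```python
from typing import Callable, Optional, Tuple

import numpy as np

# Hypothetical stand-in for a trained network: maps an image array to a
# same-shaped probability map. Multi-sequence (T2W/DWI/ADC) input is omitted.
SegNet = Callable[[np.ndarray], np.ndarray]


def gland_box(mask: np.ndarray, margin: int = 2) -> Optional[Tuple[slice, ...]]:
    """Bounding box of a binary mask, padded by `margin` voxels and clipped to the array."""
    coords = np.argwhere(mask)
    if coords.size == 0:
        return None
    lo = np.maximum(coords.min(axis=0) - margin, 0)
    hi = np.minimum(coords.max(axis=0) + margin + 1, mask.shape)
    return tuple(slice(int(a), int(b)) for a, b in zip(lo, hi))


def cascade_segment(volume: np.ndarray, gland_net: SegNet, lesion_net: SegNet,
                    threshold: float = 0.5, margin: int = 2) -> Tuple[np.ndarray, np.ndarray]:
    """Two-stage cascade: gland vs. background, then PCa within the gland region."""
    gland = gland_net(volume) > threshold          # stage 1 on the full volume
    lesion = np.zeros_like(gland)
    box = gland_box(gland, margin)
    if box is None:
        return gland, lesion                       # no gland found: skip lesion search
    lesion_prob = lesion_net(volume[box])          # stage 2 sees only the gland crop
    lesion[box] = (lesion_prob > threshold) & gland[box]  # keep PCa voxels inside the gland
    return gland, lesion


if __name__ == "__main__":
    vol = np.zeros((10, 10))
    vol[3:7, 3:7] = 1.0   # toy "gland"
    vol[4, 4] = 2.0       # toy "lesion"
    gland, lesion = cascade_segment(vol, lambda v: (v > 0.5).astype(float),
                                    lambda v: (v > 1.5).astype(float))
    print(int(gland.sum()), int(lesion.sum()))  # 16 1
```

Restricting stage 2 to the gland crop mirrors the rationale in the text: the second network never has to learn background anatomy, only PCa versus benign gland tissue.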
In this study, we present an AI model for the clinically relevant tasks of prostate gland segmentation and PCa identification, covering five medical centers and six different scanners. The accuracy of our AI system was within the range reported for general radiologists in both the internal and external test sets (1-6), which suggests that AI has the capacity to complete the diagnosis of PCa independently. Notably, the AI achieved a low FNR and high sensitivity, so using the AI model could help reduce missed diagnoses of PCa, especially csPCa. Regarding human-machine synergy, the AI-led method was the most time-saving and showed optimal consistency with satisfactory accuracy. However, the human-led method was the best choice overall. Although it was not the most efficient method, it performed comparably to the senior radiologist; for the junior radiologist, it saved time while maintaining accuracy by allowing greater focus on the "positive" results; and its internal consistency was improved compared with the radiologists' readings. Both the AI and the junior radiologist performed worse in the external test set, whereas the improvement in diagnostic performance was maintained with the human-machine synergy method, which suggests that combining AI with radiologists may be a feasible clinical application under current technical conditions. Accurate label information is crucial for AI model training: our results showed that the AI did not outperform the person who did the labelling, which highlights the importance of improving labelling methods. In addition, compared with single-stage networks (7, 8), our two-stage cascaded network lets the first network concentrate solely on learning discriminative features for whole-gland vs. background segmentation, and the second network concentrate on learning how to segment PCa within the whole prostate gland.

Conclusion

Our study developed an AI model for prostate segmentation and PCa detection that accounts for the diversity of MR scanning protocols and medical centers. Although the automated approach performed suboptimally, the human-led synergy method performed equivalently to clinical assessment with improved consistency, and it can serve as a comparison standard when developing more complex deep learning and synergistic approaches in the future.

Acknowledgements

This study was supported by the National Natural Science Foundation of China [grant numbers 81601487, 81601453], the Science and Technology Commission of Shanghai Municipality [grant number 18DZ1930104], and the Shanghai Pujiang Program [grant number 19PJ1431900].

References

1. Pickersgill NA, Vetter JM, Andriole GL, et al. Accuracy and Variability of Prostate Multiparametric Magnetic Resonance Imaging Interpretation Using the Prostate Imaging Reporting and Data System: A Blinded Comparison of Radiologists. Eur Urol Focus. 2020;6:267-272.

2. Hoda A. El-Kareem A. El-Samei, Mohamed Farghaly Amin, Ebtesam Esmail Hassan. Assessment of the accuracy of multi-parametric MRI with PI-RADS 2.0 scoring system in the discrimination of suspicious prostatic focal lesions. The Egyptian Journal of Radiology and Nuclear Medicine. 2016;47:1075-1082.

3. Kasel-Seibert M, Lehmann T, Aschenbach R, et al. Assessment of PI-RADS V2 for the Detection of Prostate Cancer. Eur J Radiol. 2016;85:726-731.

4. Lin WC, Muglia VF, Silva GE, Chodraui Filho S, Reis RB, Westphalen AC. Multiparametric MRI of the prostate: diagnostic performance and interreader agreement of two scoring systems. Br J Radiol. 2016;89(1062):20151056.

5. Mertan FV, Greer MD, Shih JH, et al. Prospective Evaluation of the Prostate Imaging Reporting and Data System Version 2 for Prostate Cancer Detection. J Urol. 2016;196:690-696.

6. Park SY, Jung DC, Oh YT, et al. Prostate Cancer: PI-RADS Version 2 Helps Preoperatively Predict Clinically Significant Cancers. Radiology. 2016;280:108-116.

7. Ishioka J, Matsuoka Y, Uehara S, et al. Computer-aided diagnosis of prostate cancer on magnetic resonance imaging using a convolutional neural network algorithm. BJU Int. 2018;122:411-417.

8. Schelb P, Kohl S, Radtke JP, et al. Classification of Cancer at Prostate MRI: Deep Learning versus Clinical PI-RADS Assessment. Radiology. 2019;293:607-617.

Figures