4108

A mutual communicated model based on multi-parametric MRI for automated prostate cancer segmentation and classification

Piqiang Li1, Zhao Li2, Qinjia Bao2, Kewen Liu1, Xiangyu Wang3, Guangyao Wu4, and Chaoyang Liu2

1School of Information Engineering, Wuhan University of Technology, Wuhan, China, 2State Key Laboratory of Magnetic Resonance and Atomic and Molecular Physics, Wuhan Institute of Physics and Mathematics, Innovation Academy for Precision Measurement Science and Technology, Wuhan, China, 3Department of Radiology, The First Affiliated Hospital of Shenzhen University, Shenzhen, China, 4Department of Radiology, Shenzhen University General Hospital, Shenzhen, China

Synopsis

We propose a Mutual Communicated Deep learning Segmentation and Classification Network (MC-DSCN) for prostate cancer based on multi-parametric MRI. The network consists of three mutually bootstrapping components: the coarse segmentation component provides coarse-mask information to the classification component, the mask-guided classification component generates location maps from multi-parametric MRI, and the fine segmentation component is guided by these location maps. By jointly performing segmentation based on pixel-level information and classification based on image-level information, the network improves segmentation and classification accuracy simultaneously.

INTRODUCTION

Prostate cancer (PCa) is currently the most common cancer of the male urinary system worldwide. Systematic biopsy has remained the standard diagnostic route for PCa despite its associated risks1. MRI, and in particular multi-parametric MRI, provides a noninvasive way to characterize and diagnose prostate cancer. Recent years have seen rapid developments in multi-parametric MRI, in particular through the Prostate Imaging Reporting and Data System (PI-RADS) scoring system2. However, reviewing and relating multiple images places an additional burden on the radiologist and complicates the reviewing process.

In recent years, deep learning methods have been applied to PCa segmentation and classification because of their accuracy and efficiency3,4,5. Unlike traditional methods, deep learning-based methods can implicitly learn multi-parametric information (T2w, ADC, etc.). However, the network architectures adopted so far are generally designed for either segmentation or classification alone, ignoring the potential benefits of performing both tasks jointly6. This work aims to design a mutual communicated deep learning network architecture that jointly performs segmentation based on pixel-level information and classification based on image-level information. The results show that the proposed network can effectively transfer mutual information between the segmentation and classification components, so that the components improve each other in a bootstrapping way.

METHODS

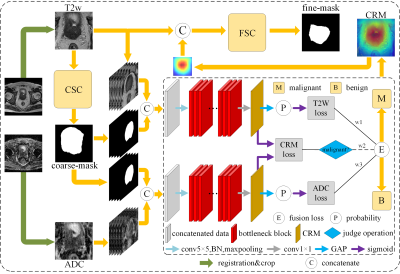

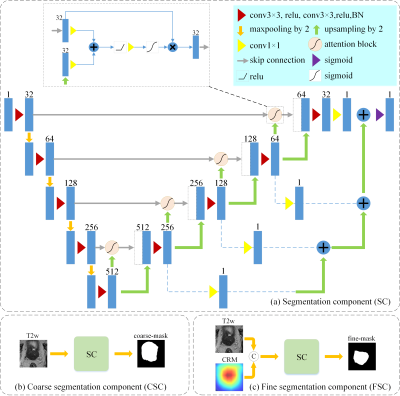

The architecture of the new network (MC-DSCN) is shown in Figure 1. It contains three components:

1) Coarse segmentation component. It is based on a residual U-net with an attention block, shown in Figure 2. Its main function is to generate coarse prostate masks that provide preliminary information about the prostate location to the classification component. The loss function of this component consists of the dice loss and the online rank loss6. The dice loss measures the degree of agreement between the prediction and the ground truth. The online rank loss pays more attention to pixels with larger prediction errors and thus helps the network learn more discriminative information.

2) Classification component. The input of this component contains the T2w images, the ADC maps, and the coarse prostate masks. The coarse prostate masks generated by the coarse segmentation component boost the classification network's lesion localization and discrimination ability. To exploit the feature maps from both T2w and ADC, we propose the hybrid loss function $E = \omega_1\,\gamma(P_{T2w}, y) + \omega_2\,\gamma(P_{ADC}, y) + \varepsilon(y)\,\omega_3\,\gamma(M_{T2w}, M_{ADC})$. The first two terms are the classification losses (cross-entropy error) for T2w and ADC, respectively. The third term is the normalized inconsistency loss, which measures the difference between the cancer response maps (CRMs) computed from malignant T2w images and malignant ADC maps. The CRM is obtained from the last convolutional layer (1×1, shown by the gray arrow in Figure 1); it is a single feature map in which each pixel value indicates the likelihood that the position is cancerous. $\varepsilon(y)$ is the step function, and $\omega_1$, $\omega_2$, $\omega_3$ are hyperparameters.

3) Fine segmentation component. The architecture of this component is the same as that of the coarse segmentation component. The T2w images are concatenated with the location maps and used as input, so that the multi-parametric location information is included. The output is the fine-mask image.
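The loss terms described for the components above can be sketched in plain Python on flattened pixel lists. This is only an illustration under stated assumptions, not the authors' implementation: labels are taken as binary (y = 1 for malignant), the step function is read as 1 only when y = 1, the weight on the third term is read as ω3, the inconsistency term is assumed to be a mean squared difference between min-max normalized CRMs, and all weights default to 1. The helper `hardest_pixels` only illustrates selecting the pixels with the largest prediction errors; it is not the online rank loss of the cited work. All function names are hypothetical.

```python
import math


def dice_loss(pred, target, eps=1e-6):
    """Soft Dice loss for a flattened probability map and a binary mask."""
    intersection = sum(p * t for p, t in zip(pred, target))
    denom = sum(pred) + sum(target)
    return 1.0 - (2.0 * intersection + eps) / (denom + eps)


def hardest_pixels(pred, target, k):
    """Indices of the k pixels with the largest absolute prediction error."""
    errors = [abs(p - t) for p, t in zip(pred, target)]
    return sorted(range(len(errors)), key=lambda i: errors[i], reverse=True)[:k]


def binary_cross_entropy(p, y, eps=1e-7):
    """Cross-entropy between a predicted probability p and a label y in {0, 1}."""
    p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
    return -(y * math.log(p) + (1 - y) * math.log(1.0 - p))


def min_max_normalize(values):
    """Rescale values to [0, 1]; a constant map becomes all zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def inconsistency(crm_t2w, crm_adc):
    """Assumed form: mean squared difference of normalized response maps."""
    a = min_max_normalize(crm_t2w)
    b = min_max_normalize(crm_adc)
    return sum((x - z) ** 2 for x, z in zip(a, b)) / len(a)


def hybrid_loss(p_t2w, p_adc, y, crm_t2w, crm_adc, w1=1.0, w2=1.0, w3=1.0):
    """E = w1*CE(P_T2w, y) + w2*CE(P_ADC, y) + step(y)*w3*inconsistency."""
    step = 1.0 if y == 1 else 0.0  # assumed: CRMs compared for malignant images only
    return (w1 * binary_cross_entropy(p_t2w, y)
            + w2 * binary_cross_entropy(p_adc, y)
            + step * w3 * inconsistency(crm_t2w, crm_adc))


# Usage with made-up values
pred = [0.9, 0.8, 0.3, 0.1]
mask = [1, 1, 1, 0]
print(dice_loss(pred, mask), hardest_pixels(pred, mask, 2))
print(hybrid_loss(0.7, 0.6, 1, [0.1, 0.9, 0.4], [0.2, 0.8, 0.5]))
```

With this reading, a benign image contributes only the two cross-entropy terms, while a malignant image is additionally penalized when its T2w and ADC response maps disagree.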

We conducted experimental evaluations and comparisons on a dataset of 63 patients, with prostate biopsy as the reference standard. All 63 patients were scanned on a Siemens scanner; 21 had prostate cancer and 42 were benign (1490 images in total). Five malignant and five benign patients (220 images in total) were used for testing, and the rest were used for training and validation. The training data were also augmented using a non-rigid image deformation method (4364 images in total). Segmentation performance was evaluated with four quantitative metrics: intersection over union (IOU), dice similarity coefficient (DICE), recall, and precision. Classification performance was evaluated with the ROC curve.
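For reference, the four segmentation metrics and the area under the ROC curve can be computed as in the sketch below. It uses the standard definitions on flattened binary masks and per-image scores; the function names, the zero-denominator convention, and the sample values are assumptions made for illustration and do not come from the study.

```python
def segmentation_metrics(pred, truth):
    """IoU, Dice, recall, and precision for flattened binary masks (0/1)."""
    tp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 1)
    fp = sum(1 for p, t in zip(pred, truth) if p == 1 and t == 0)
    fn = sum(1 for p, t in zip(pred, truth) if p == 0 and t == 1)

    def ratio(num, den):
        # Assumed convention: return 0.0 when the denominator is empty.
        return num / den if den else 0.0

    return {
        "iou": ratio(tp, tp + fp + fn),
        "dice": ratio(2 * tp, 2 * tp + fp + fn),
        "recall": ratio(tp, tp + fn),
        "precision": ratio(tp, tp + fp),
    }


def roc_auc(scores, labels):
    """AUC as the probability that a positive case outscores a negative one."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative case")
    # Ties between a positive and a negative score count as half a win.
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Usage with made-up values
m = segmentation_metrics([1, 1, 0, 0, 1], [1, 0, 0, 1, 1])
print(m["iou"], m["dice"], m["recall"], m["precision"])
print(roc_auc([0.9, 0.4, 0.6, 0.2], [1, 1, 0, 0]))
```

The pairwise form of the AUC is equivalent to the area under the empirical ROC curve and avoids choosing thresholds explicitly, which keeps the example short.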

RESULTS

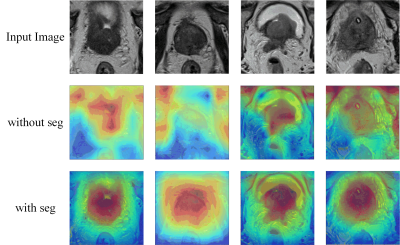

Figure 3 compares the location maps obtained by the classification component with and without the coarse-mask. Visual assessment shows that the mask-guided method characterizes lesions better than the unguided method. Figure 4 shows the segmentation results of the coarse segmentation component and the fine segmentation component. It indicates that the fine segmentation component, which is guided by the location maps, achieves better performance. Together, Figures 3 and 4 demonstrate that the proposed network can effectively transfer mutual information between the segmentation and classification components.

Figure 5(a-d) lists the results of the ablation experiment, in which we investigate the effects of the attention block, the residual structure, and the fusion loss. Figure 5(e) compares the ROC curves obtained with ADC, T2w, or multi-parametric MRI data. The AUC for multi-parametric MRI is the best. Moreover, Figure 5(f) shows that the segmentation and judge operation further improves performance.

DISCUSSION & CONCLUSION

We presented a new mutual communicated deep learning network architecture (MC-DSCN) for prostate segmentation and classification based on mp-MRI. The network learns both T2w and ADC image features effectively by performing the segmentation and classification tasks jointly, so that the two tasks guide and improve each other. Owing to its efficiency and quality, the proposed network has potential application value for prostate segmentation, classification, and simple lesion detection in clinical studies.

Acknowledgements

We gratefully acknowledge financial support from the National Major Scientific Research Equipment Development Project of China (81627901), the National Key R&D Program of China (Grants 2018YFC0115000, 2016YFC1304702), the National Natural Science Foundation of China (11575287, 11705274), and the Chinese Academy of Sciences (YZ201677).

References

- Peng Y, Jiang Y, Yang C, et al. Quantitative analysis of multi-parametric prostate MR images: differentiation between prostate cancer and normal tissue and correlation with Gleason score--a computer-aided diagnosis development study. Radiology, 2013, 267(3): 787-796.

- Weinreb J C, Barentsz J O, Choyke P L, et al. PI-RADS prostate imaging–reporting and data system: 2015, version 2. European urology, 2016, 69(1): 16-40.

- Yang X, Liu C, Wang Z, et al. Co-trained convolutional neural networks for automated detection of prostate cancer in multi-parametric MRI. Medical image analysis, 2017, 42: 212-227.

- Wang Z, Liu C, Cheng D, et al. Automated detection of clinically significant prostate cancer in mp-MRI images based on an end-to-end deep neural network. IEEE Transactions on Medical Imaging, 2018, 37(5): 1127-1139.

- Qin X, Zhu Y, Wang W, et al. 3D multi-scale discriminative network with multi-directional edge loss for prostate zonal segmentation in bi-parametric MR images. Neurocomputing, 2020, 418: 148-161.

- Xie Y, Zhang J, Xia Y, et al. A mutual bootstrapping model for automated skin lesion segmentation and classification. IEEE Transactions on Medical Imaging, 2020, 39(7): 2482-2493.

Figures

Figure 1. The overall architecture of the proposed MC-DSCN network for prostate segmentation and classification. The coarse segmentation component (CSC) is constructed to generate coarse masks. The classification component extracts and fuses multi-parametric feature information and produces the lesion localization maps (CRM). The T2w images are concatenated with the CRM, then fed into the fine segmentation component (FSC) to generate the fine-mask.

Figure 2. (a) The architecture of the segmentation component based on a residual U-net with an attention block. (b) The input and output of the coarse segmentation component. (c) The input and output of the fine segmentation component.

Figure 3. Comparison of the location maps obtained by the classification component with or without the coarse-mask. First row: input images. Second row: the location maps obtained without the coarse-mask. Third row: the location maps obtained when utilizing the coarse-mask.

Figure 4. Segmentation results of the proposed network on the T2w images by the coarse segmentation component and the fine segmentation component. The columns from left to right show input images, ground truth images, coarse-masks by the coarse segmentation component, and fine-masks by the fine segmentation component, respectively.

Figure 5. (a-d) Comparison of different segmentation networks, including Unet, Res-Unet, the coarse segmentation network (CSC), and the fine segmentation network (FSC). The loss is indicated in brackets: either the dice loss or the fusion loss (dice loss and online rank loss). Both CSC and FSC are based on a residual U-net with an attention block. (e-f) Comparison of the PCa classification ROC curves for different inputs. (e) The inputs include only T2w, T2w with coarse-mask, only ADC, and ADC with coarse-mask. (f) The inputs include ADC with coarse-mask and multi-parametric images with coarse-mask.