3977

Generalization of deep learning-based QSM by expanding the diversity of spatial gradient in training data

1Department of Electrical and Computer Engineering, Seoul National University, Seoul, Korea, Republic of, 2The University of Queensland, Brisbane, Australia, 3Biomedical Engineering, Hankuk University of Foreign Studies, Yongin, Korea, Republic of

Synopsis

In this work, the effect of spatial gradients in the training data on deep learning-based QSM is explored. We observe that deep learning-based QSM underestimates the susceptibility values when spatial gradients differ between training and test data. For demonstration, three types of networks were trained using training images with different spatial gradients and evaluated on test data with varying spatial gradients. The results indicate that expanding the spatial gradient distribution of training data improves the performance of deep learning-based QSM. Furthermore, we demonstrate that augmenting spatial gradients may help deep learning-based QSM work across various image resolutions.

Introduction

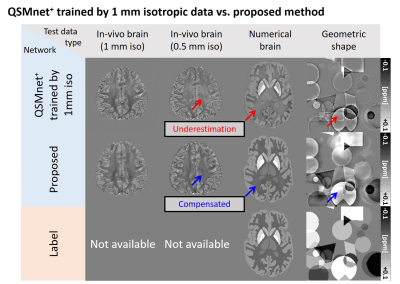

Recently, deep neural networks have shown great potential in quantitative susceptibility mapping (QSM) [1-4]. Although a few studies have shown that deep learning-based QSM outperforms conventional algorithms, generalization of the network to test data whose characteristics differ from those of the training data is limited [5,6]. In particular, a recent deep learning-based QSM method (QSMnet+) shows unexpected underestimation patterns in a few image types (Figure 1). To understand the source of this underestimation, we explore the effects of the spatial gradient of training images in deep learning-based QSM. The spatial gradient is a metric for the spatial variation of images [7,8]. The experiment was performed by comparing networks trained on susceptibility maps with different spatial gradients.

Methods

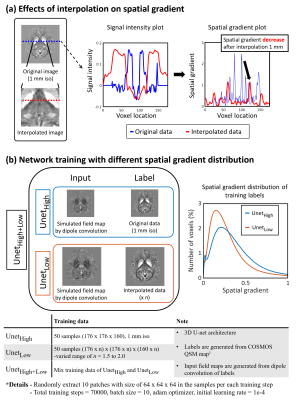

[Network training with different spatial gradient]

To explore the spatial gradient of the susceptibility maps as one factor for generalization of the network, the spatial gradient map is calculated using a 3D Sobel filter [7], which measures the numerical gradient of an image, as follows:

$$g_{i}=s_{i}*\chi \quad \text{where } i = x,\ y,\ \text{and } z \qquad [\text{Eq. 1}]$$

$$G=\sqrt{g_{x}^2+g_{y}^2+g_{z}^2} \qquad [\text{Eq. 2}]$$

where $g_{i}$ is the spatial gradient map along dimension $i$, $s_{i}$ is the Sobel filter along dimension $i$, $*$ is the convolution operator, $\chi$ is the susceptibility map, and $G$ is the total spatial gradient map.
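As an illustration of Eq. 1 and Eq. 2, the following is a minimal NumPy sketch, not the authors' implementation. It assumes an unnormalized, separable 3D Sobel kernel (a central difference along one axis and [1, 2, 1] smoothing along the other two) with edge-replicated borders. The function names `sobel_axis` and `total_gradient` are hypothetical.

```python
import numpy as np


def sobel_axis(chi, axis):
    """Sobel-filtered volume g_i along `axis` (Eq. 1), unnormalized.

    Assumption: separable 3D Sobel kernel with edge-replicated borders.
    The sign follows the correlation convention (forward minus backward
    neighbour); it does not affect G in Eq. 2.
    """
    out = np.asarray(chi, dtype=np.float64)
    for ax in range(3):
        pad = [(1, 1) if a == ax else (0, 0) for a in range(3)]
        p = np.pad(out, pad, mode="edge")
        n = p.shape[ax]
        lo = np.take(p, np.arange(0, n - 2), axis=ax)   # previous neighbour
        mid = np.take(p, np.arange(1, n - 1), axis=ax)  # centre voxel
        hi = np.take(p, np.arange(2, n), axis=ax)       # next neighbour
        if ax == axis:
            out = hi - lo              # derivative part: [-1, 0, 1]
        else:
            out = lo + 2.0 * mid + hi  # smoothing part: [1, 2, 1]
    return out


def total_gradient(chi):
    """Total spatial gradient map G (Eq. 2) of a 3D susceptibility map."""
    gx, gy, gz = (sobel_axis(chi, i) for i in range(3))
    return np.sqrt(gx**2 + gy**2 + gz**2)
```

A histogram of `total_gradient(chi)` over the brain voxels, for example with `np.histogram`, would then give a spatial gradient distribution of the kind compared between training and test data.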

For demonstration, we trained two types of networks with different spatial gradient distributions: UnetHigh and UnetLow. Additionally, we trained UnetHigh+Low by combining the training data of UnetHigh and UnetLow to evaluate the performance of data augmentation. All networks were trained with susceptibility maps as labels and the dipole convolution of these labels as inputs. For UnetHigh, the susceptibility maps for labels were taken from existing 1mm-isotropic COSMOS-QSM maps (QSMnet dataset) [1]. To generate low spatial gradient training data for UnetLow, sinc-interpolation was applied to the COSMOS-QSM maps. The effects of interpolation on spatial gradients are demonstrated in Figure 2a. All training details are summarized in Figure 2b. For each network, the histogram of the spatial gradients of its training data was estimated (Figure 2b).
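The two data-generation steps described above can be sketched as follows. This is a hedged illustration under stated assumptions, not the authors' pipeline: B0 is assumed to lie along the z-axis, the dipole kernel is the standard k-space form 1/3 - kz²/|k|², sinc-interpolation is implemented as zero-padding of k-space, and the upsampling factor of 2 is only an example. The function names are hypothetical.

```python
import numpy as np


def dipole_kernel(shape, voxel_size=(1.0, 1.0, 1.0)):
    """k-space dipole kernel D = 1/3 - kz^2/|k|^2 (assumes B0 along z)."""
    freqs = [np.fft.fftfreq(n, d=d) for n, d in zip(shape, voxel_size)]
    kx, ky, kz = np.meshgrid(*freqs, indexing="ij")
    k2 = kx**2 + ky**2 + kz**2
    k2[0, 0, 0] = 1.0            # avoid 0/0 at the k-space origin
    kernel = 1.0 / 3.0 - kz**2 / k2
    kernel[0, 0, 0] = 0.0        # common convention: zero the DC term
    return kernel


def dipole_convolve(chi, voxel_size=(1.0, 1.0, 1.0)):
    """Network input: susceptibility label convolved with the dipole."""
    kernel = dipole_kernel(chi.shape, voxel_size)
    return np.real(np.fft.ifftn(np.fft.fftn(chi) * kernel))


def sinc_interpolate(chi, factor=2):
    """Upsample a 3D map by zero-padding its centred k-space.

    The image content is unchanged but sampled on a finer grid, so
    voxel-to-voxel differences (spatial gradients) become smaller.
    """
    old = np.array(chi.shape)
    new = old * factor
    k = np.fft.fftshift(np.fft.fftn(chi))
    before = new // 2 - old // 2          # keeps the DC sample centred
    after = new - old - before
    pad = [(int(b), int(a)) for b, a in zip(before, after)]
    padded = np.pad(k, pad, mode="constant")
    scale = new.prod() / old.prod()       # preserve susceptibility values
    return np.real(np.fft.ifftn(np.fft.ifftshift(padded))) * scale
```

Under these assumptions, a low spatial gradient training pair would be `label = sinc_interpolate(cosmos_map)` and `field = dipole_convolve(label, voxel_size=(0.5, 0.5, 0.5))`, where `cosmos_map` stands for a 1mm-isotropic COSMOS-QSM volume.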

[Evaluation]

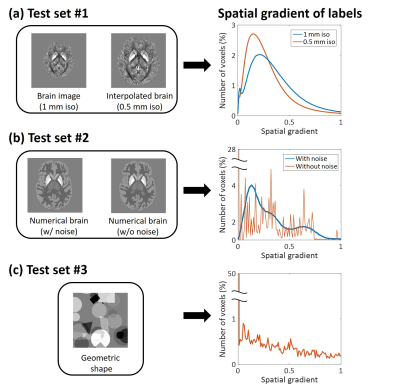

To evaluate the effects of spatial gradients on network performance, the three networks were applied to three different types of test data: brain images, a numerical brain [9], and geometric shapes. First, the brain image dataset was generated from the QSMnet test dataset. This test dataset was generated at two different resolutions (0.5/1mm isotropic) in the same way as the training dataset (i.e., sinc-interpolation). The second dataset was generated from the numerical brain. To test the networks on high spatial gradient images, an additional numerical brain was generated by adding Gaussian noise. Lastly, to test the networks on low spatial gradient images, geometric shapes were generated. All test data are summarized in Figure 3, including the histograms of the spatial gradients of the susceptibility maps. For all reconstructions, the NRMSE with respect to the ground truth was estimated.
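The abstract does not state the exact NRMSE definition. The sketch below assumes one commonly used form in QSM evaluation, the L2 norm of the error divided by the L2 norm of the ground truth, expressed in percent. The function name `nrmse` and the optional `mask` argument are hypothetical.

```python
import numpy as np


def nrmse(recon, truth, mask=None):
    """NRMSE in percent: 100 * ||recon - truth|| / ||truth||.

    Assumption: this normalization is one common convention; the
    abstract does not specify which definition was used.
    """
    recon = np.asarray(recon, dtype=np.float64)
    truth = np.asarray(truth, dtype=np.float64)
    if mask is None:
        mask = np.ones(truth.shape, dtype=bool)
    diff = recon[mask] - truth[mask]
    return 100.0 * np.linalg.norm(diff) / np.linalg.norm(truth[mask])
```

With this definition, a reconstruction that uniformly underestimates the ground truth by 10% yields an NRMSE of 10%, which makes the metric directly sensitive to the underestimation studied here.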

Additionally, to test the networks on high-resolution brain images without interpolation, in-vivo brain images with 0.5mm-isotropic resolution were acquired at 7T using a multi-echo gradient-echo sequence with the following parameters: TE=4.7:5.2:25.5ms/TR=36ms and matrix size=384x384x160. An ROI analysis was performed in low spatial gradient regions, which were manually segmented.
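The ROI analysis amounts to computing summary statistics of the reconstructed susceptibility inside a binary mask. A minimal sketch, assuming the manually segmented ROI is available as a boolean volume of the same shape as the QSM map (the function name `roi_stats` is hypothetical):

```python
import numpy as np


def roi_stats(qsm, roi_mask):
    """Mean and standard deviation of susceptibility inside an ROI.

    Assumption: `roi_mask` is a boolean array with the same shape as
    `qsm`, e.g. a manually segmented low spatial gradient region.
    """
    values = np.asarray(qsm, dtype=np.float64)[np.asarray(roi_mask, dtype=bool)]
    return float(values.mean()), float(values.std())
```

Calling `roi_stats` on each network's reconstruction with the same mask gives the per-network mean and standard deviation needed for a comparison across networks.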

Results

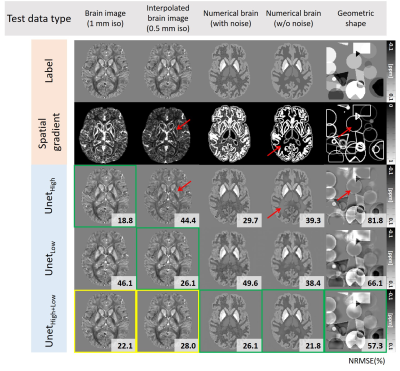

Figure 4 shows the QSM maps of the five inputs (brain images with 0.5mm and 1mm resolution, numerical brain with/without noise, and geometric shapes) reconstructed by the three networks (UnetHigh, UnetLow, and UnetHigh+Low). The first two rows show the labels and the corresponding spatial gradient maps. The best performance is highlighted by green boxes, with the NRMSE in the right corner. As highlighted by red arrows, UnetHigh shows underestimation in the low spatial gradient regions (0.5mm brain, numerical brain without noise, and geometric shapes), similar to Figure 1. This underestimation by UnetHigh is reduced in the 1mm brain and the numerical brain with noise, which have higher spatial gradients. On the other hand, when the network was trained with low spatial gradients (UnetLow), the underestimation in low spatial gradient regions (0.5mm brain, numerical brain without noise, and geometric shapes) is reduced, as demonstrated by lower NRMSEs than those of UnetHigh. These outcomes suggest that network performance is degraded when the spatial gradient distribution of the training data differs from that of the test data. Furthermore, data augmentation using the interpolated data (UnetHigh+Low) demonstrates improved performance for all inputs, as shown in the last row (yellow and green boxes), confirming the validity of the data augmentation approach for network generalization.

Figure 5a shows the QSM maps of the 0.5mm in-vivo brain reconstructed by UnetHigh, UnetLow, and UnetHigh+Low. The results are compared with a QSMnet+ reconstruction at 1mm resolution as a reference. As highlighted by the red box, UnetHigh shows considerable underestimation compared to the other networks. The ROI analysis in Figure 5b, which reports the mean and standard deviation of susceptibility values in the ROIs, demonstrates this underestimation. The results suggest that the spatial gradient is an important consideration for generalizing deep learning-based QSM to various resolutions.

Discussion and Conclusion

In this study, we demonstrate that the performance of deep learning-based QSM degrades when the spatial gradients of the training data differ from those of the test data. In particular, underestimation is consistently observed when the test data have lower spatial gradients than the training data. Using training data with augmented spatial gradient distributions may improve deep learning-based QSM performance across a wide range of resolutions.

Acknowledgements

This research was supported by the National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIT) (NRF-2019M3C7A1031994 and NRF-2018R1A2B3008445).

References

[1] Yoon, Jaeyeon, et al. "Quantitative susceptibility mapping using deep neural network: Unet." NeuroImage 179 (2018): 199-206.

[2] Bollmann, Steffen, et al. "DeepQSM-using deep learning to solve the dipole inversion for quantitative susceptibility mapping." NeuroImage 195 (2019): 373-383.

[3] Zhang, Jinwei, et al. "Fidelity imposed network edit (FINE) for solving ill-posed image reconstruction." NeuroImage 211 (2020): 116579.

[4] Gao, Yang, et al. "xQSM-Quantitative Susceptibility Mapping with Octave Convolutional Neural Networks." arXiv preprint arXiv:2004.06281 (2020).

[5] Jung, Woojin, et al. "Exploring linearity of deep neural network trained QSM: QSMnet+." NeuroImage 211 (2020): 116619.

[6] Jochmann, Thomas, Jens Haueisen, and Ferdinand Schweser. "How to train a Deep Convolutional Neural Network for Quantitative Susceptibility Mapping (QSM)." Proc Intl Soc Mag Reson Med. Vol. 28. 2020.

[7] Kanopoulos, Nick, Nagesh Vasanthavada, and Robert L. Baker. "Design of an image edge detection filter using the Sobel operator." IEEE Journal of solid-state circuits 23.2 (1988): 358-367.

[8] Liu, J., et al. "Quantitative susceptibility inversion through parcellated multiresolution neural networks and k-space substitution." arXiv preprint arXiv:1903.04961 (2019).

[9] Zubal, I. George, et al. "Computerized three‐dimensional segmented human anatomy." Medical physics 21.2 (1994): 299-302.
