3826

Low-to-High Field MR Image Quality Transfer

¹Centre for Medical Image Computing, Department of Computer Science, University College London, London, United Kingdom
²Department of Radiology, Great Ormond Street Hospital, London, United Kingdom
³Department of Radiology, College of Medicine, University of Ibadan, Ibadan, Nigeria
⁴Great Ormond Street Institute of Child Health, University College London, London, United Kingdom
⁵Department of Biomedical Engineering, King’s College London, London, United Kingdom
⁶Department of Paediatrics, College of Medicine, University of Ibadan, Ibadan, Nigeria
⁷Great Ormond Street Hospital for Children, London, United Kingdom
⁸Department of Computer Science, University College London, London, United Kingdom

Synopsis

We devise and demonstrate an image quality transfer (IQT) system that estimates, from low-field (<0.5T) structural magnetic resonance (MR) images, the corresponding high-field (1.5T or 3T) images. The intended application is to improve clinical lesion detection, classification, and decision-making in childhood epilepsy in low- and middle-income countries (LMICs), where T1w, T2w, and FLAIR sequences on 0.36T scanners remain the clinical standard. Results on synthetic and real data show substantial improvements in resolution and contrast that aid the conspicuity of pathological lesions.

Introduction

Low-field (<0.5T) permanent-magnet MR systems remain the clinical standard in low- and middle-income countries (LMICs). Compared with the high-field (typically 1.5T or 3T) standard in higher-income countries (HICs), low-field MR images have lower resolution and lower contrast, which may hinder clinical decision making. Image quality transfer (IQT) has shown great potential for enhancing the resolution of diffusion MRI using linear regression and random forests¹ or deep learning²⁻⁴. We adapt IQT to estimate, from acquired low-field structural images, the images that a high-field scanner would have provided. Preliminary work⁵,⁶ improved resolution and contrast in T1-weighted (T1w) images using deep neural networks; here we report a refined approach and extend it to T2-weighted (T2w) and fluid-attenuated inversion recovery (FLAIR) images. IQT trains on matched pairs of low- and high-quality images. Typically, IQT synthesises low-quality images that approximate the input domain by degrading high-quality examples; this avoids the misalignment problems of separately acquired images. To provide training data that support robust learning, we generate synthetic low-field images through stochastic, data-driven low-field image simulation followed by histogram normalisation.

Method

Figure 1 shows the IQT components.

Data Sets. As high-field references for building and training IQT models, we used T1w and T2w images from the 3T WU-Minn HCP dataset⁷ and FLAIR images from the 3T LEMON dataset⁸. The test set comprised low-field T1w, T2w, and FLAIR images from 12 subjects, provided by an LMIC clinical partner hospital.

Low-Field MR Simulation. We implemented a probabilistic decimation simulator that generates synthetic low-field images from high-field reference images through stochastic contrast change, down-sampling, smoothing, and the addition of Gaussian random noise. We estimated the mean and standard deviation of the signal-to-noise ratio (SNR) in grey matter (GM) and white matter (WM) from thirty 0.36T images independent of our 12-subject test set. For each synthetic image, we sampled GM and WM SNRs from the corresponding Gaussian distributions and used the probabilistic GM and WM masks from SPM⁹ to assign an intensity to each voxel of a down-sampled reference, yielding the synthetic low-field image.
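A minimal sketch of such a simulator follows. It is not the authors' implementation: it assumes NumPy arrays, uses block averaging along the slice axis as a combined smoothing and down-sampling step, keeps the reference WM mean fixed, derives a single noise level from the sampled WM SNR, and rescales GM so that its mean matches the sampled GM SNR. The function names and the `factor` parameter are hypothetical.

```python
import numpy as np

def downsample(vol, factor):
    """Smooth and down-sample in one step by averaging non-overlapping blocks of
    `factor` slices along the last axis (assumes that axis is divisible by factor)."""
    x, y, z = vol.shape
    return vol.reshape(x, y, z // factor, factor).mean(axis=-1)

def simulate_low_field(ref, p_gm, p_wm, snr_mean, snr_std, factor=4, rng=None):
    """Hypothetical sketch of a stochastic low-field simulator.

    ref: 3D high-field reference; p_gm, p_wm: GM/WM probability maps (same shape).
    snr_mean, snr_std: dicts with keys "gm" and "wm" estimated from real 0.36T data.
    """
    rng = np.random.default_rng() if rng is None else rng
    # 1. Sample this image's GM and WM SNRs from the fitted Gaussians.
    snr = {t: rng.normal(snr_mean[t], snr_std[t]) for t in ("gm", "wm")}
    # 2. Down-sample the reference and the probability maps.
    ref_lr, gm, wm = (downsample(v, factor) for v in (ref, p_gm, p_wm))
    # 3. Probability-weighted mean intensity of each tissue in the reference.
    mean_gm = (ref_lr * gm).sum() / gm.sum()
    mean_wm = (ref_lr * wm).sum() / wm.sum()
    # 4. Keep the WM mean, set the noise level from the WM SNR, and rescale GM
    #    so that its mean matches the sampled GM SNR (the contrast change).
    sigma = mean_wm / snr["wm"]
    gm_gain = snr["gm"] * sigma / mean_gm
    gain = 1.0 + gm * (gm_gain - 1.0)  # voxel-wise, weighted by GM probability
    # 5. Apply the contrast change and add Gaussian noise.
    return ref_lr * gain + rng.normal(0.0, sigma, size=ref_lr.shape)
```

Because both the noise level and the GM gain depend on the sampled SNRs, each call yields a different contrast and noise realisation, which is what makes the training data stochastic.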

Histogram Normalisation. In practice, the simulated low-field images may still deviate in intensity distribution from real LMIC low-field images. A subsequent histogram normalisation step therefore further aligns the intensity range of each image pair. We follow Nyúl's algorithm¹⁰: we first learn average histogram percentile landmarks from a set of synthetic images, and then map the histograms of all synthetic and real images onto these landmarks via a piecewise linear transformation.
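The landmark-learning and mapping steps can be sketched with NumPy as below. The landmark percentiles (1st, deciles, 99th), the standard scale [0, 100], and all function names are illustrative assumptions, not the settings used in this work; in practice the landmarks would be computed over foreground voxels only.

```python
import numpy as np

# Assumed landmark percentiles: 1st and 99th as end points, deciles in between.
PCTS = np.array([1, 10, 20, 30, 40, 50, 60, 70, 80, 90, 99])

def landmarks(img):
    # In practice, pass only foreground (brain) voxels to avoid a spike of zeros.
    return np.percentile(np.ravel(img), PCTS)

def learn_standard_landmarks(images, s_min=0.0, s_max=100.0):
    """Map each training image's 1st/99th-percentile landmarks linearly onto
    [s_min, s_max], then average the mapped landmarks over all images."""
    mapped = []
    for img in images:
        lm = landmarks(img)
        mapped.append(s_min + (lm - lm[0]) * (s_max - s_min) / (lm[-1] - lm[0]))
    return np.mean(mapped, axis=0)

def normalise(img, std_lm):
    """Piecewise-linear mapping of this image's landmarks onto the standard ones."""
    return np.interp(img, landmarks(img), std_lm)
```

`np.interp` clamps intensities outside the outermost landmarks to the ends of the standard scale; a fuller implementation would extrapolate the first and last linear segments instead.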

IQT Net Architecture. We used the anisotropic U-Net described in Lin et al.⁵ to learn a patch-to-patch mapping. The network combines two common modules that allow the architecture to be deepened flexibly: the bottleneck block inspired by FSRCNN¹¹ and the residual block¹². We implemented the IQT Net in TensorFlow 2.0, using the Adam optimiser¹³, Glorot normal initialisation¹⁴, and the mean squared error (MSE) loss. We trained for 100 epochs with a batch size of 32, uniformly sampling up to 1750 non-background 3D patches of 32×32×32 voxels from each subject.
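Patch extraction might look like the sketch below. It assumes that the low-field input has already been resampled onto the high-field voxel grid and treats a patch as non-background when at least half of its high-field voxels are non-zero; both are hypothetical choices, as are the function and parameter names.

```python
import numpy as np

def sample_patches(lf, hf, n_max=1750, size=32, min_fg=0.5, max_tries=100_000, rng=None):
    """Uniformly sample up to n_max paired non-background patches of size^3 voxels.

    Assumes lf and hf share one voxel grid; a patch is kept if at least min_fg
    of its high-field voxels are non-zero (hypothetical background criterion).
    """
    rng = np.random.default_rng() if rng is None else rng
    hi = [d - size + 1 for d in hf.shape]  # exclusive upper bound per corner index
    xs, ys = [], []
    for _ in range(max_tries):
        if len(xs) == n_max:
            break
        # Draw the patch corner uniformly over all valid positions.
        i, j, k = (rng.integers(0, h) for h in hi)
        sl = (slice(i, i + size), slice(j, j + size), slice(k, k + size))
        if np.count_nonzero(hf[sl]) >= min_fg * size ** 3:
            xs.append(lf[sl])
            ys.append(hf[sl])
    # Add a trailing channel axis, the layout expected by 3D convolution layers.
    return np.stack(xs)[..., None], np.stack(ys)[..., None]
```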

Experiment Design and Results

We first verified the simulation and histogram normalisation steps through a synthetic experiment. Using held-out synthetic low-field images as a test set, we evaluated how well the trained system recovers the high-field T1w reference images after training on each of three training sets:

(i) ORACLE: synthetic data simulated using only the sample-mean GM and WM SNRs.

(ii) BIAS: synthetic data in which each contrast mapping is deliberately biased away from the sample mean.

(iii) BIAS+NORM: as (ii), but with the bias corrected by the histogram normalisation described above.
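The three training sets differ only in which GM/WM SNRs drive the simulation and whether histogram normalisation is applied. The sketch below encodes them as a small configuration, assuming for illustration that the bias is a fixed multiplicative offset per tissue; the abstract does not specify the form or size of the bias, so `gm_bias`, `wm_bias`, and their values are hypothetical. Biasing GM and WM in opposite directions changes the GM/WM contrast rather than only the noise level.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Strategy:
    """One training configuration (hypothetical encoding of the three strategies)."""
    name: str
    gm_bias: float   # multiplicative bias on the sample-mean GM SNR (1.0 = unbiased)
    wm_bias: float   # multiplicative bias on the sample-mean WM SNR (1.0 = unbiased)
    normalise: bool  # apply histogram normalisation to training and test images

# The bias factors 1.2 and 0.8 are arbitrary illustrative values.
STRATEGIES = [
    Strategy("ORACLE", 1.0, 1.0, normalise=False),
    Strategy("BIAS", 1.2, 0.8, normalise=False),
    Strategy("BIAS+NORM", 1.2, 0.8, normalise=True),
]

def tissue_snrs(strategy, snr_mean):
    """Fixed GM/WM SNRs used to simulate every training image under `strategy`."""
    return {"gm": strategy.gm_bias * snr_mean["gm"],
            "wm": strategy.wm_bias * snr_mean["wm"]}
```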

Figure 2 plots the peak signal-to-noise ratio (PSNR) for each of the three training strategies. ORACLE results provide a gold-standard upper bound on attainable performance. While the BIAS strategy fails, BIAS+NORM approximates ORACLE reasonably well. Performance also begins to plateau as the training set size approaches 60 images. In Figure 3, BIAS+NORM shows better contrast than BIAS, and its quality is comparable to both the ORACLE result and the high-field reference.
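For reference, PSNR compares an estimate with its high-field reference through the mean squared error, PSNR = 10 log10(peak² / MSE). The sketch below assumes that the peak is the intensity range of the reference; the abstract does not state which peak value was used, and the function name is illustrative.

```python
import numpy as np

def psnr(reference, estimate, data_range=None):
    """Peak signal-to-noise ratio in dB: 10 * log10(peak^2 / MSE).

    peak defaults to the reference's intensity range (an assumption).
    """
    ref = np.asarray(reference, dtype=float)
    est = np.asarray(estimate, dtype=float)
    peak = ref.max() - ref.min() if data_range is None else data_range
    mse = np.mean((ref - est) ** 2)
    # Identical images have zero error and therefore infinite PSNR.
    return float("inf") if mse == 0 else float(10.0 * np.log10(peak ** 2 / mse))
```

For example, comparing a reference [0, 1] with an estimate [0, 0.9] gives an MSE of 0.005 and a PSNR of 10·log10(200) ≈ 23.0 dB.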

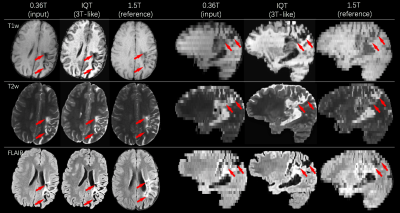

Figures 4 and 5 show results on real LMIC images. The IQT-enhanced images of both normal and pathological brains show better contrast and finer detail than the 1.5T reference images of the same subjects. Experienced radiologists rated the patient images in Figure 5 on a scale from 1 (poor) to 4 (excellent) for WM-GM differentiation and lesion visibility. For these images (T1w, T2w, and FLAIR), the average 0.36T/IQT/1.5T scores were 2.67/3.33/2.67 for GM-WM differentiation and 2.67/3/2.67 for lesion visibility.

Discussion

We demonstrate IQT for multiple low-field structural image contrasts. Experiments show that histogram normalisation of both the synthetic low-field images and the test images improves performance. Smaller training sets introduce tiling artefacts and false contrast into the reconstructed images. On our currently modest test set, these artefacts disappear once the training set reaches 60 images, but this needs to be confirmed on larger test sets. The output has received preliminary qualitative approval from neuroradiologists, which is promising for future adoption of this technology. This motivates future clinical trials and field studies to evaluate its impact on clinical decision making in a representative LMIC, and the development of interfaces that exploit its benefits while mitigating potential misinterpretation of processing artefacts.

Acknowledgements

This work was supported by EPSRC grants (EP/R014019/1, EP/R006032/1 and EP/M020533/1) and the NIHR UCLH Biomedical Research Centre. Data were provided in part by the Human Connectome Project, WU-Minn Consortium (Principal Investigators: David Van Essen and Kamil Ugurbil; 1U54MH091657) funded by NIH and Washington University. Special thanks to Ryutaro Tanno at UCL/Microsoft Research Cambridge, Stefano Blumberg, and Enrico Kaden at UCL for meaningful discussion and valuable feedback.

References

1. Alexander DC, Zikic D, Ghosh A, Tanno R, Wottschel V, Zhang J, Kaden E, Dyrby TB, Sotiropoulos SN, Zhang H, Criminisi A. Image quality transfer and applications in diffusion MRI. NeuroImage. 2017;152:283-98.

2. Tanno R, Worrall DE, Ghosh A, Kaden E, Sotiropoulos SN, Criminisi A, Alexander DC. Bayesian image quality transfer with CNNs: exploring uncertainty in dMRI super-resolution. In MICCAI 2017; pp. 611-619. Springer, Cham.

3. Blumberg SB, Tanno R, Kokkinos I, Alexander DC. Deeper image quality transfer: Training low-memory neural networks for 3D images. In MICCAI 2018; pp. 118-125. Springer, Cham.

4. Tanno R, Worrall DE, Kaden E, Ghosh A, Grussu F, Bizzi A, Sotiropoulos SN, Criminisi A, Alexander DC. Uncertainty modelling in deep learning for safer neuroimage enhancement: Demonstration in diffusion MRI. NeuroImage. 2020;225:117366.

5. Lin H, Figini M, Tanno R, Blumberg SB, Kaden E, Ogbole G, Brown BJ, D’Arco F, Carmichael DW, Lagunju I, Cross HJ, Fernandez-Reyes D, Alexander DC. Deep learning for low-field to high-field MR: Image quality transfer with probabilistic decimation simulator. In MLMIR 2019; pp. 58-70. Springer, Cham.

6. Figini M, Lin H, Ogbole G, D’Arco F, Blumberg SB, Carmichael DW, Tanno R, Kaden E, Brown BJ, Lagunju I, Cross HJ, Fernandez-Reyes D, Alexander DC. Image Quality Transfer Enhances Contrast and Resolution of Low-Field Brain MRI in African Paediatric Epilepsy Patients. 2020; arXiv preprint arXiv:2003.07216.

7. Sotiropoulos SN, Jbabdi S, Xu J, Andersson JL, Moeller S, Auerbach EJ, Glasser MF, Hernandez M, Sapiro G, Jenkinson M, Feinberg DA. Advances in diffusion MRI acquisition and processing in the Human Connectome Project. NeuroImage. 2013;80:125-43.

8. Babayan A, Erbey M, Kumral D, Reinelt JD, Reiter AM, Röbbig J, Schaare HL, Uhlig M, Anwander A, Bazin PL, Horstmann A. A mind-brain-body dataset of MRI, EEG, cognition, emotion, and peripheral physiology in young and old adults. Scientific Data. 2019;6:180308.

9. Penny WD, Friston KJ, Ashburner JT, Kiebel SJ, Nichols TE. Statistical parametric mapping: the analysis of functional brain images. Elsevier; 2011.

10. Nyúl LG, Udupa JK, Zhang X. New variants of a method of MRI scale standardization. IEEE Transactions on Medical Imaging. 2000;19(2):143-50.

11. Dong C, Loy CC, Tang X. Accelerating the super-resolution convolutional neural network. In ECCV 2016; pp. 391-407. Springer, Cham.

12. He K, Zhang X, Ren S, Sun J. Deep residual learning for image recognition. In CVPR 2016; pp. 770-778.

13. Kingma DP, Ba J. Adam: A method for stochastic optimization. 2014; arXiv preprint arXiv:1412.6980.

14. Glorot X, Bengio Y. Understanding the difficulty of training deep feedforward neural networks. In AISTATS 2010; pp. 249-256.
