3738

Semi-Automated 3D Cochlea Subregional Segmentation on T2-Weighted MRI Scans

¹Laboratory of Neuro Imaging, USC Mark and Mary Stevens Neuroimaging and Informatics Institute, Keck School of Medicine, University of Southern California, Los Angeles, CA, United States
²Department of Otorhinolaryngology-Head and Neck Surgery, Asan Medical Center, Seoul, Republic of Korea

Synopsis

For MRI-based studies of cochlea-related diseases, segmenting the cochlea and modiolus is often essential for quantifying disease-related morphological and intensity changes. However, methods for automatic 3D segmentation of these structures on MRI scans are not well established. We aimed to develop a semi-automated, multi-atlas-based approach to segmenting the cochlea and modiolus that distinguishes between the cochlear basal and mid-apical turns. The approach showed good agreement with manual segmentation, demonstrating its potential for quantitative analyses. Moreover, the definitions of the three subregions proved reliable, as indicated by high inter-rater agreement in manual segmentation.

Introduction

Segmenting the cochlea on MRI scans is often essential for imaging-based studies of diseases involving the cochlea. For instance, imaging studies of sudden sensorineural hearing loss have been used to investigate the level of inflammation specific to the cochlea.[1] However, few such studies have used 3D segmentations of the cochlea when investigating cochlear enhancement with contrast agents such as gadolinium. This is partly because commonly used head MRI segmentation software lacks automated methods for segmenting the cochlear region. Moreover, existing efforts to automatically segment the cochlea on MRI scans do not differentiate between the cochlear basal turn, the mid-apical turns, and the modiolus. Distinguishing these subregions is important because they have distinct functional roles. To help enable large-scale imaging-based studies of cochlea-related diseases, we therefore aimed to develop a semi-automated approach for segmenting the cochlea and modiolus on T2-weighted MRI scans.

Methods

T2-weighted MRI scans including the cochlear region were acquired from 120 patients with sudden sensorineural hearing loss at the Asan Medical Center. Informed consent was obtained, and the study was approved by the ethics committee.

All scans were first preprocessed with N4 bias-field correction to reduce intensity inhomogeneity. Next, the center points of the left and right cochlear regions were manually identified. Each center point was defined as the lateral-most point of the internal auditory canal at the axial level of the horizontal semicircular canal. These points were used to extract spherical masks centered on them. The spheres, which encompass the cochlear region, were then histogram-matched to normalize intensity distributions across individual images. We then rigidly registered each cochlea sphere to a common space defined by a randomly selected individual sphere. Left and right image templates were constructed by nonlinearly registering data from 58 participants to their average image using the antsMultivariateTemplateConstruction.sh script in ANTs.[2] Each template had a voxel size of 0.35 × 0.35 × 0.6 mm³, the median voxel size of the original MRI scans. Finally, each individual cochlea sphere was affinely registered to the corresponding nonlinear template.

For automatic segmentation, five left and five right cochleae were manually segmented into the modiolus, cochlear basal turn, and cochlear mid-apical turn subregions. These segmentations served as atlases for the multi-atlas joint label fusion algorithm developed by Wang et al.[3] The cochlear basal turn was defined as the first full turn of the cochlear spiral, starting at the cochlear base. The cochlear mid-apical turn was defined as the remainder of the spiral, continuing from the end of the basal turn. The modiolus was defined as the space enclosed by the entire cochlear spiral. Automatic segmentation accuracy was evaluated by comparing the output with manual segmentations for 15 participants using the Dice overlap index.
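Three of the steps above can be sketched in NumPy: spherical ROI extraction around the manually picked center point, intensity normalization by histogram matching, and Dice evaluation. This is a minimal illustration under stated assumptions, not the authors' pipeline. N4 correction, registration, template construction, and joint label fusion are not reproduced here. The function names, the sphere radius, the quantile-mapping form of histogram matching, and the label codes are all hypothetical. Dice for one label is computed as Dice(A, B) = 2|A ∩ B| / (|A| + |B|).

```python
import numpy as np


def spherical_roi(image, center_vox, radius_mm, spacing_mm):
    """Zero out voxels farther than radius_mm from center_vox.

    center_vox: voxel index (i, j, k) of the manually picked center point.
    radius_mm: sphere radius in mm (assumed; the abstract does not state it).
    spacing_mm: voxel size per axis in mm, e.g. (0.35, 0.35, 0.6).
    Returns (masked image, boolean sphere mask).
    """
    grids = np.indices(image.shape, dtype=float)
    dist_sq = np.zeros(image.shape, dtype=float)
    for axis in range(3):
        # Physical offset from the center along this axis, in mm.
        dist_sq += ((grids[axis] - center_vox[axis]) * spacing_mm[axis]) ** 2
    mask = dist_sq <= radius_mm ** 2
    return np.where(mask, image, 0.0), mask


def match_histogram(source, reference, source_mask, reference_mask, n_quantiles=256):
    """Quantile-mapping histogram match restricted to masked voxels.

    Each source intensity is mapped piecewise-linearly so that the source
    quantiles line up with the reference quantiles; voxels outside
    source_mask are set to 0.
    """
    q = np.linspace(0.0, 1.0, n_quantiles)
    src_q = np.quantile(source[source_mask], q)
    ref_q = np.quantile(reference[reference_mask], q)
    matched = np.interp(source, src_q, ref_q)
    return np.where(source_mask, matched, 0.0)


def dice(seg_a, seg_b, label):
    """Dice overlap 2|A & B| / (|A| + |B|) for one integer label."""
    a = seg_a == label
    b = seg_b == label
    denom = a.sum() + b.sum()
    if denom == 0:
        # Label absent from both segmentations: report perfect agreement
        # (a convention; other tools may return NaN instead).
        return 1.0
    return 2.0 * np.logical_and(a, b).sum() / denom


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    spacing = (0.35, 0.35, 0.6)  # median voxel size reported in the abstract
    moving = rng.gamma(2.0, 50.0, size=(64, 64, 40))
    fixed = rng.gamma(3.0, 40.0, size=(64, 64, 40))
    center = (32, 32, 20)  # hypothetical manually picked center voxel
    roi_m, mask_m = spherical_roi(moving, center, 6.0, spacing)
    roi_f, mask_f = spherical_roi(fixed, center, 6.0, spacing)
    normalized = match_histogram(roi_m, roi_f, mask_m, mask_f)
    print("voxels in sphere:", int(mask_m.sum()))

    # Toy label maps; 1, 2, 3 are hypothetical codes for the modiolus,
    # basal turn and mid-apical turn.
    auto = rng.integers(0, 4, size=moving.shape)
    manual = auto.copy()
    manual[:, :, :5] = 0  # blank a slab to create some disagreement
    for lab in (1, 2, 3):
        print("label", lab, "Dice =", round(float(dice(auto, manual, lab)), 3))
```

Quantile mapping is only one way to histogram-match. ITK's HistogramMatchingImageFilter, for example, matches a fixed number of histogram points, so results may differ somewhat from whichever implementation was actually used.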
Inter-rater agreement was assessed using the Dice index between segmentations from two independent raters for 5 scans randomly selected from all participants. All manual segmentations were performed in ITK-SNAP.[4]

Results

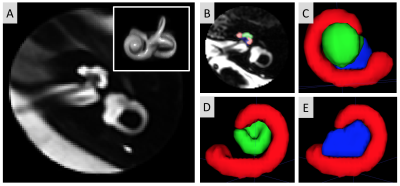

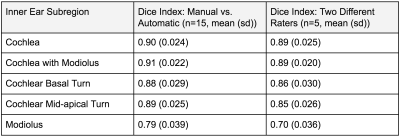

Nonlinear image templates for the left and right cochleae were successfully constructed. The left template is shown in Figure 1a, and the definitions of the cochlear subregions are shown in Figure 1b-e. Agreement between automatic and manual segmentation was excellent for the cochlear basal and mid-apical turns (Dice = 0.88-0.89) and good for the modiolus (Dice = 0.79). Table 1 reports Dice scores for both automatic-versus-manual agreement and inter-rater agreement.

Discussion

We developed a semi-automated approach for segmenting the cochlea on T2-weighted MRI scans. The only manual step is specifying the coordinates of the lateral-most point of the internal auditory canal at the level of the horizontal semicircular canal. This takes an expert less than 30 seconds per scan. The method relies on multi-atlas joint label fusion and showed good agreement with manual segmentation. This indicates that it is sufficiently accurate for quantitative studies of cochlea-related diseases. Manual segmentations were also comparable between two independent raters, indicating that the subregion definitions can be applied reliably. To our knowledge, this cochlea segmentation method is unique in distinguishing between cochlear subregions. It could be used to characterize morphological differences of the cochlea in cochlea-related diseases on T2-weighted images. It could also potentially reveal differing inflammatory patterns across cochlear subregions on T1-weighted images acquired with or without contrast enhancement.

Conclusion

Here we demonstrate the effectiveness of a semi-automated approach to segmenting the cochlea on T2-weighted MRI scans. Given its agreement with manual segmentation, this approach is well suited to imaging-based studies in which a large number of cochleae need to be segmented. In addition, this method may help in investigating imaging-based morphological changes in cochlea-related diseases, as well as inflammatory patterns assessed with enhancing agents.

Acknowledgements

This study was supported by National Institutes of Health grants (P50NS035902, P01NS082330, R01NS046432, R01HD072074, P41EB015922, U54EB020406, U19AG024904, U01NS086090, 003585-00001) and a BrightFocus Foundation Award (A2019052S).

References

[1] Liao W-H, Wu H-M, Wu H-Y, et al. Revisiting the relationship of three-dimensional fluid attenuation inversion recovery imaging and hearing outcomes in adults with idiopathic unilateral sudden sensorineural hearing loss. European Journal of Radiology. 2016;85(12):2188-2194. doi:10.1016/j.ejrad.2016.10.005

[2] Avants BB, Tustison NJ, Song G, Cook PA, Klein A, Gee JC. A reproducible evaluation of ANTs similarity metric performance in brain image registration. Neuroimage. 2011;54(3):2033-2044. doi:10.1016/j.neuroimage.2010.09.025

[3] Wang H, Suh JW, Das SR, Pluta J, Craige C, Yushkevich PA. Multi-Atlas Segmentation with Joint Label Fusion. IEEE Trans Pattern Anal Mach Intell. 2013;35(3):611-623. doi:10.1109/TPAMI.2012.143

[4] Yushkevich PA, Piven J, Hazlett HC, et al. User-guided 3D active contour segmentation of anatomical structures: significantly improved efficiency and reliability. Neuroimage. 2006;31(3):1116-1128.
