3523

Streaking artifact reduction of free-breathing undersampled stack-of-radial MRI using a 3D generative adversarial network

Chang Gao1,2, Vahid Ghodrati1,2, Dylan Nguyen3, Marcel Dominik Nickel4, Thomas Vahle4, Brian Dale5, Xiaodong Zhong6, and Peng Hu1,2

1Department of Radiological Sciences, University of California Los Angeles, Los Angeles, CA, United States, 2Department of Physics and Biology in Medicine, University of California Los Angeles, Los Angeles, CA, United States, 3Department of Bioengineering, University of California Los Angeles, Los Angeles, CA, United States, 4MR Application Predevelopment, Siemens Healthcare GmbH, Erlangen, Germany, 5MR R&D Collaborations, Siemens Medical Solutions USA, Inc., Cary, NC, United States, 6MR R&D Collaborations, Siemens Medical Solutions USA, Inc., Los Angeles, CA, United States

Synopsis

Undersampling in free-breathing stack-of-radial MRI is desirable to shorten the scan time but introduces streaking artifacts. Deep learning has shown excellent performance in removing image artifacts. We developed a 3D residual generative adversarial network (3D-GAN) to remove streaking artifacts caused by radial undersampling. We trained and tested the network using paired undersampled (acceleration factors of 3.1x to 6.3x) and fully-sampled images from single-echo and multi-echo acquisitions. We demonstrate the feasibility of the network for acceleration factors of 3.1x to 6.3x and 6 different echo times.

INTRODUCTION

3D golden-angle stack-of-radial trajectories combined with respiratory self-gating can reduce respiratory motion artifacts in free-breathing abdominal imaging, but at the cost of a prolonged acquisition1-4. Radial undersampling can shorten the acquisition time but introduces streaking artifacts. Deep neural networks have shown potential for image artifact reduction5,6. However, 2D networks cannot take full advantage of the information across slices. This motivated us to implement a 3D network to remove streaking artifacts from the 3D images. To reduce the commonly observed over-smoothing effect, we trained the network with an adversarial loss.

METHODS

Data acquisition and processing

Our study was HIPAA compliant and approved by our Institutional Review Board. Written informed consent was obtained from each subject. We used a prototype 3D free-breathing golden-angle stack-of-radial gradient echo sequence to acquire multi-echo and single-echo images of 14 healthy volunteers on two 3T MRI scanners (13 on a MAGNETOM PrismaFit and 1 on a MAGNETOM Skyra, Siemens Healthcare, Erlangen, Germany). The multi-echo acquisition used six echoes (TE = 1.23, 2.46, 3.69, 4.92, 6.15, and 7.38 ms), TR = 9 ms, acquisition time = 9 min, matrix size = 160x160, 1500 radial spokes/partition (1306 radial spokes/partition for one subject), field of view = 450x450 mm², 64 slices, slice thickness = 3.5 mm, flip angle = 4°, and bandwidth = 1080 Hz/pixel. The single-echo acquisition used the same parameters except matrix size = 256x256, 3500 radial spokes/partition, TE = 1.23 ms, TR = 3 ms, and acquisition time = 7 min.

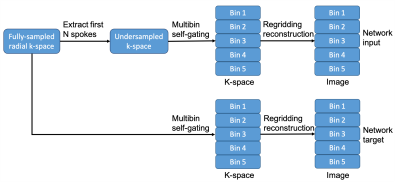

As shown in Figure 1, a prototype retrospective undersampling framework, implemented with the image reconstruction framework of the MRI scanner (Siemens Healthcare, Erlangen, Germany), MATLAB (MathWorks, Natick, MA, USA), and command scripts, was used to extract a specified number of spokes from the fully-sampled data. The radial spokes of the fully-sampled and undersampled data (3.1x, 4.2x, and 6.3x acceleration) were then grouped into 5 respiratory states using self-gating7,8 and reconstructed. The subjects were randomly divided into three sets: training (8 subjects), validation (2 subjects), and testing (4 subjects).
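The spoke extraction and respiratory grouping can be sketched as follows. This is a minimal illustration under stated assumptions, not the prototype framework: it assumes that the acceleration factor R is the ratio of fully-sampled to retained spokes, that the retained spokes are the first round(N/R) in acquisition order (with golden-angle ordering, any contiguous run of spokes covers k-space nearly uniformly), and that the 5 respiratory states are equal-count bins of a per-spoke self-gating signal. The function names and the synthetic gating signal are hypothetical.

```python
import math

# Golden angle for 2D radial sampling: 180 deg * (sqrt(5) - 1) / 2, about 111.25 deg.
GOLDEN_ANGLE_DEG = 180.0 * (math.sqrt(5) - 1) / 2


def spoke_angles(n_spokes):
    """Azimuthal angle (degrees, modulo 180) of each spoke in acquisition order."""
    return [(i * GOLDEN_ANGLE_DEG) % 180.0 for i in range(n_spokes)]


def retrospective_undersample(n_spokes, accel):
    """Hypothetical rule: keep the first round(n_spokes / accel) spokes."""
    n_keep = round(n_spokes / accel)
    return list(range(n_keep))


def bin_by_respiration(spoke_ids, gating_signal, n_states=5):
    """Hypothetical amplitude binning: sort spokes by their self-gating value
    and split them into n_states groups of (nearly) equal size."""
    ordered = sorted(spoke_ids, key=lambda i: gating_signal[i])
    bins = []
    for s in range(n_states):
        start = s * len(ordered) // n_states
        stop = (s + 1) * len(ordered) // n_states
        bins.append(sorted(ordered[start:stop]))  # acquisition order within a bin
    return bins


# Example: one partition with 1500 fully-sampled spokes at 6.3x acceleration,
# with a synthetic breathing signal (period of 40 spokes, illustrative only).
kept = retrospective_undersample(1500, 6.3)
gating = [math.sin(2 * math.pi * i / 40) for i in range(1500)]
states = bin_by_respiration(kept, gating)
print(len(kept), [len(b) for b in states])
```

Under these assumptions, 238 of 1500 spokes are kept and split into five respiratory states of 47 or 48 spokes each.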

Network architecture and training

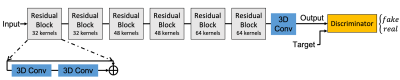

Because of the limited size of the training set, we used a residual network, a parameter-efficient architecture, as the generator. A separate residual GAN was trained for each acceleration factor. As shown in Figure 2, the generator of each network consisted of 6 residual blocks, each with two 3D convolution layers. In addition to the adversarial loss, L2 (weight = 0.6) and SSIM (weight = 0.4) losses were used to constrain the output of the generator. The inputs were 64x64x64x2 patches of the complex images (real and imaginary parts as two channels) randomly cropped from the original 3D stacks, while the outputs and targets were 3D magnitude images.
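The generator objective described above can be sketched in plain Python. This is a minimal, framework-free illustration under stated assumptions: the SSIM term is taken as 1 − SSIM so that lower is better, the adversarial term is given unit weight, and SSIM is computed globally over a flattened patch rather than with the local sliding windows of standard SSIM. The helper that picks a random 64x64x64 patch origin is likewise hypothetical.

```python
import random


def random_patch_origin(volume_shape, patch=(64, 64, 64), rng=random):
    """Corner index of a randomly placed patch that fits inside the volume."""
    return tuple(rng.randint(0, dim - p) for dim, p in zip(volume_shape, patch))


def mse(x, y):
    """Mean squared error (the L2 term) between two flattened images."""
    return sum((a - b) ** 2 for a, b in zip(x, y)) / len(x)


def global_ssim(x, y, data_range=1.0, k1=0.01, k2=0.03):
    """Single-window SSIM over whole flattened images (simplified, not windowed)."""
    n = len(x)
    c1, c2 = (k1 * data_range) ** 2, (k2 * data_range) ** 2
    mx, my = sum(x) / n, sum(y) / n
    vx = sum((a - mx) ** 2 for a in x) / n
    vy = sum((b - my) ** 2 for b in y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y)) / n
    return ((2 * mx * my + c1) * (2 * cov + c2)) / (
        (mx ** 2 + my ** 2 + c1) * (vx + vy + c2)
    )


def generator_loss(adv_loss, output, target, w_l2=0.6, w_ssim=0.4):
    """Adversarial term plus weighted L2 and (1 - SSIM) terms (assumed form)."""
    return (adv_loss
            + w_l2 * mse(output, target)
            + w_ssim * (1.0 - global_ssim(output, target)))


# A 160x160x64 multi-echo stack only admits z-origin 0 for a 64-slice patch.
origin = random_patch_origin((160, 160, 64))
target = [0.1, 0.5, 0.9, 0.3]
output = [0.2, 0.4, 0.8, 0.3]
print(origin, generator_loss(0.7, output, target))
```

When the output matches the target exactly, the L2 and SSIM terms vanish and only the adversarial term remains.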

For training, we used the Adam optimizer with momentum parameter β1 = 0.9, mini-batch size = 16, and initial learning rates of 0.0001 for the generator and 0.00001 for the discriminator. Network weights were initialized from a normal distribution with mean µ = 0 and standard deviation σ = 0.01. Training was performed in PyTorch on a commercially available graphics processing unit (NVIDIA Titan RTX, 24 GB memory).
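The initialization and optimizer settings above can be illustrated with a framework-free sketch of the standard Adam update. The second-moment decay β2 = 0.999 and ε = 1e-8 are assumptions (common defaults, e.g., in PyTorch, but not stated in this abstract), and the class and function names are hypothetical; in practice the PyTorch optimizer would be used.

```python
import math
import random
import statistics


def init_weights(n, mean=0.0, std=0.01, seed=0):
    """Draw n weights from a normal distribution with the given mean and std."""
    rng = random.Random(seed)
    return [rng.gauss(mean, std) for _ in range(n)]


class Adam:
    """Minimal Adam optimizer for a flat list of scalar parameters."""

    def __init__(self, lr, beta1=0.9, beta2=0.999, eps=1e-8):
        self.lr, self.beta1, self.beta2, self.eps = lr, beta1, beta2, eps
        self.m = None  # first-moment (momentum) estimates
        self.v = None  # second-moment estimates
        self.t = 0     # step counter used for bias correction

    def step(self, params, grads):
        if self.m is None:
            self.m = [0.0] * len(params)
            self.v = [0.0] * len(params)
        self.t += 1
        updated = []
        for i, (p, g) in enumerate(zip(params, grads)):
            self.m[i] = self.beta1 * self.m[i] + (1 - self.beta1) * g
            self.v[i] = self.beta2 * self.v[i] + (1 - self.beta2) * g * g
            m_hat = self.m[i] / (1 - self.beta1 ** self.t)
            v_hat = self.v[i] / (1 - self.beta2 ** self.t)
            updated.append(p - self.lr * m_hat / (math.sqrt(v_hat) + self.eps))
        return updated


weights = init_weights(10000)
print(round(statistics.mean(weights), 4), round(statistics.pstdev(weights), 4))

gen_opt = Adam(lr=1e-4)   # generator learning rate
disc_opt = Adam(lr=1e-5)  # discriminator learning rate
print(gen_opt.step([0.0], [2.0]), disc_opt.step([0.0], [2.0]))
```

Because Adam normalizes each step by the running gradient magnitude, the first update moves a parameter by roughly the learning rate regardless of gradient scale, so the tenfold-smaller discriminator rate translates almost directly into tenfold-smaller discriminator steps early in training.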

Statistical analysis

Quantitative analysis was conducted by placing two ROIs in the background air and two ROIs inside the liver on three representative slices (near the liver dome, mid-level, and inferior liver) and measuring the mean and standard deviation (SD) of the pixel intensities within each ROI. The ROI data were analyzed using a Bayesian linear model9 to estimate the effects of the destreaking network and the tissue type on the ROI mean and SD. The ROI mean and SD, the dependent variables, were log-transformed to avoid fitting negative values. Estimated marginal means [95% credible intervals] and Bayesian p-values were computed10. All analyses were done in R11.
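The reason for the log transform can be illustrated with a simplified, non-Bayesian sketch: averaging on the log scale and back-transforming with exp always yields positive estimates (geometric means), and differences on the log scale become ratios on the original scale. This is not the rstanarm model used in the study, only a frequentist point-estimate analogue for a single grouping factor; the function name and the toy values are hypothetical.

```python
import math
from collections import defaultdict


def back_transformed_group_means(values, groups):
    """Average log(values) within each group and back-transform with exp.

    With one categorical predictor these equal the least-squares cell means of
    a linear model on the log scale; on the original scale they are geometric means.
    """
    log_sums = defaultdict(float)
    counts = defaultdict(int)
    for value, group in zip(values, groups):
        log_sums[group] += math.log(value)  # requires strictly positive values
        counts[group] += 1
    return {g: math.exp(log_sums[g] / counts[g]) for g in log_sums}


# Toy ROI SD values (not study data) before and after destreaking.
sd = [100.0, 10000.0, 10.0, 1000.0]
state = ["before", "before", "after", "after"]
means = back_transformed_group_means(sd, state)
print(means, means["after"] / means["before"])
```

Here the toy "before" and "after" groups back-transform to about 1000 and 100, a ratio of 0.1, and no back-transformed estimate can be negative.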

RESULTS

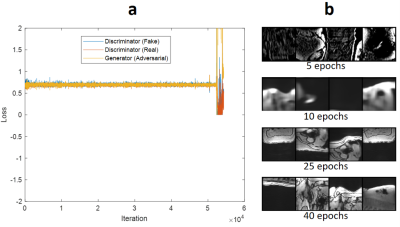

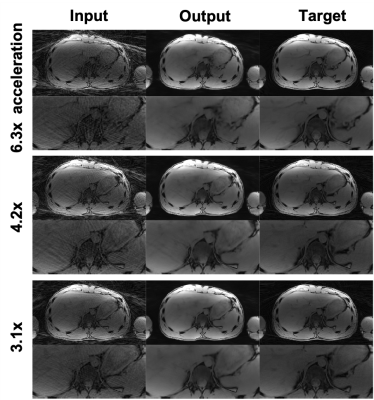

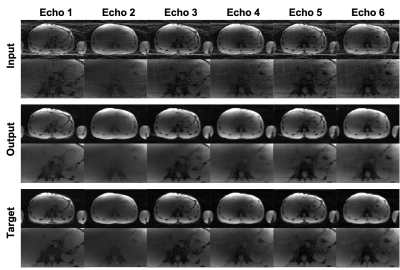

Figure 3(a) shows the adversarial part of the loss function over the training iterations. Between iterations 10000 and 50000, all three components oscillated around the equilibrium state (0.7). Figure 3(b) shows qualitative outputs for validation cases at different epochs. We stopped training at epoch 30 because, in our experience, networks trained for 25-40 epochs improved image quality sufficiently.

Figure 4 shows qualitative results for a single-echo test case with acceleration factors from 6.3x to 3.1x. The proposed networks successfully reduced streaking artifacts for all acceleration factors. Figure 5 shows qualitative results for a multi-echo test case at a 4.2x acceleration factor. The network successfully reduced streaking artifacts at all six echo times.
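The equilibrium value of about 0.7 in Figure 3(a) is consistent with a binary cross-entropy adversarial loss, which the abstract does not state and which is assumed here: when the discriminator can no longer tell real from generated images it outputs D = 0.5, and each of the three loss components equals −ln 0.5 = ln 2 ≈ 0.693. A minimal check, assuming the common non-saturating generator loss:

```python
import math


def bce(p, label):
    """Binary cross-entropy for a predicted probability p and a label in {0, 1}."""
    return -(label * math.log(p) + (1 - label) * math.log(1 - p))


d_out = 0.5  # discriminator output at equilibrium (cannot distinguish real from fake)
components = {
    "discriminator, real": bce(d_out, 1),
    "discriminator, fake": bce(d_out, 0),
    "generator (non-saturating)": bce(d_out, 1),
}
for name, value in components.items():
    print(f"{name}: {value:.3f}")  # each prints 0.693
```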

The ROI SD in the background fell significantly (p < 0.001) after destreaking, from 1182 [1071, 1301] to 193 [175, 213]. Similarly, the ROI SD in the liver parenchyma fell significantly (p < 0.001) after destreaking, from 777 [699, 850] to 534 [480, 584]. The ROI mean in the background also fell significantly (p < 0.001) after destreaking, from 2821 [2560, 3091] to 1730 [1575, 1915]. In contrast, the ROI mean in the liver parenchyma increased significantly (p = 0.013) after destreaking, from 6208 [5657, 6840] to 7630 [6859, 8356].
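For orientation, the relative changes implied by the reported point estimates can be computed directly. These are ratios of the estimated marginal means above, not posterior estimates of the change, and carry no credible intervals.

```python
# Estimated marginal means before and after destreaking, as reported above.
reported = {
    "background SD": (1182, 193),
    "liver SD": (777, 534),
    "background mean": (2821, 1730),
    "liver mean": (6208, 7630),
}


def percent_change(before, after):
    """Relative change from `before` to `after`, in percent."""
    return 100.0 * (after - before) / before


for name, (before, after) in reported.items():
    print(f"{name}: {percent_change(before, after):+.1f}%")
```

This prints -83.7% for the background SD, -31.3% for the liver SD, -38.7% for the background mean, and +22.9% for the liver mean.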

DISCUSSION AND CONCLUSION

We developed a 3D residual GAN for streaking artifact reduction in free-breathing stack-of-radial MRI and demonstrated its feasibility for different undersampling rates and echo times. The proposed 3D residual GAN has the potential to shorten the acquisition time and improve the image quality of free-breathing stack-of-radial MRI.

Acknowledgements

This study was supported in part by Siemens Medical Solutions USA, Inc. and the Department of Radiological Sciences at UCLA.

References

- Block KT, Chandarana H, Milla S, et al. Towards Routine Clinical Use of Radial Stack-of-Stars 3D Gradient-Echo Sequences for Reducing Motion Sensitivity. J Korean Soc Magn Reson Med. 2014;18(2):87.

- Fujinaga Y, Kitou Y, Ohya A, et al. Advantages of radial volumetric breath-hold examination (VIBE) with k-space weighted image contrast reconstruction (KWIC) over Cartesian VIBE in liver imaging of volunteers simulating inadequate or no breath-holding ability. Eur Radiol. 2016;26(8):2790-2797.

- Armstrong T, Dregely I, Stemmer A, et al. Free-breathing liver fat quantification using a multiecho 3D stack-of-radial technique. Magn Reson Med. 2018;79(1):370-382.

- Zhong X, Hu HH, Armstrong T, et al. Free‐Breathing Volumetric Liver and Proton Density Fat Fraction Quantification in Pediatric Patients Using Stack‐of‐Radial MRI With Self‐Gating Motion Compensation. J Magn Reson Imaging. Published online June 2020. doi:10.1002/jmri.27205.

- Han Y, Yoo J, Kim HH, Shin HJ, Sung K, Ye JC. Deep learning with domain adaptation for accelerated projection‐reconstruction MR. Magn Reson Med. 2018;80(3):1189-1205.

- Hauptmann A, Arridge S, Lucka F, Muthurangu V, Steeden JA. Real‐time cardiovascular MR with spatio‐temporal artifact suppression using deep learning–proof of concept in congenital heart disease. Magn Reson Med. 2019;81(2):1143-1156.

- Grimm R, Block KT, Hutter J, Forman C, Hintze C, Kiefer B, Hornegger J. Self-gating reconstructions of motion and perfusion for free-breathing T1-weighted DCE-MRI of the thorax using 3D stack-of-stars GRE imaging. Proc Intl Soc Mag Reson Med. 2012;20:598.

- Zhong X, Armstrong T, Nickel MD, et al. Effect of respiratory motion on free-breathing 3D stack-of-radial liver R2∗ relaxometry and improved quantification accuracy using self-gating. Magn Reson Med. 2020;83:1964-1978.

- Goodrich B, Gabry J, Ali I, Brilleman S. rstanarm: Bayesian applied regression modeling via Stan. R package version 2.19.3. 2020. https://mc-stan.org/rstanarm.

- Makowski D, Ben-Shachar M, Lüdecke D. bayestestR: Describing Effects and their Uncertainty, Existence and Significance within the Bayesian Framework. J Open Source Softw. 2019;4(40):1541.

- R Core Team. R: A language and environment for statistical computing. Vienna, Austria: R Foundation for Statistical Computing; 2018. https://www.R-project.org/.

Figures

Figure 1. Retrospective undersampling and reconstruction framework to generate the input and target data needed for training the 3D GAN.

Figure 2. The proposed residual GAN structure for streaking artifact reduction.

Figure 3. Training convergence: (a) adversarial loss over iterations for the generator and the discriminator of the network. The discriminator loss contains two components associated with its classification performance on real and fake images. All three components converged to an equilibrium state (0.7) and oscillated around it for iterations 10000-50000; after iteration ≈ 50000, the loss components diverged. (b) Qualitative validation results across epochs. Training for 25-40 epochs improved image quality sufficiently.

Figure 4. Example image quality of the single-echo test results with 6.3x to 3.1x acceleration factors. The input shows the undersampled images, the output shows the images after destreaking by our network, and the target shows the fully-sampled ground-truth images.

Figure 5. Example image quality of the multi-echo test results at a 4.2x acceleration factor. The input shows the undersampled images, the output shows the images after destreaking by our network, and the target shows the fully-sampled ground-truth images.