3493

A semi-supervised level-set loss for white matter hyperintensities segmentation on FLAIR without manual labels

1Department of Diagnostic Radiology, The University of Hong Kong, Hong Kong, Hong Kong, 2Department of Rehabilitation Science, The Hong Kong Polytechnic University, Hong Kong, Hong Kong, 3Department of Medicine, The University of Hong Kong, Hong Kong, Hong Kong

Synopsis

We propose a semi-supervised training scheme for white matter hyperintensities (WMHs) segmentation using a V-Net on FLAIR images. The training procedure requires no manually labeled data, only a small amount of domain knowledge about WMHs. The segmentation obtained by the V-Net trained with the proposed scheme outperformed that obtained with a supervised loss and manual labels, showing potential for generalization to other medical imaging applications.

INTRODUCTION

White matter hyperintensities (WMHs) are observed on T2-weighted (T2W) and fluid-attenuated inversion recovery (FLAIR) magnetic resonance (MR) images of elderly patients.1 They are presumed to be of vascular origin, caused by cerebral small vessel disease, and are associated with cognitive impairment and dementia.2,3 Automatic brain lesion segmentation in MR images is a prerequisite for the quantitative assessment of brain health in large-scale studies.4 Recent WMHs segmentation techniques are mostly based on convolutional neural networks (CNNs), which require manual WMHs labels for network training.5,6 In practice, labeling large numbers of medical images is challenging because it is time-consuming and costly.7 To overcome this issue, we developed a semi-supervised training scheme to train a 3D V-Net for WMHs segmentation on FLAIR images, which requires only some domain knowledge about WMHs.8,9

METHODOLOGY

The proposed semi-supervised training scheme is based on two observations: (1) WMHs typically have a higher signal intensity than other brain tissues on FLAIR images, and (2) they appear only in white matter. Accordingly, we defined a level-set (LS) loss inspired by level-set energy functions. In level-set methods, the image domain is separated into a foreground area $1-H(\Phi)$ and a background area $H(\Phi)$, where $H(\cdot)$ is the Heaviside step function and $\Phi(x)$ is a signed-distance function. The loss function contains three energy terms: (1) the image intensity difference between the foreground area and an estimated foreground value $\mu_f$ inside an ROI mask, $(I(x)-\mu_f)^2 \cdot (1-H(\Phi)) \cdot \mathrm{ROI}$; (2) the difference between the background area and an estimated background value $\mu_b$ inside an ROI mask, $(I(x)-\mu_b)^2 \cdot H(\Phi) \cdot \mathrm{ROI}$; and (3) the area outside the ROI mask, $(H(\Phi)-\mathrm{ROI})^2 \cdot (1-H(\Phi)) \cdot \mathrm{ROI}$. When the LS loss reaches its minimum, the foreground $1-H(\Phi)$ segments the bright area (i.e., WMHs) in the FLAIR image and the background $H(\Phi)$ segments the white matter area. The ROI is the white matter mask, which restricts the region of interest to white matter tissue.

DATA PREPARATION

We evaluated the proposed LS loss using two WMHs datasets: (1) 60 cases retrieved from the small vessel disease (SVD) database of the MR imaging center of the University of Hong Kong (HKU), for which no manual WMHs labels are available, and (2) 60 cases from the WMH segmentation challenge initiated at the MICCAI 2017 conference, for which manual WMHs contours are publicly available.6 We preprocessed all image data using the scheme described below (summarized in figure 1). First, we reoriented all images to transversal slices and resampled them to the same voxel size of 1 mm × 1 mm × 3 mm (width × height × thickness). The T1W images were then co-registered to the corresponding FLAIR images of the same subject using Elastix (version 4.8).10,11 Both the aligned T1W images and the FLAIR images were processed with SPM12 to correct bias field inhomogeneities.12 Afterward, we removed the skull using the BET tool of FSL and segmented the brain tissues into three classes, cerebrospinal fluid (CSF), gray matter (GM), and white matter (WM), using the FAST tool of FSL.13-15 We applied a histogram transform to the FLAIR images using the obtained CSF, GM, and WM masks such that the CSF area has an average intensity of 0, the WM area has an average intensity of 1, and bright WMHs, if any, have intensities above 1. Finally, we cropped a 128 × 128 × 48 image patch from each FLAIR image for network training and testing. The WM mask was used as the ROI mask.

The 60 cases from HKU were used for training, and the 60 cases from the WMH challenge, which have ground truth labels, were used for testing. We trained a 3D V-Net using the proposed level-set loss, where the last layer of the V-Net is a Tanh layer so that its output can be used as a signed distance function $\Phi(x)$, from which foreground and background are defined via $H(\Phi)$ as discussed. For comparison, we trained another V-Net with a regular cross-entropy (CE) loss using the ground truth labels of the 60 WMH challenge cases.
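To make the three energy terms of the level-set loss concrete, the following is a minimal NumPy sketch evaluated on a toy volume. It is not the authors' implementation: the arctan-based smooth Heaviside, the values `mu_f=2.0` and `mu_b=1.0`, the equal term weights, and the function names are all assumptions introduced for illustration. The third term is transcribed literally from the formula given in the text.

```python
import numpy as np


def smooth_heaviside(phi, eps=0.1):
    """Differentiable approximation of the Heaviside step H(phi).

    The arctan form and eps are assumptions; the text only names H(.).
    """
    return 0.5 * (1.0 + (2.0 / np.pi) * np.arctan(phi / eps))


def level_set_loss(phi, image, roi, mu_f=2.0, mu_b=1.0, weights=(1.0, 1.0, 1.0)):
    """Mean of the weighted sum of the three energy terms described in the text.

    phi   : network output in [-1, 1] (Tanh layer), used as Phi(x)
    image : normalized FLAIR intensities I(x)
    roi   : binary white matter mask (1 inside WM, 0 elsewhere)
    mu_f, mu_b, weights : hypothetical values, not taken from the text
    """
    h = smooth_heaviside(phi)   # background indicator H(Phi)
    fg = 1.0 - h                # foreground indicator 1 - H(Phi)
    term_fg = (image - mu_f) ** 2 * fg * roi
    term_bg = (image - mu_b) ** 2 * h * roi
    # Third term, written exactly as in the text: it vanishes where roi == 0
    # and grows with the amount of foreground inside the ROI.
    term_roi = (h - roi) ** 2 * fg * roi
    w1, w2, w3 = weights
    return float(np.mean(w1 * term_fg + w2 * term_bg + w3 * term_roi))


# Toy volume: white matter at intensity 1 with a bright lesion at intensity 2.
image = np.ones((4, 8, 8))
image[1:3, 2:5, 2:5] = 2.0
roi = np.ones_like(image)

lesion = image > 1.5
phi_good = np.where(lesion, -1.0, 1.0)  # negative Phi marks the lesion as foreground
phi_bad = -phi_good                     # foreground and background swapped

loss_good = level_set_loss(phi_good, image, roi)
loss_bad = level_set_loss(phi_bad, image, roi)
```

In this toy case the labeling that assigns the bright region to the foreground yields the lower loss, which is the behavior the loss is meant to reward. In actual training the same expression would be written in a framework with automatic differentiation so that gradients can flow back through the Tanh output.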
For the CE loss, we used 3-fold cross-validation: in each fold, we randomly picked 40 cases for training and used the remaining 20 cases for testing. For the LS loss, we trained the V-Net on all HKU images and tested it on the 60 challenge images.

EXPERIMENTAL RESULTS

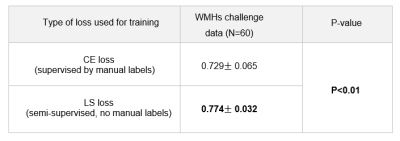

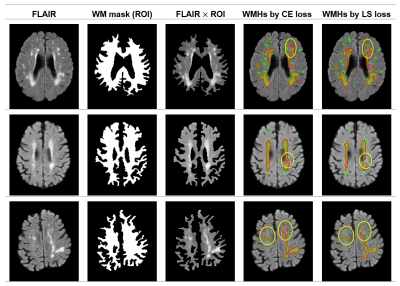

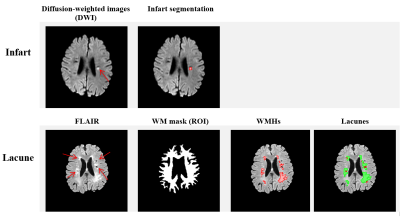
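The comparison in this section is reported as a Dice coefficient (DICE) between the predicted and the manual WMHs masks. As a minimal sketch of how such a score could be computed from a level-set output, assuming the foreground is taken as the voxels where $\Phi(x) < 0$ (so that $1-H(\Phi)=1$); the function names and the handling of empty masks are assumptions, not details from the text:

```python
import numpy as np


def dice_score(pred, truth, eps=1e-8):
    """Dice coefficient 2|A ∩ B| / (|A| + |B|) for two binary masks."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    intersection = np.logical_and(pred, truth).sum()
    denom = pred.sum() + truth.sum()
    # eps avoids division by zero when both masks are empty (an assumption
    # about how empty cases are handled; the text does not specify this).
    return float(2.0 * intersection / (denom + eps))


def mask_from_phi(phi):
    """Foreground mask 1 - H(Phi): voxels where Phi(x) < 0."""
    return np.asarray(phi) < 0


truth = np.zeros((4, 8, 8), dtype=bool)
truth[1:3, 2:5, 2:5] = True   # 18 lesion voxels in the reference mask
phi = np.ones(truth.shape)
phi[1:3, 2:5, 2:4] = -1.0     # prediction covers 12 of those voxels

score = dice_score(mask_from_phi(phi), truth)
```

Here the prediction recovers 12 of 18 reference voxels with no false positives, so the score is 2·12 / (12 + 18) = 0.8.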

In figure 2, we show the DICE of the V-Net trained with the CE loss and with the proposed LS loss. Student's t-test indicates that the DICE difference between the LS loss and the CE loss is significant (p<0.01). Figure 3 shows the segmentation results obtained by the V-Net trained with each loss function. Beyond WMHs segmentation, we applied the LS loss to other subtypes of small vessel disease, again without manual labels. We segmented infarcts on diffusion-weighted images, where they also have higher signal intensity, and lacunes on FLAIR, which are hypointense areas near WMHs. Figure 4 shows preliminary results for infarct and lacune segmentation.

DISCUSSION

The proposed LS loss has great potential for addressing the lack of ground truth in medical image segmentation, as demonstrated for WMHs and two other subtypes of small vessel disease. For white matter hyperintensities segmentation, we showed that a V-Net can be trained without ground truth labels. The segmentation performance of the V-Net trained with the LS loss is significantly better than that of the V-Net trained with the CE loss, which requires ground truth WMHs labels.

Acknowledgements

The work is supported by the HKU URC Seed Fund.

References

1. Polman CH, Reingold SC, Banwell B, et al. Diagnostic criteria for multiple sclerosis: 2010 revisions to the McDonald criteria. Annals of Neurology. 2011;69(2):292-302.

2. Wardlaw JM, Smith EE, Biessels GJ, et al. Neuroimaging standards for research into small vessel disease and its contribution to ageing and neurodegeneration. The Lancet Neurology. 2013;12(8):822-838.

3. Harrell LE, Duvall E, Folks DG, Duke L, et al. The relationship of high-intensity signals on magnetic resonance images to cognitive and psychiatric state in Alzheimer's disease. Archives of Neurology. 1991;48(11):1136-1140.

4. Driscoll I, Davatzikos C, An Y, et al. Longitudinal pattern of regional brain volume change differentiates normal aging from MCI. Neurology. 2009;72(22):1906-1913.

5. Moeskops P, De Bresser J, Kuijf HJ, et al. Evaluation of a deep learning approach for the segmentation of brain tissues and white matter hyperintensities of presumed vascular origin in MRI. NeuroImage: Clinical. 2018;17:251-262.

6. Kuijf HJ, Biesbroek JM, De Bresser J, Heinen R, et al. Standardized assessment of automatic segmentation of white matter hyperintensities and results of the WMH segmentation challenge. IEEE Transactions on Medical Imaging. 2019;38(11):2556-2568.

7. Atlason HE, Love A, Sigurdsson S, Gudnason V and Ellingsen LM. SegAE: Unsupervised white matter lesion segmentation from brain MRIs using a CNN autoencoder. NeuroImage: Clinical. 2019;24:102085.

8. Ronneberger O, Fischer P and Brox T. U-net: Convolutional networks for biomedical image segmentation. In International Conference on Medical Image Computing and Computer-Assisted Intervention. 2015.

9. Milletari F, Navab N and Ahmadi SA. V-net: Fully convolutional neural networks for volumetric medical image segmentation. In 2016 Fourth International Conference on 3D Vision (3DV). 2016.

10. Klein S, Staring M, Murphy K, Viergever MA and Pluim JP. Elastix: a toolbox for intensity-based medical image registration. IEEE Transactions on Medical Imaging. 2009;29(1):196-205.

11. Shamonin DP, Bron EE, Lelieveldt BP, et al. Fast parallel image registration on CPU and GPU for diagnostic classification of Alzheimer's disease. Frontiers in Neuroinformatics. 2014;7:50.

12. Ashburner J and Friston KJ. Unified segmentation. NeuroImage. 2005;26(3):839-851.

13. Smith SM. Fast robust automated brain extraction. Human Brain Mapping. 2002;17(3):143-155.

14. Jenkinson M, Pechaud M, Smith S, et al. BET2: MR-based estimation of brain, skull and scalp surfaces. In Eleventh Annual Meeting of the Organization for Human Brain Mapping. 2005.

15. Zhang Y, Brady M, Smith S, et al. Segmentation of brain MR images through a hidden Markov random field model and the expectation-maximization algorithm. IEEE Transactions on Medical Imaging. 2001;20(1):45-57.

Figures