3362

3-plane Localizer-aided Background Removal in Magnetic Resonance (MR) Images Using Deep Learning

GE Healthcare, Bengaluru, India

Synopsis

This study presents a new Deep-Learning (DL) based strategy for background computation in MR images. 3-plane Localizer scans are used for background subtraction in all subsequent scans of the same MR examination. This is accomplished by obtaining foreground-background masks for the Localizer images using a U-Net model and applying image resampling to the obtained masks to compute the background for subsequent scans. Comparison with existing algorithms shows that the proposed method is more accurate and effective and provides improved visual contrast. It can also be used across anatomies and MR pulse sequences, as opposed to other methods that require anatomy- or sequence-specific tuning and adaptive parameter adjustments.

Introduction

Background subtraction is among the most studied fields in computer vision. Many of those studies [1-3] use mathematical models to remove unnecessary background data. It also has an important application in medical imaging, where the background (non-anatomical) region is clinically irrelevant. Background-subtracted images can improve the diagnostic accuracy of computer-aided diagnosis (CAD) algorithms, because the cost functions in those algorithms can be evaluated on the image only rather than on the image and its background combined (e.g. improved segmentation). Many studies [4-8] on background identification use methods such as thresholding, boundary tracing and clustering, while the use of DL [9] for the same purpose has recently been explored with promising results.

Localizers are a set of 3-plane, low-resolution, large field-of-view scans obtained at the beginning of every MRI exam and used for planning the subsequent scans in the exam. This study explores the potential of using Localizer DICOM (Digital Imaging and Communications in Medicine) images for background subtraction in all subsequent scans, i.e. it identifies the pixels in the non-anatomical region of MR images and sets them to zero.

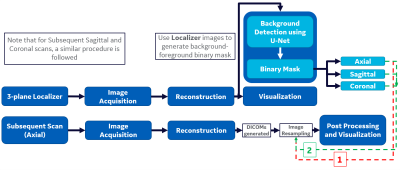

This is accomplished in three steps: 1) Obtaining foreground (pixel=1) and background (pixel=0) binary masks for Localizer images using a DL model, 2) Using image resampling to compute the background for subsequent scans, with the binary Localizer mask from Step 1 as the reference image and the subsequent scan DICOM as the target image, 3) Multiplying the computed mask from Step 2 with the subsequent scan DICOM to suppress the background. The main advantages of this approach are: 1) Improved time efficiency, because DL-based background identification is performed only once per exam (as opposed to once per slice per scan [9]), followed by minimal processing to achieve background-free images. 2) A single Localizer scan can be used for background subtraction of all subsequent scans of the same MRI examination, which eliminates the need to re-train the network for different image types or contrasts as in the earlier attempt [9]. To the best of our knowledge, this technique has not been used before.
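Step 3 above is an element-wise multiplication. A minimal sketch in Python with NumPy follows; the function name `suppress_background` and the toy pixel values are illustrative assumptions, not part of the original pipeline.

```python
import numpy as np

def suppress_background(image: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Set every pixel that the binary mask marks as background (0) to zero."""
    if image.shape != mask.shape:
        raise ValueError("image and mask must share the same shape")
    # Binarize defensively, then multiply in the image's own dtype.
    return image * (mask > 0).astype(image.dtype)

# Toy 3x3 "slice" with a few anatomical pixels surrounded by background noise.
image = np.array([[12, 40, 9],
                  [300, 512, 7],
                  [15, 280, 11]], dtype=np.int16)
mask = np.array([[0, 0, 0],
                 [1, 1, 0],
                 [0, 1, 0]], dtype=np.uint8)

cleaned = suppress_background(image, mask)
```

Foreground intensities are left untouched, so only the non-anatomical pixels change.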

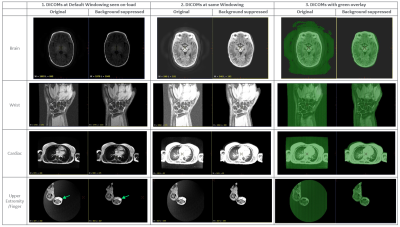

Experimental results on Brain, Cardiac, Wrist and Upper Extremity anatomical scans show visually enhanced contrast and improved Window-Width/Window-Levels (WW/WL).

Methods

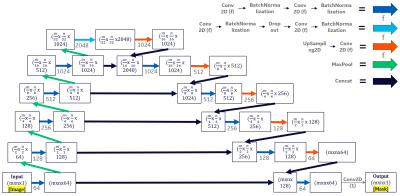

U-Net for segmentation in Localizer images: Figure 1 describes the U-Net [10] architecture and the training hyperparameters used. A total of 4,481 Localizer images (1,455 Axial, 1,456 Sagittal and 1,570 Coronal) of sizes 256x256 and 512x512, obtained from General Electric MRI scanners, were used for training (75%) and testing (25%). Ground-truth masks were annotated using a specialized pipeline that combined image-processing and morphological operations (smoothing, histogram equalization, Otsu thresholding, dilation, erosion, hole filling and small-object removal) with fine-tuning for individual images. Online augmentation (rotation, flipping, intensity gradient, noise) was used during training.
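The classical operations listed for ground-truth annotation can be chained roughly as follows. This is a sketch only, assuming NumPy and SciPy: the function names, the Gaussian sigma, the iteration counts and `min_size` are placeholder assumptions rather than the values used in the study, and the histogram-equalization and per-image fine-tuning steps are omitted.

```python
import numpy as np
from scipy import ndimage

def otsu_threshold(image: np.ndarray, bins: int = 256) -> float:
    """Return the intensity that maximizes the between-class variance (Otsu)."""
    hist, edges = np.histogram(image, bins=bins)
    centers = (edges[:-1] + edges[1:]) / 2.0
    w0 = np.cumsum(hist)                 # pixel count at or below each bin
    w1 = w0[-1] - w0                     # pixel count above each bin
    s0 = np.cumsum(hist * centers)
    mu0 = s0 / np.maximum(w0, 1)         # mean of the lower class
    mu1 = (s0[-1] - s0) / np.maximum(w1, 1)  # mean of the upper class
    between = w0 * w1 * (mu0 - mu1) ** 2
    return float(centers[np.argmax(between)])

def rough_foreground_mask(image: np.ndarray, min_size: int = 20) -> np.ndarray:
    """Smooth, threshold, close, fill holes and drop small objects."""
    smoothed = ndimage.gaussian_filter(image.astype(float), sigma=1.0)
    mask = smoothed > otsu_threshold(smoothed)
    mask = ndimage.binary_dilation(mask, iterations=2)
    mask = ndimage.binary_erosion(mask, iterations=2)
    mask = ndimage.binary_fill_holes(mask)
    # Remove connected components smaller than min_size pixels.
    labels, _ = ndimage.label(mask)
    sizes = np.bincount(labels.ravel())
    sizes[0] = 0                         # label 0 is the background
    mask = sizes[labels] >= min_size
    return mask.astype(np.uint8)

# Synthetic test image: noisy background, bright disk with a dark central hole.
rng = np.random.default_rng(0)
yy, xx = np.mgrid[:64, :64]
radius = np.hypot(yy - 32, xx - 32)
demo = rng.uniform(0, 10, size=(64, 64))
demo[(radius <= 20) & (radius > 4)] = 200
demo_mask = rough_foreground_mask(demo)
```

The dark hole inside the disk ends up labelled as foreground because of the hole-filling step, which is the behaviour wanted for low-signal regions enclosed by anatomy.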

Resampling:

The GE Orchestra Software Development Kit (SDK) was used for image reconstruction and DICOM generation. Localizer DICOMs were passed through the U-Net model to obtain foreground-background binary masks. These masks were saved using the SimpleITK (SITK) [11] toolkit in Python while retaining the Localizer metadata, including origin, direction and pixel spacing. The SITK ResampleImageFilter was used to obtain the final resampled masks, using the Localizer masks as reference and the subsequent scan DICOMs as target images (refer to Figure 2).

The extent to which the Localizer images cover the slices of a subsequent scan decides which of the following two background-subtraction pipelines is used:

1. When a slice resides completely within the bounds of the Localizer stack of the same orientation: Only masks of same orientation are resampled, i.e. only masks from Axial/Coronal/Sagittal Localizer stack are used for background removal of an Axial/Coronal/Sagittal series respectively.

2. When a slice does not reside completely within the bounds of the Localizer stack of the same orientation: A union of Localizer Masks resampled from all 3 planes is used.

Resampled masks were then applied to the subsequent scan DICOMs, and the background-free images can then be subjected to any post-processing.
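The choice between the two pipelines can be sketched as below. The coverage map is an assumption of this example: it marks which pixels of the target slice fall inside the same-orientation Localizer stack (it could be obtained, for instance, by resampling an all-ones Localizer volume). The function name `combine_masks` is likewise hypothetical.

```python
import numpy as np

def combine_masks(same_plane_mask, same_plane_coverage, other_plane_masks):
    """Pick the same-orientation mask if the slice is fully covered, else the 3-plane union."""
    if np.all(same_plane_coverage):
        # Pipeline 1: the slice lies entirely within the same-orientation stack.
        return np.asarray(same_plane_mask).astype(np.uint8)
    # Pipeline 2: a pixel is foreground if any of the three resampled masks says so.
    union = np.logical_or.reduce([same_plane_mask, *other_plane_masks])
    return union.astype(np.uint8)

# Toy 2x3 slice whose rightmost column lies outside the Axial Localizer stack.
axial = np.array([[1, 1, 0], [0, 1, 0]], dtype=np.uint8)
axial_coverage = np.array([[1, 1, 0], [1, 1, 0]], dtype=bool)
coronal = np.array([[0, 1, 1], [0, 0, 0]], dtype=np.uint8)
sagittal = np.array([[0, 0, 0], [0, 1, 1]], dtype=np.uint8)

partial = combine_masks(axial, axial_coverage, [coronal, sagittal])
full = combine_masks(axial, np.ones_like(axial, dtype=bool), [coronal, sagittal])
```

In the partially covered case, the Coronal and Sagittal masks supply foreground for the column that the Axial stack does not reach.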

Results & Discussion

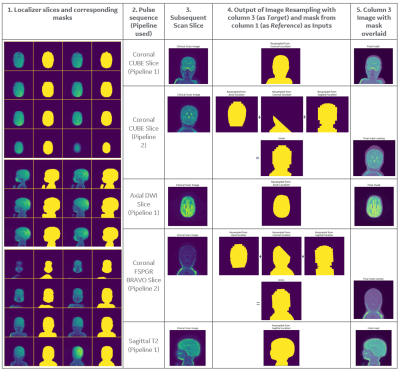

The end-to-end pipeline has been tested across 10 MRI exams of different anatomies with varying image contrasts. The evaluation strategy was two-fold: 1. (Quantitative) Evaluating performance of U-Net with respect to ground truth and other techniques, 2. (Qualitative) Image quality (IQ) reviews of background-free images by clinical application specialists using GE Advantage Workstation (AW). Following are the results:

1. As shown in Figure 3.a, multiple background-identification techniques were compared, and the proposed DL-based approach gave superior performance in terms of DICE score.

2. Mean DICE score of 0.9829 (std=0.01689, min=0.7653, max=1.0) was achieved on training data, and mean DICE of 0.9795 (std=0.01869, min=0.7401, max=1.0) on test data for U-Net model. It was also qualitatively evaluated by visualizing model inferencing results (Figure 3.b).

3. Better windowing was observed for background-suppressed images, as noted by clinical application specialists (refer to Figure 5). This is in accordance with the study [9], which shows how background suppression can improve windowing levels.

4. Observations 1-3 were confirmed on images of different anatomies acquired using different pulse sequences. (Refer Figure 4,5)
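The DICE score reported in result 2 measures the overlap between a predicted mask and its ground truth as twice the intersection divided by the sum of the two mask sizes. A minimal sketch follows; treating two empty masks as a perfect match is a convention assumed here, not something stated in the study.

```python
import numpy as np

def dice_score(pred, truth) -> float:
    """DICE = 2 * |pred AND truth| / (|pred| + |truth|) for binary masks."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    total = pred.sum() + truth.sum()
    if total == 0:
        return 1.0  # assumed convention: two empty masks agree perfectly
    return float(2.0 * np.logical_and(pred, truth).sum() / total)

pred = np.array([[1, 1, 0], [0, 1, 0]])
truth = np.array([[1, 0, 0], [0, 1, 1]])
score = dice_score(pred, truth)  # 2 * 2 / (3 + 3)
```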

Conclusions

A new and generic strategy of obtaining background-suppressed MR images by applying background information derived from Localizer images to all subsequent scans was demonstrated. Experimental results confirmed good performance in terms of statistical image quality, robustness and improved accuracy over previous attempts.

Acknowledgements

We would like to thank GE Healthcare for providing us with the necessary resources for this work. Also, a special thanks to Ananthakrishna Madhyastha for his continuous encouragement and support.

References

[1] T. Bouwmans, “Recent advanced statistical background modeling for foreground detection: A systematic survey,” Recent Patents on Computer Science, vol. 4, no. 3, pp. 147–176, Sep. 2011.

[2] Tian, Maoqing, et al. "Eliminating background-bias for robust person re-identification." Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2018.

[3] Bouwmans T, Javed S, Sultana M, Jung SK. Deep neural network concepts for background subtraction: A systematic review and comparative evaluation. Neural Netw. 2019 Sep;117:8-66. doi: 10.1016/j.neunet.2019.04.024. Epub 2019 May 15. PMID: 31129491.

[4] R. Kalicka, A. Pietrenko-Dabrowska and S. Lipinski, "Efficiency of new method of removing the noisy background from the sequence of MRI scans depending on structuring elements used to morphological processing," 2008 1st International Conference on Information Technology, Gdansk, 2008, pp. 1-4, doi: 10.1109/INFTECH.2008.4621682.

[5] J. Zhang and H. K. Huang, "Automatic background recognition and removal (ABRR) in computed radiography images," in IEEE Transactions on Medical Imaging, vol. 16, no. 6, pp. 762-771, Dec. 1997, doi: 10.1109/42.650873.

[6] Kim, Seongjai and H. Lim. “Method of Background Subtraction for Medical Image Segmentation.” (2006).

[7] Atkins, Margaret & Mackiewich, Blair. (2000). Fully Automatic Hybrid Segmentation of the Brain. Handbook of Medical Imaging. 10.1016/B978-012077790-7/50015-1.

[8] J Rameesa Mol et al: “A Simple and Robust Strategy for Background Removal from Brain MR Images” 2018 IOP Conf. Ser.: Mater. Sci. Eng. 396 012039.

[9] Deepthi Sundaran, Dheeraj Kulkarni: “Optimal Windowing of MR Images using Deep Learning: An Enabler for Enhanced Visualization”, 2019; arXiv:1908.00822, http://arxiv.org/abs/1908.00822.

[10] Olaf Ronneberger, Philipp Fischer: “U-Net: Convolutional Networks for Biomedical Image Segmentation”, 2015; arXiv:1505.04597, http://arxiv.org/abs/1505.04597.

[11] Lowekamp BC, Chen DT, Ibáñez L and Blezek D (2013) The Design of SimpleITK. Front. Neuroinform. 7:45. doi: 10.3389/fninf.2013.00045

Figures

Results of different anatomies obtained using their respective Localizers: Note the change in default WW/WL on-load in AW viewer (Col. 1) (Brain: 1683/845 -> 1970/1045; Wrist: 1734/1042 -> 1775/1114; Cardiac: 871/446 -> 900/475; Finger: 427/250 -> 653/367). The range shifts right and dynamic range of visible pixel intensities increases. Observe better distinction of finger mass boundaries in Row 4. Col. 2 shows results at same, increased windowing to show the interference of background when viewing at higher brightness. Col. 3 shows removed background pixels using green overlay.