3348

A Method for Alignment of an Augmented Reality Display of Brain MRI With the Patient’s Head

1Radiology, Stanford University, Stanford, CA, United States

Synopsis

We present a method for aligning an augmented reality (AR) display of brain MRI with the patient’s real-world head, with potential applications to AR neuronavigation systems that rely on a see-through display.

Introduction

Many augmented reality (AR) applications require alignment of virtual content with the real world, e.g. visualization of a brain MRI rendering on a patient’s head. While this problem is solved on 2D displays, where the displacement between virtual and real content can be measured on the screen, accurate alignment on see-through displays is challenging because of the additional depth component of the display. Alignment of virtual with real objects is often achieved with computer vision methods, e.g. by attaching tracking markers to the real-world object. Once a tracking camera recognizes the tracking marker, the system calculates the marker pose and uses it to update the pose of the virtual object [1]. However, while a tracking camera may determine the pose of an object with very high accuracy, an AR user looking through a see-through display might not perceive the virtual object as accurately aligned with the real object, due to limitations of the AR optics [2] or individual physiological differences in perception. In this paper, we present a simple, perception-independent manual alignment method that aligns a virtual rendering of a patient’s head MRI data with the subject’s real head on a see-through AR display [3]. Manual alignment allows the user to place the virtual content at precisely the location where the real-world content is perceived. We then measure the accuracy with which the AR user perceived the alignment of the virtual and real head using MRI-visible capsules placed at specific targets.

Methods

We tested the accuracy of our alignment method on seven healthy subjects (Fig. 1). Each subject’s head was first scanned on a 3T GE MRI scanner using a 3D T1-weighted BRAVO sequence with 1 mm isotropic resolution. The coordinates of four anatomical head landmarks (nasion, nose tip, lateral canthus of the left eye and left tragus) were measured in the MRI scan. The head surface was segmented and rendered on the AR device. Six arbitrary brain areas were chosen and projected onto the head surface as targets for the validation experiments. During the AR alignment procedure, the AR user put on a MagicLeap see-through headset (MagicLeap, FL, USA) and aligned the virtual tip of the MagicLeap controller with the real-world landmarks to place virtual fiducials at the four anatomical head landmarks described above. A linear co-registration of the four landmarks on the virtual head to the four virtual fiducials was then performed using the Kabsch algorithm [4] to align the virtual head rendering with the subject’s real head. The fiducial registration error (FRE) of the co-registration was displayed to the AR user. After successful alignment, the AR user attached liquid-filled, MRI-visible capsules of 5 mm diameter to a swimming cap on the subject’s head at exactly the locations where the virtual targets were perceived. The subject then underwent a second MRI scan with the same 3D T1-weighted sequence as before. To measure the target registration error (TRE), we co-registered the second MRI scan to the first and measured the distance between each capsule and its brain-area target, both projected onto the head surface.

Results
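As an illustration of the landmark co-registration described in the Methods, the following is a minimal sketch of a Kabsch-style rigid fit between four MRI landmarks and four placed fiducials, followed by an FRE computation. It is not the authors' implementation: the function names, the landmark coordinates and the simulated headset pose are hypothetical, and the FRE is assumed here to be the root-mean-square residual distance, which the abstract does not specify. Only the 4 mm repeat threshold is taken from the text.

```python
import numpy as np


def kabsch_align(source, target):
    """Return rotation R and translation t minimising sum ||R @ s_i + t - t_i||^2."""
    source = np.asarray(source, dtype=float)
    target = np.asarray(target, dtype=float)
    src_centroid = source.mean(axis=0)
    tgt_centroid = target.mean(axis=0)
    # 3x3 cross-covariance of the centred point sets.
    H = (source - src_centroid).T @ (target - tgt_centroid)
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so that R is a proper rotation (det = +1).
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = tgt_centroid - R @ src_centroid
    return R, t


def fiducial_registration_error(source, target, R, t):
    """RMS distance between transformed source points and target points (assumed FRE definition)."""
    residuals = (np.asarray(source, dtype=float) @ R.T + t) - np.asarray(target, dtype=float)
    return float(np.sqrt((residuals ** 2).sum(axis=1).mean()))


# Hypothetical landmark coordinates in MRI space (mm).
mri_landmarks = np.array([
    [0.0, 95.0, 10.0],     # nasion
    [0.0, 120.0, -25.0],   # nose tip
    [-48.0, 70.0, 5.0],    # left lateral canthus
    [-75.0, 0.0, -10.0],   # left tragus
])

# Simulate fiducials placed in headset space: a known rotation about z plus a shift.
angle = np.deg2rad(20.0)
true_R = np.array([
    [np.cos(angle), -np.sin(angle), 0.0],
    [np.sin(angle), np.cos(angle), 0.0],
    [0.0, 0.0, 1.0],
])
true_t = np.array([12.0, -30.0, 450.0])
placed_fiducials = mri_landmarks @ true_R.T + true_t

R, t = kabsch_align(mri_landmarks, placed_fiducials)
fre = fiducial_registration_error(mri_landmarks, placed_fiducials, R, t)

FRE_THRESHOLD_MM = 4.0  # repeat threshold reported in the abstract
needs_repeat = fre >= FRE_THRESHOLD_MM
print(f"FRE = {fre:.3f} mm, repeat alignment: {needs_repeat}")
```

With noise-free simulated fiducials the fit recovers the simulated pose and the FRE is essentially zero; with real, manually placed fiducials the FRE would reflect placement error and subject motion, which is what the 4 mm check is meant to catch.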

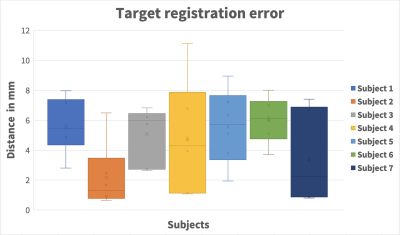

A single alignment task with the virtual fiducials was quick, taking less than 10 seconds per subject. For three subjects the FRE was above 4 mm, e.g. due to subject motion during fiducial placement or incorrect fiducial placement; in these cases the AR user repeated the alignment task until the FRE was below 4 mm and the AR user perceived the virtual model as accurately aligned. Figure 2 shows the TRE, measured as the distance between the capsule center and the original target when both are projected onto the head surface. The mean TRE was 4.7±2.6 mm (mean ± one standard deviation) across all subjects.

Discussion

We have presented an alignment method that allows a user of a see-through AR display to accurately align virtual renderings of medical imaging data with the real patient. Because the AR user manually places the virtual fiducials, this alignment method is robust and independent of the AR user’s individual perception differences. The main difficulty of this alignment method is accurate virtual fiducial placement, which can depend on the AR headset’s optics and the user’s experience. We have also presented an MRI-based validation task that allows the alignment accuracy of AR content with the real world to be determined by recording exactly the locations where the user perceived certain landmarks. The accuracy measurements showed that the current alignment method already provides sufficient accuracy for low-risk medical procedures that tolerate errors of up to 5 mm. Possible contributors to the alignment error include the virtual fiducial placement, subject head motion between AR alignment and capsule placement, an incorrect interpupillary distance setting on the AR headset, a co-registration error between the two MRI scans, and distance measurement errors between capsule and original target. Additional refinement of both the hardware and the software is expected to further improve the alignment accuracy. The proposed AR alignment method is a simple, intuitive technique suitable for use in a clinical environment and a critical component of several potential medical applications that benefit from image guidance, such as TMS [5] or skull-base surgery [6].

Acknowledgements

This work was funded through R21 MH116484.

References

[1] E. Watanabe, M. Satoh, T. Konno, M. Hirai, and T. Yamaguchi, “The Trans-Visible Navigator: A See-Through Neuronavigation System Using Augmented Reality,” World Neurosurg., vol. 87, pp. 399–405, 2016.

[2] G. Singh, S. R. Ellis, and J. E. Swan, “The Effect of Focal Distance, Age, and Brightness on Near-Field Augmented Reality Depth Matching,” IEEE Trans. Vis. Comput. Graph., vol. 26, no. 2, pp. 1385–1398, 2018.

[3] C. Leuze, S. Sathyanarayana, B. Daniel, and J. McNab, “Landmark-based mixed-reality perceptual alignment of medical imaging data and accuracy validation in living subjects,” IEEE Int. Symp. Mix. Augment. Real., pp. 1333, 2020.

[4] W. Kabsch, “A solution for the best rotation to relate two sets of vectors,” Acta Crystallogr. Sect. A, 1976.

[5] C. Leuze, G. Yang, B. Hargreaves, B. Daniel, and J. A. McNab, “Mixed-Reality Guidance for Brain Stimulation Treatment of Depression,” Adjun. Proc. - IEEE Int. Symp. Mix. Augment. Real., pp. 377–380, 2018.

[6] C. Leuze, C. A. Neves, A. M. Gomez, N. Navab, N. Blevins, Y. Vaisbuch, and J. A. McNab, “Augmented Reality Guided Skull-Base Surgery,” NASBS 2021 Virtual Symposium, 2021.

Figures