3311

SuperMAP: Superfast MR Mapping with Joint Under-sampling using Deep Combined Network

Hongyu Li1, Mingrui Yang2, Jeehun Kim2, Chaoyi Zhang1, Ruiying Liu1, Peizhou Huang3, Sunil Kumar Gaire1, Dong Liang4, Xiaoliang Zhang3, Xiaojuan Li2, and Leslie Ying1,3

1Electrical Engineering, University at Buffalo, State University of New York, Buffalo, NY, United States, 2Program of Advanced Musculoskeletal Imaging (PAMI), Cleveland Clinic, Cleveland, OH, United States, 3Biomedical Engineering, University at Buffalo, State University of New York, Buffalo, NY, United States, 4Paul C. Lauterbur Research Center for Biomedical Imaging, Medical AI research center, SIAT, CAS, Shenzhen, China

Synopsis

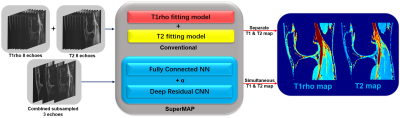

This abstract presents a combined deep learning framework, SuperMAP, that generates MR parameter maps from very few subsampled echo images. The method combines a deep residual convolutional neural network (DRCNN) and a fully connected network (FC) to exploit the nonlinear relationship between and within the combined subsampled T1rho/T2-weighted images and the combined T1rho/T2 maps. Experimental results show that the proposed combined network is superior to a single CNN and can generate accurate T1rho and T2 maps simultaneously from only three subsampled echoes within one scan, with results comparable to the reference obtained from two separate scans of eight fully sampled echoes each.

Introduction

With the conventional model-fitting method, 4-8 echoes are typically necessary for reliable estimation of the parameter maps, which prolongs the acquisition. Accelerating quantitative imaging is therefore of great interest for increasing its clinical use. Deep learning methods have recently been used to accelerate MR acquisition by reconstructing images from subsampled k-space data [1-6]. Although there are many works on deep reconstruction of morphological images, very few have studied tissue parameter mapping [7-9]. In this abstract, we develop a deep learning framework for superfast quantitative MR imaging. Unlike existing works that use deep learning for parameter mapping [8, 10], our network learns information in both the spatial and temporal directions, so that both the k-space measurements and the number of echoes can be reduced. Compared to our previous works [11-12], this framework uses a combined network to learn the complicated relationship and exploits the merits of both networks. For the combined reconstruction of the two networks, the balancing factor $$$α$$$ is learned by the network itself during training, thus avoiding manual tuning. The purpose of this study is to demonstrate the feasibility of SuperMAP for ultrafast T1rho/T2 mapping and the superiority of the combined network.

Theory and Methods
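For context, the conventional model fitting that SuperMAP bypasses can be illustrated with a small sketch. It assumes a mono-exponential decay model $$$S(t)=S_0 e^{-t/T}$$$ (with $$$T$$$ standing for T1rho or T2) and a log-linear least-squares fit; neither the exact model nor the fitting routine is specified in this abstract, and the echo times, signal values, and function name below are hypothetical.

```python
import math

def fit_monoexponential(times_ms, signals):
    """Log-linear least-squares fit of S(t) = S0 * exp(-t / T).

    Assumption: mono-exponential decay with strictly positive signals.
    Returns (S0, T) with T in the same unit as times_ms.
    """
    logs = [math.log(s) for s in signals]
    n = len(times_ms)
    mean_t = sum(times_ms) / n
    mean_y = sum(logs) / n
    sxx = sum((t - mean_t) ** 2 for t in times_ms)
    sxy = sum((t - mean_t) * (y - mean_y) for t, y in zip(times_ms, logs))
    slope = sxy / sxx                      # equals -1 / T
    intercept = mean_y - slope * mean_t    # equals log(S0)
    return math.exp(intercept), -1.0 / slope

# Hypothetical 8-echo acquisition for a single voxel (times in ms).
echo_times_ms = [0, 10, 20, 30, 40, 50, 60, 70]
true_s0, true_t = 1000.0, 45.0
signals = [true_s0 * math.exp(-t / true_t) for t in echo_times_ms]

s0_hat, t_hat = fit_monoexponential(echo_times_ms, signals)
print(f"S0 = {s0_hat:.1f}, T = {t_hat:.1f} ms")
```

A fit of this kind is repeated for every voxel and becomes less reliable as echoes are removed or noise increases, which is the motivation for learning the mapping directly.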

The proposed SuperMAP reconstructs parametric maps directly from subsampled echo images. The goal of the deep learning network is to learn the nonlinear relationship $$$F$$$ between input $$$x$$$ and output $$$y$$$, represented as $$$y=F(x;Θ)$$$, where $$$Θ$$$ denotes the parameters of both networks to be learned during training. In line with our prior studies using deep learning for T1rho/T2 mapping [11-12], the loss term $$L(Θ)=L(FC)+α L(DRCNN) \ \ (1)$$ ensures that the parameter maps reconstructed by the end-to-end network are consistent with the maps from the fully sampled echo images while bypassing the conventional, error-prone model-fitting step. We learn the network parameters $$$Θ$$$ that minimize this loss function, which is the mean squared error (MSE) between the network output and the ground-truth T1rho/T2 maps.

For the FC part, we use five fully connected layers with 300 nodes in the intermediate layers. For the DRCNN part, ten weighted layers are used with four skip connections between intermediate layers. Each layer except the last uses 64 filters with a kernel size of 3. In the testing stage, the three aliased echo images are fed into SuperMAP with the learned $$$Θ$$$ and $$$α$$$ to generate the desired quantitative maps $$$F(x_t;Θ)$$$. SuperMAP uses the two neural networks to exploit both the spatial correlation within the selected echo images and the temporal relationships across the selected echoes. It learns the complicated nonlinear relationship between the subsampled echo images and the joint T1rho/T2 maps while simultaneously suppressing noise and artifacts.
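As a minimal sketch of how Eq. (1) might be evaluated, the snippet below computes the two MSE terms against the reference map values and combines them with a given $$$α$$$. It assumes both terms are plain MSEs against the same reference; how $$$α$$$ is updated during training is not described in this abstract and is not modeled here. The function names and all numeric values are hypothetical.

```python
def mse(pred, target):
    """Mean squared error between two equally sized flat lists."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)

def combined_loss(fc_out, drcnn_out, target, alpha):
    """Eq. (1): L = L(FC) + alpha * L(DRCNN), each term taken as an MSE.

    Assumption: both branches are compared against the same reference maps.
    """
    return mse(fc_out, target) + alpha * mse(drcnn_out, target)

# Hypothetical T2 values (ms) for three voxels.
reference = [40.0, 50.0, 60.0]
fc_out = [42.0, 50.0, 58.0]      # hypothetical output of the FC branch
drcnn_out = [41.0, 49.0, 60.0]   # hypothetical output of the DRCNN branch

loss = combined_loss(fc_out, drcnn_out, reference, alpha=0.5)
print(f"combined loss = {loss:.4f}")
```

In an actual training setup, $$$α$$$ would be a trainable scalar alongside $$$Θ$$$ rather than a fixed argument.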

In vivo data were collected in compliance with the institutional IRB on a 3T MR scanner (Prisma, Siemens Healthineers) with a 1Tx/15Rx knee coil (QED). Ten knees were scanned using the MAPSS quantification pulse sequence with the following parameters: spin-lock frequency 500 Hz, matrix size 160×320×8×24 (PE×FE×Echo×Slice), FOV 14 cm, and slice thickness 4 mm. Eight datasets were used to train and validate the proposed SuperMAP, as shown in Figure 1, and the remaining two were used for testing. For echo subsampling, the first, third, and sixth echoes were selected. Within the selected echoes, Poisson random sampling with a reduction factor (RF) of 2 was used for further acceleration. The combined reduction factor was 10.66 (2 scans × 8 echoes / 3 echoes × RF 2 in k-space). Training took around 10 hours on two NVIDIA Quadro P6000 GPUs. Once trained, the network generates each desired map in only 0.07 seconds, compared with the ~15 min processing time of the conventional exponential decay curve fitting.
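The joint echo and k-space under-sampling described above can be sketched as follows. This is an illustration only: a uniform random selection of phase-encode lines is swapped in for the Poisson random pattern, whose exact construction is not given in this abstract, and the constant and function names are hypothetical. The sketch also reproduces the arithmetic behind the combined reduction factor, which evaluates to 32/3 ≈ 10.67 (reported as 10.66 in this abstract).

```python
import random

N_ECHOES = 8                 # echoes per fully sampled scan
N_PE = 160                   # phase-encode lines (from the matrix size)
SELECTED_ECHOES = [1, 3, 6]  # first, third, and sixth echoes
K_SPACE_RF = 2               # in-plane reduction factor
N_SCANS_REPLACED = 2         # separate T1rho and T2 reference scans

def random_pe_mask(n_lines, reduction, rng):
    """Keep n_lines // reduction phase-encode lines chosen uniformly at random.

    Assumption: a stand-in for the Poisson random sampling in the abstract.
    """
    keep = set(rng.sample(range(n_lines), n_lines // reduction))
    return [i in keep for i in range(n_lines)]

rng = random.Random(0)
masks = {echo: random_pe_mask(N_PE, K_SPACE_RF, rng) for echo in SELECTED_ECHOES}

acquired_lines = sum(sum(mask) for mask in masks.values())
reference_lines = N_SCANS_REPLACED * N_ECHOES * N_PE
combined_rf = reference_lines / acquired_lines
print(f"acquired {acquired_lines} of {reference_lines} lines, RF = {combined_rf:.2f}")
```

Using a different mask for each selected echo, as done here, is one possible design choice; this abstract does not state whether the pattern varies across echoes.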

Results

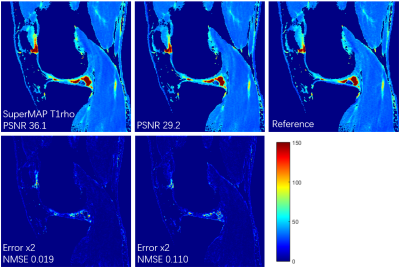

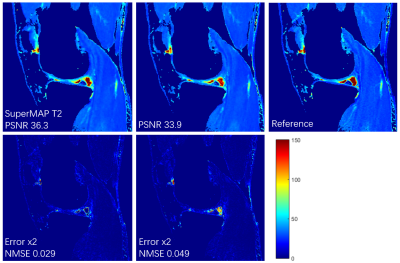
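The figures report PSNR and NMSE against the reference maps. The sketch below shows one common way to define these metrics; the exact definitions used for the figures (for example, the peak value in PSNR or the region over which errors are computed) are not stated in this abstract, so the choices here are assumptions and the map values are hypothetical.

```python
import math

def nmse(estimate, reference):
    """Normalized MSE: squared error energy divided by reference energy."""
    err = sum((e - r) ** 2 for e, r in zip(estimate, reference))
    return err / sum(r ** 2 for r in reference)

def psnr(estimate, reference):
    """PSNR in dB, using the maximum reference value as the peak (assumption)."""
    mse = sum((e - r) ** 2 for e, r in zip(estimate, reference)) / len(reference)
    return 10.0 * math.log10(max(reference) ** 2 / mse)

# Hypothetical map values (ms) for four voxels.
reference_map = [40.0, 50.0, 60.0, 50.0]
estimated_map = [41.0, 49.0, 60.0, 52.0]

print(f"NMSE = {nmse(estimated_map, reference_map):.6f}")
print(f"PSNR = {psnr(estimated_map, reference_map):.2f} dB")
```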

Figures 2 and 3 show the T1rho and T2 maps, respectively, generated using the proposed combined network and a single CNN. Maps obtained from all eight echoes with the conventional fitting model are shown as the reference for comparison. The quantitative maps generated by SuperMAP are very close to the reference and are superior to those of the single deep learning network used in [11-12]. This result is further supported by the peak signal-to-noise ratio (PSNR) and normalized mean squared error (NMSE) shown at the bottom left.

Conclusion

In this abstract, we present SuperMAP, a combined deep learning network for superfast quantitative MR imaging. The network exploits both spatial and temporal information from the training datasets and balances the merits of two types of deep learning networks. Experimental results show that the proposed network is capable of generating accurate T1rho and T2 maps simultaneously from only three subsampled echo images within one scan. With a scan time of only 5 min, a complete set of T1rho and T2 maps can be obtained. Optimal echo selections and network combinations will be studied in the future.

Acknowledgements

This work is supported in part by NIH/NIAMS R01 AR077452.

References

[1] Wang et al., ISBI, 2016.
[2] Wang et al., ISMRM, 2017.
[3] Lee et al., ISBI, 2017.
[4] Wang et al., ISMRM, 2017.
[5] Schlemper et al., arXiv, 2017.
[6] Jin et al., IEEE TIP, 2017.
[7] Lustig et al., MRM, 2007.
[8] Liu et al., ISMRM ML Workshop, 2018.
[9] Li et al., ISMRM ML Workshop, 2018.
[10] Liu et al., MRM, 2019.
[11] Li et al., ISMRM, 2020.
[12] Li et al., ISMRM, 2020.

Figures

FIGURE 1. Schematic comparison of the conventional model fitting and the combined deep learning network SuperMAP with joint spatial-temporal under-sampling.

FIGURE 2. T1rho maps from 3 echoes using the combined network SuperMAP and a single CNN (RF 10.66), and the reference T1rho maps from eight fully sampled echoes.

FIGURE 3. T2 maps from 3 echoes using the combined network SuperMAP and a single CNN (RF 10.66), and the reference T2 maps from eight fully sampled echoes.