3252

Defacing and Refacing Brain MRI Using a Cycle Generative Adversarial Network

Zuojun Wang1, Peng Xia1, Wenming Cao2, Kui Kai Gary Lau1, Henry Ka Fung Mak3, and Peng Cao1

1Diagnostic Radiology, Department of Diagnostic Radiology, HKU, Hong Kong, China, 2Department of Diagnostic Radiology, HKU, Hong Kong, China, 3Department of Diagnostic Radiology, HKU, Hong Kong, China

Synopsis

MRI anonymization, including face removal, is necessary for clinical data archiving and sharing. Segmentation-based methods have been developed for semi-automated face removal on brain MRI. However, these conventional methods are inefficient and unreliable, as the images have to be pre-processed and fed into the software manually. Deep learning-based methods are highly efficient at image-to-image translation on large-scale databases. In this study, we used a cycle generative adversarial network (CycleGAN) to anonymize brain MRI data. The model showed reliable performance when tested on T1-weighted images, and we also extended it to unseen MPRAGE images, targeting different brain MRI contrasts.

Purpose

Clinical data must be de-identified before being shared for research, as enforced by NIH policy and HIPAA requirements 1-2. Besides metadata removal 3, facial information retained in brain MRI should be erased 4-5. Traditional methods, including the BIRN method 6, the John Doe algorithm 7, the template-based method in FreeSurfer 8, and, more recently, the Brain Imaging Center Image Processing Pipelines (BIC) defacing algorithm 9, all achieve face removal. However, these methods are typically time-consuming on large-scale data and depend on parameter settings. Generative adversarial networks (GANs) are readily applicable to medical imaging tasks such as denoising, synthesis, and super-resolution 10-11. In 2019, an unsupervised CycleGAN model was first demonstrated for face removal to anonymize T1-weighted images 12. Here we further developed the CycleGAN approach with two improvements: 1) training the CycleGAN model with a perceptual loss to preserve non-facial tissues; 2) testing the model on unseen MPRAGE images, targeting different brain MRI contrasts.

Methods

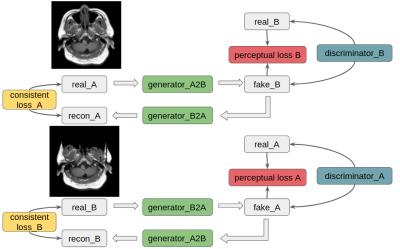

The CycleGAN model 13 is illustrated in Figure 1, with two generators and two discriminators. ResNet backbones in the generators mapped between the two domains, i.e., defaced and refaced MRI. In each layer of the ResNet backbone, the kernel size was set to 3×3, followed by instance normalization and ReLU activation layers. Mean squared error (MSE) was used for both the cycle-consistency losses and the discriminator losses. Two perceptual losses based on a pre-trained VGG16 were added for paired training. The model was trained for 80 epochs with Adam optimizers (β1 = 0.5, β2 = 0.999); all initial learning rates were 2×10⁻⁴ and decreased linearly after epoch 50. All training and testing were performed on a local graphics processing unit (GPU) server with four NVIDIA 2080Ti GPUs and 64 GB RAM. Training the model took around 6 hours on one GPU using the Keras API.
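The training configuration above can be sketched in a few lines of NumPy-based Python. This is only an illustration under stated assumptions, not the authors' Keras code: the abstract does not give the loss weights, so `lambda_cyc` and `lambda_perc` are hypothetical; the learning rate is assumed to reach zero at epoch 80; and the perceptual term is assumed to be an MSE between feature maps, with the VGG16 features passed in as plain arrays.

```python
import numpy as np

INITIAL_LR = 2e-4    # from the text
TOTAL_EPOCHS = 80    # from the text
DECAY_START = 50     # from the text: decay begins after epoch 50


def learning_rate(epoch):
    """Learning rate for a 1-based epoch index.

    Constant up to epoch 50, then linear decay. Reaching exactly zero
    at epoch 80 is an assumption; the abstract only says "linearly".
    """
    decayed = max(0, epoch - DECAY_START) / (TOTAL_EPOCHS - DECAY_START)
    return INITIAL_LR * max(0.0, 1.0 - decayed)


def mse(a, b):
    """Mean squared error between two arrays of the same shape."""
    a = np.asarray(a, dtype=np.float64)
    b = np.asarray(b, dtype=np.float64)
    return float(np.mean((a - b) ** 2))


def generator_objective(d_fake, x, x_cycled, feat_target, feat_generated,
                        lambda_cyc=10.0, lambda_perc=1.0):
    """Combine the three generator loss terms for one translation direction.

    d_fake         : discriminator output on the generated image
    x, x_cycled    : input image and its reconstruction after a full cycle
    feat_*         : feature maps (e.g. from a pre-trained VGG16) of the
                     paired target and the generated image
    lambda_*       : hypothetical weights, not given in the abstract
    """
    adversarial = mse(d_fake, np.ones_like(d_fake))  # MSE against "real" label
    cycle = mse(x, x_cycled)                         # MSE cycle-consistency
    perceptual = mse(feat_target, feat_generated)    # assumed MSE on features
    return adversarial + lambda_cyc * cycle + lambda_perc * perceptual
```

In a Keras setup, `learning_rate` would typically be wired into a per-epoch scheduler callback, and the same objective would be evaluated once for each of the two generators.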

Two datasets were acquired in the study: 50 T1w images from a stroke cohort and 10 MPRAGE images from a Parkinson’s disease cohort. The study was approved by the Institutional Review Board. A Philips Achieva 3.0T MRI scanner with an 8-channel head coil was used for acquisition. The T1w imaging parameters were TE/TR = 20/2000 ms, flip angle = 90°, FOV = 229 × 229 × 130 mm³, nominal resolution = 0.45 × 0.45 × 5 mm³, and matrix size = 512 × 512 × 27. The whole-brain sagittal MPRAGE images were acquired with the following parameters: TE/TR = 3.2/7.0 ms, flip angle = 8°, FOV = 250 × 250 × 155 mm³, matrix size = 256 × 256 × 155, nominal and reconstructed resolution = 0.97 × 0.97 × 1 mm³, and number of excitations (NEX) = 1.

mri_deface in FreeSurfer and fsl_deface in FSL (https://fsl.fmrib.ox.ac.uk/fsl/fslwiki/) were used to remove the face from the T1w images, producing the training labels. 2D slices were extracted from the raw and the defaced T1w images and fed into the network. The remaining T1w images and the MPRAGE images were used for evaluation. Due to memory constraints, all images were resized to 256 × 256 for training and testing.
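The slice extraction and resizing step can be illustrated with a minimal NumPy sketch. Several details here are assumptions rather than the authors' pipeline: slices are taken along the last axis of the volume, nearest-neighbour interpolation stands in for whichever resizing method was actually used, and intensities are scaled to [0, 1] by the raw volume's maximum. The function names are hypothetical.

```python
import numpy as np


def resize_nearest(slice_2d, out_shape=(256, 256)):
    """Nearest-neighbour resize of a 2D array (stand-in interpolation)."""
    rows = (np.arange(out_shape[0]) * slice_2d.shape[0] / out_shape[0]).astype(int)
    cols = (np.arange(out_shape[1]) * slice_2d.shape[1] / out_shape[1]).astype(int)
    return slice_2d[np.ix_(rows, cols)]


def volume_to_pairs(raw_vol, defaced_vol, out_shape=(256, 256)):
    """Return a list of (raw, defaced) 2D slice pairs from two 3D volumes.

    Slices are taken along the last axis (assumed), resized to out_shape,
    and divided by the raw volume's maximum (assumed normalization).
    """
    if raw_vol.shape != defaced_vol.shape:
        raise ValueError("raw and defaced volumes must have the same shape")
    scale = float(raw_vol.max()) or 1.0  # avoid dividing by zero on empty volumes
    pairs = []
    for k in range(raw_vol.shape[-1]):
        raw = resize_nearest(raw_vol[..., k], out_shape) / scale
        defaced = resize_nearest(defaced_vol[..., k], out_shape) / scale
        pairs.append((raw.astype(np.float32), defaced.astype(np.float32)))
    return pairs
```

For a 512 × 512 × 27 T1w volume this yields 27 slice pairs of size 256 × 256, matching the input size stated above.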

Results

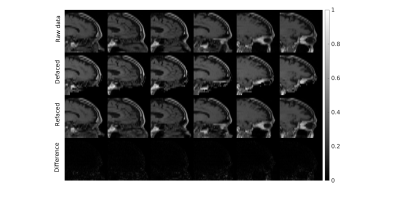

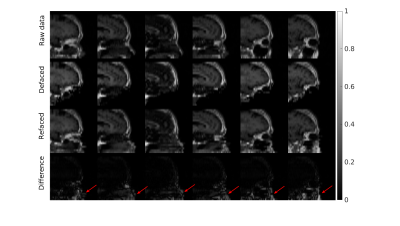

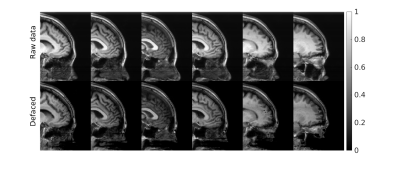

Figure 2 and Figure 3 each present one case, from the training and testing sets respectively. Faces were well removed while other, non-facial tissues were preserved. The faces were not identically reconstructed on the testing set, which is desirable because it indicates that the anonymization is irreversible. Furthermore, the evaluation on unseen MPRAGE images showed similar face removal results (Figure 4), demonstrating model generalizability. Finally, as shown in Figure 5, the proposed perceptual loss provided higher-quality defaced images than conventional GAN loss functions, preserving non-facial tissues such as the skull base and the bottom of the frontal lobe.

Conclusion

A CycleGAN with perceptual loss was used to remove facial information from MRI images. The resulting images were reliably de-identified, and the anonymization performed by our method was irreversible.

Acknowledgements

No acknowledgement found.

References

1. National Institutes of Health. http://grants.nih.gov/grants/policy/data_sharing/. Accessed 28 February 2011.

2. Health and Human Services. http://www.hhs.gov/ocr/privacy/hipaa/understanding/summary/. Accessed 28 February 2011.

3. Freymann, John B, Kirby, Justin S, Perry, John H, Clunie, David A, and Jaffe, C. Carl. Image Data Sharing for Biomedical Research-Meeting HIPAA Requirements for De-identification. Journal of Digital Imaging 25.1 (2011): 14-24.

4. R. Poldrack and K. Gorgolewski. Making big data open: data sharing in neuroimaging. Nature Neuroscience. 2014; 17: 1510–1517.

5. Connie L. Parks and Keith L. Monson. Automated Facial Recognition of Computed Tomography-Derived Facial Images: Patient Privacy Implications. Journal of Digital Imaging. 2017; 30: 204–214. DOI 10.1007/s10278-016-9932-7.

6. Pieper, S., et al. The BIRN De-identification and Upload Pipeline (BIRNDUP), in Morphometry BIRN Technical Report.

7. Shattuck, D., et al. John Doe: Anonymizing MRI data for the protection of research subject confidentiality, in 9th International Conference on Functional Mapping of the Human Brain. 2003: New York, NY.

8. Amanda Bischoff-Grethe, I Burak Ozyurt, Evelina Busa, Brian T Quinn, Christine Fennema-Notestine, Camellia P Clark, Shaunna Morris, Mark W Bondi, Terry L Jernigan, et al. A technique for the de-identification of structural brain MR images. Human Brain Mapping. 2007; 28(9): 892–903.

9. Vladimir S. Fonov and Louis D. Collins. BIC Defacing Algorithm. bioRxiv. 2018.

10. Xin Yi, Ekta Walia, and Paul Babyn. Generative adversarial network in medical imaging: A review. arXiv preprint arXiv:1809.07294, 2018.

11. Salome Kazeminia, Christoph Baur, Arjan Kuijper, Bram van Ginneken, Nassir Navab, Shadi Albarqouni, and Anirban Mukhopadhyay. GANs for medical image analysis. arXiv preprint arXiv:1809.06222, 2018.

12. David Abramian and Anders Eklund. Refacing: reconstructing anonymized facial features using GANs. 2019.

13. Zhu, Jun-Yan, Park, Taesung, Isola, Phillip, and Efros, Alexei A. Unpaired Image-to-Image Translation Using Cycle-Consistent Adversarial Networks. 2017.

Figures

Figure 1: CycleGAN applied to two domains, A (refaced MRI) and B (defaced MRI). In each cycle, two generators and one discriminator were trained simultaneously to map from one domain to the other. Both the cycle-consistency loss and the perceptual loss were used in training.

Figure 2: One case from training on T1w images. The face was removed without destroying non-facial tissues, such as the skull base and the bottom of the frontal lobe. Difference maps were obtained by subtracting the raw and deface-refaced images.

Figure 3: One case from testing on T1w images. The model performed well at face removal without destroying non-facial tissues, such as the skull base and the bottom of the frontal lobe. The raw and deface-refaced images were not identical, showing that the defacing was irreversible. Difference maps were obtained by subtracting the raw and deface-refaced images (red arrows).

Figure 4: Face removal results on unseen MPRAGE images, targeting a different MRI contrast. The face was mostly removed, while other tissues were retained.

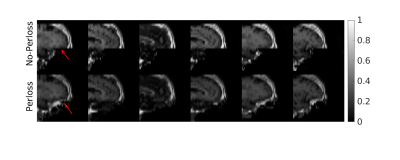

Figure 5: Comparison of defacing results with and without the perceptual loss (denoted as Perloss). The model with perceptual loss preserved most of the skull base.