3225

Deep learning improves retrospective free-breathing 4D-ZTE thoracic imaging: Initial experience

¹Radiology and Nuclear Medicine, Erasmus Medical Center, Rotterdam, Netherlands; ²Erasmus Medical Center, Rotterdam, Netherlands

Synopsis

Although fully convolutional neural networks (FCNNs) have been widely used in MR imaging, they have not yet been applied to improve free-breathing lung imaging. Our aim was to use an FCNN to improve the image quality of a retrospectively respiratory-gated Zero Echo Time (ZTE) MRI sequence acquired in free breathing (4D-ZTE), thereby enabling free-breathing acquisition in patients who cannot perform breath-hold imaging. Our model obtained an MSE of 0.08% on the validation set. When tested on unseen 4D-ZTE data, the predicted images had better visual image quality and fewer artifacts.

Introduction

Despite growing interest in lung MRI, its broader clinical use remains challenging. Several factors limit the image quality of lung MRI, including the extremely short T2 and T2* relaxation times of the lung parenchyma, as well as cardiac and respiratory motion. Zero Echo Time (ZTE) sequences are sensitive to short-T2 and short-T2* species, paving the way for improved “CT-like” MR images [1]. When ZTE is acquired during breath-holds, a few respiratory phases can be imaged with acceptable SNR and largely free of breathing artifacts. Unfortunately, children and subjects with severe lung disease cannot hold their breath long enough (>12 s) [1,2,3]. To overcome this limitation, a retrospectively respiratory-gated version of ZTE (4D-ZTE) was developed that obtains images in 16 different respiratory phases during free breathing [4]. However, initial 4D-ZTE images still showed motion artifacts [4]. Deep learning with fully convolutional neural networks (FCNNs) has been proposed to improve image quality [5]. FCNNs have been widely used in MR imaging, but they have not yet been used to improve free-breathing lung imaging [6]. Our aim was to use an FCNN to improve the image quality of free-breathing 4D-ZTE, thereby enabling free-breathing acquisition in patients who cannot perform breath-hold imaging.

Materials and methods

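In this study, training inputs were created by degrading breath-hold ZTE images with Gaussian noise and with translations and rotations that imitate respiratory motion. The original breath-hold image served as the target. The NumPy code below is a minimal sketch of such a degradation step under stated assumptions and is not the authors' implementation. The following are all hypothetical choices:

- the noise standard deviation, shift range and rotation range;
- the bilinear interpolation;
- the explicit Gaussian blur, used as a stand-in for diaphragm-related blurring because the abstract does not say how blurring was produced;
- the function names `rigid_transform`, `gaussian_blur`, `simulate_free_breathing` and `make_training_pairs`.

```python
import numpy as np


def gaussian_kernel_1d(sigma: float) -> np.ndarray:
    """Normalized 1D Gaussian kernel truncated at 3 sigma."""
    radius = max(1, int(np.ceil(3 * sigma)))
    x = np.arange(-radius, radius + 1, dtype=float)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()


def gaussian_blur(img: np.ndarray, sigma: float) -> np.ndarray:
    """Separable Gaussian blur with edge padding; output has the input's shape."""
    k = gaussian_kernel_1d(sigma)
    r = len(k) // 2
    padded = np.pad(img, r, mode="edge")
    # Convolve each row, then each column; 'valid' mode trims the padding away.
    rows = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, rows)


def rigid_transform(img: np.ndarray, angle_deg: float, shift) -> np.ndarray:
    """Rotate about the image centre, then translate, using bilinear
    interpolation and zero fill outside the field of view."""
    h, w = img.shape
    theta = np.deg2rad(angle_deg)
    c, s = np.cos(theta), np.sin(theta)
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    yy, xx = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    # Inverse mapping: undo the translation, then the rotation, to find
    # where each output pixel samples the input image.
    y0 = yy - cy - shift[0]
    x0 = xx - cx - shift[1]
    src_y = c * y0 + s * x0 + cy
    src_x = -s * y0 + c * x0 + cx
    y_lo = np.floor(src_y).astype(int)
    x_lo = np.floor(src_x).astype(int)
    fy, fx = src_y - y_lo, src_x - x_lo
    out = np.zeros((h, w), dtype=float)
    for dy, wy in ((0, 1.0 - fy), (1, fy)):
        for dx, wx in ((0, 1.0 - fx), (1, fx)):
            yi, xi = y_lo + dy, x_lo + dx
            inside = (yi >= 0) & (yi < h) & (xi >= 0) & (xi < w)
            vals = np.zeros((h, w), dtype=float)
            vals[inside] = img[yi[inside], xi[inside]]
            out += wy * wx * vals
    return out


def simulate_free_breathing(img, rng, noise_sd=0.02, max_shift=3.0,
                            max_angle=2.0, blur_sigma=1.0):
    """Hypothetical degradation: random rigid motion, blur, additive Gaussian noise.
    All default parameter values are illustrative assumptions."""
    angle = rng.uniform(-max_angle, max_angle)
    shift = rng.uniform(-max_shift, max_shift, size=2)
    moved = rigid_transform(img, angle, shift)
    blurred = gaussian_blur(moved, blur_sigma)  # assumed stand-in for diaphragm blurring
    return blurred + rng.normal(0.0, noise_sd, size=img.shape)


def make_training_pairs(breath_hold_images, n_pairs: int, seed: int = 0):
    """Return (degraded inputs, clean targets) by resampling breath-hold images."""
    rng = np.random.default_rng(seed)
    idx = rng.integers(0, len(breath_hold_images), size=n_pairs)
    inputs = np.stack([simulate_free_breathing(breath_hold_images[i], rng) for i in idx])
    targets = np.stack([breath_hold_images[i] for i in idx])
    return inputs, targets


if __name__ == "__main__":
    # Stand-in data: 5 random 32x32 "slices", not real MR images.
    slices = np.random.default_rng(0).random((5, 32, 32))
    inputs, targets = make_training_pairs(slices, n_pairs=800)
    print(inputs.shape, targets.shape)  # (800, 32, 32) (800, 32, 32)
```

Each pair couples a degraded input with its clean breath-hold target, which is the mapping an FCNN trained with an MSE loss would learn. Applying the blur after the rigid motion is only one possible ordering; the abstract does not specify one.
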
After IRB approval and written informed consent, free-breathing 4D-ZTE and breath-hold ZTE images were obtained from 10 healthy volunteers on a 1.5 T MRI scanner (GE Healthcare Signa Artist, Waukesha, WI). The 4D-ZTE acquisition captured all 16 phases of the respiratory cycle. For breath-hold ZTE, subjects were instructed to hold their breath at 5 different inflation levels, ranging from full expiration to full inspiration. The training dataset consisted of images from 8 volunteers and was divided into a training set (80%) and a validation set (20%). In total, 800 images were generated from the breath-hold ZTE images by adding Gaussian noise and applying image transformations (translations, rotations). These transformations imitated the effects of respiratory motion and the blurring caused by varying diaphragm positions, as they appear in 4D-ZTE. The training set was used to train an FCNN (Figure 1) to remove the artificially added noise and transformations from the breath-hold ZTE images and reproduce their original quality. Mean squared error (MSE) was used as the loss function. The free-breathing 4D-ZTE images of the remaining 2 healthy volunteers were used to test the model and to assess the predicted images qualitatively.

Results

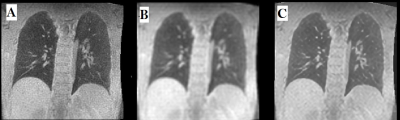

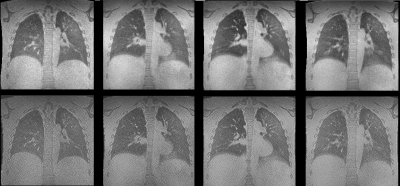

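The validation score in this section is a mean squared error between the network output and the original breath-hold image. This is the same quantity used as the training loss. The minimal sketch below shows an 80/20 split of the generated images and the MSE computation.

It rests on two assumptions:

- The abstract does not say whether the split was made per image or per volunteer. The sketch splits per image.
- The abstract does not say how MSE is expressed as a percentage. The hypothetical `mse_percent` assumes intensities normalized to [0, 1] and scales by 100.

```python
import numpy as np


def split_train_val(n_items: int, val_fraction: float = 0.2, seed: int = 0):
    """Randomly split item indices into training and validation subsets."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_items)
    n_val = int(round(val_fraction * n_items))
    return order[n_val:], order[:n_val]  # (train_idx, val_idx)


def mse(prediction, target) -> float:
    """Mean squared error averaged over every pixel."""
    diff = np.asarray(prediction, dtype=float) - np.asarray(target, dtype=float)
    return float(np.mean(diff ** 2))


def mse_percent(prediction, target) -> float:
    """Hypothetical percentage form: MSE x 100, meaningful only for images in [0, 1]."""
    return 100.0 * mse(prediction, target)


if __name__ == "__main__":
    train_idx, val_idx = split_train_val(800)
    print(len(train_idx), len(val_idx))  # 640 160
```

Splitting per volunteer instead of per image would keep all images from one subject on the same side of the split. The abstract does not say which approach was used.
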
Our model obtained an MSE of 0.08% on the validation set. When tested on unseen 4D-ZTE data, the predicted images showed improved contrast between the pulmonary parenchyma and air-filled regions (airways or air trapping). On visual inspection, artifacts (Gibbs ringing, visible parenchymal motion) were reduced without loss of parenchymal detail. Figure 2 shows the results on the validation set; the test data and the corresponding predictions are shown in Figure 3.

Discussion

Free-breathing 3D and 4D lung MRI is feasible, but its image quality is not yet acceptable for clinical use. Our results suggest that deep learning can narrow this gap: our FCNN improved the visual image quality and reduced artifacts in free-breathing 4D-ZTE. A limitation of our method is that, compared with breath-hold imaging, the network still enhances some spatial frequencies in 4D-ZTE images (Figure 3). We will explore improving the network by enlarging the training dataset and tuning the FCNN.

Conclusions

With FCNNs, the image quality of free-breathing 4D-ZTE lung MRI can be improved, enabling better visualization of the lung parenchyma across respiratory phases.

References

[1] Gibiino F, Sacolick L, Menini A, et al. Free-breathing, zero-TE MR lung imaging. Magn Reson Mater Phy. 2015;28:207–215. https://doi.org/10.1007/s10334-014-0459-y

[2] Tiddens HAWM, Kuo W, van Straten M, Ciet P. Paediatric lung imaging: the times they are a-changin'. Eur Respir Rev. 2018 Mar;27(147):170097. doi: 10.1183/16000617.0097-2017.

[3] Ciet P, Tiddens HA, Wielopolski PA, Wild JM, Lee EY, Morana G, Lequin MH. Magnetic resonance imaging in children: common problems and possible solutions for lung and airways imaging. Pediatr Radiol. 2015 Dec;45(13):1901-15.

[4] Bae K, Jeon KN, Hwang MJ, Lee JS, Park SE, Kim HC, Menini A. Respiratory motion-resolved four-dimensional zero echo time (4D ZTE) lung MRI using retrospective soft gating: feasibility and image quality compared with 3D ZTE. Eur Radiol. 2020 Sep;30(9):5130-5138. doi: 10.1007/s00330-020-06890-x. Epub 2020 Apr 24. PMID: 32333146.

[5] Yasaka K, Abe O. Deep learning and artificial intelligence in radiology: Current applications and future directions. PLoS Med. 2018;15(11):e1002707. https://doi.org/10.1371/journal.pmed.1002707

[6] Tustison NJ, Avants BB, Lin Z, Feng X, Cullen N, Mata JF, Flors L, Gee JC, Altes TA, Mugler Iii JP, Qing K. Convolutional Neural Networks with Template-Based Data Augmentation for Functional Lung Image Quantification. Acad Radiol. 2019 Mar;26(3):412-423. doi: 10.1016/j.acra.2018.08.003. Epub 2018 Sep 6. PMID: 30195415; PMCID: PMC6397788.

[7] Souza R, Frayne R. A Hybrid Frequency-Domain/Image-Domain Deep Network for Magnetic Resonance Image Reconstruction. In: 2019 32nd SIBGRAPI Conference on Graphics, Patterns and Images (SIBGRAPI); 2019; Rio de Janeiro, Brazil. p. 257-264. doi: 10.1109/SIBGRAPI.2019.00042.
