2811

Deep Multiple Sclerosis Lesion Segmentation with Anatomical Convolution and Lesion-wise Loss

Cornell University, New York, NY, United States

Synopsis

We propose an anatomical convolutional module that couples anatomical information into a deep neural network. We further develop a loss function based on the mass center of individual lesions, called lesion-wise loss, which regularizes network training and thereby improves lesion localization and segmentation. We validate our methods on a public dataset, the ISBI-15 Multiple Sclerosis Lesion Segmentation Challenge [1], where our approach achieved the best performance among all published methods.

Introduction

Multiple sclerosis (MS) is an inflammatory demyelinating disease that affects the central nervous system. Precise segmentation of MS lesions is an essential step for clinical analysis. Conventionally, lesions are segmented by trained experts, a process that is tedious and time-consuming. Multiple automated methods have been proposed to ease the burden, but a clinically reliable one is not yet available.

Recent deep convolutional neural networks (CNNs) have demonstrated promising performance in MS lesion segmentation. Various methods, including attention-based [2] and 2D-stacked [3] approaches, have been proposed to address the problem. However, these methods still have two major drawbacks: 1) none of the existing CNN models exploit anatomical structure information, which is important for lesion identification; 2) all of the existing methods train CNN models with a voxel-wise loss function and lack a lesion-wise loss function, which can help address the data imbalance issue (less than 0.3% of voxels belong to foreground lesions). Since lesions vary greatly in location and size and may share similar intensity values with other tissues, we propose an anatomical convolutional module with a lesion-wise sphere loss to address the issues mentioned above.

Methods

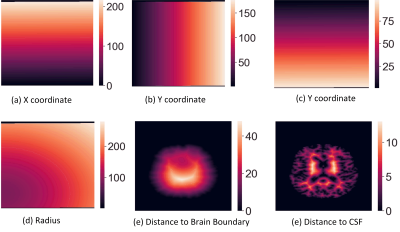

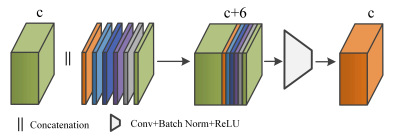

Anatomical Convolution (AC): Multi-sequence imaging can help reduce the confusion caused by concurrent hyperintensities or hypointensities shared by MS lesions and other tissues, but anatomical structure information is still required to distinguish MS lesions from other tissues with similar intensity values. We propose an anatomical convolution to capture this information (the module is shown in Figure 2). Standard coordinates are obtained from the 1 mm isotropic T1-w MNI image, which is rigidly registered to the T1-w image of the patient. Once the co-registration is done, we apply the affine transformation matrix to the standard coordinates, mapping them from MNI space to patient space. From the mapped coordinates, we further compute the radius as an additional coordinate dimension.
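The coordinate mapping described above can be sketched in NumPy as follows. This is only an illustrative sketch under several assumptions that are not stated in the abstract: the function name `mapped_coordinate_channels` is hypothetical, the registration result is assumed to be available as a 4x4 homogeneous matrix, a plain voxel-index grid stands in for the standard coordinates, and the radius is taken to be the Euclidean distance of each mapped coordinate from the origin.

```python
import numpy as np

def mapped_coordinate_channels(shape, affine):
    """Return a (4, H, W, D) array: mapped x, y, z coordinates plus a radius channel.

    `shape` is the (H, W, D) size of the grid and `affine` is a 4x4 homogeneous
    matrix (assumed to come from the registration step).
    """
    shape = tuple(shape)
    # Voxel-index grid standing in for the standard coordinates, shape (3, H, W, D).
    axes = [np.arange(n, dtype=np.float64) for n in shape]
    grid = np.stack(np.meshgrid(*axes, indexing="ij"))
    # Append a row of ones to form homogeneous coordinates, shape (4, H*W*D).
    homogeneous = np.concatenate([grid, np.ones((1,) + shape)]).reshape(4, -1)
    # Apply the transformation and drop the homogeneous row.
    mapped = (affine @ homogeneous)[:3].reshape((3,) + shape)
    # Radius channel (assumption: distance from the origin of the mapped space).
    radius = np.sqrt((mapped ** 2).sum(axis=0, keepdims=True))
    return np.concatenate([mapped, radius])
```

With an identity matrix, the first three channels reproduce the voxel indices and the fourth channel holds their Euclidean norm.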

Besides the co-registered coordinates from the MNI space, we also compute the distance from each voxel to areas of interest such as the brain boundary and cerebrospinal fluid (CSF), both of which are computed with the FreeSurfer tool [5]. These distances are used as additional coordinate dimensions. A visual example of the six coordinates we use can be found in Figure 1. We then stack all the coordinates into a feature tensor of size (6,H,W,D), where H, W, and D are the height, width, and depth of the 3D image. The coordinate tensor is concatenated with the previous feature tensor, and a standard convolution layer with batch normalization and ReLU activation follows to fuse the concatenated tensor (see Figure 2).
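The concatenate-then-fuse step can be illustrated with a small NumPy sketch. It is not the authors' implementation: the function name `anatomical_fuse` is hypothetical, a 1x1x1 (pointwise) convolution is assumed because the abstract does not give the kernel size, and batch normalization is approximated by standardizing each output channel over a single volume without learned scale or shift.

```python
import numpy as np

def anatomical_fuse(features, coords, weight, eps=1e-5):
    """Fuse a (C, H, W, D) feature tensor with a (6, H, W, D) coordinate tensor.

    `weight` has shape (C_out, C + 6) and plays the role of a pointwise
    convolution kernel (an assumption made for brevity).
    """
    if features.shape[1:] != coords.shape[1:]:
        raise ValueError("features and coords must share the same (H, W, D)")
    # Concatenate along the channel axis: (C + 6, H, W, D).
    stacked = np.concatenate([features, coords], axis=0)
    # Pointwise convolution: mix channels independently at every voxel.
    mixed = np.einsum("oc,chwd->ohwd", weight, stacked)
    # Simplified batch normalization: standardize each output channel.
    mean = mixed.mean(axis=(1, 2, 3), keepdims=True)
    var = mixed.var(axis=(1, 2, 3), keepdims=True)
    normed = (mixed - mean) / np.sqrt(var + eps)
    # ReLU activation.
    return np.maximum(normed, 0.0)
```

In a deep learning framework the same step would typically be a channel-wise concatenation followed by a 3D convolution, a batch normalization layer, and a ReLU.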

Lesion-wise Loss Function (Sphere Loss, SL):

Dice and cross-entropy are commonly used loss functions for optimizing the network. However, these region-based loss functions train the network on voxel-wise error, which can cause small lesions to be missed. According to the McDonald criteria [7], any lesion larger than 15 mm³ matters for MS diagnosis and treatment, but to the best of our knowledge, no prior work has investigated a loss function based on individual lesions. Thus, we propose a lesion-wise loss function called Sphere Loss (SL) to address this issue.

In sphere loss, we reduce each lesion to a fixed-size sphere regardless of its original size and shape. We first compute the mass center of a lesion and then draw a sphere around that center, using the same radius for every lesion. An example can be found in Figure 3. To train the network with sphere loss, we compute spheres for every training sample from the ground-truth mask. Thus, in addition to the original output probability map for binary lesion masks, our network also outputs a probability map of spheres. Let the output sphere probability map be $$$S$$$ and the ground-truth sphere mask be $$$G$$$; the sphere loss can then be summarized as follows:
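Generating the ground-truth sphere mask could look roughly like the sketch below. The function name `sphere_mask` and the `radius` parameter are hypothetical (the abstract does not give the radius value), and the input is assumed to be an integer label volume in which each lesion already carries its own id, for example from a connected-component analysis of the binary ground-truth mask.

```python
import numpy as np

def sphere_mask(lesion_labels, radius):
    """Replace every labeled lesion with a fixed-radius sphere at its mass center.

    `lesion_labels` is an integer (H, W, D) array: 0 is background and each
    positive value identifies one lesion. `radius` is given in voxels.
    """
    grid = np.indices(lesion_labels.shape)  # voxel coordinates, shape (3, H, W, D)
    mask = np.zeros(lesion_labels.shape, dtype=bool)
    for lesion_id in np.unique(lesion_labels):
        if lesion_id == 0:
            continue  # skip background
        # Mass center of a binary lesion is the mean of its voxel coordinates.
        center = np.argwhere(lesion_labels == lesion_id).mean(axis=0)
        # Squared distance of every voxel to the mass center.
        dist2 = sum((grid[axis] - center[axis]) ** 2 for axis in range(3))
        mask |= dist2 <= radius ** 2
    return mask.astype(np.uint8)
```

Because the radius is shared, a large lesion and a single-voxel lesion yield spheres of identical size, which is the property that lets every lesion contribute equally to the loss.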

$$L_{sl}=-\frac{1}{N}\sum_{v\in \Omega}\begin{cases}\alpha(1-S_v)^{\gamma}\log(S_v), & \text{if} ~ G_v=1\\ (1-\alpha)S_v^{\gamma}\log(1-S_v), & \text{if} ~ G_v=0 \end{cases}$$

where $$$v\in \Omega$$$ is an index vector giving the position of a voxel in the image, and $$$\alpha$$$ and $$$\gamma$$$ are scalar parameters that control the loss weight.
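A direct NumPy transcription of the formula may help make the two cases concrete. This is a sketch under assumptions: $$$N$$$ is taken to be the total number of voxels (the abstract does not define it), the default values `alpha=0.25` and `gamma=2.0` are placeholders rather than the authors' settings, and `eps` is a clipping constant added here only to avoid evaluating the logarithm at zero.

```python
import numpy as np

def sphere_loss(S, G, alpha=0.25, gamma=2.0, eps=1e-7):
    """Evaluate the sphere loss for a probability map S and a binary sphere mask G.

    S and G must have the same shape. N is assumed to be the number of voxels.
    """
    S = np.clip(np.asarray(S, dtype=np.float64), eps, 1.0 - eps)
    G = np.asarray(G)
    # Term used where the ground truth is a sphere voxel (G_v = 1).
    positive = alpha * (1.0 - S) ** gamma * np.log(S)
    # Term used where the ground truth is background (G_v = 0).
    negative = (1.0 - alpha) * S ** gamma * np.log(1.0 - S)
    per_voxel = np.where(G == 1, positive, negative)
    return -per_voxel.sum() / S.size
```

The factors $$$(1-S_v)^{\gamma}$$$ and $$$S_v^{\gamma}$$$ shrink the contribution of voxels that are already predicted confidently and correctly, so the loss concentrates on hard voxels, while $$$\alpha$$$ balances sphere voxels against background voxels.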

Results

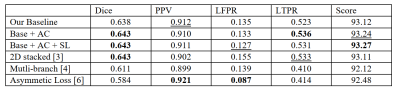

We first reproduced a state-of-the-art MS lesion segmentation algorithm based on the 2D stacked method and then integrated our proposed modules into that framework. We compared our method with other state-of-the-art methods on the ISBI challenge dataset by submitting our results to the challenge's online website. The results are summarized in Figure 4.

Discussion and Conclusion

In this abstract, we proposed an anatomical convolutional module to integrate anatomical structural information, and we further developed a lesion-wise sphere loss function to compensate for the drawback of traditional region-based loss functions in segmenting small lesions. The results showed that our method outperforms state-of-the-art methods. The AC module provides additional anatomical guidance for deep neural networks, and the SL module regularizes network training and in turn benefits the segmentation of small lesions.

Acknowledgements

No conflicts of interest.

References

1. Carass, Aaron, Snehashis Roy, Amod Jog, Jennifer L. Cuzzocreo, Elizabeth Magrath, Adrian Gherman, Julia Button et al. "Longitudinal multiple sclerosis lesion segmentation: resource and challenge." NeuroImage 148 (2017): 77-102.

2. Zhang, Hang, Jinwei Zhang, Qihao Zhang, Jeremy Kim, Shun Zhang, Susan A. Gauthier, Pascal Spincemaille, Thanh D. Nguyen, Mert Sabuncu, and Yi Wang. "RSANet: Recurrent Slice-wise Attention Network for Multiple Sclerosis Lesion Segmentation." In International Conference on Medical Image Computing and Computer-Assisted Intervention, pp. 411-419. Springer, Cham, 2019.

3. Zhang, Huahong, Alessandra M. Valcarcel, Rohit Bakshi, Renxin Chu, Francesca Bagnato, Russell T. Shinohara, Kilian Hett, and Ipek Oguz. "Multiple Sclerosis Lesion Segmentation with Tiramisu and 2.5 D Stacked Slices." In International Conference on Medical Image Computing and Computer-Assisted Intervention, pp. 338-346. Springer, Cham, 2019.

4. Aslani, Shahab, Michael Dayan, Loredana Storelli, Massimo Filippi, Vittorio Murino, Maria A. Rocca, and Diego Sona. "Multi-branch convolutional neural network for multiple sclerosis lesion segmentation." NeuroImage 196 (2019): 1-15.

5. Fischl, Bruce. "FreeSurfer." Neuroimage 62, no. 2 (2012): 774-781.

6. Hashemi, Seyed Raein, Seyed Sadegh Mohseni Salehi, Deniz Erdogmus, Sanjay P. Prabhu, Simon K. Warfield, and Ali Gholipour. "Asymmetric loss functions and deep densely-connected networks for highly-imbalanced medical image segmentation: Application to multiple sclerosis lesion detection." IEEE Access 7 (2018): 1721-1735.

7. Thompson, Alan J., Brenda L. Banwell, Frederik Barkhof, William M. Carroll, Timothy Coetzee, Giancarlo Comi, Jorge Correale et al. "Diagnosis of multiple sclerosis: 2017 revisions of the McDonald criteria." The Lancet Neurology 17, no. 2 (2018): 162-173.

Figures