2710

A progressive calibration gaze interaction interface to enable naturalistic fMRI experiments

¹King's College London, London, United Kingdom

Synopsis

fMRI studies of higher-level cognitive processing stretch current stimulus presentation paradigms. Giving subjects the ability to interact intuitively with a virtual world has great potential for advancing such studies. Gaze tracking can provide rich information about attention, but accurate gaze estimation is hard to maintain during head movement, and most systems do not allow visual interaction. We describe a gaze interaction interface that exploits each new fixation to achieve robust and accurate performance even during head movement. This opens opportunities for naturalistic, flexible and complex fMRI experiments, particularly in challenging populations such as children and people with cognitive difficulties.

Introduction

Most fMRI studies of human cognition deploy highly controlled paradigms consisting of artificial or static visual stimuli. Importantly, these constrained stimuli do not reflect natural visual experience, in particular those aspects related to social communication and behavior, where gaze is a key mechanism for interacting with other people and the environment. Effective fMRI study of this behavior therefore requires more complex, sustained, dynamic, and naturalistic visual environments. Although current MR-compatible eye tracking systems can effectively monitor participant engagement and/or attention, their gaze estimation algorithms often struggle with changes in head position and do not support robust gaze interaction. We describe a novel gaze interaction interface that addresses these challenges and provides a new capability for advanced fMRI studies of cognitive function.

Methods

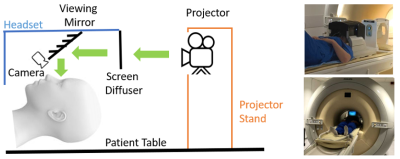

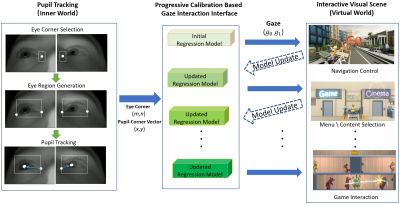

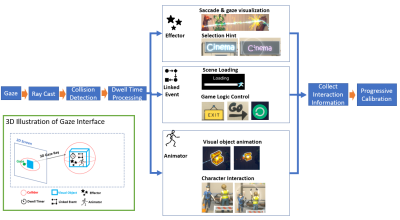

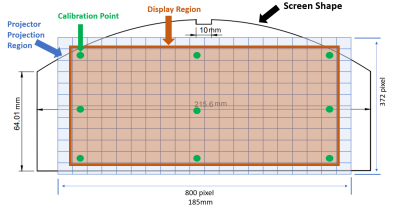

To present visual scenes and monitor subject eye and head position during MRI examinations, we developed a non-invasive, coil-mounted, MR-compatible headset that includes two MR-compatible cameras for tracking eye position (figure 1) [1]. We treat gaze estimation as a regression problem: predicting where a person is looking (a 2D screen coordinate) from detected eye features. The method has three steps (figure 2): 1. extraction of eye features; 2. building an initial gaze prediction model; 3. progressive updating of the regression model.

We first extract the pupil (px, py) and inner eye corner (m, n) coordinates for each eye from the live video stream (figure 2). The eye corner was chosen because it is the most rigid feature point in our input image and so can provide evidence of head motion. Defining x = px - m and y = py - n, we build a quadratic regression model for each eye, g = Q(x, y) + a*m + b*n, relating the 2D gaze coordinate on the display screen, g, to the eye features (x, y, m, n) (figure 3) [2]. Higher-order models in both (x, y) and (m, n) were tested but found to be less stable.

The classical approach is to calibrate a static model for each subject using an initial sequence of gaze fixation points dispersed around the screen. However, head motion can invalidate static models, because the initial calibration measurements do not provide data that adequately capture the effects of head pose changes. To solve this problem, we use interaction with the displayed virtual environment to progressively update the regression model: content built into the visual scene generates new fixation events with known g and corresponding measured (x, y, m, n). This is supported by a gaze interaction interface that maps 2D gaze coordinates into a rich 3D virtual world. To initialize the gaze estimation model, the subject is first asked to look at a sequence of 9 target locations distributed across the full display screen, as in conventional gaze model calibration.
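The per-eye model and its progressive update can be sketched as follows. This is a minimal Python/NumPy illustration under stated assumptions, not the authors' implementation: Q(x, y) is assumed to be a full second-order polynomial (terms 1, x, y, xy, x², y²), coefficients are fitted by ordinary least squares, and "progressive updating" is modelled as a refit over all accumulated fixations, optionally limited to a hypothetical sliding `window` of recent events. The names `eye_features`, `design_row` and `ProgressiveGazeModel` are illustrative, and the demo uses synthetic data only.

```python
import numpy as np

def eye_features(px, py, m, n):
    """Return (x, y, m, n): pupil position relative to the inner eye corner, plus the corner."""
    return px - m, py - n, m, n

def design_row(x, y, m, n):
    """Regressors for g = Q(x, y) + a*m + b*n.

    Assumption: Q is a full second-order polynomial in (x, y).
    """
    return np.array([1.0, x, y, x * y, x * x, y * y, m, n], dtype=float)

class ProgressiveGazeModel:
    """Per-eye gaze regression, refitted by least squares whenever a fixation with known g arrives.

    Hypothetical sketch: the abstract does not specify the update rule.
    """

    def __init__(self, window=None):
        self.window = window      # None = keep every fixation; int = keep only the most recent ones
        self.rows, self.targets = [], []
        self.coef = None          # shape (8, 2): one column per screen axis

    def add_fixation(self, features, g):
        """Add one calibration or interaction event (features, known screen position g) and refit."""
        self.rows.append(design_row(*features))
        self.targets.append(np.asarray(g, dtype=float))
        if self.window is not None:
            self.rows = self.rows[-self.window:]
            self.targets = self.targets[-self.window:]
        A = np.vstack(self.rows)
        G = np.vstack(self.targets)
        # lstsq returns the minimum-norm solution while fewer than 8 events are available.
        self.coef, *_ = np.linalg.lstsq(A, G, rcond=None)

    def predict(self, features):
        """Predicted 2D screen coordinate for one frame's eye features."""
        return design_row(*features) @ self.coef

# Synthetic demo: 9 calibration targets on a 3x3 grid with small random eye-corner offsets.
rng = np.random.default_rng(0)
true_coef = rng.normal(size=(8, 2))   # made-up "ground truth" mapping, for illustration only
model = ProgressiveGazeModel()
for x in (-1.0, 0.0, 1.0):
    for y in (-1.0, 0.0, 1.0):
        f = (x, y, *rng.normal(scale=0.1, size=2))
        model.add_fixation(f, design_row(*f) @ true_coef)
f_new = eye_features(0.55, -0.32, 0.05, -0.02)   # pupil and corner in hypothetical camera units
print(model.predict(f_new), design_row(*f_new) @ true_coef)
```

In this reading, one model would be kept per eye: the nine calibration targets are added first, and each later interaction event with a known target position is added the same way, so the corner coefficients a and b can follow head pose. A finite window would let the model discard fixations recorded before a large pose change, at the cost of a less constrained fit.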

Performance of fixed and progressive gaze models was compared using a simple sequential pattern of 180 targets, with 1 second between targets (figure 4). In a provocation test, a subject deliberately moved their head as much as they could during a prolonged examination period (19 minutes, during which 379 targets were triggered).

Results

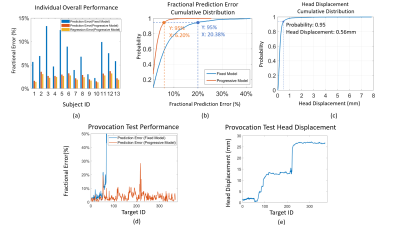

Test results for 13 subjects are shown in figure 5. Figure 5 (a) compares the error with and without progressive calibration, showing substantially improved prediction accuracy with progressive calibration. A cumulative histogram of gaze prediction errors for all subjects (figure 5 (b)) shows that with progressive regression modelling, 95% of gaze prediction errors are smaller than 6.20% of screen width, considerably better than the fixed model (20.38%). Figure 5 (c) shows a cumulative histogram of measured eye corner displacements between detected gaze events. Although 95% of these movements were < 0.6 mm, head movement still had a major impact on gaze estimation accuracy for the fixed model.

Figure 5 (d) and (e) show results of the provocation test. The eye corner positions moved by 25 mm, with several large changes in pose. This caused complete failure of the model based on pre-calibration alone (Prediction MAE = 434.18%), whereas the progressive regression model remained accurate (Prediction MAE = 3.74%, Regression MAE = 3.31%), with only brief disruptions immediately after each large pose change.
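The error statistics quoted above can be computed as in the sketch below. Because the abstract does not define the metric, it assumes that the error for each gaze event is the Euclidean distance between predicted and true target positions in screen pixels, expressed as a percentage of screen width; the function names and the toy numbers are hypothetical, not study data. The "95% of errors smaller than" figures correspond to the 95th percentile of the per-event errors, i.e. where the cumulative histogram reaches 0.95.

```python
import numpy as np

def error_percent_of_width(pred, truth, screen_width):
    """Per-event Euclidean gaze error as a percentage of screen width (assumed metric)."""
    pred = np.asarray(pred, dtype=float)
    truth = np.asarray(truth, dtype=float)
    return 100.0 * np.linalg.norm(pred - truth, axis=1) / screen_width

def summarize(errors_pct, q=95):
    """Mean absolute error and the q-th percentile of the per-event errors."""
    errors_pct = np.asarray(errors_pct, dtype=float)
    return {"MAE": float(errors_pct.mean()), f"P{q}": float(np.percentile(errors_pct, q))}

# Toy example with made-up numbers on a hypothetical 1920-pixel-wide screen.
pred = [[0, 0], [30, 40], [192, 0]]
truth = [[0, 0], [0, 0], [0, 0]]
errs = error_percent_of_width(pred, truth, screen_width=1920)
print(errs)
print(summarize(errs))
```

`np.percentile` uses linear interpolation by default; with only a handful of events the percentile therefore falls between observed errors rather than on one of them.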

Discussion and Conclusion

We describe a progressive calibration gaze interaction interface that provides accurate and robust gaze estimation despite head movement. The approach relies on embedding interaction events into the visual scene presented to the subject to provide updated calibration data. This introduces some constraints, but also creates an interactive engagement paradigm with great potential for novel neuroscience studies. Testing showed that subjects did not find this interaction intrusive or stressful (as assessed by qualitative feedback), and many remarked that the effect was engaging. Combining robust gaze tracking with a VR interface creates a platform for the next generation of "natural" fMRI studies of complex human processes, including social interaction, communication, and behavior. The robustness of the system to changes in head pose also makes it suitable for under-studied populations, such as children and people with cognitive disabilities, who often cannot comply with the requirements of conventional fMRI experiments. In addition, the immersive nature of the system may help these subjects remain comfortable in the scanner, facilitating longer testing sessions.

Acknowledgements

This work was supported by ERC grant agreement no. 319456 (dHCP project), by core funding from the Wellcome/EPSRC Centre for Medical Engineering [WT203148/Z/16/Z], and by the National Institute for Health Research (NIHR) Biomedical Research Centre based at Guy's and St Thomas' NHS Foundation Trust and King's College London and/or the NIHR Clinical Research Facility. The views expressed are those of the author(s) and not necessarily those of the NHS, the NIHR or the Department of Health and Social Care.

References

[1] Qian, Kun, Tomoki Arichi, Jonathan Eden, Sofia Dall'Orso, Rui Pedro A. G. Teixeira, Kawal Rhode, Mark Neil, Etienne Burdet, A. David Edwards, and Jo V. Hajnal. "Transforming the Experience of Having MRI Using Virtual Reality." Proceedings of the 28th ISMRM (Abstracts), 2020.

[2] Kar, Anuradha, and Peter Corcoran. "A review and analysis of eye-gaze estimation systems, algorithms and performance evaluation methods in consumer platforms." IEEE Access 5 (2017): 16495-16519.

[3] Lukezic, Alan, Tomas Vojir, Luka Čehovin Zajc, Jiri Matas, and Matej Kristan. "Discriminative correlation filter with channel and spatial reliability." In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 6309-6318. 2017.

[4] Santini, Thiago, Wolfgang Fuhl, and Enkelejda Kasneci. "PuRe: Robust pupil detection for real-time pervasive eye tracking." Computer Vision and Image Understanding 170 (2018): 40-50.

Figures