2700

Multi-task deep neural network reveals distinct and hierarchical pathways for face perception in visual cortex

1Beijing Advanced Innovation Center for Big Data-Based Precision Medicine (BDBPM), Beihang University, Beijing 100083, China, 2Key Laboratory of Molecular Imaging, Institute of Automation, Chinese Academy of Sciences, Beijing 100190, China, 3Department of Computer Science, Beihang University, Beijing, China

Synopsis

We developed a multi-task deep neural network (DNN) model that can simultaneously classify facial expressions and identities. The model's architecture and weights were optimized, and the model was then used as an efficient tool to investigate the neural responses to facial expression and identity perception in an fMRI experiment. Our results revealed that facial expression and identity are processed in distinct dorsal and ventral visual pathways in IT cortex, respectively. We also found hierarchical processing for facial expression and identity within these pathways.

INTRODUCTION

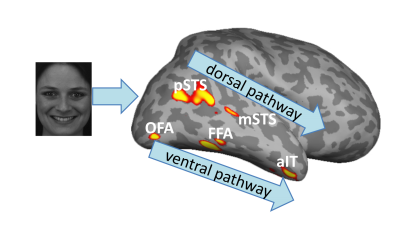

The human visual system has an extraordinary ability to perceive a variety of facial attributes. For example, people can discriminate different categories of facial expressions and identities simultaneously and effortlessly, implying that the brain has its own way of organizing the complex high-level features contained in faces for expression and identity recognition. Neuroimaging studies have found several brain regions that are involved in face perception, and these regions show different preferences for processing different aspects of face attributes1-3 (see Figure 1). However, it is still not clear how these regions are organized to efficiently perform multiple face perception tasks, and to what extent the underlying neural computations for this ability can be identified. In the current study, we developed a multi-task DNN model4-5 that can simultaneously classify multiple face attributes. The model shares the same low-level face features and then branches into several streams for processing different high-level features of face attributes. We optimized this model to achieve the best performance for the classification of facial expressions and identities in a multi-label face image set. We then used the best-performing network as a tool to investigate two unsolved cognitive questions: how the simultaneous processing of facial expression and identity is represented in the visual cortex, and whether the processing of facial expression and identity shows a hierarchical structure in higher visual cortex (IT).

METHODS

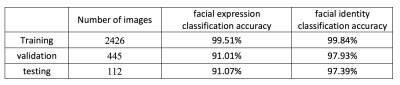

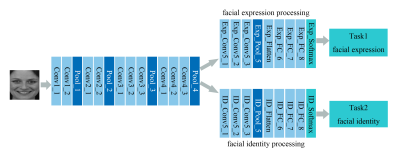

The multi-task DNN

We selected the pre-trained VGG-Face6 as the base model and modified its structure. The modified network shares the first several layers and then branches into two sets of streams for facial expression and identity classification, respectively. We tried a variety of branch structures and found that the best-performing network shared the first 14 layers and then branched into two sets of 5 task-specific layers, as shown in Figure 2. We froze the parameters of the shared layers and fine-tuned the network with stochastic gradient descent (SGD) at a learning rate of 0.01. To avoid overfitting, we applied dropout after FC_7 with a dropout ratio of 0.5, and applied L2 regularization to the fully connected layers of the facial expression branch. The face image datasets used for training, validating and testing the proposed network are shown in Table 1.

fMRI experiment

Participants viewed the test face images in a slow event-related fMRI experiment. The images belong [...]. During the scan, each participant performed a fixation color-change discrimination task while viewing the face stimuli. Each participant also performed two localizer runs to localize that participant's face-selective regions at the individual level (see Figure 1). MRI data were collected using a GE MR 750 3.0 Tesla scanner with a GE 8-channel head coil.

Face feature representation in DNN and brain visual cortex

For the images in the testing dataset, we extracted their feature representations at each DNN layer and calculated the Pearson correlation coefficient r between all pairs of images, generating a 112 × 112 representational dissimilarity matrix (RDM, 1 − r). For each brain ROI, we extracted the fMRI response pattern to each face stimulus and computed the RDM for all pairs of face stimuli. We then calculated the Pearson correlation between the RDM derived from each DNN layer and the RDM from each brain ROI. The Pearson correlation coefficients were further transformed into normally distributed z-scores.

RESULTS
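The RDM comparison described in the Methods can be illustrated with a minimal sketch. It is not the authors' analysis code: the function names and the toy data are hypothetical, only the upper triangle of each RDM is compared (an assumption, since the abstract does not say which entries were used), and the Fisher transformation (inverse hyperbolic tangent) is assumed as the step that converts correlation coefficients into z-scores.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def compute_rdm(patterns):
    """Build an RDM whose entries are 1 - r for every pair of stimulus patterns."""
    n = len(patterns)
    return [[1.0 - pearson(patterns[i], patterns[j]) for j in range(n)]
            for i in range(n)]


def upper_triangle(matrix):
    """Off-diagonal upper-triangle entries (assumed to be the compared entries)."""
    n = len(matrix)
    return [matrix[i][j] for i in range(n) for j in range(i + 1, n)]


def rdm_similarity_z(rdm_a, rdm_b):
    """Correlate two RDMs and apply the (assumed) Fisher r-to-z transformation."""
    r = pearson(upper_triangle(rdm_a), upper_triangle(rdm_b))
    # Clamp r slightly inside (-1, 1) so atanh stays finite.
    r = max(-1.0 + 1e-12, min(1.0 - 1e-12, r))
    return math.atanh(r)


# Toy data: 4 stimuli x 4 features (the study used 112 test images).
dnn_features = [
    [1.0, 2.0, 3.0, 4.0],
    [2.0, 1.0, 4.0, 3.0],
    [4.0, 3.0, 2.0, 1.0],
    [1.0, 3.0, 2.0, 4.0],
]
roi_patterns = [
    [0.5, 1.0, 1.4, 2.1],
    [1.1, 0.4, 2.0, 1.6],
    [2.2, 1.4, 1.1, 0.3],
    [0.4, 1.6, 0.9, 2.0],
]

layer_rdm = compute_rdm(dnn_features)
roi_rdm = compute_rdm(roi_patterns)
z = rdm_similarity_z(layer_rdm, roi_rdm)
print(f"z-score between layer RDM and ROI RDM: {z:.3f}")
```

In this sketch each DNN layer and each ROI would yield one RDM, and `rdm_similarity_z` would be called once per layer and ROI pair to produce values of the kind plotted in Figure 3.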

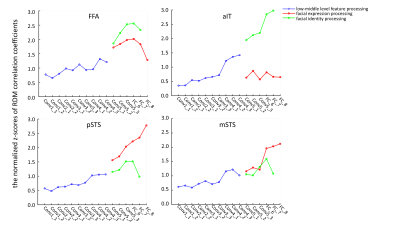

The z-scores between the RDMs from each DNN layer and the RDMs from each brain ROI are shown in Figure 3. In pSTS and mSTS, significant z-scores for facial expression were found in higher DNN layers, while z-scores for facial identity were not significant. In FFA, the significant z-scores for both facial expression and identity were in higher DNN layers, and the z-scores for facial identity in each of the three DNN layers were significantly higher than those for facial expression. In aIT, the significant z-scores for facial identity were in higher DNN layers (p < 0.05, FDR corrected).

Our results indicate that pSTS and mSTS are involved in processing facial expressions, and that aIT and FFA are involved in processing facial identities, suggesting that facial expression and identity are processed in distinct visual pathways. Our results also suggest hierarchical processing for facial expression in STS, and hierarchical processing for facial identity from FFA to aIT.

CONCLUSION AND DISCUSSION

In the current study, we developed a multi-task deep neural network model and optimized its architecture and weights to simultaneously classify facial expressions and identities. We used the best-performing network to investigate the neural responses to facial expression and identity perception. Our results revealed that facial expression and identity are processed in distinct dorsal and ventral visual pathways in IT cortex, respectively. We also found hierarchical processing for facial expression and identity within these pathways.

Acknowledgements

Research supported by the National Natural Science Foundation of China under Grant No. 81871511.

References

1. Haxby JV, Hoffman EA, Gobbini MI. The distributed human neural system for face perception. Trends Cogn Sci. 2000;4:223–233.

2. Kanwisher N, McDermott J, Chun MM. The fusiform face area: a module in human extrastriate cortex specialized for face perception. J Neurosci. 1997;17:4302–11.

3. Kriegeskorte N, Formisano E, Sorger B, et al. Individual faces elicit distinct response patterns in human anterior temporal cortex. Proc Natl Acad Sci USA. 2007;104:20600–20605.

4. Hsieh HL, Hsu W, Chen YY. Multi-task learning for face identification and attribute estimation. IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), IEEE. 2017; 2981-2985.

5. Ming Z, Xia J, Luqman MM, et al. Dynamic Multi-Task Learning for Face Recognition with Facial Expression. 2019; arXiv preprint arXiv:1911.03281.

6. Simonyan K, Zisserman A. Very deep convolutional networks for large-scale image recognition. 2014; arXiv preprint arXiv:1409.1556.

Figures

Figure 2. The architecture of the multi-task deep neural network.
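As a rough companion to Figure 2, the sketch below shows the general shape of a shared-trunk, two-branch network with a frozen trunk and an SGD update. It is a toy illustration under stated assumptions, not the modified VGG-Face model: a single linear layer stands in for the 14 shared layers, one linear layer stands in for each 5-layer branch, dropout is omitted, and the class name `MultiTaskNet`, the layer sizes, and the L2 coefficient `l2_expression` are hypothetical. Only the learning rate of 0.01, the frozen shared layers, and the restriction of L2 regularization to the expression branch come from the Methods.

```python
import math
import random


def matvec(weights, x):
    """Multiply a weight matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]


def relu(v):
    return [max(0.0, a) for a in v]


def softmax(v):
    m = max(v)
    exps = [math.exp(a - m) for a in v]
    total = sum(exps)
    return [e / total for e in exps]


def random_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


class MultiTaskNet:
    """Toy shared trunk feeding an expression branch and an identity branch."""

    PARAM_NAMES = ("shared", "expression_head", "identity_head")

    def __init__(self, n_in, n_shared, n_expressions, n_identities, seed=0):
        rng = random.Random(seed)
        self.shared = random_matrix(n_shared, n_in, rng)
        self.expression_head = random_matrix(n_expressions, n_shared, rng)
        self.identity_head = random_matrix(n_identities, n_shared, rng)
        self.frozen = {"shared"}  # shared layers are not updated

    def forward(self, x):
        # Shared features are computed once and reused by both branches.
        features = relu(matvec(self.shared, x))
        expression_probs = softmax(matvec(self.expression_head, features))
        identity_probs = softmax(matvec(self.identity_head, features))
        return expression_probs, identity_probs

    def trainable(self):
        """Return only the parameters that are not frozen."""
        return {name: getattr(self, name)
                for name in self.PARAM_NAMES if name not in self.frozen}


def sgd_step(net, grads, lr=0.01, l2_expression=1e-4):
    """One SGD update; L2 decay (hypothetical strength) on the expression branch only."""
    for name, weights in net.trainable().items():
        decay = l2_expression if name == "expression_head" else 0.0
        for row, grad_row in zip(weights, grads[name]):
            for j, g in enumerate(grad_row):
                row[j] -= lr * (g + decay * row[j])


# Hypothetical sizes: 8 inputs, 6 shared features, 3 expressions, 4 identities.
net = MultiTaskNet(n_in=8, n_shared=6, n_expressions=3, n_identities=4)
expr_probs, id_probs = net.forward([0.5] * 8)
print(len(expr_probs), len(id_probs))
```

Because the trunk is excluded from `trainable()`, a call to `sgd_step` changes only the two branch weight matrices, which mirrors the frozen shared layers described in the Methods.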