2662

Automatic multilabel segmentation of large cerebral vessels from MR angiography images using deep learning

1 Médecine nucléaire et radiobiologie, Université de Sherbrooke, Sherbrooke, QC, Canada
2 Faculté de Médecine et des Sciences de la Santé, Université de Sherbrooke, Sherbrooke, QC, Canada
3 Clinique de la Mémoire et Centre de Recherche sur le Vieillissement, CIUSSS de l’Estrie-CHUS, Sherbrooke, QC, Canada
4 Service de Neurologie, Département de Médecine, CHUS, Sherbrooke, QC, Canada
5 Radiologie diagnostique, Université de Sherbrooke, Sherbrooke, QC, Canada

Synopsis

The Circle of Willis (CW) is a system of arteries located at the base of the brain. Its structure plays a key role in several cerebrovascular diseases, but quantifying it on MR images is meticulous and labor-intensive. We developed an analysis pipeline that uses a convolutional neural network to perform multilabel segmentation of the CW on time-of-flight MR angiography (TOF-MRA) images. Results show that this approach can automatically locate and label the CW with accuracy comparable to the agreement between expert human annotators.

INTRODUCTION

The Circle of Willis (CW) is an arterial structure located at the base of the brain that joins the two internal carotid arteries (ICA) and the basilar artery (BA). From the CW stem the anterior cerebral artery (ACA), middle cerebral artery (MCA) and posterior cerebral artery (PCA), which together supply blood to the entire brain [1]. Previous research has shown that abnormal size and shape of some of these vessels are associated with a greater risk of cognitive impairment related to dementia and Alzheimer’s disease [2,3]. However, quantifying vessel structures is labor-intensive, as it typically requires manual annotation of images obtained with Time-of-Flight Magnetic Resonance Angiography (TOF-MRA). Here, we present a fully automated approach that uses a convolutional neural network to accurately localize and label the CW and its distal branches, independent of image quality, acquisition parameters and cognitive status. Preliminary results indicate that this method allows automated and accurate analysis of the major cerebral vessels without user intervention.

METHODS

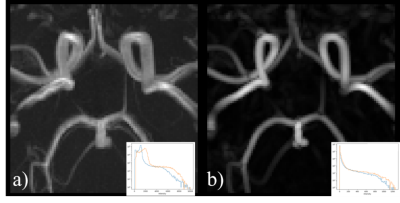

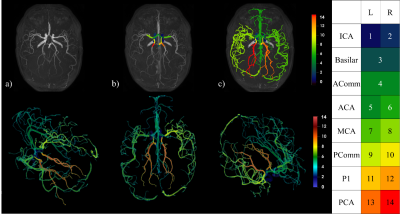

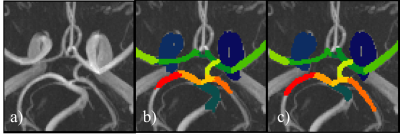

To develop our multilabel segmentation pipeline, we trained a convolutional neural network with a 3D U-Net architecture [4] on an annotated set of 116 participants drawn from three MRI datasets [5,6]. Annotations were performed under the supervision of a medical expert (author N. Arès-Bruneau). The three datasets were acquired on different scanners with varying FOV and resolution, and include participants of varying age, sex and cognitive status, so that the network would generalize to heterogeneous data. The annotations included the left and right ICA (segments C4 to C7), the BA, the initial segments of the ACA (A1) and of the left and right MCA (M1), the first and second segments of the PCA (P1 and P2) and, lastly, the anterior and posterior communicating arteries (AComm and PComm, respectively). Each image was resampled to 0.625 mm isotropic voxels, and a vesselness filter [7,8] was applied to harmonize the intensity distribution across datasets. Each patch was normalized. Dropout [9] and batch normalization [10] were added to the network. Data augmentation [11] consisted of translations and rotations applied to each image to simulate variations in patient orientation. Finally, the output prediction was multiplied element-wise (Hadamard product) by an attention mask obtained by hysteresis thresholding of the raw patch. The low and high thresholds were derived from the parameters of the Gaussian components of a Gaussian mixture model fitted to the raw image intensities with the expectation-maximization algorithm [12]. An example training patch is shown in Figure 1.

Performance was quantified by comparing the predictions on a test set of 15 participants with the corresponding manual annotations, using the Dice similarity coefficient (DS) and the precision score (PS).
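The gating step described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: it re-implements a two-component, one-dimensional Gaussian mixture fitted by expectation-maximization in NumPy (in practice scikit-learn's `GaussianMixture` would likely be used [12]), and it applies a hypothetical threshold rule (low threshold = background mean + `k` standard deviations, high threshold = vessel mean), because the abstract does not state exactly how the thresholds were derived from the Gaussian parameters. The function names `fit_gmm_1d`, `hysteresis_3d` and `gated_prediction`, and the assumed prediction layout `(Z, Y, X, n_labels)`, are likewise hypothetical.

```python
import numpy as np
from collections import deque

# 6-connected neighbourhood in 3-D (face neighbours only).
OFFSETS = ((1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1))


def fit_gmm_1d(values, n_iter=200):
    """Fit a two-component 1-D Gaussian mixture by expectation-maximization.

    Returns (means, stds) sorted so that index 0 is the darker (background)
    component and index 1 the brighter (vessel) component.
    """
    x = np.asarray(values, dtype=float).ravel()
    mu = np.percentile(x, [25, 99])           # initial means: dark bulk vs. bright tail
    var = np.full(2, x.var() + 1e-6)          # initial variances
    weights = np.array([0.5, 0.5])
    for _ in range(n_iter):
        # E-step: responsibility of each component for each intensity.
        dens = weights / np.sqrt(2 * np.pi * var) * np.exp(-(x[:, None] - mu) ** 2 / (2 * var))
        resp = dens / (dens.sum(axis=1, keepdims=True) + 1e-300)
        # M-step: re-estimate mixture weights, means and variances.
        nk = resp.sum(axis=0) + 1e-12
        weights = nk / x.size
        mu = (resp * x[:, None]).sum(axis=0) / nk
        var = (resp * (x[:, None] - mu) ** 2).sum(axis=0) / nk + 1e-6
    order = np.argsort(mu)
    return mu[order], np.sqrt(var[order])


def hysteresis_3d(img, low, high):
    """Keep voxels above `low` that are 6-connected to at least one voxel above `high`."""
    weak = img > low
    keep = img > high
    queue = deque(map(tuple, np.argwhere(keep)))
    while queue:
        z, y, x = queue.popleft()
        for dz, dy, dx in OFFSETS:
            n = (z + dz, y + dy, x + dx)
            inside = all(0 <= n[i] < img.shape[i] for i in range(3))
            if inside and weak[n] and not keep[n]:
                keep[n] = True
                queue.append(n)
    return keep


def gated_prediction(prob, raw, k=2.0):
    """Hadamard product of the network output (Z, Y, X, n_labels) with a hysteresis mask of `raw`."""
    (mu_bg, mu_vessel), (sd_bg, _) = fit_gmm_1d(raw)
    low = mu_bg + k * sd_bg            # hypothetical rule: background mean + k std
    high = max(mu_vessel, low)         # hypothetical rule: vessel-component mean
    mask = hysteresis_3d(raw, low, high)
    return prob * mask[..., None], mask
```

Hysteresis thresholding keeps faint vessel voxels only when they are connected to clearly bright ones, so isolated noise above the low threshold is discarded before it can leak into the labels; scikit-image's `filters.apply_hysteresis_threshold(image, low, high)` offers a comparable built-in.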
In addition, two human annotators labeled a subset of these 15 images (N = 8) to estimate inter-rater variability, and we analyzed a separate test-retest set of six acquisitions from two subjects to assess the reproducibility of our approach. For this set, we measured the diameter of each artery [13] and computed its standard deviation (STD) across the six acquisitions. Lastly, we propagated the A1, M1 and P1 labels with a watershed algorithm [14] to extend the labeling to the full cerebral arterial tree. Figure 2 shows the outputs of the pipeline, visualized with FSLeyes [15] and ParaView [16].
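Label propagation by watershed can be sketched as priority-flood (Meyer-style) region growing: seed voxels carrying the A1, M1 and P1 labels expand through a binary vessel mask, and the cheapest frontier voxel is always claimed next. The sketch below assumes a precomputed vessel mask and a cost image (for example, negative vesselness, so that bright vessel centres flood first); neither the mask nor the cost image used by the authors is specified in the abstract, and `propagate_labels` is a hypothetical name. scikit-image's `segmentation.watershed(image, markers, mask=...)` implements the same idea and is what one would use in practice [14].

```python
import heapq
import numpy as np

# 6-connected neighbourhood in 3-D (face neighbours only).
OFFSETS = ((1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1))


def propagate_labels(markers, vessel_mask, cost):
    """Grow seed labels through `vessel_mask`, always claiming the cheapest frontier voxel next.

    markers:     int array, 0 = unlabeled, >0 = artery label (hypothetical codes for A1, M1, P1)
    vessel_mask: bool array, voxels the labels are allowed to spread into
    cost:        float array, flooding priority (lower floods first)
    """
    labels = markers.copy()
    heap = []
    counter = 0  # insertion counter: breaks cost ties in FIFO order

    def push_neighbours(voxel, label):
        nonlocal counter
        for offset in OFFSETS:
            n = tuple(int(v + d) for v, d in zip(voxel, offset))
            inside = all(0 <= n[i] < labels.shape[i] for i in range(3))
            if inside and vessel_mask[n] and labels[n] == 0:
                heapq.heappush(heap, (float(cost[n]), counter, n, int(label)))
                counter += 1

    # Seed the frontier with the neighbours of every labeled voxel.
    for voxel in map(tuple, np.argwhere(markers > 0)):
        push_neighbours(voxel, labels[voxel])

    # Flood: the first label to reach a voxel claims it.
    while heap:
        _, _, voxel, label = heapq.heappop(heap)
        if labels[voxel] == 0:
            labels[voxel] = label
            push_neighbours(voxel, label)
    return labels
```

Restricting the flood to the vessel mask keeps labels from spreading into background tissue, while the cost image decides which seed wins where two arterial territories meet.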

RESULTS

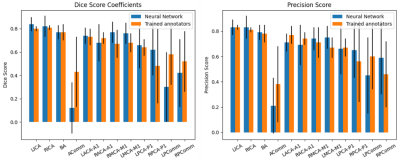

Figure 3 shows example predictions in which all arteries were correctly segmented. The average DS and PS over all test images are shown in Figure 4. For all arteries, the similarity between the predicted CW and the manual annotations (blue bars) did not differ significantly (p > 0.05, corrected for multiple comparisons) from the inter-rater agreement (orange bars). Table 1 shows the results for the test-retest dataset: for most arteries, the variability of the diameter estimates is well below the image resolution (0.625 mm), indicating that the proposed approach is highly reproducible.

DISCUSSION & CONCLUSION
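For reference, the per-artery scores follow the standard overlap definitions, with P the predicted voxels and T the annotated voxels of a given label: DS = 2|P ∩ T| / (|P| + |T|) and PS = |P ∩ T| / |P|. A minimal NumPy sketch, assuming integer label maps in which each artery has its own (hypothetical) label code, is shown below; returning NaN for an absent label is an assumption made so that missing arteries can be skipped with `np.nanmean` when averaging over test images.

```python
import numpy as np


def dice_precision(pred, truth, label):
    """Dice similarity coefficient and precision for one label of two integer label maps."""
    p = np.asarray(pred) == label          # predicted voxels of this artery
    t = np.asarray(truth) == label         # manually annotated voxels of this artery
    tp = np.count_nonzero(p & t)           # voxels labeled identically in both
    n_pred, n_true = np.count_nonzero(p), np.count_nonzero(t)
    # NaN marks an undefined score (label absent) so it can be skipped with np.nanmean.
    dice = 2.0 * tp / (n_pred + n_true) if (n_pred + n_true) > 0 else float("nan")
    precision = tp / n_pred if n_pred > 0 else float("nan")
    return dice, precision


def per_label_scores(pred, truth, labels):
    """Map each (hypothetical) artery label code to its (dice, precision) pair."""
    return {label: dice_precision(pred, truth, label) for label in labels}
```

Unlike Dice, precision ignores missed voxels, so reporting both separates over-segmentation from under-segmentation.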

Figure 4 shows that, for most arteries of the CW, the performance of our network is comparable to the agreement between two trained annotators. The high variability of the communicating arteries and the imbalance of those classes make it hard for the network to generalize well to these arteries. For the large arteries of the CW, however, the algorithm reliably generates a segmented arterial tree, and the standard deviation of the diameter estimates is below one voxel. Moreover, the CW segmentation can serve as an anatomical prior from which labels are propagated to obtain a full segmentation of the cerebral arteries. This pipeline has three advantages. First, it segments and labels the major cerebral arteries. Second, a single prediction takes less than one second on a standard desktop computer. Third, it is fully automated, allowing large patient cohorts to be analyzed rapidly. Future work will focus on improving the network's performance on the communicating arteries of the CW and on making the full-brain segmentation more reliable for smaller arteries.

Acknowledgements

Many thanks to Samantha Côté for her precious help while revising this abstract.

Data were provided [in part] by OASIS-3: Principal Investigators: T. Benzinger, D. Marcus, J. Morris; NIH P50 AG00561, P30 NS09857781, P01 AG026276, P01 AG003991, R01 AG043434, UL1 TR000448, R01 EB009352. AV-45 doses were provided by Avid Radiopharmaceuticals, a wholly owned subsidiary of Eli Lilly.

Part of the MR brain images from healthy volunteers used in this paper were collected and made available by the CASILab at The University of North Carolina at Chapel Hill and were distributed by the MIDAS Data Server at Kitware, Inc.

References

1. Blumenfeld, H. Neuroanatomy through Clinical Cases. (Sinauer Associates, 2010).

2. Gutierrez, J. Brain arterial dilatation and the risk of Alzheimer’s disease. 9 (2019).

3. Iturria-Medina, Y. et al. Early role of vascular dysregulation on late-onset Alzheimer’s disease based on multifactorial data-driven analysis. Nat. Commun. 7, 11934 (2016).

4. Çiçek, Ö., Abdulkadir, A., Lienkamp, S. S., Brox, T. & Ronneberger, O. 3D U-Net: Learning Dense Volumetric Segmentation from Sparse Annotation. arXiv:1606.06650 [cs] (2016).

5. Bullitt, E. et al. Vessel Tortuosity and Brain Tumor Malignancy. Acad. Radiol. 12, 1232–1240 (2005).

6. LaMontagne, P. J. et al. OASIS-3: Longitudinal Neuroimaging, Clinical, and Cognitive Dataset for Normal Aging and Alzheimer Disease. http://medrxiv.org/lookup/doi/10.1101/2019.12.13.19014902 (2019) doi:10.1101/2019.12.13.19014902.

7. McCormick, M., Liu, X., Jomier, J., Marion, C. & Ibanez, L. ITK: enabling reproducible research and open science. Front. Neuroinformatics 8, (2014).

8. Yoo, T. S. et al. Engineering and Algorithm Design for an Image Processing API: A Technical Report on ITK - the Insight Toolkit. 8.

9. Srivastava, N., Hinton, G., Krizhevsky, A., Sutskever, I. & Salakhutdinov, R. Dropout: A Simple Way to Prevent Neural Networks from Overfitting. 30.

10. Ioffe, S. & Szegedy, C. Batch Normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. arXiv:1502.03167 [cs] (2015).

11. Shorten, C. A survey on Image Data Augmentation for Deep Learning. 48 (2019).

12. Pedregosa, F. et al. Scikit-learn: Machine Learning in Python. 7.

13. Bizeau, A. et al. Stimulus-evoked changes in cerebral vessel diameter: A study in healthy humans. J. Cereb. Blood Flow Metab. 38, 528–539 (2018).

14. van der Walt, S. et al. scikit-image: image processing in Python. PeerJ 2, e453 (2014).

15. McCarthy, P. FSLeyes (Version 0.34.0). (2020).

16. Ahrens, J., Geveci, B. & Law, C. ParaView: An End-User Tool for Large-Data Visualization. in Visualization Handbook 717–731 (Elsevier, 2005). doi:10.1016/B978-012387582-2/50038-1.
