2399

Unsupervised reconstruction-based anomaly detection using a Variational Auto-Encoder

1Department of Biomedical Magnetic Resonance, Otto von Guericke University, Magdeburg, Germany, 2Data and Knowledge Engineering Group, Otto von Guericke University, Magdeburg, Germany, 3Faculty of Computer Science, Otto von Guericke University, Magdeburg, Germany, 4MedDigit, Department of Neurology, Medical Faculty, University Hospital, Magdeburg, Germany, 5German Centre for Neurodegenerative Diseases, Magdeburg, Germany, 6Center for Behavioral Brain Sciences, Magdeburg, Germany, 7Leibniz Institute for Neurobiology, Magdeburg, Germany

Synopsis

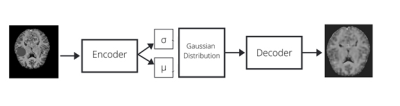

Unlike the commonly used supervised approaches to disease localization, we propose to detect anomalies by differentiating them from reliable models of anatomy without pathologies. The method uses a Variational Auto-Encoder to learn the anomaly-free distribution of the anatomy and a novel image-subtraction approach to obtain a pixel-precise segmentation of the anomalous regions. The proposed model was trained on the MOOD dataset. It was evaluated on the BraTS 2019 dataset and on a subset of MOOD that contains anomalies to be detected by the model.

Introduction

Unsupervised pixel-precise segmentation of brain regions that appear anomalous can be a valuable aid for radiologists. Most proposed classification and segmentation models are trained in a supervised manner for a specific task and require large training datasets. Unsupervised anomaly detection (UAD)1 systems can instead learn the data distribution directly from a large cohort of unannotated subjects, then detect out-of-distribution samples and thus ultimately identify diseased or suspicious cases. Decoupling abnormality detection from reference annotations makes the approach independent of human input. Such anomaly detection systems can be trained to detect any kind of anomaly present in the data without being explicitly taught a specific task. In this research, we implemented an anomaly detection approach and tested it on the segmentation of artificial anomalies and brain tumors.

Methods

The neural network used is a Variational Auto-Encoder (VAE), a latent-variable generative model popularly used for density estimation in anomaly detection tasks3,4. We trained the network on the MOOD dataset by optimizing the evidence lower bound (ELBO)2. The MOOD dataset comprises 800 hand-selected brain scans of dimensions 256 × 256 × 256 that contain no anomalies7. The model is trained on 700 of these scans, treating each slice as an individual 2D image. The encoder and the decoder are 5-layer fully convolutional networks. During training, the model computes a latent space representation (LSR) of each non-anomalous input image, thereby learning the feature distribution of the healthy dataset. The latent space of a VAE is continuous by design, which suits the given problem because it allows easy random sampling and interpolation. The encoder produces two vectors: a vector of means, μ, and a vector of standard deviations, σ. Latent codes are sampled from the distribution these vectors define, so the decoder learns that not just a single point but also the points around it represent the same sample, and it can therefore decode slight variations of an encoding. The training objective is the ELBO, which combines the pixel-wise reconstruction error with the Kullback-Leibler (KL) divergence2 (in practice, its negative is minimized as the loss):

$$\log p(x) \ge \mathcal{L} = -D_{KL}\big(q(z|x)\,\|\,p(z)\big) + \mathbb{E}_{q(z|x)}\big[\log p(x|z)\big]$$

where $p(z)$ is the latent prior, and $q(z|x)$ and $p(x|z)$ are diagonal normal distributions parameterized by the neural networks $f_\mu$, $f_\sigma$, and $g_\mu$ and a constant $c$, such that $q(z|x) = \mathcal{N}\big(z; f_{\mu,\theta_1}(x), f_{\sigma,\theta_2}(x)^2\big)$ and $p(x|z) = \mathcal{N}\big(x; g_{\mu,\gamma}(z), I \cdot c\big)$. The feature maps have sizes 16-32-64-256, with stride-2 convolutions for downsampling and transposed convolutions for upsampling. A LeakyReLU activation is applied after every layer. A total of 256 latent variables are used initially.
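To make the ELBO terms concrete, the following NumPy sketch computes a per-image negative ELBO for the diagonal Gaussian encoder and the fixed-variance Gaussian decoder defined above. It assumes a standard normal prior $p(z) = \mathcal{N}(0, I)$ (not stated in the abstract), uses the closed-form KL divergence for diagonal Gaussians, and approximates the expectation with a single reparameterized sample. The `mu`, `sigma` and `decode` inputs, including the toy linear decoder in the demo, are hypothetical stand-ins for $f_\mu$, $f_\sigma$ and $g_\mu$, not the trained networks.

```python
import numpy as np


def kl_diag_gaussian(mu, sigma):
    """Closed-form KL(N(mu, diag(sigma^2)) || N(0, I)), summed over latent dimensions.

    Assumes a standard normal prior p(z) = N(0, I).
    """
    return 0.5 * np.sum(sigma**2 + mu**2 - 1.0 - 2.0 * np.log(sigma), axis=-1)


def gaussian_log_likelihood(x, x_mean, c):
    """log N(x; x_mean, c * I), summed over all pixels of each image in the batch."""
    x = x.reshape(x.shape[0], -1)
    x_mean = x_mean.reshape(x_mean.shape[0], -1)
    num_pixels = x.shape[1]
    squared_error = np.sum((x - x_mean) ** 2, axis=1)
    return -0.5 * (squared_error / c + num_pixels * np.log(2.0 * np.pi * c))


def sample_latent(mu, sigma, rng):
    """Reparameterization trick: z = mu + sigma * eps with eps ~ N(0, I)."""
    return mu + sigma * rng.standard_normal(mu.shape)


def negative_elbo(x, mu, sigma, decode, c=1.0, rng=None):
    """Per-image loss -L = KL(q(z|x) || p(z)) - E_q[log p(x|z)], one-sample estimate."""
    rng = np.random.default_rng(0) if rng is None else rng
    z = sample_latent(mu, sigma, rng)
    x_mean = decode(z)  # hypothetical stand-in for the decoder mean g_mu(z)
    elbo = -kl_diag_gaussian(mu, sigma) + gaussian_log_likelihood(x, x_mean, c)
    return -elbo


if __name__ == "__main__":
    rng = np.random.default_rng(42)
    weights = 0.1 * rng.standard_normal((256, 16 * 16))  # toy linear "decoder", 256 latents

    def toy_decode(z):
        return (z @ weights).reshape(-1, 16, 16)

    x = rng.standard_normal((4, 16, 16))  # batch of four 16x16 "slices"
    mu, sigma = np.zeros((4, 256)), np.ones((4, 256))  # stand-ins for f_mu(x), f_sigma(x)
    print(negative_elbo(x, mu, sigma, toy_decode, rng=rng).shape)  # (4,)
```

Minimizing this quantity maximizes the ELBO. With $c$ fixed, the log-likelihood term is a scaled sum of squared pixel errors plus a constant, which is why it acts as the pixel-wise reconstruction error.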
The model is trained with the Adam optimizer at a learning rate of 1e-4 for a total of 100 epochs, until convergence2. It is evaluated on the BraTS dataset: because the model has learned only healthy anatomy, pixels in abnormal regions are expected to have a low probability under the model, while pixels in healthy regions have a high probability. The BraTS dataset comprises multi-institutional, routine clinically acquired preoperative multimodal MRI scans of glioblastoma (GBM/HGG) and lower-grade glioma (LGG)10. To localize the anomalies, a novel post-processing step is applied: the reconstructed image is subtracted from the original, binary thresholding and morphological closing are applied to the resulting difference map to obtain a mask, and the location of maximum anomaly is detected.

Results and Discussion
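The masks discussed in this section are produced by the localization step described in the Methods and scored with the Dice coefficient, $2|P \cap G| / (|P| + |G|)$ for a predicted mask $P$ and a ground-truth mask $G$. The following NumPy sketch illustrates both under assumptions the abstract does not state: a signed residual (original minus reconstruction), a hypothetical fixed `threshold`, binarization before closing, a 3 × 3 square structuring element, and a Dice value of 1.0 when both masks are empty. The closing is written out as dilation followed by erosion so that the example needs only NumPy; the image and annotation in the demo are synthetic.

```python
import numpy as np


def _neighborhood_stack(mask, pad_value):
    """Stack the nine 3x3-neighborhood shifts of a 2D boolean mask."""
    padded = np.pad(mask, 1, mode="constant", constant_values=pad_value)
    h, w = mask.shape
    return np.stack([padded[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)])


def binary_closing_3x3(mask):
    """Morphological closing (dilation, then erosion) with a 3x3 square element."""
    dilated = _neighborhood_stack(mask, False).any(axis=0)
    # Pad with True while eroding so foreground touching the border is kept.
    return _neighborhood_stack(dilated, True).all(axis=0)


def localize_anomaly(original, reconstruction, threshold):
    """Return (anomaly mask, (row, col) of the largest residual in the mask, or None)."""
    residual = original - reconstruction             # subtract reconstruction from input
    mask = binary_closing_3x3(residual > threshold)  # binarize, then close small gaps
    if not mask.any():
        return mask, None                            # empty mask: no anomaly found
    masked_residual = np.where(mask, residual, -np.inf)
    row, col = np.unravel_index(np.argmax(masked_residual), residual.shape)
    return mask, (int(row), int(col))


def dice_coefficient(pred, target):
    """Dice = 2 * |P and G| / (|P| + |G|) for a predicted mask P and ground truth G."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    denominator = pred.sum() + target.sum()
    if denominator == 0:
        return 1.0  # assumed convention: two empty masks agree perfectly
    return float(2.0 * np.logical_and(pred, target).sum() / denominator)


if __name__ == "__main__":
    original = np.zeros((8, 8))
    original[2:5, 2:5] = 1.0           # synthetic bright "lesion"
    original[3, 3] = 2.0               # its brightest pixel
    reconstruction = np.zeros((8, 8))  # a healthy-trained VAE would not reproduce it
    ground_truth = np.zeros((8, 8), dtype=bool)
    ground_truth[2:6, 2:5] = True      # hypothetical annotation, one row larger
    mask, peak = localize_anomaly(original, reconstruction, threshold=0.5)
    print(int(mask.sum()), peak, round(dice_coefficient(mask, ground_truth), 3))  # 9 (3, 3) 0.857
```

SciPy's `scipy.ndimage.binary_closing` provides a comparable operation with configurable structuring elements. Any residual noise that survives the threshold becomes part of the predicted mask and lowers the Dice score directly.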

We benchmark our results against those obtained in Ref. 2. The reference model was trained and evaluated on the BraTS dataset and obtained a Dice coefficient of 0.36. The proposed model, trained on the MOOD dataset and evaluated on the BraTS dataset, obtained a Dice coefficient of 0.27, as shown in Fig. 4. The Dice coefficient measures the overlap between the predicted anomaly segmentation and the ground-truth annotation. The difference lies in the approach: our model uses different datasets for training and testing. As shown in Figs. 3 and 4, a non-zero mask is obtained. A non-zero mask indicates the presence of an out-of-distribution region in the input image, i.e. the anomaly, while a zero mask indicates a healthy input image. The BraTS results (Fig. 4) currently show the anomaly along with some additional noise. We are working on improving our image segmentation technique to achieve anomaly localization precise enough for clinical applications.

Conclusion and future work

We demonstrated a proof-of-concept UAD that encodes the full context of brain MR slices. This approach was successful in detecting anomalous patterns in the MOOD dataset and provides opportunities for effective unsupervised training for anomaly detection, which can further be used for disease localization without explicit annotations. We observed that, when localizing anomalies, the model generated many false positives. However, this kind of UAD might be used by clinicians for interactive decision making. As future work, we plan to investigate projecting healthy anatomy into a latent space that follows a Gaussian Mixture Model and to use 3D autoencoding models.

Acknowledgements

This work was in part conducted within the context of the International Graduate School MEMoRIAL at Otto von Guericke University (OVGU) Magdeburg, Germany, kindly supported by the European Structural and Investment Funds (ESF) under the programme "Sachsen-Anhalt WISSENSCHAFT Internationalisierung" (project no. ZS/2016/08/80646).

References

[1] Bergmann, Paul & Fauser, Michael & Sattlegger, David & Steger, Carsten. (2019). MVTec AD – A Comprehensive Real-World Dataset for Unsupervised Anomaly Detection. In IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pages 9592–9600.

[2] Zimmerer, David & Isensee, Fabian & Petersen, Jens & Kohl, Simon & Maier-Hein, Klaus. (2019). Unsupervised Anomaly Localization using Variational Auto-Encoders.

[3] An, Jinwon & Cho, S. (2015). Variational Autoencoder based Anomaly Detection using Reconstruction Probability.

[4] Kiran, Bangalore & Thomas, Dilip & Parakkal, Ranjith. (2018). An Overview of Deep Learning Based Methods for Unsupervised and Semi-Supervised Anomaly Detection in Videos. Journal of Imaging. 4. 10.3390/jimaging4020036.

[5] Menze, Bjoern & Jakab, András & Bauer, Stefan & Kalpathy-Cramer, Jayashree & Farahani, Keyvan & Kirby, Justin & Burren, Yuliya & Porz, Nicole & Slotboom, Johannes & Wiest, Roland & Lanczi, Levente & Gerstner, Elizabeth & Weber, Marc-André & Arbel, Tal & Avants, Brian & Ayache, Nicholas & Buendia, Patricia & Collins, Louis & Cordier, Nicolas & Van Leemput, Koen. (2014). The Multimodal Brain Tumor Image Segmentation Benchmark (BRATS). IEEE Transactions on Medical Imaging. 99. 10.1109/TMI.2014.2377694.

[6] Menze, B.H. & Van Leemput, K. et al. (2015). The Multimodal Brain Tumor Image Segmentation Benchmark (BRATS). IEEE Transactions on Medical Imaging.

[7] MOOD challenge dataset: https://zenodo.org/record/3961376

[8] BraTS dataset: https://www.med.upenn.edu/sbia/brats2017/data.html

Figures