2240

Learning 3D structures from 2D slices with scan-specific data for fast and high-resolution neonatal brain MRI

¹Harvard Medical School, Boston, MA, United States, ²Boston Children's Hospital, Boston, MA, United States

Synopsis

Neonatal brain MRI is a resolution-critical task because the neonatal brain is small. Among post-acquisition resolution-enhancement techniques, deep learning has shown promising results. Most state-of-the-art deep learning-based super-resolution methods work on 2D slices and therefore ignore the 3D nature of the brain anatomy. Learning on 3D images requires large-scale training datasets of high-resolution volumes that are, unfortunately, difficult to acquire. We developed a methodology that enables learning 3D gradient structures from 2D slices for an individual subject without the need for large, auxiliary high-resolution datasets. Experiments on clinical data from ten neonates demonstrate that our approach outperformed state-of-the-art MRI super-resolution methods.

Introduction

The neonatal brain is small in comparison to the adult brain. Imaging at typical resolutions such as one cubic mm incurs more partial voluming artifacts in a neonate than in an adult [1]. The interpretation and analysis of MRI of the neonatal brain benefit from a reduction in partial volume averaging, which can be achieved with high spatial resolution [2]. Unfortunately, direct acquisition of high spatial resolution MRI is slow, which increases the potential for motion artifacts [3], and suffers from reduced signal-to-noise ratio (SNR) [4]. Deep learning has recently emerged as a way to enhance spatial resolution through MRI reconstruction. However, current deep models perform the learning on 2D slices, which ignores the 3D nature of the brain's anatomical structures [5,6]. It has been demonstrated that 3D models outperform their 2D counterparts [7,8]. Learning by training on 3D images, however, requires large-scale training databases of high-resolution (HR) volumes that are practically impossible to acquire at simultaneously high resolution and SNR. Furthermore, the gray matter-white matter contrast varies from week to week as the neonate grows. This poses significant challenges to the scale and quality of the training database for deep models of neonatal brain MRI. We sought to develop a deep learning methodology that allows for learning 3D gradient structures from 2D slices acquired from the individual subject. We reconstructed neonatal brain MRI at an isotropic resolution of 0.39 mm with six minutes of imaging time, using super-resolution reconstruction from three short-duration scans. Experiments on clinical data acquired from ten neonates demonstrate that our approach achieved considerable improvement in spatial resolution and SNR in comparison to state-of-the-art super-resolution methods, while substantially reducing scan time as compared to direct high-resolution acquisitions.

Methods

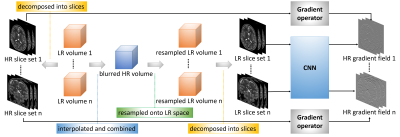

The basic idea of our approach is to construct an isotropic HR image using super-resolution reconstruction (SRR) from multiple anisotropic low-resolution (LR) images acquired with short scan times. We exploit the spatial gradient structures of the reconstructed image to facilitate the SRR. As a 3D gradient can be decomposed onto 2D space, we can equivalently compute the gradient of a volume slice by slice. We therefore trained a deep neural network that maps an LR slice to two HR gradients with perpendicular directions. The LR inputs and the HR gradients are from the through-plane slices and the in-plane slices of the LR images, respectively. Our algorithm incorporates the learned gradients in a gradient guidance-regularized (GGR) framework [9] to obtain the reconstructed HR image by deconvolution. The design of our algorithm is shown in Figure 1.

We acquired three T2-weighted FSE images in orthogonal planes for each neonate on a 3T scanner with a 64-channel head coil. The field of view was 135 mm × 150 mm. The matrix size was 348 × 384, and thus the in-plane resolution was 0.39 mm × 0.39 mm. The slice thickness was 2 mm, which represents a trade-off between SNR and partial volume effects (PVE). We set TR/TE = 12890/93 ms with an echo train length of 16 and a flip angle of 160 degrees. Scan time for each acquisition was around two minutes. We reconstructed the image at an isotropic resolution of 0.39 mm, which is sufficiently high for the interpretation and analysis of the neonatal brain in clinical practice.
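The claim that a 3D gradient can be computed slice by slice can be checked numerically. The following is a minimal sketch, not the network used in this work: it assumes NumPy is available, uses plain finite differences (`numpy.gradient`) in place of the learned HR gradients, and the function names `gradient_3d` and `gradient_slicewise` are hypothetical. It compares the gradient taken directly on a volume with the one assembled from 2D slices in two orthogonal orientations.

```python
import numpy as np


def gradient_3d(vol):
    """Finite-difference gradient computed directly on the 3D volume."""
    gx, gy, gz = np.gradient(vol)
    return gx, gy, gz


def gradient_slicewise(vol):
    """Assemble the same three components from 2D slices only (illustrative)."""
    gx = np.empty_like(vol)
    gy = np.empty_like(vol)
    gz = np.empty_like(vol)
    # Slices at fixed z carry the two in-plane components d/dx and d/dy.
    for k in range(vol.shape[2]):
        gx[:, :, k], gy[:, :, k] = np.gradient(vol[:, :, k])
    # Slices at fixed x carry d/dy and d/dz; only d/dz is still missing.
    for i in range(vol.shape[0]):
        _, gz[i, :, :] = np.gradient(vol[i, :, :])
    return gx, gy, gz


# Synthetic floating-point volume standing in for an image (assumption).
volume = np.random.default_rng(0).random((4, 5, 6))
direct = gradient_3d(volume)
by_slice = gradient_slicewise(volume)
components_match = all(np.allclose(a, b) for a, b in zip(direct, by_slice))
```

Because each partial derivative involves only one axis, every component of the 3D gradient is fully contained in some family of 2D slices, which is why training on slices can still capture 3D gradient structure.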

We conducted two experiments to assess our approach: one on simulated data to demonstrate its accuracy, and another on clinical data to show its efficacy. Cubic interpolation and GGR [9] were used as the baselines in the experiments. Although many deep super-resolution methods have been proposed, most of them cannot be trained on scan-specific data. Therefore, we employed the lightweight model SRCNN [10] as a deep baseline model.
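Accuracy on simulated data is commonly quantified by comparing a reconstruction with its known ground truth. The sketch below shows the standard definitions of RMSE and PSNR, two of the metrics reported in the Results. It is an illustration under assumptions rather than the evaluation code used here: images are passed as flat sequences of intensities, the function names `rmse` and `psnr` are hypothetical, and the peak value defaults to the maximum of the reference image. SSIM is omitted for brevity.

```python
import math


def rmse(reference, estimate):
    """Root-mean-square error between two equally sized intensity sequences."""
    if len(reference) != len(estimate) or len(reference) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    squared_error = sum((r - e) ** 2 for r, e in zip(reference, estimate))
    return math.sqrt(squared_error / len(reference))


def psnr(reference, estimate, peak=None):
    """Peak signal-to-noise ratio in dB; peak defaults to max(reference)."""
    if peak is None:
        peak = max(reference)  # assumption: peak taken from the ground truth
    error = rmse(reference, estimate)
    if error == 0:
        return math.inf  # identical images
    return 20.0 * math.log10(peak / error)


# Toy example: one of four voxels is off by 2 intensity units.
truth = [0.0, 1.0, 2.0, 3.0]
recon = [0.0, 1.0, 2.0, 5.0]
toy_rmse = rmse(truth, recon)
toy_psnr = psnr(truth, recon)
```

Lower RMSE and higher PSNR both indicate a reconstruction closer to the ground truth, so the two metrics rank methods consistently when the peak value is fixed.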

Results

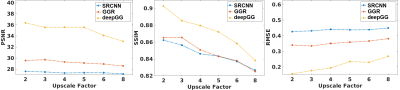

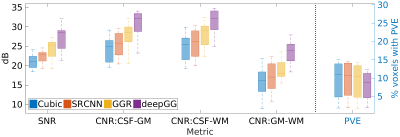

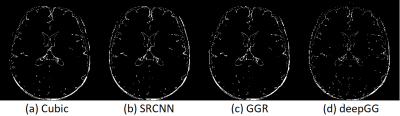

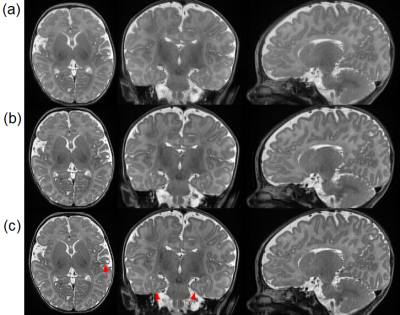

Figure 2 shows the results on the simulated dataset. Our approach achieved the highest accuracy in terms of PSNR, SSIM, and RMSE. Figure 3 shows the results on the clinical dataset in terms of SNR, CNR, and PVE. Our approach offered the highest quality on this clinical dataset, with an average SNR of 27.4 ± 3.3 dB, an improvement of 30.1% (6.3 dB) over cubic interpolation. Our approach also generated images in which 9.3% ± 5.9% of voxels suffered from PVE on average, an improvement of 14.7% over cubic interpolation. Figure 4 shows a qualitative result of the estimated PVE. Our approach yielded the fewest voxels with PVE. Figure 5 shows a qualitative assessment of the reconstructed HR images on the clinical dataset. Our approach offered the highest quality in terms of image contrast and sharpness.

Discussion

We have developed a deep learning methodology that allows for learning 3D image structures by training on 2D slices acquired from the individual subject. We have applied this technique to ten neonates to obtain high-resolution brain MRI using super-resolution reconstruction from three short-duration scans. We have achieved high-quality neonatal brain MRI at an isotropic resolution of 0.39 mm with six minutes of imaging time. Experiments on simulated data as well as clinical data have demonstrated that our approach achieved considerable improvement in spatial resolution and SNR in comparison to state-of-the-art MRI super-resolution reconstruction methods, while substantially reducing scan time as compared to direct high-resolution acquisitions.

Acknowledgements

Research reported in this publication was supported in part by the National Institute of Biomedical Imaging and Bioengineering, the National Institute of Neurological Disorders and Stroke, and the National Institute of Mental Health of the National Institutes of Health (NIH) under Award Numbers R01 NS079788, R01 EB019483, R01 EB018988, R01 NS106030, IDDRC U54 HD090255; a research grant from the Boston Children's Hospital Translational Research Program; a Technological Innovations in Neuroscience Award from the McKnight Foundation; a research grant from the Thrasher Research Fund; and a pilot grant from the National Multiple Sclerosis Society under Award Number PP-1905-34002. The content is solely the responsibility of the authors and does not necessarily represent the official views of the NIH, the National Multiple Sclerosis Society, the Boston Children's Hospital, the Thrasher Research Fund, or the McKnight Foundation.

References

1. Dubois, J., Alison, M., Counsell, S., Hertz-Pannier, L., Huppi, P. S., and Benders, M. (2020). MRI of the neonatal brain: A review of methodological challenges and neuroscientific advances. Journal of Magnetic Resonance Imaging.

2. Weisenfeld, N. and Warfield, S. (2009). Automatic segmentation of newborn brain MRI. NeuroImage 47, 564–572.

3. Afacan, O., Erem, B., Roby, D. P., Roth, N., Roth, A., Prabhu, S. P., et al. (2016). Evaluation of motion and its effect on brain magnetic resonance image quality in children. Pediatric Radiology 46, 1728–1735.

4. Plenge, E., Poot, D. H. J., Bernsen, M., Kotek, G., Houston, G., Wielopolski, P., et al. (2012). Super-resolution methods in MRI: Can they improve the trade-off between resolution, signal-to-noise ratio, and acquisition time? Magnetic Resonance in Medicine 68, 1983–1993.

5. Jurek, J., Kocinski, M., Materka, A., Elgalal, M., and Majos, A. (2020). CNN-based superresolution reconstruction of 3D MR images using thick-slice scans. Biocybern. Biomed. Eng. 40(1), 111–125.

6. Zhao, C., Carass, A., Dewey, B. E., and Prince, J. L. (2018). Self super-resolution for magnetic resonance images using deep networks. In: International Symposium on Biomedical Imaging, pp. 365–368.

7. Pham, C., Ducournau, A., Fablet, R., and Rousseau, F. (2017). Brain MRI super-resolution using deep 3D convolutional networks. In: International Symposium on Biomedical Imaging, pp. 197–200.

8. Chen, Y., Xie, Y., Zhou, Z., Shi, F., Christodoulou, A. G., and Li, D. (2018). Brain MRI super-resolution using 3D deep densely connected neural networks. In: International Symposium on Biomedical Imaging, pp. 739–742.

9. Sui, Y., Afacan, O., Gholipour, A., and Warfield, S. K. (2019). Isotropic MRI super-resolution reconstruction with multi-scale gradient field prior. In: Medical Image Computing and Computer-Assisted Intervention (MICCAI).

10. Dong, C., Loy, C. C., He, K., and Tang, X. (2016). Image super-resolution using deep convolutional networks. IEEE Trans. Pattern Anal. Mach. Intell. 38(2), 295–307.

Figures