2197

Comparison of deformable registration techniques for real-time MR-based motion correction in PET/MR

1Massachusetts General Hospital, Harvard Medical School, Boston, MA, United States, 2LTCI, Telecom Paris, Institut Polytechnique de Paris, Paris, France, 3GE Healthcare, Boston, MA, United States, 4GE Healthcare, Waukesha, WI, United States

Synopsis

Motion during acquisition of PET/MR data can severely degrade image quality. We have previously reported an MR-based motion correction technique capable of correcting for irregular motion patterns such as bulk motion and irregular respiratory motion. The method is based on a subspace MR model enabling reconstruction of real-time volumetric MR images (9 frames per second). In this work, we present improvements to the motion estimation method used to obtain motion fields from real-time MR images. We compare the performance of three registration packages on irregular motion patterns.

Introduction

Patient respiratory motion in PET/MR imaging can lead to image blurring and artifacts, which can affect tumor detectability, the accuracy of standardized uptake values (SUV), and target volume delineation for radiation therapy planning. Recently, a real-time MR imaging method for PET motion correction in PET/MR was shown to handle irregular respiratory motion and bulk motion [1]. It uses a subspace-based imaging method with radial stack-of-stars acquisitions to reconstruct high-resolution, high-frame-rate dynamic MR images from highly undersampled k-space data. These real-time MR images can be used to determine motion phases and motion fields for PET motion correction. That study, however, was limited by imperfect registration of the MR images. In this abstract, we evaluate registration of MR images using several available packages.

Methods

All PET/MR imaging data was collected from the Gordon Center’s Medical Imaging Database in accordance with institutional approval by the Institutional Review Board.

- PET/MR imaging and subspace-based image reconstruction:

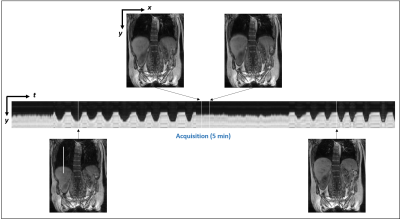

Data was collected from 18F-FDG PET/MR scans and reconstructed according to the protocol described by Marin et al. [1]. A spoiled GRE MR acquisition was performed with radial stack-of-stars sampling in the coronal plane. Three training lines and 32 kz-encoding lines were acquired per spoke angle. The subject was instructed to simulate an irregular respiratory pattern including deep and shallow breaths. Temporal basis functions were estimated using the singular value decomposition (SVD) of the training data acquired at every frame. Spatial coefficients were determined by solving an optimization problem that fits a partially separable model to the acquired undersampled (k,t)-space data while enforcing a spatio-temporal total variation (TV) sparsity constraint. Reconstruction was performed on an NVIDIA V100 SXM2 GPU with a total of 5120 CUDA cores and 16 GB RAM. Reconstruction time was around 40 s per slice and coil [2].
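The temporal-basis step can be illustrated with a minimal NumPy sketch. It assumes the training lines have been arranged into a Casorati matrix with one column per frame; the function name `estimate_temporal_basis`, the matrix sizes, and the rank of 4 are hypothetical choices for illustration and are not taken from the protocol in [1].

```python
import numpy as np

def estimate_temporal_basis(training, rank):
    """Estimate a temporal subspace from training data.

    training: (n_samples, n_frames) Casorati matrix (assumed layout).
    Returns the first `rank` right singular vectors, shape (rank, n_frames).
    """
    # Rows of vh are orthonormal and span the temporal (row) space.
    _, _, vh = np.linalg.svd(training, full_matrices=False)
    return vh[:rank]

# Synthetic demo: data that is exactly rank 4 in time (illustrative only).
rng = np.random.default_rng(0)
n_samples, n_frames, true_rank = 96, 200, 4
t = np.arange(n_frames)
temporal = np.stack(
    [np.cos(2 * np.pi * (k + 1) * t / n_frames) for k in range(true_rank)]
)
spatial = (rng.standard_normal((n_samples, true_rank))
           + 1j * rng.standard_normal((n_samples, true_rank)))
training = spatial @ temporal

basis = estimate_temporal_basis(training, rank=true_rank)

# Project the training data onto the estimated subspace and measure the misfit.
projected = training @ basis.conj().T @ basis
rel_residual = np.linalg.norm(training - projected) / np.linalg.norm(training)
print(basis.shape, rel_residual)
```

In a partially separable model, each frame is then a linear combination of spatial coefficient maps weighted by this basis, so only the spatial coefficients remain to be estimated from the undersampled data.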

- MR motion estimation, image registration:

Real-time MR images were reconstructed and binned into a small number of bins (12) corresponding to different motion phases. Bins were assigned based on the position of the right lobe of the liver, and combined MR images were formed for each bin. Image registration of MR images was performed using the Michigan Image Reconstruction Toolbox (MIRT) [3], elastix [4], and Q.Freeze2 [5]. MIRT is a collection of open-source algorithms including B-spline-based image registration using an intensity-based data fidelity term with regularization encouraging invertible deformations. Elastix is open-source software for medical image registration based on the Insight Segmentation and Registration Toolkit (ITK). Elastix registration was performed between pairs of bins using parameters similar to those used in [6], with a mutual information metric and a stochastic gradient solver. Q.Freeze2 is a tool developed by GE Healthcare for motion correction of PET in PET/CT, suitable for robust estimation of PET activity under large motion [5]. Q.Freeze2 parameters were further optimized for MR registration.
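The binning step can be sketched as follows. This is a hedged illustration, not the procedure used in the study: it assumes a scalar liver-position trace per frame, equal-population (quantile) bins, and simple averaging to form the combined image for each bin. The function names and the synthetic data are hypothetical.

```python
import numpy as np

def bin_frames_by_position(position, n_bins=12):
    """Assign each frame a bin label in 0..n_bins-1 from a 1D position trace.

    Assumption: equal-population bins defined by quantiles of the trace.
    """
    edges = np.quantile(position, np.linspace(0.0, 1.0, n_bins + 1))
    # Use interior edges only so that labels run from 0 to n_bins - 1.
    return np.digitize(position, edges[1:-1])

def combine_frames(frames, labels, n_bins=12):
    """Average all frames that fall into the same bin (assumed combination rule)."""
    return np.stack([frames[labels == b].mean(axis=0) for b in range(n_bins)])

# Synthetic demo: irregular breathing trace and small random "images".
rng = np.random.default_rng(1)
n_frames = 240
time = np.arange(n_frames) / 9.0  # 9 frames per second
position = (10 * np.sin(2 * np.pi * 0.25 * time)
            + 4 * np.sin(2 * np.pi * 0.07 * time)
            + rng.normal(0.0, 0.5, n_frames))
frames = rng.standard_normal((n_frames, 8, 8))

labels = bin_frames_by_position(position)
combined = combine_frames(frames, labels)
counts = np.bincount(labels, minlength=12)
print(combined.shape, counts)
```

Quantile edges keep the number of frames per bin roughly equal, which helps when deep breaths are rare; fixed-width amplitude bins would be an equally plausible choice.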

- Evaluation of MR image registration:

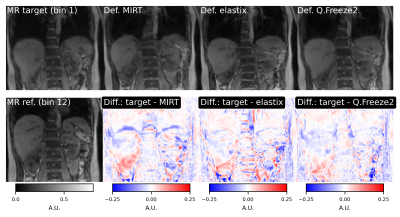

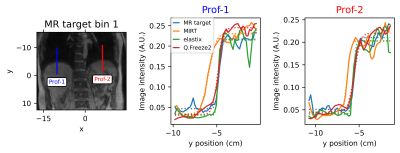

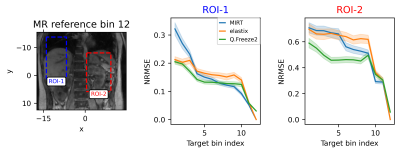

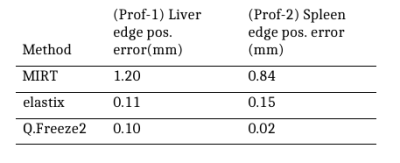

Registration was assessed by comparing the warped images obtained with the three registration packages to the original MR image at a given respiratory phase bin. Registration methods were also compared quantitatively by measuring the normalized root mean square error (NRMSE) in regions of interest. An additional metric was used to assess the alignment of organs in the warped images, which is critical for attenuation correction in PET reconstruction. 1D profiles were drawn across interfaces between low and high PET activity regions (e.g., lung/liver), and an error function model was fitted to the profiles to estimate the edge position.
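Both metrics can be sketched in a few lines of NumPy. Several details here are assumptions rather than the study's definitions: the NRMSE is normalized by the RMS of the reference within the ROI, the edge model is `offset + amplitude * erf((x - x0) / (sqrt(2) * width))`, and the fit uses a coarse grid search over `x0` and `width` with the linear terms solved by least squares instead of a general nonlinear optimizer.

```python
import math
import numpy as np

_erf = np.vectorize(math.erf)

def nrmse(warped, reference, mask):
    """RMSE inside a boolean ROI mask, normalized by the RMS of the reference (assumed)."""
    diff = warped[mask] - reference[mask]
    return np.sqrt(np.mean(diff ** 2)) / np.sqrt(np.mean(reference[mask] ** 2))

def fit_edge(x, profile, widths=None):
    """Fit offset + amplitude * erf((x - x0) / (sqrt(2) * width)) to a 1D profile.

    Returns (x0, width) minimizing the squared error over a coarse grid.
    """
    if widths is None:
        widths = np.linspace(0.5, 5.0, 10)
    best = None
    for x0 in np.linspace(x.min(), x.max(), 396):
        for w in widths:
            step = _erf((x - x0) / (math.sqrt(2.0) * w))
            design = np.column_stack([np.ones_like(x), step])
            # Offset and amplitude enter linearly, so solve them in closed form.
            coef, *_ = np.linalg.lstsq(design, profile, rcond=None)
            sse = np.sum((design @ coef - profile) ** 2)
            if best is None or sse < best[0]:
                best = (sse, x0, w)
    return best[1], best[2]

# Synthetic demo: a noisy lung/liver-like edge at 17.3 (arbitrary units).
rng = np.random.default_rng(2)
x = np.arange(0.0, 40.0, 0.5)
true_x0 = 17.3
profile = (100 + 80 * _erf((x - true_x0) / (math.sqrt(2.0) * 1.5))
           + rng.normal(0.0, 1.0, x.size))
x0_hat, width_hat = fit_edge(x, profile)

reference = rng.random((16, 16)) + 1.0
mask = np.zeros((16, 16), dtype=bool)
mask[4:12, 4:12] = True
err_same = nrmse(reference, reference, mask)
err_shift = nrmse(np.roll(reference, 1, axis=0), reference, mask)
print(x0_hat, width_hat, err_same, err_shift)
```

Comparing the fitted `x0` between a warped image and its target gives a signed edge displacement, which complements the NRMSE because it is insensitive to intensity differences away from the interface.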

Results

Representative frames of real-time reconstructed MR images are shown in Fig. 1. A comparison of MR images and warped images is reported in Fig. 2. The figure presents MR images and corresponding difference images showing the residual error after registration. MIRT registration fails to capture the full extent of liver displacement and results in distortions in the spine and left kidney. The elastix registration better captures the amplitude of the liver displacement but also results in distorted kidneys and liver. Q.Freeze2 best captures the motion in the liver and kidneys, despite some distortion between the spine and the edge of the left kidney. Fig. 3 shows 1D profiles through the liver and spleen that are used to estimate the edge position. Estimated edge positions are reported in Table 1. While MIRT introduces some error in the edge position, both elastix and Q.Freeze2 accurately preserve the edge location. Finally, Fig. 4 shows the residual error (NRMSE) between MR images and warped images for each bin. Regardless of the method, the curves show lower errors for bins closer to the reference bin (bin 12). Note that, unlike MIRT and elastix, Q.Freeze2 does not reach zero error for the reference bin. This is expected, since MIRT and elastix perform registration for pairs of bins while Q.Freeze2 performs joint, reference-less registration of all images using a temporal penalty. For early bins, Q.Freeze2 results in lower error, especially for ROI-2, which contains more structure than ROI-1.

Conclusion

We have evaluated three packages for registration in the context of MR-based motion-corrected PET reconstruction. We have found that Q.Freeze2 can provide high-quality motion fields, resulting in fewer distortions than the other packages.

Acknowledgements

This work was supported in part by the National Institutes of Health under award numbers T32EB013180, R01CA165221, R01HL118261, R21MH121812, R01HL137230, K01EB030045, and P41EB022544.

References

[1] T. Marin, Y. Djebra, P. K. Han, Y. Chemli, I. Bloch, G. El Fakhri, J. Ouyang, Y. Petibon, and C. Ma, “Motion correction for PET data using subspace-based real-time MR imaging in simultaneous PET/MR,” Physics in Medicine and Biology, vol. 65, no. 23, p. 235022, 2020.

[2] F. Ong and M. Lustig, “SigPy: A Python Package for High Performance Iterative Reconstruction,” in Proc. ISMRM, 2019.

[3] S. Y. Chun and J. A. Fessler, “A simple regularizer for B-spline nonrigid image registration that encourages local invertibility,” IEEE Journal of Selected Topics in Signal Processing, vol. 3, no. 1, pp. 159–169, 2009.

[4] S. Klein, M. Staring, K. Murphy, M. A. Viergever, and J. P. W. Pluim, “Elastix: a toolbox for intensity-based medical image registration,” IEEE Transactions on Medical Imaging, vol. 29, no. 1, pp. 196–205, 2010.

[5] S. Thiruvenkadam, K. S. Shriram, R. M. Manjeshwar, and S. D. Wollenweber, “Robust PET Motion Correction Using Non-local Spatio-temporal Priors,” in Medical Image Computing and Computer Assisted Intervention, N. Navab, J. Hornegger, W. M. Wells, and A. Frangi, Eds. Cham: Springer International Publishing, 2015, pp. 643–650.

[6] W. Huizinga, D. H. J. Poot, J.-M. Guyader, R. Klaassen, B. F. Coolen, M. van Kranenburg, R.-J. M. van Geuns, A. Uitterdijk, M. Polfliet, J. Vandemeulebroucke, A. Leemans, W. J. Niessen, and S. Klein, “PCA-based groupwise image registration for quantitative MRI,” Medical Image Analysis, vol. 29, pp. 65–78, 2016.

Figures