2181

The sensitivity of classical and deep image similarity metrics to MR acquisition parameters

1Advanced Clinical Imaging Technology, Siemens Healthineers, Lausanne, Switzerland, 2Department of Radiology, Lausanne University Hospital and University of Lausanne, Lausanne, Switzerland, 3LTS5, Ecole Polytechnique Fédérale de Lausanne, Lausanne, Switzerland

Synopsis

Post-acquisition data harmonization promises to unlock multi-site data for deep learning applications. Harmonization, in turn, rests on measuring image similarity. Here, we investigate the sensitivity of several similarity measures to fourteen acquisition protocols of a 3D T1-weighted (MPRAGE) contrast. Standard similarity metrics, a deep perceptual loss and a segmentation loss are computed between image pairs and compared. The perceptual loss is highly correlated with L1 distance and outperforms the other metrics in detecting acquisition parameter changes. The segmentation loss, however, is poorly correlated with the other metrics, suggesting that these image similarity metrics alone are not sufficient to harmonize data for clinical applications.

Introduction

Recent advances in image processing and computer vision have led to the emergence of various machine learning–based clinical decision support tools for MRI [1,2]. The deployment of such tools across multiple institutions is, however, hindered by the need for large training datasets. In particular, data heterogeneity has so far limited the amount of usable training data and thus prevented machine learning algorithms from reaching their full potential in medical imaging. In MRI, data heterogeneity arises from differences in both experimental setup (e.g. scanner vendor, field strength, acquisition protocol) and software (e.g. version of the reconstruction algorithm), leading to large inter-site variability. Data harmonization tackles this limitation by optimizing image similarity, for example using generative adversarial networks [3,4,5]. To better understand image similarity in MRI, we evaluate the sensitivity of several similarity metrics to different acquisition protocols of a T1-weighted 3D Magnetisation-Prepared RApid Gradient Echo (MPRAGE) contrast, which is widely used in clinical workups. We investigate L1 distance, Structural Similarity (SSIM) [6], and a Learned Perceptual Image Patch Similarity (LPIPS) [7] metric based on a perceptual loss from a pretrained VGG16 deep neural network. Further, these metrics are compared to a segmentation loss designed to reflect the robustness of automated volumetric estimation of the thalamus, a bilateral brain structure whose informative clusters vary in contrast-to-noise ratio across acquisition parameters and which is particularly affected by partial volume effects.

Methods

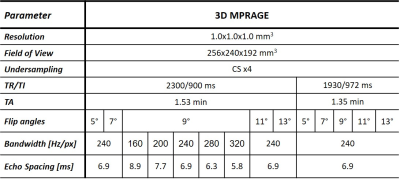

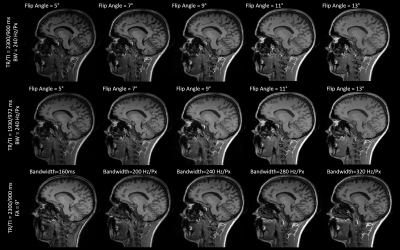

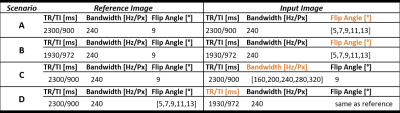

Five healthy volunteers (two males, age range 24 to 32 years) underwent an MR examination at 3 T (MAGNETOM Skyra Fit, Siemens Healthcare, Erlangen, Germany). Fourteen T1-weighted MPRAGE sequences were acquired with different combinations of repetition and inversion times (TR/TI), flip angles, and read-out bandwidths. Four-fold accelerated compressed sensing was used to speed up the acquisition [8]. Table 1 reports the complete list of acquisition protocols, whilst Figure 1 shows the resulting contrasts for one example subject.

Four experimental scenarios were defined to explore the impact of each acquisition parameter on image similarity. In scenarios (A) and (B), we investigated the impact of different flip angles for a given read-out bandwidth (240 Hz/px) and TR/TI (2300/900 ms for scenario (A) and 1930/972 ms for scenario (B)), compared to a reference flip angle of 9°. In scenario (C), we considered acquisitions with different read-out bandwidths for a fixed TR/TI (2300/900 ms) and flip angle (9°), and compared them to a reference read-out bandwidth of 240 Hz/px. Finally, in scenario (D), we investigated pair-wise TR/TI differences at a given flip angle (range 5° to 13°) for a fixed bandwidth (240 Hz/px). Table 2 reports the four scenarios and the corresponding reference images.

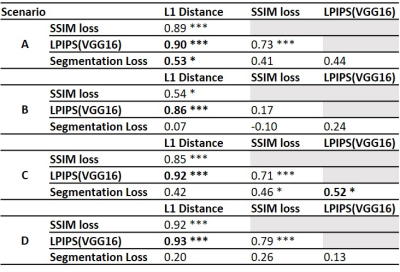

MorphoBox [9], a brain segmentation prototype algorithm, was used to estimate the thalamus volume. The segmentation loss was defined as the absolute relative error in thalamus volume with respect to the reference image. For the image similarity metrics, sixty central sagittal slices were selected to avoid marginal effects of background voxels, and the similarity was averaged over these slices. To ensure that lower loss values reflected higher similarity, the inverse SSIM was considered. For each scenario, we investigated how the similarity losses varied with differences in acquisition parameters. Furthermore, the Spearman correlation between metrics was evaluated. Significance levels were estimated from permutation tests and adjusted for multiple comparisons using false discovery rate (FDR) correction.
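As an illustration only, the losses described above could be computed along the lines of the following minimal sketch. It is not the code used in this study. The function names, the choice of axis 0 as the sagittal (left-right) axis, the use of a mean absolute difference for the L1 distance, and the interpretation of "inverse SSIM" as 1 - SSIM are all assumptions made for the example.

```python
import numpy as np


def l1_distance(ref_slice, img_slice):
    """Mean absolute intensity difference between two 2D slices."""
    ref = np.asarray(ref_slice, dtype=float)
    img = np.asarray(img_slice, dtype=float)
    return float(np.mean(np.abs(ref - img)))


def mean_slice_loss(ref_vol, img_vol, slice_loss, n_central=60, axis=0):
    """Average a 2D loss over the n_central central slices along `axis`.

    Assumption: `axis` indexes sagittal slices; the real axis depends on
    how the volumes are stored.
    """
    n = ref_vol.shape[axis]
    start = max((n - n_central) // 2, 0)
    stop = min(start + n_central, n)
    losses = [
        slice_loss(np.take(ref_vol, i, axis=axis), np.take(img_vol, i, axis=axis))
        for i in range(start, stop)
    ]
    return float(np.mean(losses))


def inverse_ssim(ssim_value):
    """Turn SSIM into a loss. Assumption: 'inverse SSIM' means 1 - SSIM."""
    return 1.0 - ssim_value


def segmentation_loss(ref_volume, volume):
    """Absolute relative error in thalamus volume w.r.t. the reference."""
    return abs(volume - ref_volume) / ref_volume
```

With these helpers, `mean_slice_loss(ref, img, l1_distance)` would return one L1 value per image pair, while `segmentation_loss` operates on the two volume estimates returned by the segmentation tool.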

Results

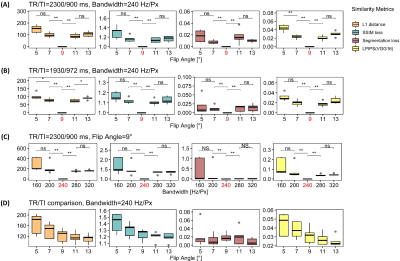

Figure 2 shows that for scenarios (A) and (B), all similarity losses except the segmentation loss follow a V-shaped distribution centred around the reference flip angle. For scenario (A), the LPIPS loss is the only metric that shows a significant difference in similarity between low flip angles.

Similar observations can be made for scenario (C), with an overall greater variance for the lowest read-out bandwidth. Further, differences between high bandwidths are not significant.

In scenario (D), larger flip angles are associated with greater similarity for all metrics except the segmentation loss.

Table 3 reports the Spearman correlations between similarity losses and corresponding significance levels. The perceptual loss is highly correlated with L1 distance in all scenarios. Overall, the correlations between the segmentation loss and other metrics are poor and highly variable between scenarios.
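The statistical analysis behind such a table could be sketched as follows. This is a minimal NumPy-only illustration, not the analysis code of this study: the function names, the two-sided permutation scheme, the number of permutations, and the choice of the Benjamini-Hochberg procedure for FDR adjustment are assumptions, since the abstract does not specify them.

```python
import numpy as np


def average_ranks(x):
    """1-based ranks, with tied values sharing their mean rank."""
    x = np.asarray(x, dtype=float)
    order = np.argsort(x, kind="mergesort")
    ranks = np.empty(len(x), dtype=float)
    ranks[order] = np.arange(1, len(x) + 1)
    for value in np.unique(x):
        mask = x == value
        ranks[mask] = ranks[mask].mean()
    return ranks


def spearman(x, y):
    """Spearman correlation: Pearson correlation of the ranks."""
    return float(np.corrcoef(average_ranks(x), average_ranks(y))[0, 1])


def permutation_pvalue(x, y, n_perm=2000, seed=0):
    """Two-sided p-value obtained by shuffling y (assumed scheme)."""
    rng = np.random.default_rng(seed)
    y = np.asarray(y)
    observed = abs(spearman(x, y))
    count = sum(
        abs(spearman(x, rng.permutation(y))) >= observed for _ in range(n_perm)
    )
    # Add-one correction so the estimated p-value is never exactly zero.
    return (count + 1) / (n_perm + 1)


def benjamini_hochberg(pvalues):
    """FDR-adjusted p-values (Benjamini-Hochberg, assumed procedure)."""
    p = np.asarray(pvalues, dtype=float)
    m = len(p)
    order = np.argsort(p)
    scaled = p[order] * m / np.arange(1, m + 1)
    # Enforce monotonicity, starting from the largest p-value.
    adjusted = np.minimum.accumulate(scaled[::-1])[::-1]
    out = np.empty(m)
    out[order] = np.clip(adjusted, 0.0, 1.0)
    return out
```

In this sketch, one p-value would be computed per pair of metrics and per scenario, and all of them would then be passed together to `benjamini_hochberg`.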

Discussion and Conclusion

Overall, the image similarity metrics succeeded in detecting slight changes in acquisition protocols. Compared to SSIM, L1 distance and LPIPS showed more significant differences and were characterized by lower variability. Altogether, these results suggest that L1 distance and perceptual loss are the most sensitive to acquisition parameter changes in MPRAGE. Given the high correlation between the two, the utility of adding a perceptual loss to L1 distance is debatable and should be further investigated. Notably, voxel size and slice thickness were constant in our study, meaning that contrast and SNR were the main observable changes to the images. In addition, the variability of the correlation between SSIM and other metrics across scenarios suggests that the optimal design of hybrid losses for harmonization might depend on the nature of the acquisition parameter differences between sites. This finding should be carefully considered for harmonization tasks in MRI clinical applications.

The poor correlation with the segmentation loss suggests that, although the brain segmentation algorithm used here is sensitive to changes in acquisition parameters, the segmentation loss varies differently from the image similarity losses. This finding suggests that an image similarity loss alone might not be sufficient to optimize data harmonization for a given clinical application (provided that the extracted information is the clinically valuable evidence), and that more application-driven losses should be used.

Acknowledgements

No acknowledgement found.

References

1. Akkus, Z., et al. (2017). Deep learning for brain MRI segmentation: state of the art and future directions. Journal of Digital Imaging, 30(4), 449-459.

2. Mazurowski, M. A., et al. (2019). Deep learning in radiology: An overview of the concepts and a survey of the state of the art with focus on MRI. Journal of Magnetic Resonance Imaging, 49(4), 939-954.

3. Goodfellow, I., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., & Bengio, Y. (2014). Generative adversarial nets. In Advances in neural information processing systems (pp. 2672-2680).

4. Modanwal, G., Vellal, A., Buda, M., & Mazurowski, M. A. (2020). MRI image harmonization using cycle-consistent generative adversarial network. In Medical Imaging 2020: Computer-Aided Diagnosis (Vol. 11314, p. 1131413). International Society for Optics and Photonics.

5. Zhong, J., Wang, Y., Li, J., Xue, X., Liu, S., Wang, M. & Li, X. (2020). Inter-site harmonization based on dual generative adversarial networks for diffusion tensor imaging: application to neonatal white matter development. BioMedical Engineering OnLine, 19(1), 1-18.

6. Wang, Z., Bovik, A. C., Sheikh, H. R., & Simoncelli, E. P. (2004). Image quality assessment: from error visibility to structural similarity. IEEE transactions on image processing, 13(4), 600-612.

7. Zhang, R., Isola, P., Efros, A. A., Shechtman, E., & Wang, O. (2018). The unreasonable effectiveness of deep features as a perceptual metric. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 586-595).

8. Mussard, E., Hilbert, T., Forman, C., Meuli, R., Thiran, J. P., & Kober, T. (2020). Accelerated MP2RAGE imaging using Cartesian phyllotaxis readout and compressed sensing reconstruction. Magnetic Resonance in Medicine.

9. Schmitter, D., Roche, A., Maréchal, B., Ribes, D., Abdulkadir, A., Bach-Cuadra, M., ... & Meuli, R. (2015). An evaluation of volume-based morphometry for prediction of mild cognitive impairment and Alzheimer's disease. NeuroImage: Clinical, 7, 7-17.
