2169

A self-supervised deep learning approach to synthesize weighted images and T1, T2, and PD parametric maps based on MR physics priors

1University of Valladolid, Valladolid, Spain; 2Fundación Científica AECC, Valladolid, Spain

Synopsis

Synthetic MRI is gaining popularity due to its ability to generate realistic MR images. However, T1, T2, and PD maps are rarely used, even though they provide the information needed to synthesize any image modality, either by applying the appropriate theoretical equations derived from MR physics or through involved simulation procedures. In this work, we propose an extension of a state-of-the-art standard CNN to a self-supervised CNN by including MR physics priors to tackle confounding factors not considered in the equations, while bypassing the need for costly simulations. Our approach yields both realistic maps and weighted images from real data.

Purpose

Synthetic MRI has gained popularity due to its ability to generate realistic MR images. The synthesized images may improve diagnostic reliability without extra acquisition time and facilitate physician training [1]. Various synthetic MRI approaches [2-4] have been proposed that learn a rigid mapping between different image modalities, e.g., the synthesis of T1-weighted (T1w) images from T2-weighted (T2w) images [4]. However, these approaches do not take advantage of the quantitative T1, T2, and PD parametric maps, which would ideally allow for the synthesis of any image modality by applying the appropriate theoretical equations derived from MR physics or by performing costly simulations. Despite their potential, these parametric maps are rarely obtained in clinical routine because each map requires a specific and lengthy relaxometry sequence [5,6]. To overcome this limitation, Ref. [7] proposed a method to compute T1, T2, and PD maps from a T1w and a T2w image using a convolutional neural network (CNN) trained exclusively with synthetic data. However, the theoretical equations do not account for additional sequence parameters and confounding factors [8]. Consequently, images synthesized from these equations and maps may differ from the acquired images. Many of these aspects could be tackled with detailed and costly simulations, but non-ideal conditions would still remain [8]. We therefore propose an extension of the previous standard CNN to a self-supervised CNN by including MR physics priors and re-training it with a few acquired weighted images. In this way, we tailor the parametric maps to the theoretical equations, counteracting the effects not considered and enabling the synthesis of realistic weighted images.

Methods

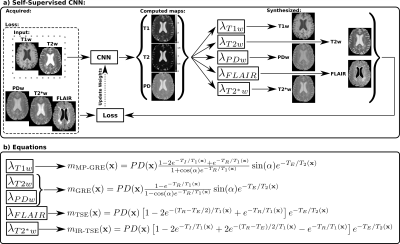

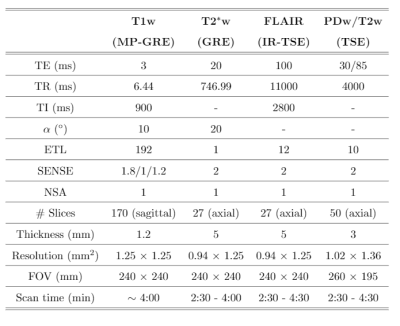

Acquisitions and preprocessing: Eight patients with suspected Alzheimer’s disease were recruited for a brain MRI protocol with IRB approval and written informed consent. Brain MRI was acquired with a 32-channel head coil on a 3T scanner (Philips, Best, The Netherlands). Table 1 gives details of the protocol and the five image modalities acquired. Subsequently, all image modalities were affine-registered to the FLAIR using FSL [9]. All images were then skull-stripped and cropped to the dimensions of the network’s input layer [7] (240x176 pixels). Finally, for optimization purposes, we normalized each weighted image by dividing it by its average intensity, excluding the background.

CNN training: Starting from the pre-trained encoder/decoder CNN of Ref. [7], which synthesizes T1, T2, and PD maps from a T1w and a T2w image, we converted it into a self-supervised CNN by adding lambda layers with fixed weights after the decoders’ outputs (Figure 1a). Each of these layers implements a different theoretical equation corresponding to a particular sequence [10], which describes the MR intensity as a function of the scanner parameters and the T1, T2, and PD maps. As a result, the self-supervised CNN can synthesize any desired weighted image. In this case, a T1w and a T2w image (eight minutes of acquisition) were input to the CNN, and five output lambda layers were considered for the synthesis of T1w, T2w, PDw, T2*w, and FLAIR images. The corresponding equations and parameters are shown in Figure 1b and Table 1, respectively. The loss function was computed as the mean absolute error between the synthesized weighted images and their corresponding acquired images. The proposed self-supervised CNN was then re-trained using the Adam optimizer with a batch size of eight images. Of the eight patients, four were used for re-training, two for validation with early stopping to avoid overfitting, and two for testing.
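To make the role of the fixed-weight physics layers concrete, the following NumPy sketch applies textbook signal equations to parametric maps and evaluates a mean absolute error on foreground-normalized images. It is an illustration only, not the authors' implementation: the equations actually used are those of Figure 1b and may differ, the spin-echo and inversion-recovery forms below are standard textbook approximations, and all function names, the `EPS` guard, the toy maps, and the TR/TE/TI values are hypothetical. The T2*w layer is not covered.

```python
import numpy as np

EPS = 1e-6  # assumption: guards against division by zero in background voxels


def normalize_foreground(image, mask):
    """Divide an image by its mean intensity over the foreground mask."""
    return image / image[mask].mean()


def spin_echo(pd, t1, t2, tr, te):
    """Textbook spin-echo signal: PD * (1 - exp(-TR/T1)) * exp(-TE/T2)."""
    t1 = np.maximum(t1, EPS)
    t2 = np.maximum(t2, EPS)
    return pd * (1.0 - np.exp(-tr / t1)) * np.exp(-te / t2)


def flair(pd, t1, t2, tr, te, ti):
    """Textbook inversion-recovery magnitude signal with inversion time TI."""
    t1 = np.maximum(t1, EPS)
    t2 = np.maximum(t2, EPS)
    recovery = 1.0 - 2.0 * np.exp(-ti / t1) + np.exp(-tr / t1)
    return pd * np.abs(recovery) * np.exp(-te / t2)


def mean_absolute_error(synthesized, acquired):
    """Mean absolute error between two images of the same shape."""
    return float(np.mean(np.abs(synthesized - acquired)))


# Toy 2x2 maps (hypothetical values, times in ms); the last voxel is background.
pd = np.array([[0.8, 0.7], [1.0, 0.0]])
t1 = np.array([[900.0, 1400.0], [4000.0, 0.0]])
t2 = np.array([[70.0, 100.0], [2000.0, 0.0]])
mask = pd > 0

# Each call plays the role of one fixed-weight output layer.
t1w = spin_echo(pd, t1, t2, tr=500.0, te=10.0)
t2w = spin_echo(pd, t1, t2, tr=4000.0, te=100.0)
fl = flair(pd, t1, t2, tr=10000.0, te=120.0, ti=2700.0)

# A hypothetical "acquired" image that differs only by a global scale factor:
# after foreground normalization the loss is (numerically) zero.
acquired_t1w = 1.1 * t1w
loss = mean_absolute_error(
    normalize_foreground(t1w, mask), normalize_foreground(acquired_t1w, mask)
)
print("MAE after normalization:", loss)
```

Because the equations contain no trainable parameters, the loss gradient in a framework with automatic differentiation would flow through them back to the map-producing decoders, which is what allows re-training without reference maps. The normalization step makes the loss insensitive to the arbitrary global scale of acquired intensities, as the toy example shows.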

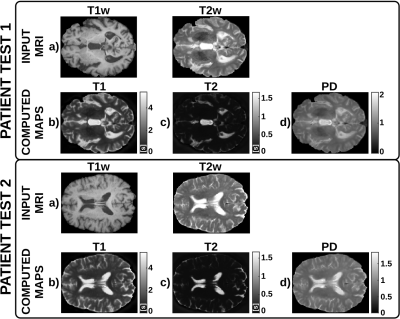

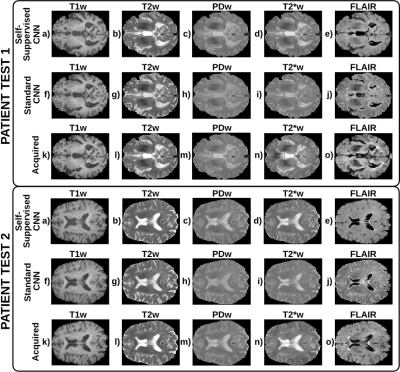

CNN testing: For the T1w and T2w images of the two test patients, the self-supervised CNN provided the computed T1, T2, and PD maps and the synthesized T1w, T2w, PDw, T2*w, and FLAIR images (see Figure 1a). The synthesis quality of the weighted outputs was evaluated with the following metrics [3]: mean squared error (MSE), structural similarity index (SSIM), and peak signal-to-noise ratio (PSNR).
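The three metrics can be sketched in a few lines of NumPy. This is a hedged illustration, not the evaluation code used in this work: the function names and the `data_range` argument are assumptions, and `global_ssim` is a simplified single-window variant computed over the whole image, whereas SSIM is normally averaged over local windows (as done, for example, by `skimage.metrics.structural_similarity` in scikit-image). The test images are random and purely illustrative.

```python
import numpy as np


def mse(reference, test):
    """Mean squared error between two images of the same shape."""
    return float(np.mean((reference - test) ** 2))


def psnr(reference, test, data_range):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    err = mse(reference, test)
    if err == 0.0:
        return float("inf")
    return float(10.0 * np.log10(data_range ** 2 / err))


def global_ssim(reference, test, data_range, k1=0.01, k2=0.03):
    """Single-window SSIM over the whole image (simplified variant)."""
    c1 = (k1 * data_range) ** 2
    c2 = (k2 * data_range) ** 2
    mu_x, mu_y = reference.mean(), test.mean()
    var_x, var_y = reference.var(), test.var()
    cov = np.mean((reference - mu_x) * (test - mu_y))
    numerator = (2 * mu_x * mu_y + c1) * (2 * cov + c2)
    denominator = (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2)
    return float(numerator / denominator)


# Hypothetical example: a random "acquired" slice and a noisy "synthesized" one.
rng = np.random.default_rng(0)
acquired = rng.random((240, 176))
synthesized = acquired + 0.05 * rng.standard_normal(acquired.shape)

print("MSE :", mse(acquired, synthesized))
print("PSNR:", psnr(acquired, synthesized, data_range=1.0))
print("SSIM:", global_ssim(acquired, synthesized, data_range=1.0))
```

Note that lower is better for MSE, whereas higher is better for SSIM and PSNR, which matters when comparing methods across the three metrics.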

Results

Figure 2 shows a representative slice of the T1, T2, and PD maps computed for each of the test patients. Figure 3 shows a representative slice of the synthesized weighted images and their corresponding acquired images for each of the test patients. Finally, boxplots of the evaluation metrics are shown in Figure 4.

Discussion

These preliminary results show the potential of the proposed self-supervised deep learning approach to compute T1, T2, and PD parametric maps within the expected ranges for 3T scanners and to synthesize any weighted image by means of the MR physics priors included in the CNN. The self-supervised CNN achieves better metrics than the standard CNN [7], particularly for the PDw, T2*w, and FLAIR images, with statistically significant differences in most image modalities. Further, the metrics obtained are comparable with those reported for state-of-the-art synthetic MRI methods [2-4,7] that generate a synthetic image from one or more inputs. Note that, using the proposed approach, other modalities unseen by the network could be synthesized from the computed parametric maps. Nevertheless, this work has limitations: it is a proof of concept with limited training and testing data, so further validation with larger databases is required.

Conclusion

We proposed an approach for the synthesis of realistic weighted images based on the computation of T1, T2, and PD parametric maps, which needs only two input images. Results suggest its utility for quantitative MRI in clinically viable times as well as its applicability to the synthesis of any image modality.

Acknowledgements

The authors acknowledge the Asociación Española Contra el Cáncer (AECC), Junta Provincial de Valladolid, and the Fundación Científica AECC for the predoctoral fellowship of the first author. The authors also acknowledge grants TEC2017-82408-R and RTI2018-094569-B-I00 from the Ministerio de Ciencia e Innovación of Spain.

References

1. Treceño-Fernández D, et al. A Web-based Educational Magnetic Resonance Simulator: Design, Implementation and Testing. J Med Syst. 2020;44(1):9.

2. Sohail M, et al. Unpaired Multi-contrast MR Image Synthesis Using Generative Adversarial Networks. In proceedings of SASHIMI. Springer, Cham. 2019;11827:22–31.

3. Chartsias A, et al. Multimodal MR synthesis via modality-invariant latent representation. IEEE Trans Med Imaging. 2018;37(3):803–814.

4. Dar SUH, et al. Image synthesis in multi-contrast MRI with conditional generative adversarial networks. IEEE Trans Med Imaging. 2019;38(10):2375–2388.

5. Deoni SCL, et al. High-resolution T1 and T2 mapping of the brain in a clinically acceptable time with DESPOT1 and DESPOT2. Magn Reson Med. 2005;53(1):237–241.

6. Ramos-Llordén G, et al. NOVIFAST: A fast algorithm for accurate and precise VFA MRI T1 mapping. IEEE Trans Med Imaging. 2018;37(11):2414–2427.

7. Moya-Sáez E, et al. CNN-based synthesis of T1, T2 and PD parametric maps of the brain with a minimal input feeding. In proceedings of the ISMRM & SMRT Virtual Conference & Exhibition. 2020; 3806.

8. Jog A, et al. MR image synthesis by contrast learning on neighborhood ensembles. Med Image Anal. 2015;24(1):63–76.

9. Jenkinson M, et al. FSL. NeuroImage. 2012;62(2):782–790.

10. Bernstein MA, et al. Handbook of MRI pulse sequences. Elsevier. 2004.

Figures