2015

Impact of training size on deep learning performance in in vivo 1H MRS

¹National Institute of Mental Health, National Institutes of Health, Bethesda, MD, United States

Synopsis

Deep learning has found an increasing number of applications in MRS. Nevertheless, few studies have addressed the impact of training data size on deep learning performance. In this work, we used density matrix simulation to generate a very large training dataset (70,000 spectra). A comprehensive comparison was then performed to evaluate deep learning performance with different training data sizes.

Introduction

Recent studies have demonstrated that deep learning techniques are applicable to MRS in addressing several issues such as spectral restoration from deteriorated signals¹, ghosting artifact removal², frequency and phase correction³, and metabolite quantification⁴. However, training data size is often selected empirically or arbitrarily based on data availability and computing resources. The training data size required for adequate training of a model has not been sufficiently investigated. In this study, we evaluated the impact of training data size on the performance of deep learning in solving the spectral restoration problem, because in vivo 1H MRS data frequently suffer from low SNR and broad linewidth.

Materials and Methods

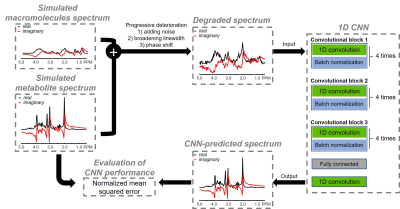

Data generation: Full 3D density matrix simulations were used to generate 20 metabolite spectra, including alanine, ascorbate, aspartate, creatine, gamma-aminobutyric acid, glucose, glutamate, glutamine, glutathione, glycerylphosphorylcholine, glycine, lactate, myo-inositol, N-acetylaspartate, N-acetylaspartylglutamate, phosphocholine, phosphocreatine, phosphorylethanolamine, scyllo-inositol, and taurine, for a PRESS sequence at 3 T. Simulation parameters were: TE = 35 ms (TE1 = 14 ms, TE2 = 21 ms); spectral bandwidth = 4000 Hz; Shinnar-Le Roux (SLR) optimized excitation pulse (duration = 6 ms, bandwidth = 2000 Hz); and SLR optimized refocusing pulse (duration = 5 ms, bandwidth = 2000 Hz). Macromolecule signals were modeled using 13 Gaussian components.⁵ In total, 75,000 spectra were generated by randomly varying both the relative concentration of each metabolite and the amplitude of each macromolecule signal; these spectra were considered the ground truth dataset. Subsequently, each spectrum was progressively degraded by broadening the linewidth and adding white noise and then assigned to the test dataset. SNR was measured as the ratio of the signal intensity of total NAA to twice the standard deviation of the spectral noise between 8 ppm and 10 ppm. Empirically, an SNR of 10 was selected as the threshold for distinguishing high SNR from low SNR.

Convolutional neural network: Figure 1 shows the convolutional neural network (CNN) architecture. Each convolutional block consisted of four pairs of a 1D convolutional layer and a batch normalization layer. The exponential linear unit (ELU) activation function was used throughout the network. Bayesian optimization⁶ was used to find optimal network parameters, including the number of layers per convolutional block, kernel size, and learning rate. This process took approximately 25 hours.
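The degradation and SNR measurement described under data generation can be illustrated with a minimal NumPy sketch. It is not the authors' code. Only the 4000 Hz bandwidth, the 3 T field, the 8 ppm to 10 ppm noise window, and the factor of two in the SNR definition come from the text. The number of points, the water-centred ppm axis and its sign convention, the exponential (Lorentzian) form of the broadening, the 1.9 ppm to 2.1 ppm window for total NAA, the use of peak height as signal intensity, and all function names are assumptions made for illustration.

```python
import numpy as np

BANDWIDTH_HZ = 4000.0   # spectral bandwidth stated in the text
N_POINTS = 2048         # assumption: number of complex points per FID
LARMOR_MHZ = 127.7      # approximate 1H frequency at 3 T
CENTER_PPM = 4.7        # assumption: spectrum centred on the water resonance


def ppm_axis(n_points=N_POINTS):
    """Chemical-shift axis matching the bin order of to_spectrum()."""
    freq_hz = np.fft.fftshift(np.fft.fftfreq(n_points, d=1.0 / BANDWIDTH_HZ))
    return CENTER_PPM + freq_hz / LARMOR_MHZ


def to_spectrum(fid):
    """Fourier transform a time-domain signal into a centred spectrum."""
    return np.fft.fftshift(np.fft.fft(fid))


def degrade_fid(fid, extra_lw_hz, noise_sd, rng):
    """Broaden lines by extra_lw_hz (exponential apodization, assumed)
    and add complex white Gaussian noise with standard deviation noise_sd."""
    t = np.arange(fid.size) / BANDWIDTH_HZ
    broadened = fid * np.exp(-np.pi * extra_lw_hz * t)
    noise = rng.normal(0.0, noise_sd, fid.size) + 1j * rng.normal(0.0, noise_sd, fid.size)
    return broadened + noise


def measure_snr(spectrum, ppm, signal_ppm=(1.9, 2.1), noise_ppm=(8.0, 10.0)):
    """Peak height in the (assumed) tNAA window divided by twice the
    standard deviation of the real spectrum between 8 ppm and 10 ppm."""
    real = spectrum.real
    signal = real[(ppm >= signal_ppm[0]) & (ppm <= signal_ppm[1])].max()
    noise_sd = real[(ppm >= noise_ppm[0]) & (ppm <= noise_ppm[1])].std()
    return signal / (2.0 * noise_sd)


# Toy example: a single 2 Hz wide line at 2.01 ppm standing in for a full spectrum.
rng = np.random.default_rng(0)
t = np.arange(N_POINTS) / BANDWIDTH_HZ
f0_hz = (2.01 - CENTER_PPM) * LARMOR_MHZ
clean_fid = np.exp(2j * np.pi * f0_hz * t) * np.exp(-np.pi * 2.0 * t)

ppm = ppm_axis()
mild = degrade_fid(clean_fid, extra_lw_hz=0.0, noise_sd=0.01, rng=rng)
severe = degrade_fid(clean_fid, extra_lw_hz=5.0, noise_sd=0.1, rng=rng)
snr_mild = measure_snr(to_spectrum(mild), ppm)
snr_severe = measure_snr(to_spectrum(severe), ppm)
```

Applying progressively larger `extra_lw_hz` and `noise_sd` values to the same ground truth signal gives a series of inputs whose measured SNR can be compared against the threshold of 10 used to separate high SNR from low SNR.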
After assigning 70,000 spectra to the training data and 5000 spectra to the test data, the CNN was trained on training subsets of the following sizes: 300, 500, 1000, 2500, 5000, 30,000, and 70,000 spectra. During the training phase, 20% of each training subset was held out as a validation dataset. Training was performed in the complex domain so that CNN-predicted spectra can be used by spectral fitting techniques such as LCModel and jMRUI for quantifying metabolite concentrations. Thus, the input and output of the CNN each had two channels, for the real and imaginary parts of the MRS data, respectively. The CNN was trained using the Adam optimizer with a fixed learning rate of 10⁻⁴, a batch size of 32, and 30 epochs. Mean squared error (MSE) was used as the loss function. Early stopping was applied to terminate training when the model performance on the validation dataset ceased to improve for three epochs. The CNN was implemented and trained using the Keras library with a TensorFlow backend on a supercomputer cluster (NVIDIA Tesla V100 GPU, 32 GB).
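The data handling in this paragraph (two-channel representation of complex spectra, training subsets with a 20% validation split, and a three-epoch early-stopping rule) might be sketched as follows. This is a hedged illustration rather than the authors' implementation: the text does not say how the subsets were drawn, so random sampling from the 70,000-spectrum pool is an assumption, and the function names `to_two_channels`, `to_complex`, `split_subset`, and `should_stop` are hypothetical. The stopping rule is written in plain Python to show the logic and is not a call into the Keras API.

```python
import numpy as np

# Training subset sizes listed in the text.
TRAIN_SIZES = [300, 500, 1000, 2500, 5000, 30000, 70000]


def to_two_channels(spectra):
    """Complex array (n, points) -> float array (n, points, 2): real, imaginary."""
    return np.stack([spectra.real, spectra.imag], axis=-1).astype(np.float32)


def to_complex(channels):
    """Inverse of to_two_channels, e.g. for passing predictions to a fitting tool."""
    return channels[..., 0] + 1j * channels[..., 1]


def split_subset(n_available, subset_size, val_fraction=0.2, seed=0):
    """Draw subset_size indices at random (assumption) and hold out
    val_fraction of them for validation. Returns (train_idx, val_idx)."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(n_available)[:subset_size]
    n_val = int(round(val_fraction * subset_size))
    return idx[n_val:], idx[:n_val]


def should_stop(val_losses, patience=3):
    """True when the last `patience` epochs brought no improvement over
    the best validation loss seen before them."""
    if len(val_losses) <= patience:
        return False
    best_before = min(val_losses[:-patience])
    return min(val_losses[-patience:]) >= best_before


# Example: index sets for every training size, drawn from a pool of 70,000 spectra.
splits = {size: split_subset(70000, size) for size in TRAIN_SIZES}
train_idx, val_idx = splits[300]

# Example: round trip between complex spectra and the two-channel layout.
demo = np.array([[1 + 2j, 3 - 1j], [0.5j, -2.0 + 0j]])
demo_channels = to_two_channels(demo)
```

Keeping the network output in the same two-channel layout means `to_complex` can turn predictions back into complex spectra, which is the property the text relies on for downstream fitting.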

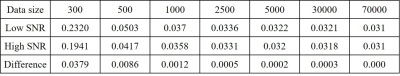

CNN evaluation: The CNN was trained ten times on each of the seven training sizes, and the network whose MSE was closest to the mean MSE of the ten runs was taken as the representative network for that training size. The normalized mean squared error (NMSE) between ground truth spectra and CNN-predicted spectra was used as the metric to assess CNN performance. The NMSE for high SNR and low SNR was calculated using these representative networks.
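The evaluation steps above can be sketched in a few lines of NumPy. The text does not give the NMSE formula, so the common definition used here (squared error summed over spectral points, divided by the summed squared magnitude of the ground truth) is an assumption, as are the function names and the choice to count an SNR of exactly 10 as high.

```python
import numpy as np


def nmse(truth, pred):
    """Per-spectrum NMSE (assumed definition):
    sum |pred - truth|^2 / sum |truth|^2 along the last axis."""
    err = np.sum(np.abs(pred - truth) ** 2, axis=-1)
    ref = np.sum(np.abs(truth) ** 2, axis=-1)
    return err / ref


def representative_run(mse_per_run):
    """Index of the run whose MSE is closest to the mean over all runs."""
    mse = np.asarray(mse_per_run, dtype=float)
    return int(np.argmin(np.abs(mse - mse.mean())))


def nmse_by_snr(truth, pred, snr_values, threshold=10.0):
    """Mean NMSE for the high-SNR and low-SNR groups of test spectra."""
    values = nmse(truth, pred)
    high = np.asarray(snr_values) >= threshold  # assumption: threshold counts as high
    return {"high": float(values[high].mean()), "low": float(values[~high].mean())}


# Toy example with four flat "spectra" and scaled copies as predictions.
truth = np.ones((4, 8), dtype=complex)
pred = truth * np.array([1.0, 0.9, 1.0, 0.5])[:, None]
groups = nmse_by_snr(truth, pred, snr_values=[20.0, 12.0, 5.0, 3.0])

# Toy example: pick the representative network among four runs.
chosen = representative_run([1.0, 2.0, 3.0, 10.0])
```

Selecting the run nearest the mean, rather than the best run, avoids reporting an unusually favourable initialization as typical for a given training size.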

Results

Figure 2 depicts representative simulated PRESS 1H MRS spectra at different SNRs together with the corresponding CNN-predicted spectra for different training data sizes. Visually, the CNN-predicted spectra improved the most when the training size increased from 300 cases to 500 cases. As summarized in Table 1, the mean NMSE decreased notably from a training size of 300 cases to 500 cases and showed minimal improvement at training sizes above 2500 cases. Likewise, the largest NMSE difference between high SNR and low SNR was observed at 300 cases (0.0379), and this difference became negligible after 2500 cases (≤ 0.0005).

Discussion

The present study demonstrated that the benefit of larger training data sizes can be marginal after reaching a threshold number of datasets when training a CNN to restore degraded in vivo 1H MRS spectra. This threshold number is expected to depend on the complexity of the dataset. Accordingly, a lower threshold is predicted for less crowded data, for example long-TE spectra, than for more complex data such as the short-TE spectra used in this work. A future study is warranted to evaluate the impact of different training data sizes on the accuracy and precision of metabolite quantification using CNN-predicted spectra.

Acknowledgements

No acknowledgement found.

References

1. Lee HH, Kim H. Intact metabolite spectrum mining by deep learning in proton magnetic resonance spectroscopy of the brain. Magn Reson Med. 2019;82:33–48.

2. Kyathanahally SP, Doring A, Kreis R. Deep learning approaches for detection and removal of ghosting artifacts in MR spectroscopy. Magn Reson Med. 2018;80:851–863.

3. Tapper S, Mikkelsen M, Dewey BE, et al. Frequency and phase correction of J-difference edited MR spectra using deep learning. Magn Reson Med. 2020;00:1–11.

4. Das D, Coello E, Schulte RF, Menze BH. Quantification of metabolites in magnetic resonance spectroscopic imaging using machine learning. In: Proceedings of International Conference on Medical Image Computing and Computer-Assisted Intervention, Quebec, Canada; 2017:462–470.

5. Birch R, Peet AC, Dehghani H, Wilson M. Influence of macromolecule baseline on 1H MR spectroscopic imaging reproducibility. Magn Reson Med. 2017;77:34–43.

6. Snoek J, Larochelle H, Adams RP. Practical Bayesian optimization of machine learning algorithms. In: Proceedings of International Conference on Neural Information Processing Systems, Lake Tahoe, Nevada; 2012:2951–2959.

Figures